ITP method: Difference between revisions

Citation bot (talk | contribs) Add: s2cid, isbn, author pars. 1-1. Removed parameters. Some additions/deletions were actually parameter name changes. Upgrade ISBN10 to ISBN13. | You can use this bot yourself. Report bugs here. | Suggested by Ost316 | Category:AfC pending submissions by age/4 days ago | via #UCB_Category 30/63 |

No edit summary |

||

| (40 intermediate revisions by 25 users not shown) | |||

| Line 1: | Line 1: | ||

{{Short description|Root-finding algorithm}} |

|||

| ⚫ | In [[numerical analysis]], the ITP |

||

{{Multiple issues| |

|||

| ⚫ | The ITP |

||

{{Primary sources|date=November 2024}} |

|||

{{more citations needed|date=January 2021}} |

|||

{{Notability|date=May 2024}} |

|||

}} |

|||

| ⚫ | In [[numerical analysis]], the '''ITP method''', short for ''Interpolate Truncate and Project'', is the first [[Root-finding algorithms|root-finding algorithm]] that achieves the [[superlinear convergence]] of the [[secant method]]<ref>{{Cite book|last1=Argyros|first1=I. K.|last2=Hernández-Verón|first2=M. A.|last3=Rubio|first3=M. J.|chapter=On the Convergence of Secant-Like Methods|date=2019|title=Current Trends in Mathematical Analysis and Its Interdisciplinary Applications|chapter-url=http://springer.nl.go.kr/chapter/10.1007/978-3-030-15242-0_5|language=en|pages=141–183|doi=10.1007/978-3-030-15242-0_5|isbn=978-3-030-15241-3|s2cid=202156085}}{{Dead link|date=August 2024 |bot=InternetArchiveBot |fix-attempted=yes }}</ref> while retaining the optimal<ref>{{Cite journal|last=Sikorski|first=K.|date=1982-02-01|title=Bisection is optimal|url=https://doi.org/10.1007/BF01459080|journal=Numerische Mathematik|language=en|volume=40|issue=1|pages=111–117|doi=10.1007/BF01459080|s2cid=119952605|issn=0945-3245}}</ref> [[Best, worst and average case|worst-case]] performance of the [[bisection method]].<ref name=":0">{{Cite journal|last1=Oliveira|first1=I. F. D.|last2=Takahashi|first2=R. H. C.|date=2020-12-06|title=An Enhancement of the Bisection Method Average Performance Preserving Minmax Optimality|url=https://doi.org/10.1145/3423597|journal=ACM Transactions on Mathematical Software|volume=47|issue=1|pages=5:1–5:24|doi=10.1145/3423597|s2cid=230586635 |issn=0098-3500}}</ref> It is also the first method with guaranteed average performance strictly better than the bisection method under any continuous distribution.<ref name=":0" /> In practice it performs better than traditional interpolation and hybrid based strategies ([[Brent's method|Brent's Method]], [[Ridders' method|Ridders]], [[Regula falsi|Illinois]]), since it not only converges super-linearly over well behaved functions but also guarantees fast performance under ill-behaved functions where interpolations fail.<ref name=":0" /> |

||

| ⚫ | The ITP method follows the same structure of standard bracketing strategies that keeps track of upper and lower bounds for the location of the root; but it also keeps track of the region where worst-case performance is kept upper-bounded. As a bracketing strategy, in each iteration the ITP queries the value of the function on one point and discards the part of the interval between two points where the function value shares the same sign. The queried point is calculated with three steps: it interpolates finding the [[regula falsi]] estimate, then it perturbes/truncates the estimate (similar to {{section link|Regula falsi|Improvements in ''regula falsi''}}) and then projects the perturbed estimate onto an interval in the neighbourhood of the bisection midpoint. The neighbourhood around the bisection point is calculated in each iteration in order to guarantee minmax optimality (Theorem 2.1 of <ref name=":0" />). The method depends on three hyper-parameters <math>\kappa_1\in (0,\infty), \kappa_2 \in \left[1,1+\phi\right) </math> and <math>n_0\in[0,\infty) </math> where <math>\phi </math> is the golden ratio <math>\tfrac{1}{2}(1+\sqrt{5}) </math>: the first two control the size of the truncation and the third is a slack variable that controls the size of the interval for the projection step.{{efn|1=For a more in-depth discussion of the hyper-parameters, see the documentation for [https://docs.rs/kurbo/0.8.1/kurbo/common/fn.solve_itp.html ITP in the kurbo library].}} |

||

| ⚫ | |||

| ⚫ | |||

| ⚫ | Given a continuous function <math>f</math> defined from <math>[a,b]</math> to <math>\mathbb{R}</math> such that <math>f(a)f(b)\leq 0</math>, where at the cost of one query one can access the values of <math>f(x)</math> on any given <math>x</math>. And, given a pre-specified target precision <math>\epsilon>0</math>, a root-finding algorithm is |

||

| ⚫ | Given a continuous function <math>f</math> defined from <math>[a,b]</math> to <math>\mathbb{R}</math> such that <math>f(a)f(b)\leq 0</math>, where at the cost of one query one can access the values of <math>f(x)</math> on any given <math>x</math>. And, given a pre-specified target precision <math>\epsilon>0</math>, a root-finding algorithm is designed to solve the following problem with the least amount of queries as possible:<blockquote> |

||

'''Problem Definition:''' Find <math>\hat{x}</math> such that <math>|\hat{x}-x^*|\leq \epsilon</math>, where <math>x^*</math> satisfies <math>f(x^*) = 0</math>.</blockquote> |

'''Problem Definition:''' Find <math>\hat{x}</math> such that <math>|\hat{x}-x^*|\leq \epsilon</math>, where <math>x^*</math> satisfies <math>f(x^*) = 0</math>.</blockquote> |

||

This problem is very common in [[numerical analysis]], [[computer science]] and [[engineering]]; and, root-finding algorithms are the standard |

This problem is very common in [[numerical analysis]], [[computer science]] and [[engineering]]; and, root-finding algorithms are the standard approach to solve it. Often, the root-finding procedure is called by more complex parent algorithms within a larger context, and, for this reason solving root problems efficiently is of extreme importance since an inefficient approach might come at a high computational cost when the larger context is taken into account. This is what the ITP method attempts to do by simultaneously exploiting interpolation guarantees as well as minmax optimal guarantees of the bisection method that terminates in at most <math>n_{1/2}\equiv\lceil\log_2((b_0-a_0)/2\epsilon)\rceil </math> iterations when initiated on an interval <math>[a_0,b_0] </math>. |

||

== The |

== The method == |

||

Given <math>\kappa_1\in (0,\infty), \kappa_2 \in \left[1,1+\phi\right) </math>, <math>n_{1/2} \equiv \lceil(b_0-a_0)/2\epsilon\rceil </math> and <math>n_0\in[0,\infty) </math> where <math>\phi </math> is the golden |

Given <math>\kappa_1\in (0,\infty), \kappa_2 \in \left[1,1+\phi\right) </math>, <math>n_{1/2} \equiv \lceil\log_2((b_0-a_0)/2\epsilon)\rceil </math> and <math>n_0\in[0,\infty) </math> where <math>\phi </math> is the golden ratio <math>\tfrac{1}{2}(1+\sqrt{5}) </math>, in each iteration <math>j = 0,1,2\dots </math> the ITP method calculates the point <math>x_{\text{ITP}} </math> following three steps: |

||

[[File:ITPstep1.png|thumb|Step 1 of the ITP method.]] |

[[File:ITPstep1.png|thumb|Step 1 of the ITP method.]] |

||

[[File:ITPstep2.png|thumb|Step 2 of the ITP method.]] |

[[File:ITPstep2.png|thumb|Step 2 of the ITP method.]] |

||

[[File:ITPstep3.png|thumb|Step 3 of the ITP method.]] |

[[File:ITPstep3.png|thumb|Step 3 of the ITP method.]] |

||

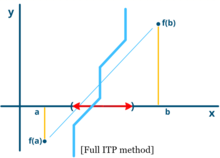

[[File:ITPall steps.png|thumb|All three steps combined form the ITP method. The thick blue line represents the "projected-truncated-interpolation" of the method.]] |

[[File:ITPall steps.png|thumb|All three steps combined form the ITP method. The thick blue line represents the "projected-truncated-interpolation" of the method.]] |

||

# |

# [Interpolation Step] Calculate the bisection and the regula falsi points: <math>x_{1/2} \equiv \frac{a+b}{2} </math> and <math>x_f \equiv \frac{bf(a)-af(b)}{f(a)-f(b)} </math> ; |

||

# |

# [Truncation Step] Perturb the estimator towards the center: <math>x_t \equiv x_f+\sigma \delta </math> where <math>\sigma \equiv \text{sign}(x_{1/2}-x_f) </math> and <math>\delta \equiv \min\{\kappa_1|b-a|^{\kappa_2},|x_{1/2}-x_f|\} </math> ; |

||

# [Projection Step] Project the estimator to minmax interval: <math>x_{\text{ITP}} \equiv x_{1/2} -\sigma \rho_k </math> where <math>\rho_k \equiv \min\left\{\epsilon 2^{n_{1/2}+n_0-j} - \frac{b-a}{2},|x_t-x_{1/2}|\right\} </math>. |

# [Projection Step] Project the estimator to minmax interval: <math>x_{\text{ITP}} \equiv x_{1/2} -\sigma \rho_k </math> where <math>\rho_k \equiv \min\left\{\epsilon 2^{n_{1/2}+n_0-j} - \frac{b-a}{2},|x_t-x_{1/2}|\right\} </math>. |

||

The value of the function <math>f(x_{\text{ITP}}) </math> on this point is queried, and the interval is then reduced to bracket the root by keeping the sub-interval with function values of opposite sign on each end. |

The value of the function <math>f(x_{\text{ITP}}) </math> on this point is queried, and the interval is then reduced to bracket the root by keeping the sub-interval with function values of opposite sign on each end. |

||

=== The algorithm === |

|||

The following algorithm (written in [[pseudocode]]) assumes the initial values of |

The following algorithm (written in [[pseudocode]]) assumes the initial values of <math>y_a </math> and <math>y_b </math> are given and satisfy <math>y_a<0 <y_b </math> where <math>y_a\equiv f(a) </math> and <math>y_b\equiv f(b) </math>; and, it returns an estimate <math>\hat{x} </math> that satisfies <math>|\hat{x} - x^*|\leq \epsilon </math> in at most <math>n_{1/2}+n_0 </math> function evaluations. |

||

'''Input: <math>a, b, \epsilon, \kappa_1, \kappa_2, n_0, f </math>''' |

'''Input: <math>a, b, \epsilon, \kappa_1, \kappa_2, n_0, f </math>''' |

||

'''Preprocessing:''' '''<math>n_{1/2} = \lceil \log_2\tfrac{b-a}{2\epsilon}\rceil </math>''', '''<math>n_{\max} = n_{1/2}+n_0 </math>''', and '''<math>j = 0 </math>'''; |

'''Preprocessing:''' '''<math>n_{1/2} = \lceil \log_2\tfrac{b-a}{2\epsilon}\rceil </math>''', '''<math>n_{\max} = n_{1/2}+n_0 </math>''', and '''<math>j = 0 </math>'''; |

||

'''While ( <math>b-a>2\epsilon </math>''' ''')''' |

'''While ( <math>b-a>2\epsilon </math>''' ''')''' |

||

'''Calculating Parameters:''' |

'''Calculating Parameters:''' |

||

'''<math>x_{1/2} = \tfrac{a+b}{2} </math>''', '''<math>r = \epsilon 2^{n_{\max} - j}-(b-a)/2 </math>''', '''<math>\delta = \kappa_1(b-a)^{\kappa_2} </math>'''; |

|||

'''Interpolation:''' |

'''Interpolation:''' |

||

'''<math>x_f = \tfrac{y_ba-y_a b}{y_b-y_a} </math>'''; |

|||

'''Truncation:''' |

'''Truncation:''' |

||

If '''<math>\delta\leq|x_{1/2}-x_f| </math>''' then '''<math>x_t = x_f+\sigma \delta </math>''', |

'''<math>\sigma = \text{sign}(x_{1/2}-x_f) </math>'''; |

||

If '''<math>\delta\leq|x_{1/2}-x_f| </math>''' then '''<math>x_t = x_f+\sigma \delta </math>''', |

|||

Else '''<math>x_t = x_{1/2} </math>'''; |

|||

'''Projection:''' |

'''Projection:''' |

||

If '''<math>|x_t-x_{1/2}|\leq r </math>''' then '''<math>x_{\text{ITP}} = x_t </math>''', |

|||

Else '''<math>x_{\text{ITP}} = x_{1/2}-\sigma r </math>'''; |

|||

'''Updating Interval:''' |

'''Updating Interval:''' |

||

'''<math>y_{\text{ITP}} = f(x_{\text{ITP}}) </math>'''; |

|||

If '''<math>y_{\text{ITP}}>0 </math>''' then '''<math>b = x_{ITP} </math>''' and '''<math>y_b = y_{\text{ITP}} </math>''', |

|||

Elseif '''<math>y_{\text{ITP}}<0 </math>''' then '''<math>a = x_{\text{ITP}} </math>''' and '''<math>y_a = y_{\text{ITP}} </math>''', |

|||

Else '''<math>a = x_{\text{ITP}} </math>''' and '''<math>b = x_{\text{ITP}} </math>'''; |

|||

'''<math>j = j+1 </math>'''; |

|||

'''Output: <math>\hat{x} = \tfrac{a+b}{2} </math>''' |

'''Output: <math>\hat{x} = \tfrac{a+b}{2} </math>''' |

||

== Example: Finding the root of a polynomial == |

== Example: Finding the root of a polynomial == |

||

Suppose that the ITP method is used to find a root of the polynomial <math> f(x) = x^3 - x - 2 \,.</math> Using <math> \epsilon = 0.0005, \kappa_1 = 0.1, \kappa_2 = 2</math> and <math> n_0 = |

Suppose that the ITP method is used to find a root of the polynomial <math> f(x) = x^3 - x - 2 \,.</math> Using <math> \epsilon = 0.0005, \kappa_1 = 0.1, \kappa_2 = 2</math> and <math> n_0 = 1</math> we find that: |

||

{| class="wikitable" |

{| class="wikitable" |

||

!Iteration |

!Iteration |

||

| Line 89: | Line 97: | ||

|1.52137899116052 |

|1.52137899116052 |

||

|1.52138301273268 |

|1.52138301273268 |

||

| colspan="2" | |

| colspan="2" |← Stopping Criteria Satisfied |

||

|} |

|} |

||

This example can be compared to |

This example can be compared to {{section link|Bisection method|Example: Finding the root of a polynomial}}. The ITP method required less than half the number of iterations than the bisection to obtain a more precise estimate of the root with no cost on the minmax guarantees. Other methods might also attain a similar speed of convergence (such as Ridders, Brent etc.) but without the minmax guarantees given by the ITP method. |

||

== Analysis == |

== Analysis == |

||

The main advantage of the ITP method is that it is guaranteed to require no more iterations than the bisection method when <math> n_0 = 0</math>. And so |

The main advantage of the ITP method is that it is guaranteed to require no more iterations than the bisection method when <math> n_0 = 0</math>. And so its average performance is guaranteed to be better than the bisection method even when interpolation fails. Furthermore, if interpolations do not fail (smooth functions), then it is guaranteed to enjoy the high order of convergence as interpolation based methods. |

||

=== Worst case performance === |

|||

Because the ITP method projects the estimator onto the minmax interval with a <math> n_0</math> slack, it will require at most <math> n_{1/2}+n_0</math> iterations (Theorem 2.1 of <ref name=":0" />). This is minmax optimal like the bisection method when <math> n_0</math> is chosen to be <math> n_0 = 0</math>. |

|||

=== Average performance === |

|||

Because it does not take more than <math> n_{1/2}+n_0</math> iterations, the average number of iterations will always be less than that of the bisection method for any distribution considered when <math> n_0 = 0</math> (Corollary 2.2 of <ref name=":0" />). |

|||

=== Asymptotic performance === |

|||

If the function <math> f(x)</math> is twice differentiable and the root <math> x^*</math> is simple, then the intervals produced by the ITP method converges to 0 with an order of convergence of <math> \sqrt{\kappa_2}</math> if <math> n_0 \neq 0</math> or if <math> n_0 = 0</math> and <math> (b-a)/\epsilon</math> is not a power of 2 with the term <math> \tfrac{\epsilon 2^{n_{1/2}}}{b-a}</math> not too close to zero (Theorem 2.3 of <ref name=":0" />). |

|||

== |

== Software == |

||

* The itp<ref>{{Citation | last= Northrop | first= P. J. | title= itp: The Interpolate, Truncate, Project (ITP) Root-Finding Algorithm | year= 2023 | url= https://CRAN.R-project.org/package=itp}}</ref> contributed package in [[R (programming language)|R]]. |

|||

| ⚫ | |||

| ⚫ | |||

== See also == |

|||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

== Notes == |

|||

{{notelist}} |

|||

== References == |

== References == |

||

<!--- See http://en.wikipedia.org/wiki/Wikipedia:Footnotes on how to create references using |

<!--- See http://en.wikipedia.org/wiki/Wikipedia:Footnotes on how to create references using<ref></ref> tags, these references will then appear here automatically --> |

||

{{Reflist}} |

{{Reflist}} |

||

== External links == |

== External links == |

||

* https://link.growkudos.com/1iwxps83474 |

* [https://link.growkudos.com/1iwxps83474 An Improved Bisection Method], by [https://info.growkudos.com/ Kudos] |

||

{{root-finding algorithms}} |

|||

[[Category:Root-finding algorithms]] |

|||

{{AfC submission|||ts=20201218153948|u=Trojan.chess|ns=2}} |

|||

Latest revision as of 17:31, 14 November 2024

This article has multiple issues. Please help improve it or discuss these issues on the talk page. (Learn how and when to remove these messages)

|

In numerical analysis, the ITP method, short for Interpolate Truncate and Project, is the first root-finding algorithm that achieves the superlinear convergence of the secant method[1] while retaining the optimal[2] worst-case performance of the bisection method.[3] It is also the first method with guaranteed average performance strictly better than the bisection method under any continuous distribution.[3] In practice it performs better than traditional interpolation and hybrid based strategies (Brent's Method, Ridders, Illinois), since it not only converges super-linearly over well behaved functions but also guarantees fast performance under ill-behaved functions where interpolations fail.[3]

The ITP method follows the same structure of standard bracketing strategies that keeps track of upper and lower bounds for the location of the root; but it also keeps track of the region where worst-case performance is kept upper-bounded. As a bracketing strategy, in each iteration the ITP queries the value of the function on one point and discards the part of the interval between two points where the function value shares the same sign. The queried point is calculated with three steps: it interpolates finding the regula falsi estimate, then it perturbes/truncates the estimate (similar to Regula falsi § Improvements in regula falsi) and then projects the perturbed estimate onto an interval in the neighbourhood of the bisection midpoint. The neighbourhood around the bisection point is calculated in each iteration in order to guarantee minmax optimality (Theorem 2.1 of [3]). The method depends on three hyper-parameters and where is the golden ratio : the first two control the size of the truncation and the third is a slack variable that controls the size of the interval for the projection step.[a]

Root finding problem

[edit]Given a continuous function defined from to such that , where at the cost of one query one can access the values of on any given . And, given a pre-specified target precision , a root-finding algorithm is designed to solve the following problem with the least amount of queries as possible:

Problem Definition: Find such that , where satisfies .

This problem is very common in numerical analysis, computer science and engineering; and, root-finding algorithms are the standard approach to solve it. Often, the root-finding procedure is called by more complex parent algorithms within a larger context, and, for this reason solving root problems efficiently is of extreme importance since an inefficient approach might come at a high computational cost when the larger context is taken into account. This is what the ITP method attempts to do by simultaneously exploiting interpolation guarantees as well as minmax optimal guarantees of the bisection method that terminates in at most iterations when initiated on an interval .

The method

[edit]Given , and where is the golden ratio , in each iteration the ITP method calculates the point following three steps:

- [Interpolation Step] Calculate the bisection and the regula falsi points: and ;

- [Truncation Step] Perturb the estimator towards the center: where and ;

- [Projection Step] Project the estimator to minmax interval: where .

The value of the function on this point is queried, and the interval is then reduced to bracket the root by keeping the sub-interval with function values of opposite sign on each end.

The algorithm

[edit]The following algorithm (written in pseudocode) assumes the initial values of and are given and satisfy where and ; and, it returns an estimate that satisfies in at most function evaluations.

Input: Preprocessing: , , and ; While ( ) Calculating Parameters: , , ; Interpolation: ; Truncation: ; If then , Else ; Projection: If then , Else ; Updating Interval: ; If then and , Elseif then and , Else and ; ; Output:

Example: Finding the root of a polynomial

[edit]Suppose that the ITP method is used to find a root of the polynomial Using and we find that:

| Iteration | ||||

|---|---|---|---|---|

| 1 | 1 | 2 | 1.43333333333333 | -0.488629629629630 |

| 2 | 1.43333333333333 | 2 | 1.52713145056966 | 0.0343383329048983 |

| 3 | 1.43333333333333 | 1.52713145056966 | 1.52009281150978 | -0.00764147709265051 |

| 4 | 1.52009281150978 | 1.52713145056966 | 1.52137899116052 | -4.25363464540141e-06 |

| 5 | 1.52137899116052 | 1.52713145056966 | 1.52138301273268 | 1.96497878177659e-05 |

| 6 | 1.52137899116052 | 1.52138301273268 | ← Stopping Criteria Satisfied | |

This example can be compared to Bisection method § Example: Finding the root of a polynomial. The ITP method required less than half the number of iterations than the bisection to obtain a more precise estimate of the root with no cost on the minmax guarantees. Other methods might also attain a similar speed of convergence (such as Ridders, Brent etc.) but without the minmax guarantees given by the ITP method.

Analysis

[edit]The main advantage of the ITP method is that it is guaranteed to require no more iterations than the bisection method when . And so its average performance is guaranteed to be better than the bisection method even when interpolation fails. Furthermore, if interpolations do not fail (smooth functions), then it is guaranteed to enjoy the high order of convergence as interpolation based methods.

Worst case performance

[edit]Because the ITP method projects the estimator onto the minmax interval with a slack, it will require at most iterations (Theorem 2.1 of [3]). This is minmax optimal like the bisection method when is chosen to be .

Average performance

[edit]Because it does not take more than iterations, the average number of iterations will always be less than that of the bisection method for any distribution considered when (Corollary 2.2 of [3]).

Asymptotic performance

[edit]If the function is twice differentiable and the root is simple, then the intervals produced by the ITP method converges to 0 with an order of convergence of if or if and is not a power of 2 with the term not too close to zero (Theorem 2.3 of [3]).

Software

[edit]See also

[edit]Notes

[edit]- ^ For a more in-depth discussion of the hyper-parameters, see the documentation for ITP in the kurbo library.

References

[edit]- ^ Argyros, I. K.; Hernández-Verón, M. A.; Rubio, M. J. (2019). "On the Convergence of Secant-Like Methods". Current Trends in Mathematical Analysis and Its Interdisciplinary Applications. pp. 141–183. doi:10.1007/978-3-030-15242-0_5. ISBN 978-3-030-15241-3. S2CID 202156085.[permanent dead link]

- ^ Sikorski, K. (1982-02-01). "Bisection is optimal". Numerische Mathematik. 40 (1): 111–117. doi:10.1007/BF01459080. ISSN 0945-3245. S2CID 119952605.

- ^ a b c d e f g Oliveira, I. F. D.; Takahashi, R. H. C. (2020-12-06). "An Enhancement of the Bisection Method Average Performance Preserving Minmax Optimality". ACM Transactions on Mathematical Software. 47 (1): 5:1–5:24. doi:10.1145/3423597. ISSN 0098-3500. S2CID 230586635.

- ^ Northrop, P. J. (2023), itp: The Interpolate, Truncate, Project (ITP) Root-Finding Algorithm

![{\displaystyle [a,b]}](https://wikimedia.org/enwiki/api/rest_v1/media/math/render/svg/9c4b788fc5c637e26ee98b45f89a5c08c85f7935)

![{\displaystyle [a_{0},b_{0}]}](https://wikimedia.org/enwiki/api/rest_v1/media/math/render/svg/2838ee9034d5bf96adfb08ff6cc185c1aee56c18)