Sampling bias: Difference between revisions

Striver3000 (no edit summary; tag: Reverted)

(22 intermediate revisions by 18 users not shown)

{{Short description|Bias in the sampling of a population}}

{{Redirect|Spotlight fallacy|the psychological effect|Spotlight effect}}


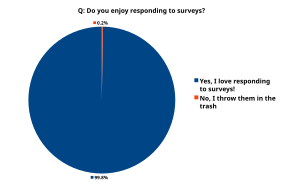

[[File:Survey-survey.svg|alt=A pie chart showing the results of a hypothetical survey, in which the question asked was "Do you enjoy responding to surveys?" The chart shows that 99.8 percent of participants said, "Yes, I love responding to surveys!" and that 0.2 percent said "No, I throw them in the trash"|thumb|An example of a biased sample in a hypothetical population survey, which asks if participants like responding to surveys, thus selecting for the individuals who were most likely to respond in the first place.|300x300px]]

In [[statistics]], '''sampling bias''' is a [[bias (statistics)|bias]] in which a sample is collected in such a way that some members of the intended [[statistical population|population]] have a lower or higher [[sampling probability]] than others. It results in a '''biased sample'''<ref>{{cite web | url = http://www.medilexicon.com/medicaldictionary.php?t=10087 | work = Medical Dictionary | title = Sampling Bias | access-date = 23 September 2009 | archive-url = https://web.archive.org/web/20160310100307/http://www.medilexicon.com/medicaldictionary.php?t=10087 |archive-date = 10 March 2016 }}</ref> of a population (or non-human factors) in which not all individuals, or instances, were equally likely to have been selected.<ref>{{cite web | url = http://medical-dictionary.thefreedictionary.com/Sample+bias | work = TheFreeDictionary | title = Biased sample | access-date = 23 September 2009 | quote = Mosby's Medical Dictionary, 8th edition }}</ref> If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of [[sampling (statistics)|sampling]].

==Distinction from selection bias==

Sampling bias is usually classified as a subtype of [[selection bias]],<ref>{{cite web | url = http://medical.webends.com/kw/Selection%20Bias | work = Dictionary of Cancer Terms | title = Selection Bias | archive-url = https://web.archive.org/web/20090609002707/http://medical.webends.com/kw/Selection%20Bias | archive-date = 9 June 2009 | access-date = 23 September 2009 }}</ref> sometimes specifically termed '''sample selection bias''',<ref name="ArdsChung1998">{{cite journal | vauthors = Ards S, Chung C, Myers SL | title = The effects of sample selection bias on racial differences in child abuse reporting | journal = Child Abuse & Neglect | volume = 22 | issue = 2 | pages = 103–15 | date = February 1998 | pmid = 9504213 | doi = 10.1016/S0145-2134(97)00131-2 | doi-access = free }}</ref><ref name="CortesMohri2008">{{cite book | vauthors = Cortes C, Mohri M, Riley M, Rostamizadeh A |chapter=Sample Selection Bias Correction Theory|title=Algorithmic Learning Theory|volume=5254|year=2008|pages=38–53|chapter-url=http://www.cs.nyu.edu/~mohri/postscript/bias.pdf|doi=10.1007/978-3-540-87987-9_8|arxiv=0805.2775|series=Lecture Notes in Computer Science|isbn=978-3-540-87986-2|citeseerx=10.1.1.144.4478|s2cid=842488}}</ref><ref name="CortesMohri2014">{{cite journal | vauthors = Cortes C, Mohri M |title=Domain adaptation and sample bias correction theory and algorithm for regression|journal=Theoretical Computer Science|volume=519|year=2014|pages=103–126 |url= http://www.cs.nyu.edu/~mohri/pub/nsmooth.pdf |doi=10.1016/j.tcs.2013.09.027|citeseerx=10.1.1.367.6899}}</ref> but some classify it as a separate type of bias.<ref name="Fadem2009">{{cite book| vauthors = Fadem B |title=Behavioral Science|url=https://books.google.com/books?id=f0IDHvLiWqUC|year=2009|publisher=Lippincott Williams & Wilkins|isbn=978-0-7817-8257-9|page=262}}</ref>

One distinction, though not universally accepted, is that sampling bias undermines the [[external validity]] of a test (the ability of its results to be generalized to the entire population), while [[selection bias]] mainly affects the [[internal validity]] of differences or similarities found in the sample at hand. In this sense, errors occurring in the process of gathering the sample or cohort cause sampling bias, while errors in any later process cause selection bias.

However, selection bias and sampling bias are often used synonymously.<ref name="Wallace2007">{{cite book| vauthors = Wallace R |title=Maxcy-Rosenau-Last Public Health and Preventive Medicine|url=https://books.google.com/books?id=EBq63uyt87QC |edition=15th|year=2007|publisher=McGraw Hill Professional|isbn=978-0-07-159318-2|page=21}}</ref>

* '''Exclusion''' bias results from exclusion of particular groups from the sample, e.g. exclusion of subjects who have recently [[human migration|migrated]] into the study area (this may occur when newcomers are not available in a register used to identify the source population). Excluding subjects who move out of the study area during follow-up is closer to dropout or nonresponse, a [[selection bias]] in that it mainly affects the internal validity of the study.

* '''[[Healthy user bias]]''', when the study population is likely healthier than the general population. For example, someone in poor health is unlikely to have a job as a manual laborer, so if a study is conducted on manual laborers, the health of the general population will likely be overestimated.

* '''[[Berkson's fallacy]]''', when the study population is selected from a hospital and so is less healthy than the general population. This can result in a spurious negative correlation between diseases: a hospital patient without diabetes is ''more'' likely to have another given disease such as [[cholecystitis]], since they must have had some reason to enter the hospital in the first place.

* '''[[Overmatching]]''', matching for an apparent [[Confounding|confounder]] that actually is a result of the exposure{{clarify|reason=Exposure to what? Exposure to the study or to the studied variable?|date=August 2014}}. The control group becomes more similar to the cases in regard to exposure than does the general population.

* '''[[Survivorship bias]]''', in which only "surviving" subjects are selected, ignoring those that fell out of view. For example, using the record of current companies as an indicator of business climate or economy ignores the businesses that failed and no longer exist.

* '''[[Malmquist bias]]''', an effect in observational astronomy which leads to the preferential detection of intrinsically bright objects.

{{anchor|Spotlight fallacy}}

* '''Spotlight fallacy''', the uncritical assumption that all members or cases of a certain class or type are like those that receive the most attention or coverage in the media.

===Symptom-based sampling===

[[File:Acid2compliancebyusage.png|thumb|right|250px|Example of a biased sample: as of June 2008, 55% of web browsers ([[Internet Explorer]]) in use did not pass the [[Acid2]] test. Due to the nature of the test, the sample consisted mostly of web developers.<ref>{{cite web |url=https://www.w3schools.com/browsers/browsers_stats.asp |title=Browser Statistics |publisher=Refsnes Data |date=June 2008 |access-date=2008-07-05}}</ref>]]

A classic example of a biased sample and the misleading results it produced occurred in 1936. In the early days of opinion polling, the American ''[[Literary Digest]]'' magazine collected over two million postal surveys and predicted that the Republican candidate in the [[1936 United States presidential election|U.S. presidential election]], [[Alf Landon]], would beat the incumbent president, [[Franklin Roosevelt]], by a large margin. The result was the exact opposite. The Literary Digest survey represented a sample collected from readers of the magazine, supplemented by records of registered automobile owners and telephone users. This sample included an over-representation of wealthy individuals, who, as a group, were more likely to vote for the Republican candidate. In contrast, a poll of only 50 thousand citizens selected by [[George Gallup]]'s organization successfully predicted the result, leading to the popularity of the [[Gallup poll]].

Another classic example occurred in the [[1948 United States presidential election|1948 presidential election]]. On election night, the [[Chicago Tribune]] printed the headline ''[[Dewey Defeats Truman|DEWEY DEFEATS TRUMAN]]'', which turned out to be mistaken. In the morning the grinning [[president-elect of the United States|president-elect]], [[Harry S. Truman]], was photographed holding a newspaper bearing this headline. The Tribune was mistaken because its editor trusted the results of a [[phone survey]]. Survey research was then in its infancy, and few academics realized that a sample of telephone users was not representative of the general population. Telephones were not yet widespread, and those who had them tended to be prosperous and have stable addresses. (In many cities, the [[Bell System]] [[telephone directory]] contained the same names as the [[Social Register]].) In addition, the Gallup poll that the Tribune based its headline on was over two weeks old at the time of printing.<ref>{{cite web | vauthors = Lienhard JH | title = Gallup Poll | url = http://www.uh.edu/engines/epi1199.htm | access-date = 29 September 2007 | work = The Engines of Our Ingenuity }}</ref>

In [[air quality]] data, pollutants (such as [[carbon monoxide]], [[nitrogen monoxide]], [[nitrogen dioxide]], or [[ozone]]) are frequently highly [[correlations|correlated]], as they stem from the same chemical processes. These correlations depend on space (i.e., location) and time (i.e., period). Therefore, a pollutant distribution is not necessarily representative of every location and every period. If a low-cost measurement instrument is calibrated with field data in a multivariate manner, more precisely by collocating it with a reference instrument, the relationships between the different compounds at that site and period are incorporated into the calibration model. Relocating the measurement instrument can therefore produce erroneous results.<ref>{{cite journal | vauthors = Tancev G, Pascale C | title = The Relocation Problem of Field Calibrated Low-Cost Sensor Systems in Air Quality Monitoring: A Sampling Bias | journal = Sensors | volume = 20 | issue = 21 | pages = 6198 | date = October 2020 | pmid = 33143233 | pmc = 7662848 | doi = 10.3390/s20216198 | bibcode = 2020Senso..20.6198T | doi-access = free }}</ref>

A twenty-first century example is the [[COVID-19 pandemic]], where variations in sampling bias in [[COVID-19 testing]] have been shown to account for wide variations in both [[Case fatality rate|case fatality rates]] and the [[age distribution]] of cases across countries.<ref>{{cite report | vauthors = Ward D | title = Sampling Bias: Explaining Wide Variations in COVID-19 Case Fatality Rates. | date = 20 April 2020| work = Preprint | location = Bern, Switzerland | doi = 10.13140/RG.2.2.24953.62564/1 }}</ref><ref>{{cite journal | vauthors = Böttcher L, [[Maria Rita D'Orsogna|D'Orsogna MR]], Chou T | title = Using excess deaths and testing statistics to determine COVID-19 mortalities. | journal = European Journal of Epidemiology | volume = 36 | pages = 545–558 | date = May 2021 | issue = 5 | doi = 10.1007/s10654-021-00748-2 | doi-access = free | pmid = 34002294 | pmc = 8127858 }}</ref>

==Statistical corrections for a biased sample==

If entire segments of the population are excluded from a sample, then no adjustment can produce estimates that are representative of the entire population. But if some groups are underrepresented and the degree of underrepresentation can be quantified, then sample weights can correct the bias. However, the success of the correction depends on the selection model chosen: if certain variables are missing from it, the methods used to correct the bias could be inaccurate.<ref>{{Cite journal| vauthors = Cuddeback G, Wilson E, Orme JG, Combs-Orme T |date=2004|title=Detecting and Statistically Correcting Sample Selection Bias|journal=Journal of Social Service Research|volume=30|issue=3|pages=19–33|doi=10.1300/J079v30n03_02|s2cid=11685550}}</ref>

For example, a hypothetical population might include 10 million men and 10 million women. Suppose that a biased sample of 100 patients included 20 men and 80 women. A researcher could correct for this imbalance by [[weighting|attaching a weight]] of 2.5 for each male and 0.625 for each female (50/20 and 50/80, respectively). This would adjust any estimates to achieve the same expected value as a sample that included exactly 50 men and 50 women, provided that the men and women who took part are representative of men and women in the population, respectively.{{Citation needed|date=April 2022}}
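The weighting arithmetic in this example can be sketched in Python. This is a minimal illustration assuming simple post-stratification on one variable (sex) with known population shares; the helper names `poststratification_weights` and `weighted_mean`, and the 0/1 outcome values, are hypothetical and do not come from the source.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Weight per group so the weighted sample matches known population shares.

    weight(g) = population_share(g) * n / count(g), where n is the sample size.
    """
    n = len(sample_groups)
    counts = Counter(sample_groups)
    return {g: population_shares[g] * n / counts[g] for g in counts}


def weighted_mean(values, groups, weights):
    """Average of `values`, each unit weighted by the weight of its group."""
    total_weight = sum(weights[g] for g in groups)
    return sum(v * weights[g] for v, g in zip(values, groups)) / total_weight


# Hypothetical biased sample from the example: 20 men and 80 women,
# drawn from a population that is 50% men and 50% women.
groups = ["male"] * 20 + ["female"] * 80
shares = {"male": 0.5, "female": 0.5}

weights = poststratification_weights(groups, shares)
print(weights)  # {'male': 2.5, 'female': 0.625}

# Hypothetical outcome: 1 for every man, 0 for every woman.
values = [1] * 20 + [0] * 80
print(sum(values) / len(values))              # unweighted: 0.2
print(weighted_mean(values, groups, weights))  # weighted: 0.5
```

Each weight is the group's population share times the sample size divided by the group's count, so the weighted sample behaves like 50 men and 50 women. Weighting on sex alone cannot remove bias within a sex: if the men who took part differ from men in general, the weighted estimate remains biased.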

== See also ==

* [[Sampling probability]]

* [[Selection bias]]

* [[Common Source Bias|Common source bias]]

* [[Spectrum bias]]

* [[Truncated regression model]]

[[Category:Sampling (statistics)]]

[[Category:Misuse of statistics]]

[[Category:Experimental bias]]

[[Category:Design of experiments]]

Latest revision as of 21:01, 17 November 2024

In statistics, sampling bias is a bias in which a sample is collected in such a way that some members of the intended population have a lower or higher sampling probability than others. It results in a biased sample[1] of a population (or non-human factors) in which not all individuals, or instances, were equally likely to have been selected.[2] If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of sampling.

Medical sources sometimes refer to sampling bias as ascertainment bias.[3][4] Ascertainment bias has essentially the same definition,[5][6] but is still sometimes classified as a separate type of bias.[5]

Distinction from selection bias

Sampling bias is usually classified as a subtype of selection bias,[7] sometimes specifically termed sample selection bias,[8][9][10] but some classify it as a separate type of bias.[11] One distinction, though not universally accepted, is that sampling bias undermines the external validity of a test (the ability of its results to be generalized to the entire population), while selection bias mainly affects the internal validity of differences or similarities found in the sample at hand. In this sense, errors occurring in the process of gathering the sample or cohort cause sampling bias, while errors in any later process cause selection bias.

However, selection bias and sampling bias are often used synonymously.[12]

Types

- Selection from a specific real area. For example, a survey of high school students to measure teenage use of illegal drugs will be a biased sample because it does not include home-schooled students or dropouts. This is an extreme form of biased sampling, because certain members of the population are totally excluded from the sample (that is, they have zero probability of being selected). A sample is also biased if certain members are underrepresented or overrepresented relative to others in the population. For example, a "man on the street" interview, which selects people who walk by a certain location, will overrepresent healthy individuals, who are more likely to be out of the home than individuals with a chronic illness.

- Self-selection bias (see also Non-response bias), which is possible whenever the group of people being studied has any form of control over whether to participate (as current standards of human-subject research ethics require for many real-time and some longitudinal forms of study). Participants' decision to participate may be correlated with traits that affect the study, making the participants a non-representative sample. For example, people who have strong opinions or substantial knowledge may be more willing to spend time answering a survey than those who do not. Another example is online and phone-in polls, which are biased samples because the respondents are self-selected. Individuals who are highly motivated to respond, typically those with strong opinions, are overrepresented, while individuals who are indifferent or apathetic are less likely to respond. This often polarizes the responses, giving extreme perspectives disproportionate weight in the summary. As a result, these types of polls are regarded as unscientific.

- Exclusion bias results from exclusion of particular groups from the sample, e.g. exclusion of subjects who have recently migrated into the study area (this may occur when newcomers are not available in a register used to identify the source population). Excluding subjects who move out of the study area during follow-up is closer to dropout or nonresponse, a selection bias in that it mainly affects the internal validity of the study.

- Healthy user bias, when the study population is likely healthier than the general population. For example, someone in poor health is unlikely to have a job as a manual laborer, so if a study is conducted on manual laborers, the health of the general population will likely be overestimated.

- Berkson's fallacy, when the study population is selected from a hospital and so is less healthy than the general population. This can result in a spurious negative correlation between diseases: a hospital patient without diabetes is more likely to have another given disease such as cholecystitis, since they must have had some reason to enter the hospital in the first place.

- Overmatching, matching for an apparent confounder that actually is a result of the exposure[clarification needed]. The control group becomes more similar to the cases in regard to exposure than does the general population.

- Survivorship bias, in which only "surviving" subjects are selected, ignoring those that fell out of view. For example, using the record of current companies as an indicator of business climate or economy ignores the businesses that failed and no longer exist.

- Malmquist bias, an effect in observational astronomy which leads to the preferential detection of intrinsically bright objects.

- Spotlight fallacy, the uncritical assumption that all members or cases of a certain class or type are like those that receive the most attention or coverage in the media.
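The spurious association described under Berkson's fallacy can be computed exactly for a toy model. This is a sketch under hypothetical assumptions, not data: diseases A (standing in for diabetes) and B (standing in for cholecystitis) occur independently in the population, everyone with either disease is admitted to hospital, and people with neither are admitted at a small background rate `h`. The function name `berkson` and all rates are illustrative.

```python
from fractions import Fraction


def berkson(p_b, h):
    """Return (P(B | admitted, A), P(B | admitted, not A)) for a toy hospital model.

    Hypothetical assumptions: diseases A and B are independent in the population,
    everyone with A or B is admitted, and people with neither are admitted with
    probability h. The prevalence of A cancels out of both quantities.
    """
    # Everyone with A is admitted, so admission says nothing extra about B.
    b_given_a = p_b
    # Without A, a person is admitted if they have B, or otherwise at the background rate h.
    admitted_without_a = p_b + (1 - p_b) * h
    b_given_not_a = p_b / admitted_without_a
    return b_given_a, b_given_not_a


# Hypothetical rates: B has 5% prevalence; 1% of people with neither disease are admitted.
with_a, without_a = berkson(Fraction(1, 20), Fraction(1, 100))
print(with_a, without_a)  # 1/20 100/119
```

In the population, B has prevalence 1/20 whether or not A is present. Among admitted patients, those with A still have B with probability 1/20, but those without A have B with probability 100/119 (about 0.84): restricting attention to the hospital creates a negative association that does not exist in the population.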

Symptom-based sampling

The study of medical conditions begins with anecdotal reports. By their nature, such reports only include those referred for diagnosis and treatment. A child who cannot function in school is more likely to be diagnosed with dyslexia than a child who struggles but passes. A child examined for one condition is more likely to be tested for and diagnosed with other conditions, skewing comorbidity statistics. As certain diagnoses become associated with behavior problems or intellectual disability, parents try to prevent their children from being stigmatized with those diagnoses, introducing further bias. Studies that carefully sample whole populations are showing that many conditions are much more common and usually much milder than formerly believed.

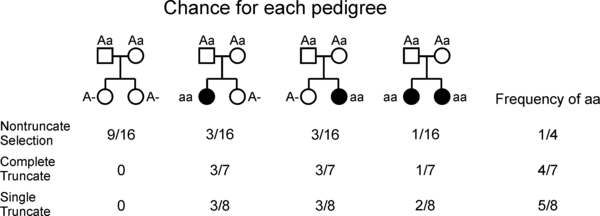

Truncate selection in pedigree studies


Geneticists are limited in how they can obtain data from human populations. As an example, consider a human characteristic. We are interested in deciding whether the characteristic is inherited as a simple Mendelian trait. Following the laws of Mendelian inheritance, if the parents in a family do not have the characteristic but carry the allele for it, they are carriers (i.e. non-expressive heterozygotes). In this case their children will each have a 25% chance of showing the characteristic. The problem arises because we cannot tell which families have both parents as carriers (heterozygous) unless they have a child who exhibits the characteristic. The description follows the textbook by Sutton.[13]

The figure shows the pedigrees of all the possible families with two children when the parents are carriers (Aa).

- Nontruncate selection. In a perfect world we would be able to discover all such families with the gene, including those who are simply carriers. In this situation the analysis would be free from ascertainment bias and the pedigrees would be under "nontruncate selection". In practice, most studies identify, and include, families in a study based on their having affected individuals.

- Truncate selection. When affected individuals have an equal chance of being included in a study, this is called truncate selection, signifying the inadvertent exclusion (truncation) of families who are carriers for a gene. Because selection is performed at the individual level, families with two or more affected children have a higher probability of being included in the study.

- Complete truncate selection is a special case where each family with an affected child has an equal chance of being selected for the study.

The probabilities of each of the families being selected are given in the figure, with the sample frequency of affected children also given. In this simple case, the researcher will observe a frequency of 4⁄7 or 5⁄8 for the characteristic, rather than the Mendelian 1⁄4, depending on the type of truncate selection used.
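The 4⁄7 and 5⁄8 frequencies can be checked by enumerating every two-child family of two carriers (Aa × Aa). This Python sketch assumes, as the text does, that each child is independently affected with probability 1⁄4, and models each selection scheme as a relative weight on families with k affected children; the function names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Each child of two carriers (Aa x Aa) is affected (aa) with probability 1/4.
P_AFFECTED = Fraction(1, 4)


def families(n_children=2):
    """Yield (probability, number_affected) for every ordered outcome of n children."""
    for kids in product([True, False], repeat=n_children):
        prob = Fraction(1)
        for affected in kids:
            prob *= P_AFFECTED if affected else 1 - P_AFFECTED
        yield prob, sum(kids)


def affected_fraction(selection_weight, n_children=2):
    """Expected share of affected children among the families that enter the study.

    selection_weight(k) is the relative chance that a family with k affected
    children is ascertained.
    """
    affected = children = Fraction(0)
    for prob, k in families(n_children):
        w = prob * selection_weight(k)
        affected += w * k
        children += w * n_children
    return affected / children


nontruncate = affected_fraction(lambda k: 1)                    # every carrier family found
complete_truncate = affected_fraction(lambda k: 1 if k else 0)  # each affected family equally likely
truncate = affected_fraction(lambda k: k)                       # each affected child equally likely
print(nontruncate, complete_truncate, truncate)  # 1/4 4/7 5/8
```

Weighting every family equally (nontruncate) recovers the Mendelian 1⁄4; weighting only families with at least one affected child, all equally (complete truncate selection), gives 4⁄7; weighting families in proportion to their number of affected children (truncate selection) gives 5⁄8.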

The caveman effect

An example of selection bias is called the "caveman effect". Much of our understanding of prehistoric peoples comes from caves, such as cave paintings made nearly 40,000 years ago. If there had been contemporary paintings on trees, animal skins or hillsides, they would have been washed away long ago. Similarly, evidence of fire pits, middens, burial sites, etc. is most likely to remain intact to the modern era in caves. Prehistoric people are associated with caves because that is where the data still exists, not necessarily because most of them lived in caves for most of their lives.[14]

Problems due to sampling bias

Sampling bias is problematic because a statistic computed from the sample may be systematically erroneous. Sampling bias can lead to a systematic over- or under-estimation of the corresponding parameter in the population. Sampling bias occurs in practice because it is nearly impossible to ensure perfect randomness in sampling. If the degree of misrepresentation is small, then the sample can be treated as a reasonable approximation to a random sample. Also, if the sample does not differ markedly in the quantity being measured, then a biased sample can still yield a reasonable estimate.
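Both claims in the paragraph above can be made precise under a simplifying assumption (not from the source): each population unit $i = 1, \dots, N$ with value $y_i$ enters the sample independently with inclusion probability $\pi_i$ (Poisson sampling). For large samples the unweighted sample mean $\bar{y}_s$ is then approximately a $\pi$-weighted population mean, and its bias relative to the population mean $\bar{y} = \tfrac{1}{N}\sum_i y_i$ reduces to a covariance, where $\bar{\pi}$ is the mean inclusion probability and the covariance is taken over the population:

$$
\begin{aligned}
\bar{y}_s &\approx \frac{\sum_i \pi_i y_i}{\sum_i \pi_i}, \\
\bar{y}_s - \bar{y} &\approx \frac{\sum_i \pi_i (y_i - \bar{y})}{\sum_i \pi_i}
= \frac{\tfrac{1}{N}\sum_i (\pi_i - \bar{\pi})(y_i - \bar{y})}{\bar{\pi}}
= \frac{\operatorname{Cov}(\pi, y)}{\bar{\pi}}.
\end{aligned}
$$

The middle step uses $\sum_i (y_i - \bar{y}) = 0$. The bias depends on how inclusion probabilities co-vary with the measured quantity, not on the sample size, and it vanishes when they are uncorrelated.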

The word bias has a strong negative connotation. Indeed, biases sometimes come from deliberate intent to mislead or other scientific fraud. In statistical usage, however, bias merely represents a mathematical property, regardless of whether it is deliberate, unconscious, or due to imperfections in the instruments used for observation. While some individuals might deliberately use a biased sample to produce misleading results, more often a biased sample simply reflects the difficulty of obtaining a truly representative sample, or ignorance of the bias in the process of measurement or analysis. An example of how ignorance of a bias can arise is the widespread use of a ratio (also called fold change) as a measure of difference in biology. Because it is easier to achieve a large ratio with two small numbers that differ by a given amount, and relatively more difficult to achieve a large ratio with two large numbers that differ by a larger amount, large significant differences may be missed when comparing relatively large numeric measurements. Some have called this a 'demarcation bias' because the use of a ratio (division) instead of a difference (subtraction) moves the results of the analysis from science into pseudoscience (see Demarcation problem).
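The ratio-versus-difference point can be seen with two invented pairs of measurements in arbitrary units. Ranked by fold change, the pair of small values looks like the bigger effect, even though its absolute change is more than a hundred times smaller than that of the pair of large values. The function names are illustrative.

```python
def fold_change(before: float, after: float) -> float:
    """Ratio of the two measurements."""
    return after / before


def difference(before: float, after: float) -> float:
    """Absolute change between the two measurements."""
    return after - before


# Hypothetical measurements (arbitrary units).
small = (2.0, 6.0)         # small values that differ by 4
large = (1000.0, 1500.0)   # large values that differ by 500

for before, after in (small, large):
    print(
        f"{before:>7} -> {after:>7}: "
        f"fold change {fold_change(before, after):.2f}, "
        f"difference {difference(before, after):.0f}"
    )
# The small pair has fold change 3.00 but difference 4;
# the large pair has fold change 1.50 but difference 500.
```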

Some samples use a biased statistical design which nevertheless allows the estimation of parameters. The U.S. National Center for Health Statistics, for example, deliberately oversamples from minority populations in many of its nationwide surveys in order to gain sufficient precision for estimates within these groups.[15] These surveys require the use of sample weights (see below) to produce proper estimates across all ethnic groups. Provided that certain conditions are met (chiefly that the weights are calculated and used correctly), these samples permit accurate estimation of population parameters.
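A common way to undo deliberate oversampling is to weight each respondent by the inverse of their probability of selection. The sketch below uses a made-up design, not the actual NCHS procedure: a population of 90,000 majority and 10,000 minority members, from which 900 and 500 people are sampled, so minority members are five times as likely to be selected. The unweighted mean over-represents the minority group's higher (hypothetical) prevalence, while the inverse-probability-weighted mean recovers the population value of 0.22. `Respondent` and `weighted_mean` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Respondent:
    group: str
    value: float  # quantity being estimated, here a 0/1 indicator for a condition


def weighted_mean(sample, inclusion_prob):
    """Ratio estimator in which each respondent is weighted by 1 / P(selected)."""
    total_w = 0.0
    total_wy = 0.0
    for r in sample:
        w = 1.0 / inclusion_prob[r.group]
        total_w += w
        total_wy += w * r.value
    return total_wy / total_w


# Hypothetical design: 900 of 90,000 majority members, 500 of 10,000 minority members.
inclusion_prob = {"majority": 900 / 90_000, "minority": 500 / 10_000}

# Hypothetical answers: 20 % of sampled majority members and 40 % of minority members have the condition.
sample = (
    [Respondent("majority", 1.0)] * 180 + [Respondent("majority", 0.0)] * 720
    + [Respondent("minority", 1.0)] * 200 + [Respondent("minority", 0.0)] * 300
)

naive = sum(r.value for r in sample) / len(sample)
weighted = weighted_mean(sample, inclusion_prob)
print(f"unweighted {naive:.3f}, weighted {weighted:.3f}")  # unweighted 0.271, weighted 0.220
```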

Historical examples

A classic example of a biased sample and the misleading results it produced occurred in 1936. In the early days of opinion polling, the American magazine The Literary Digest collected over two million postal surveys and predicted that the Republican candidate in the U.S. presidential election, Alf Landon, would beat the incumbent president, Franklin Roosevelt, by a large margin. The result was the exact opposite. The Literary Digest survey was drawn from readers of the magazine, supplemented by records of registered automobile owners and telephone users. This sample over-represented wealthy individuals, who, as a group, were more likely to vote for the Republican candidate. In contrast, a poll of only 50,000 citizens selected by George Gallup's organization successfully predicted the result, leading to the popularity of the Gallup poll.

Another classic example occurred in the 1948 presidential election. On election night, the Chicago Tribune printed the headline DEWEY DEFEATS TRUMAN, which turned out to be mistaken. In the morning the grinning re-elected president, Harry S. Truman, was photographed holding a newspaper bearing this headline. The Tribune was mistaken because its editor trusted the results of a phone survey. Survey research was then in its infancy, and few academics realized that a sample of telephone users was not representative of the general population. Telephones were not yet widespread, and those who had them tended to be prosperous and have stable addresses. (In many cities, the Bell System telephone directory contained the same names as the Social Register.) In addition, the Gallup poll on which the Tribune based its headline was over two weeks old at the time of printing.[17]

In air quality data, pollutants (such as carbon monoxide, nitrogen monoxide, nitrogen dioxide, or ozone) frequently show high correlations, as they stem from the same chemical process(es). These correlations depend on space (i.e., location) and time (i.e., period). Therefore, a pollutant distribution is not necessarily representative of every location and every period. If a low-cost measurement instrument is calibrated with field data in a multivariate manner, that is, by collocating it next to a reference instrument, the relationships between the different compounds are incorporated into the calibration model. Relocating the instrument can then produce erroneous results, because those relationships may not hold at the new site.[18]
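The relocation problem can be illustrated with a deliberately simplified, single-channel sketch; it is not the multivariate method of the cited study. Assume, hypothetically, a sensor whose signal responds to the target pollutant and, with a cross-sensitivity of 0.8, to an interfering gas. At site A the interferer happens to be half the target concentration, so a calibration fitted there against a reference instrument is exact, but it silently absorbs that site-specific correlation. At site B, where the interferer is twice the target, the same calibration reads roughly 86 % too high. All names and numbers are invented.

```python
CROSS_SENSITIVITY = 0.8  # hypothetical: sensor also responds to an interfering gas


def sensor_signal(target: float, interferer: float) -> float:
    """Raw reading of the hypothetical low-cost sensor."""
    return target + CROSS_SENSITIVITY * interferer


def fit_slope(x, y):
    """Least-squares slope k of the line y = k * x through the origin."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


# Reference-instrument concentrations used during collocation.
target = [10.0, 20.0, 30.0, 40.0, 50.0]

# Site A: the interferer co-varies with the target (interferer = 0.5 * target).
signal_a = [sensor_signal(t, 0.5 * t) for t in target]
k = fit_slope(signal_a, target)  # calibration learned at site A

# Site B: same target levels, but the interferer is now twice the target.
signal_b = [sensor_signal(t, 2.0 * t) for t in target]
estimate_b = [k * s for s in signal_b]

for t, e in zip(target, estimate_b):
    print(f"true {t:5.1f}   estimated at site B {e:6.1f}   ({e / t - 1:+.0%})")
```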

A twenty-first-century example is the COVID-19 pandemic, in which differences in sampling bias in COVID-19 testing have been shown to account for wide variations in both case fatality rates and the age distribution of cases across countries.[19][20]
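The arithmetic behind the case-fatality effect is simple: the case fatality rate divides deaths by confirmed cases, so anything that changes who gets tested changes the denominator. The sketch below assumes two hypothetical countries with identical epidemics (100,000 infections and 500 deaths, all of which are assumed to be counted), differing only in how many infections their testing detects. The numbers are invented and are not taken from the cited studies.

```python
def case_fatality_rate(deaths: float, confirmed_cases: float) -> float:
    """Deaths divided by confirmed (not total) cases."""
    return deaths / confirmed_cases


INFECTIONS = 100_000  # hypothetical: identical epidemic in both countries
DEATHS = 500          # assumption: every death is counted in both countries

# Country X tests broadly and confirms half of all infections;
# country Y mostly tests severe cases and confirms only 5 %.
for name, detected_share in (("X", 0.50), ("Y", 0.05)):
    confirmed = INFECTIONS * detected_share
    print(f"country {name}: CFR = {case_fatality_rate(DEATHS, confirmed):.1%}")
# country X: CFR = 1.0%
# country Y: CFR = 10.0%
```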

Statistical corrections for a biased sample

If entire segments of the population are excluded from a sample, then no adjustment can produce estimates that are representative of the entire population. But if some groups are underrepresented and the degree of underrepresentation can be quantified, then sample weights can correct the bias. However, the success of the correction depends on the selection model chosen: if certain variables are missing from it, the methods used to correct the bias can be inaccurate.[21]

For example, a hypothetical population might include 10 million men and 10 million women. Suppose that a biased sample of 100 respondents included 20 men and 80 women. A researcher could correct for this imbalance by attaching a weight of 2.5 to each man and 0.625 to each woman (50/20 and 50/80, respectively). This would adjust any estimates to achieve the same expected value as a sample that included exactly 50 men and 50 women, provided that, within each sex, the people who took part do not differ systematically from those who did not.[citation needed]
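The weights in the men/women example can be computed as each group's population share divided by its sample share. In the sketch below, `poststratification_weights` is a hypothetical helper and the survey answers (30 % "yes" among sampled men, 60 % among sampled women) are invented to show the effect on an estimate. The helper refuses to produce a weight for a group that is absent from the sample, reflecting the point that excluded segments cannot be recovered by reweighting.

```python
def poststratification_weights(population_counts, sample_counts):
    """Weight for each group = (population share) / (sample share)."""
    pop_total = sum(population_counts.values())
    sample_total = sum(sample_counts.values())
    weights = {}
    for group, pop_n in population_counts.items():
        n = sample_counts.get(group, 0)
        if n == 0:
            # A group missing from the sample cannot be weighted back in.
            raise ValueError(f"group {group!r} is absent from the sample")
        # Same as (pop_n / pop_total) / (n / sample_total), with a single division.
        weights[group] = (pop_n * sample_total) / (pop_total * n)
    return weights


population = {"men": 10_000_000, "women": 10_000_000}
sample = {"men": 20, "women": 80}
w = poststratification_weights(population, sample)
print(w)  # {'men': 2.5, 'women': 0.625}

# Hypothetical share answering "yes" within each sampled group.
yes_rate = {"men": 0.30, "women": 0.60}
unweighted = sum(sample[g] * yes_rate[g] for g in sample) / sum(sample.values())
weighted = (sum(sample[g] * w[g] * yes_rate[g] for g in sample)
            / sum(sample[g] * w[g] for g in sample))
print(f"unweighted {unweighted:.2f}, weighted {weighted:.2f}")  # unweighted 0.54, weighted 0.45
```

The weighted figure equals what an exactly balanced sample of 50 men and 50 women with the same within-group answers would give; it does not correct for any bias within each group.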

See also

- Censored regression model

- Cherry picking (fallacy)

- File drawer problem

- Friendship paradox

- Reporting bias

- Sampling probability

- Selection bias

- Common source bias

- Spectrum bias

- Truncated regression model

References

- ^ "Sampling Bias". Medical Dictionary. Archived from the original on 10 March 2016. Retrieved 23 September 2009.

- ^ "Biased sample". TheFreeDictionary. Retrieved 23 September 2009. Quote: Mosby's Medical Dictionary, 8th edition.

- ^ Weising K (2005). DNA fingerprinting in plants: principles, methods, and applications. London: Taylor & Francis Group. p. 180. ISBN 978-0-8493-1488-9.

- ^ Ramírez i Soriano A (29 November 2008). Selection and linkage desequilibrium tests under complex demographies and ascertainment bias (PDF) (Ph.D. thesis). Universitat Pompeu Fabra. p. 34.

- ^ Panacek EA (May 2009). "Error and Bias in Clinical Research" (PDF). SAEM Annual Meeting. New Orleans, LA: Society for Academic Emergency Medicine. Archived from the original (PDF) on 17 August 2016. Retrieved 14 November 2009.

- ^ "Ascertainment Bias". Medilexicon Medical Dictionary. Archived from the original on 6 August 2016. Retrieved 14 November 2009.

- ^ "Selection Bias". Dictionary of Cancer Terms. Archived from the original on 9 June 2009. Retrieved 23 September 2009.

- ^ Ards S, Chung C, Myers SL (February 1998). "The effects of sample selection bias on racial differences in child abuse reporting". Child Abuse & Neglect. 22 (2): 103–15. doi:10.1016/S0145-2134(97)00131-2. PMID 9504213.

- ^ Cortes C, Mohri M, Riley M, Rostamizadeh A (2008). "Sample Selection Bias Correction Theory" (PDF). Algorithmic Learning Theory. Lecture Notes in Computer Science. Vol. 5254. pp. 38–53. arXiv:0805.2775. CiteSeerX 10.1.1.144.4478. doi:10.1007/978-3-540-87987-9_8. ISBN 978-3-540-87986-2. S2CID 842488.

- ^ Cortes C, Mohri M (2014). "Domain adaptation and sample bias correction theory and algorithm for regression" (PDF). Theoretical Computer Science. 519: 103–126. CiteSeerX 10.1.1.367.6899. doi:10.1016/j.tcs.2013.09.027.

- ^ Fadem B (2009). Behavioral Science. Lippincott Williams & Wilkins. p. 262. ISBN 978-0-7817-8257-9.

- ^ Wallace R (2007). Maxcy-Rosenau-Last Public Health and Preventive Medicine (15th ed.). McGraw Hill Professional. p. 21. ISBN 978-0-07-159318-2.

- ^ Sutton HE (1988). An Introduction to Human Genetics (4th ed.). Harcourt Brace Jovanovich. ISBN 978-0-15-540099-3.

- ^ Berk RA (June 1983). "An Introduction to Sample Selection Bias in Sociological Data". American Sociological Review. 48 (3): 386–398. doi:10.2307/2095230. JSTOR 2095230.

- ^ "Minority Health". National Center for Health Statistics. 2007.

- ^ "Browser Statistics". Refsnes Data. June 2008. Retrieved 5 July 2008.

- ^ Lienhard JH. "Gallup Poll". The Engines of Our Ingenuity. Retrieved 29 September 2007.

- ^ Tancev G, Pascale C (October 2020). "The Relocation Problem of Field Calibrated Low-Cost Sensor Systems in Air Quality Monitoring: A Sampling Bias". Sensors. 20 (21): 6198. Bibcode:2020Senso..20.6198T. doi:10.3390/s20216198. PMC 7662848. PMID 33143233.

- ^ Ward D (20 April 2020). Sampling Bias: Explaining Wide Variations in COVID-19 Case Fatality Rates. Preprint (Report). Bern, Switzerland. doi:10.13140/RG.2.2.24953.62564/1.

- ^ Böttcher L, D'Orsogna MR, Chou T (May 2021). "Using excess deaths and testing statistics to determine COVID-19 mortalities". European Journal of Epidemiology. 36 (5): 545–558. doi:10.1007/s10654-021-00748-2. PMC 8127858. PMID 34002294.

- ^ Cuddeback G, Wilson E, Orme JG, Combs-Orme T (2004). "Detecting and Statistically Correcting Sample Selection Bias". Journal of Social Service Research. 30 (3): 19–33. doi:10.1300/J079v30n03_02. S2CID 11685550.