Fourier transform: Difference between revisions

0<alpha<1 →Distributions |

Added reference to "Fourier-Stieltjes" subsection. Extended the definition a bit. Maybe the subsection heading could be more clarifying |

||

| Line 1: | Line 1: | ||

{{Short description|Mathematical transform that expresses a function of time as a function of frequency}} |

|||

In [[mathematics]], the '''Fourier transform''' (often abbreviated '''FT''') is an operation that [[Transform (mathematics)|transforms]] one [[complex number|complex]]-valued [[function (mathematics)|function]] of a [[real variable]] into another. In such applications as [[signal processing]], the domain of the original function is typically [[time]] and is accordingly called the ''[[time domain]]''. That of the new function is [[frequency]], and so the Fourier transform is often called the ''[[frequency domain]] representation'' of the original function. It describes which frequencies are present in the original function. This is in a similar spirit to the way that a chord of music can be described by notes that are being played. In effect, the Fourier transform decomposes a function into [[Oscillation|oscillatory]] functions. The term Fourier transform refers both to the frequency domain representation of a function and to the process or formula that "transforms" one function into the other. |

|||

{{Not to be confused with|Sine and cosine transforms|text=Fourier's original [[sine and cosine transforms]], which may be a simpler introduction to the Fourier transform}} |

|||

{{Fourier transforms}} |

|||

[[File:CQT-piano-chord.png|thumb|An example application of the Fourier transform is determining the constituent pitches in a [[music]]al [[waveform]]. This image is the result of applying a [[constant-Q transform]] (a [[Fourier-related transform]]) to the waveform of a [[C major]] [[piano]] [[chord (music)|chord]]. The first three peaks on the left correspond to the [[fundamental frequency|fundamental frequencies]] of the notes of the chord (C, E, G). The remaining smaller peaks are higher-frequency [[overtone]]s of the fundamental pitches. A [[pitch detection algorithm]] could use the relative intensity of these peaks to infer which notes the pianist pressed.]] |

|||

In [[mathematics]], the '''Fourier transform''' ('''FT''') is an [[integral transform]] that takes a [[function (mathematics)|function]] as input and outputs another function that describes the extent to which various [[Frequency|frequencies]] are present in the original function. The output of the transform is a [[complex number|complex]]-valued function of frequency. The term ''Fourier transform'' refers to both this complex-valued function and the [[Operation (mathematics)|mathematical operation]]. When a distinction needs to be made, the output of the operation is sometimes called the [[frequency domain]] representation of the original function. The Fourier transform is analogous to decomposing the [[sound]] of a musical [[Chord (music)|chord]] into the [[sound intensity|intensities]] of its constituent [[Pitch (music)|pitches]]. |

|||

The Fourier transform and its generalizations are the subject of [[Fourier analysis]]. In this specific case, both the time and frequency domains are [[bounded set|unbounded]] [[linear continuum|linear continua]]. It is possible to define the Fourier transform of a function of several variables, which is important for instance in the physical study of [[wave motion]] and [[optics]]. It is also possible to generalize the Fourier transform to [[discrete mathematics|discrete]] structures such as [[finite group]]s; its efficient computation through a [[fast Fourier transform]] is essential for high-speed computing. |

|||

[[File:Fourier transform time and frequency domains (small).gif|thumb|right|The Fourier transform relates the time domain, in red, with a function in the domain of the frequency, in blue. The component frequencies, extended for the whole frequency spectrum, are shown as peaks in the domain of the frequency.]] |

|||

{{multiple image |

|||

| total_width = 300 |

|||

| align = right |

|||

| image1 = Sine voltage.svg |

|||

| image2 = Phase shift.svg |

|||

| footer = |

|||

The red [[sine wave|sinusoid]] can be described by peak amplitude (1), peak-to-peak (2), [[root mean square|RMS]] (3), and [[wavelength]] (4). The red and blue sinusoids have a phase difference of {{mvar|θ}}. |

|||

}}Functions that are localized in the time domain have Fourier transforms that are spread out across the frequency domain and vice versa, a phenomenon known as the [[#Uncertainty principle|uncertainty principle]]. The [[critical point (mathematics)|critical]] case for this principle is the [[Gaussian function]], of substantial importance in [[probability theory]] and [[statistics]] as well as in the study of physical phenomena exhibiting [[normal distribution]] (e.g., [[diffusion]]). The Fourier transform of a Gaussian function is another Gaussian function. [[Joseph Fourier]] introduced [[sine and cosine transforms]] (which [[Sine and cosine transforms#Relation with complex exponentials|correspond to the imaginary and real components]] of the modern Fourier transform) in his study of [[heat transfer]], where Gaussian functions appear as solutions of the [[heat equation]]. |

|||

The Fourier transform can be formally defined as an [[improper integral|improper]] [[Riemann integral]], making it an integral transform, although this definition is not suitable for many applications requiring a more sophisticated integration theory.<ref group=note>Depending on the application a [[Lebesgue integral]], [[distribution (mathematics)|distributional]], or other approach may be most appropriate.</ref> For example, many relatively simple applications use the [[Dirac delta function]], which can be treated formally as if it were a function, but the justification requires a mathematically more sophisticated viewpoint.<ref group=note>{{harvtxt|Vretblad|2000}} provides solid justification for these formal procedures without going too deeply into [[functional analysis]] or the [[distribution (mathematics)|theory of distributions]].</ref> |

|||

The Fourier transform can also be generalized to functions of several variables on [[Euclidean space]], sending a function of {{nowrap|3-dimensional}} 'position space' to a function of {{nowrap|3-dimensional}} momentum (or a function of space and time to a function of [[4-momentum]]). This idea makes the spatial Fourier transform very natural in the study of waves, as well as in [[quantum mechanics]], where it is important to be able to represent wave solutions as functions of either position or momentum and sometimes both. In general, functions to which Fourier methods are applicable are complex-valued, and possibly [[vector-valued function|vector-valued]].<ref group=note>In [[relativistic quantum mechanics]] one encounters vector-valued Fourier transforms of multi-component wave functions. In [[quantum field theory]], operator-valued Fourier transforms of operator-valued functions of spacetime are in frequent use, see for example {{harvtxt|Greiner|Reinhardt|1996}}.</ref> Still further generalization is possible to functions on [[group (mathematics)|groups]], which, besides the original Fourier transform on [[Real number#Arithmetic|{{math|'''R'''}}]] or {{math|'''R'''<sup>''n''</sup>}}, notably includes the [[discrete-time Fourier transform]] (DTFT, group = {{math|[[integers|'''Z''']]}}), the [[discrete Fourier transform]] (DFT, group = [[cyclic group|{{math|'''Z''' mod ''N''}}]]) and the [[Fourier series]] or circular Fourier transform (group = {{math|[[circle group|''S''<sup>1</sup>]]}}, the unit circle ≈ closed finite interval with endpoints identified). The latter is routinely employed to handle [[periodic function]]s. The [[fast Fourier transform]] (FFT) is an algorithm for computing the DFT. |

|||

{{Fourier transforms}} |

|||

== Definition == |

== Definition == |

||

There are several common conventions for defining the Fourier transform of an [[Lebesgue integration|integrable]] function {{nowrap|''ƒ'' : '''R''' → '''C'''}} {{harv|Kaiser|1994}}. This article will use the definition: |

|||

:<math>\hat{f}(\xi) = \int_{-\infty}^{\infty} f(x)\ e^{- 2\pi i x \xi}\,dx, </math> for every [[real number]] [[Xi (letter)|''ξ'']]. |

|||

The Fourier transform is an ''analysis'' process, decomposing a complex-valued function <math>\textstyle f(x)</math> into its constituent frequencies and their amplitudes. The inverse process is ''synthesis'', which recreates <math>\textstyle f(x)</math> from its transform. |

|||

When the independent variable ''x'' represents ''time'' (with [[SI]] unit of [[second]]s), the transform variable ''ξ'' represents [[frequency]] (in [[hertz]]). Under suitable conditions, ''ƒ'' can be reconstructed from <math>\hat f</math> by the '''inverse transform''': |

|||

We can start with an analogy, the [[Fourier series]], which analyzes <math>\textstyle f(x)</math> over a bounded interval <math>[-P/2, P/2]</math> on the real line. The constituent frequencies at <math>\tfrac{n}{P}, n \in \mathbb Z,</math> form a discrete set of ''harmonics'' whose amplitude and phase are given by the '''analysis formula:'''<math display="block">c_n = \frac{1}{P} \int_{-P/2}^{P/2} f(x) \, e^{-i 2\pi \frac{n}{P}x} \, dx.</math>The actual '''Fourier series''' is the '''synthesis formula:'''<math display="block">f(x) = \sum_{n=-\infty}^\infty c_n\, e^{i 2\pi \tfrac{n}{P}x},\quad \textstyle x \in [-P/2, P/2].</math>On an unbounded interval, <math>P\to\infty,</math> the constituent frequencies are a continuum''':''' <math>\tfrac{n}{P} \to \xi \in \mathbb R,</math><ref>{{harvnb|Khare|Butola|Rajora|2023|pp=13–14}}</ref><ref>{{harvnb|Kaiser|1994|p=29}}</ref><ref>{{harvnb|Rahman|2011|p=11}}</ref> and <math>c_n</math> is replaced by a function''':'''<ref>{{harvnb|Dym|McKean|1985}}</ref>{{Equation box 1|title =Fourier transform |

|||

:<math>f(x) = \int_{-\infty}^{\infty} \hat{f}(\xi)\ e^{2 \pi i x \xi}\,d\xi, </math> for every real number ''x''. |

|||

|indent =:|cellpadding= 6 |border |border colour = #0073CF |background colour=#F5FFFA |

|||

|equation = {{NumBlk|| |

|||

<math>\widehat{f}(\xi) = \int_{-\infty}^{\infty} f(x)\ e^{-i 2\pi \xi x}\,dx.</math> |

|||

|{{EquationRef|Eq.1}}}} |

|||

}} |

|||

Evaluating the Fourier transform for all values of <math>\xi</math> produces the ''frequency-domain'' function. Though, in general, the integral can diverge at some frequencies, if <math>f(x)</math> decays with all derivatives, i.e., |

|||

For other common conventions and notations, see [[Fourier transform#Other conventions|Other conventions]] and [[Fourier transform#Other notations|Other notations]] below. The [[#Fourier transform on Euclidean space|Fourier transform on Euclidean space]] is treated separately, in which the variable ''x'' often represents position and ''ξ'' momentum. |

|||

<math display="block">\lim_{|x|\to\infty} f^{(n)}(x) = 0, \quad \forall n\in \mathbb{N},</math> then <math>\widehat f</math> converges for all frequencies and, by the [[Riemann–Lebesgue lemma]], <math>\widehat f</math> also decays with all derivatives. |

|||

The complex number <math>\widehat{f}(\xi)</math>, in polar coordinates, conveys both [[amplitude]] and [[phase offset|phase]] of frequency <math>\xi.</math> The intuitive interpretation of {{EquationNote|Eq.1}} is that the effect of multiplying <math>f(x)</math> by <math>e^{-i 2\pi \xi x}</math> is to subtract <math>\xi</math> from every frequency component of function <math>f(x).</math><ref group="note">A possible source of confusion is the [[#Frequency shifting|frequency-shifting property]]; i.e. the transform of function <math>f(x)e^{-i 2\pi \xi_0 x}</math> is <math>\widehat{f}(\xi+\xi_0).</math> The value of this function at <math>\xi=0</math> is <math>\widehat{f}(\xi_0),</math> meaning that a frequency <math>\xi_0</math> has been shifted to zero (also see [[Negative frequency#Simplifying the Fourier transform|Negative frequency]]).</ref> Only the component that was at frequency <math>\xi</math> can produce a non-zero value of the infinite integral, because (at least formally) all the other shifted components are oscillatory and integrate to zero. (see {{slink||Example}}) |

|||

==Introduction== |

|||

{{See also|Fourier analysis}} |

|||

The motivation for the Fourier transform comes from the study of [[Fourier series]]. In the study of Fourier series, complicated periodic functions are written as the sum of simple waves mathematically represented by [[sine]]s and [[cosine]]s. Due to the properties of sine and cosine it is possible to recover the amount of each wave in the sum by an integral. In many cases it is desirable to use [[Euler's formula]], which states that ''e''<sup>2''πiθ''</sup> = cos 2''πθ'' + ''i'' sin 2''πθ'', to write Fourier series in terms of the basic waves ''e''<sup>2''πiθ''</sup>. This has the advantage of simplifying many of the formulas involved and providing a formulation for Fourier series that more closely resembles the definition followed in this article. This passage from sines and cosines to [[complex exponentials]] makes it necessary for the Fourier coefficients to be complex valued. The usual interpretation of this complex number is that it gives you both the [[amplitude]] (or size) of the wave present in the function and the [[phase (waves)|phase]] (or the initial angle) of the wave. This passage also introduces the need for negative "frequencies". If θ were measured in seconds then the waves ''e''<sup>2''πiθ''</sup> and ''e''<sup>−2''πiθ''</sup> would both complete one cycle per second, but they represent different frequencies in the Fourier transform. Hence, frequency no longer measures the number of cycles per unit time, but is closely related. |

|||

The corresponding synthesis formula is: |

|||

We may use Fourier series to motivate the Fourier transform as follows. Suppose that ''ƒ'' is a function which is zero outside of some interval [−''L''/2, ''L''/2]. Then for any ''T'' ≥ ''L'' we may expand ''ƒ'' in a Fourier series on the interval [−''T''/2, ''T''/2], where the "amount" (denoted by ''c''<sub>''n''</sub>) of the wave ''e''<sup>2''πinx/T''</sup> in the Fourier series of ''ƒ'' is given by |

|||

{{Equation box 1|title = Inverse transform |

|||

:<math>\hat{f}(n/T)=c_n=\int_{-T/2}^{T/2} e^{-2\pi i nx/T}f(x)\,dx</math> |

|||

|indent =:|cellpadding= 6 |border |border colour = #0073CF |background colour=#F5FFFA |

|||

|equation = {{NumBlk|| |

|||

<math>f(x) = \int_{-\infty}^{\infty} \widehat f(\xi)\ e^{i 2 \pi \xi x}\,d\xi,\quad \forall\ x \in \mathbb R.</math> |

|||

|{{EquationRef|Eq.2}}}} |

|||

}} |

|||

{{EquationNote|Eq.2}} is a representation of <math>f(x)</math> as a weighted summation of complex exponential functions. |

|||

and ''ƒ'' should be given by the formula |

|||

This is also known as the [[Fourier inversion theorem]], and was first introduced in [[Joseph Fourier|Fourier's]] ''Analytical Theory of Heat''.<ref>{{harvnb|Fourier|1822|p=525}}</ref><ref>{{harvnb|Fourier|1878|p=408}}</ref><ref>{{harvtxt|Jordan|1883}} proves on pp. 216–226 the [[Fourier inversion theorem#Fourier integral theorem|Fourier integral theorem]] before studying Fourier series.</ref><ref>{{harvnb|Titchmarsh|1986|p=1}}</ref> |

|||

:<math>f(x)=\frac{1}{T}\sum_{n=-\infty}^\infty \hat{f}(n/T) e^{2\pi i nx/T}.</math> |

|||

The functions <math>f</math> and <math>\widehat{f}</math> are referred to as a '''Fourier transform pair'''.<ref>{{harvnb|Rahman|2011|p=10}}.</ref> A common notation for designating transform pairs is''':'''<ref>{{harvnb|Oppenheim|Schafer|Buck|1999|p=58}}</ref> |

|||

If we let ''ξ''<sub>''n''</sub> = ''n''/''T'', and we let Δ''ξ'' = (''n'' + 1)/''T'' − ''n''/''T'' = 1/''T'', then this last sum becomes the [[Riemann sum]] |

|||

<math display="block">f(x)\ \stackrel{\mathcal{F}}{\longleftrightarrow}\ \widehat f(\xi),</math> for example <math>\operatorname{rect}(x)\ \stackrel{\mathcal{F}}{\longleftrightarrow}\ \operatorname{sinc}(\xi).</math> |

|||

=== Lebesgue integrable functions === |

|||

:<math>f(x)=\sum_{n=-\infty}^\infty \hat{f}(n/T) e^{2\pi i x\xi_n}\Delta\xi.</math> |

|||

{{see also|Lp space#Lp spaces and Lebesgue integrals}} |

|||

A [[measurable function]] <math>f:\mathbb R\to\mathbb C</math> is called (Lebesgue) integrable if the [[Lebesgue integral]] of its absolute value is finite: |

|||

By letting ''T'' → ∞ this Riemann sum converges to the integral for the inverse Fourier transform given in the Definition section. Under suitable conditions this argument may be made precise {{harv|Stein|Shakarchi|2003}}. Hence, as in the case of Fourier series, the Fourier transform can be thought of as a function that measures how much of each individual frequency is present in our function, and we can recombine these waves by using an integral (or "continuous sum") to reproduce the original function. |

|||

<math display="block">\|f\|_1 = \int_{\mathbb R}|f(x)|\,dx < \infty.</math> |

|||

For a Lebesgue integrable function <math>f</math> the Fourier transform is defined by {{EquationNote|Eq.1}}.{{sfn|Stade|2005|pp=298-299}} |

|||

The integral {{EquationNote|Eq.1}} is well-defined for all <math>\xi\in\mathbb R,</math> because of the assumption <math>\|f\|_1<\infty</math>. (It can be shown that the function <math>\widehat f\in L^\infty\cap C(\mathbb R)</math> is bounded and [[uniformly continuous]] in the frequency domain, and moreover, by the [[Riemann–Lebesgue lemma]], it is zero at infinity.) |

|||

The space <math>L^1(\mathbb R)</math> is the space of measurable functions for which the norm <math>\|f\|_1</math> is finite, modulo the [[Equivalence_class|equivalence relation]] of equality [[almost everywhere]]. The Fourier transform is [[Bijection,_injection_and_surjection|one-to-one]] on <math>L^1(\mathbb R)</math>. However, there is no easy characterization of the image, and thus no easy characterization of the inverse transform. In particular, {{EquationNote|Eq.2}} is no longer valid, as it was stated only under the hypothesis that <math>f(x)</math> decayed with all derivatives. |

|||

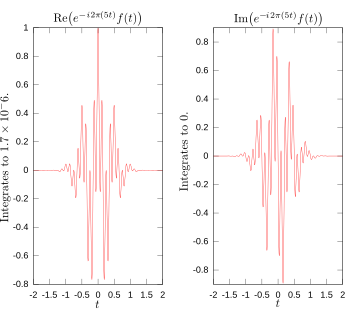

The following images provide a visual illustration of how the Fourier transform measures whether a frequency is present in a particular function. The function depicted <math>f(t)=\cos(6\pi t)e^{-\pi t^2}</math> oscillates at 3 hertz (if ''t'' measures seconds) and tends quickly to 0. This function was specially chosen to have a real Fourier transform which can easily be plotted. The first image contains its graph. In order to calculate <math>\hat{f}(3)</math> |

|||

we must integrate ''e''<sup>−2''πi(''3''t'')</sup>''ƒ''(''t''). The second image shows the plot of the real and imaginary parts of this function. The real part of the integrand is almost always positive: because ''ƒ''(''t'') and the real part of ''e''<sup>−2''πi(''3''t'')</sup> oscillate at the same rate, when ''ƒ''(''t'') is negative the real part of ''e''<sup>−2''πi(''3''t'')</sup> is negative as well, and when ''ƒ''(''t'') is positive, so is the real part of ''e''<sup>−2''πi(''3''t'')</sup>. The result is that integrating the real part of the integrand gives a relatively large number (in this case 0.5). On the other hand, when we try to measure a frequency that is not present, as in the case of <math>\hat{f}(5)</math>, the integrand oscillates enough that the integral is very small. The general situation may be a bit more complicated than this, but this in spirit is how the Fourier transform measures how much of an individual frequency is present in a function ''ƒ''(''t''). |
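The two values discussed above can be checked numerically. The following is a minimal sketch, not part of the original article: it approximates the defining integral with the trapezoidal rule, and the helper name <code>fourier_transform</code>, the integration window and the step size are illustrative assumptions.

```python
import cmath
import math

def f(t):
    """Example signal from the text: a 3 Hz cosine under a Gaussian envelope."""
    return math.cos(6 * math.pi * t) * math.exp(-math.pi * t * t)

def fourier_transform(func, xi, half_width=8.0, step=1e-3):
    """Approximate the integral of func(t) * exp(-2*pi*i*xi*t) over the real line.

    Uses the trapezoidal rule on [-half_width, half_width]; the window and
    step are illustrative choices that suit this rapidly decaying signal.
    """
    n = int(round(2 * half_width / step))
    total = 0j
    for k in range(n + 1):
        t = -half_width + k * step
        weight = 0.5 if k in (0, n) else 1.0  # end points count half
        total += weight * func(t) * cmath.exp(-2j * math.pi * xi * t)
    return total * step

at_3_hz = fourier_transform(f, 3.0)  # frequency present in the signal
at_5_hz = fourier_transform(f, 5.0)  # frequency absent from the signal

print(f"f_hat(3) is approximately {at_3_hz.real:.6f}")
print(f"|f_hat(5)| is approximately {abs(at_5_hz):.2e}")
```

Under these assumptions the value at 3 hertz comes out close to 0.5, while the value at 5 hertz is several orders of magnitude smaller, in line with the description above.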

|||

<gallery> |

|||

Image:Function ocsillating at 3 hertz.svg|Original function showing oscillation at 3 hertz. |

|||

Image:Onfreq.svg| Real and imaginary parts of integrand for Fourier transform at 3 hertz |

|||

Image:Offfreq.svg| Real and imaginary parts of integrand for Fourier transform at 5 hertz |

|||

Image:Fourier transform of oscillating function.svg| Fourier transform with 3 and 5 hertz labeled. |

|||

</gallery> |

|||

Moreover, while {{EquationNote|Eq.1}} defines the Fourier transform for (complex-valued) functions in <math>L^1(\mathbb R)</math>, it is easy to see that it is not well-defined for other integrability classes, most importantly the space of [[square-integrable function]]s <math>L^2(\mathbb R)</math>. For example, the function <math>f(x)=(1+x^2)^{-1/2}</math> is in <math>L^2</math> but not <math>L^1</math>, so the integral {{EquationNote|Eq.1}} diverges. However, the Fourier transform on the dense subspace <math>L^1\cap L^2(\mathbb R) \subset L^2(\mathbb R)</math> admits a unique continuous extension to a [[unitary operator]] on <math>L^2(\mathbb R)</math>. This extension is important in part because the Fourier transform preserves the space <math>L^2(\mathbb R)</math>. That is, unlike the case of <math>L^1</math>, both the Fourier transform and its inverse act on the same function space <math>L^2(\mathbb R)</math>. |
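As a quick check of the example <math>f(x)=(1+x^2)^{-1/2}</math> (a standard computation, added here only as an illustration), the square of the function has a finite integral while the function itself does not:

<math display="block">\int_{-\infty}^{\infty} \left|(1+x^2)^{-1/2}\right|^2 dx = \int_{-\infty}^{\infty} \frac{dx}{1+x^2} = \pi, \qquad \int_{-R}^{R} \frac{dx}{\sqrt{1+x^2}} = 2\operatorname{arsinh}(R) = 2\ln\left(R+\sqrt{1+R^2}\right) \to \infty \quad \text{as } R\to\infty.</math>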

|||

== Properties of the Fourier transform== |

|||

In such cases, the Fourier transform can be obtained explicitly by regularizing the integral, and then passing to a limit. In practice, the integral is often regarded as an [[improper integral]] instead of a proper Lebesgue integral, but sometimes for convergence one needs to use [[weak limit]] or [[Cauchy principal value|principal value]] instead of the (pointwise) limits implicit in an improper integral. {{harvtxt|Titchmarsh|1986}} and {{harvtxt|Dym|McKean|1985}} each gives three rigorous ways of extending the Fourier transform to square integrable functions using this procedure. A general principle in working with the <math>L^2</math> Fourier transform is that Gaussians are dense in <math>L^1\cap L^2</math>, and the various features of the Fourier transform, such as its unitarity, are easily inferred for Gaussians. Many of the properties of the Fourier transform can then be proven from two facts about Gaussians:{{sfn|Howe|1980}} |

|||

An ''integrable function'' is a function ''ƒ'' on the real line that is [[Measurable function|Lebesgue-measurable]] and satisfies |

|||

* that <math>e^{-\pi x^2}</math> is its own Fourier transform; and |

|||

* that the Gaussian integral <math>\int_{-\infty}^\infty e^{-\pi x^2}\,dx = 1.</math> |
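The two facts about Gaussians listed above can be checked numerically. This is a minimal sketch under stated assumptions: the helper names <code>trapezoid</code> and <code>transform</code>, the window [−6, 6] and the grid size are illustrative choices, and the transform uses the {{EquationNote|Eq.1}} convention.

```python
import cmath
import math

def gaussian(x):
    """The function exp(-pi x^2) from the two facts above."""
    return math.exp(-math.pi * x * x)

def trapezoid(func, lo, hi, n):
    """Composite trapezoidal rule with n subintervals; func may be complex-valued."""
    h = (hi - lo) / n
    total = 0.5 * (func(lo) + func(hi))
    for k in range(1, n):
        total += func(lo + k * h)
    return total * h

def transform(xi):
    """Approximate the Eq.1 integral of the Gaussian at frequency xi."""
    return trapezoid(
        lambda x: gaussian(x) * cmath.exp(-2j * math.pi * xi * x), -6.0, 6.0, 1200
    )

# Fact 2: the Gaussian integral equals 1.
area = trapezoid(gaussian, -6.0, 6.0, 1200)

# Fact 1: the transform at xi equals the Gaussian evaluated at xi.
worst_error = max(abs(transform(xi) - gaussian(xi)) for xi in (0.0, 0.5, 1.0, 2.0))

print(f"integral of the Gaussian: {area:.12f}")
print(f"largest deviation from self-transform: {worst_error:.2e}")
```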

|||

A feature of the <math>L^1</math> Fourier transform is that it is a homomorphism of Banach algebras from <math>L^1</math> equipped with the convolution operation to the Banach algebra of continuous functions under the <math>L^\infty</math> (supremum) norm. The conventions chosen in this article are those of [[harmonic analysis]], and are characterized as the unique conventions such that the Fourier transform is both [[Unitary operator|unitary]] on {{math|''L''<sup>2</sup>}} and an algebra homomorphism from {{math|''L''<sup>1</sup>}} to {{math|''L''<sup>∞</sup>}}, without renormalizing the Lebesgue measure.<ref>{{harvnb|Folland|1989}}</ref> |

|||

:<math>\int_{-\infty}^\infty |f(x)| \, dx < \infty.</math> |

|||

=== Angular frequency (''ω'') === |

|||

When the independent variable (<math>x</math>) represents ''time'' (often denoted by <math>t</math>), the transform variable (<math>\xi</math>) represents [[frequency]] (often denoted by <math>f</math>). For example, if time is measured in [[second]]s, then frequency is in [[hertz]]. The Fourier transform can also be written in terms of [[angular frequency]], <math>\omega = 2\pi \xi,</math> whose units are [[radian]]s per second. |

|||

The substitution <math>\xi = \tfrac{\omega}{2 \pi}</math> into {{EquationNote|Eq.1}} produces this convention, where function <math>\widehat f</math> is relabeled <math>\widehat {f_1}:</math> |

|||

<math display="block">\begin{align} |

|||

\widehat {f_3}(\omega) &\triangleq \int_{-\infty}^{\infty} f(x)\cdot e^{-i\omega x}\, dx = \widehat{f_1}\left(\tfrac{\omega}{2\pi}\right),\\ |

|||

f(x) &= \frac{1}{2\pi} \int_{-\infty}^{\infty} \widehat{f_3}(\omega)\cdot e^{i\omega x}\, d\omega. |

|||

\end{align} |

|||

</math> |

|||

Unlike the {{EquationNote|Eq.1}} definition, the Fourier transform is no longer a [[unitary transformation]], and there is less symmetry between the formulas for the transform and its inverse. Those properties are restored by splitting the <math>2 \pi</math> factor evenly between the transform and its inverse, which leads to another convention: |

|||

<math display="block">\begin{align} |

|||

\widehat{f_2}(\omega) &\triangleq \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} f(x)\cdot e^{- i\omega x}\, dx = \frac{1}{\sqrt{2\pi}}\ \ \widehat{f_1}\left(\tfrac{\omega}{2\pi}\right), \\ |

|||

f(x) &= \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} \widehat{f_2}(\omega)\cdot e^{ i\omega x}\, d\omega. |

|||

\end{align}</math> |

|||

Variations of all three conventions can be created by conjugating the complex-exponential [[integral kernel|kernel]] of both the forward and the reverse transform. The signs must be opposites. |

|||

{| class="wikitable" |

|||

|+ Summary of popular forms of the Fourier transform, one-dimensional |

|||

|- |

|||

! ordinary frequency {{mvar|ξ}} (Hz) |

|||

! unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_1}(\xi)\ &\triangleq\ \int_{-\infty}^{\infty} f(x)\, e^{-i 2\pi \xi x}\, dx = \sqrt{2\pi}\ \ \widehat{f_2}(2 \pi \xi) = \widehat{f_3}(2 \pi \xi) \\ |

|||

f(x) &= \int_{-\infty}^{\infty} \widehat{f_1}(\xi)\, e^{i 2\pi x \xi}\, d\xi \end{align}</math> |

|||

|- |

|||

! rowspan="2" | angular frequency {{mvar|ω}} (rad/s) |

|||

! unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_2}(\omega)\ &\triangleq\ \frac{1}{\sqrt{2\pi}}\ \int_{-\infty}^{\infty} f(x)\, e^{-i \omega x}\, dx = \frac{1}{\sqrt{2\pi}}\ \ \widehat{f_1} \! \left(\frac{\omega}{2 \pi}\right) = \frac{1}{\sqrt{2\pi}}\ \ \widehat{f_3}(\omega) \\ |

|||

f(x) &= \frac{1}{\sqrt{2\pi}}\ \int_{-\infty}^{\infty} \widehat{f_2}(\omega)\, e^{i \omega x}\, d\omega \end{align}</math> |

|||

|- |

|||

! non-unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_3}(\omega) \ &\triangleq\ \int_{-\infty}^{\infty} f(x)\, e^{-i\omega x}\, dx = \widehat{f_1} \left(\frac{\omega}{2 \pi}\right) = \sqrt{2\pi}\ \ \widehat{f_2}(\omega) \\ |

|||

f(x) &= \frac{1}{2 \pi} \int_{-\infty}^{\infty} \widehat{f_3}(\omega)\, e^{i \omega x}\, d\omega \end{align}</math> |

|||

|} |
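The relations between the three one-dimensional conventions can be verified numerically. The sketch below is illustrative only: it uses the Gaussian <math>e^{-\pi x^2}</math> as a test function, and the function names <code>f1_hat</code>, <code>f2_hat</code>, <code>f3_hat</code>, the integration windows and the grid sizes are assumptions made for this example.

```python
import cmath
import math

def f(x):
    """Test function: the Gaussian exp(-pi x^2)."""
    return math.exp(-math.pi * x * x)

def trapezoid(func, lo, hi, n):
    """Composite trapezoidal rule with n subintervals; func may be complex-valued."""
    h = (hi - lo) / n
    total = 0.5 * (func(lo) + func(hi))
    for k in range(1, n):
        total += func(lo + k * h)
    return total * h

def f1_hat(xi):
    """Ordinary frequency, unitary."""
    return trapezoid(lambda x: f(x) * cmath.exp(-2j * math.pi * xi * x), -6.0, 6.0, 600)

def f2_hat(omega):
    """Angular frequency, unitary."""
    integral = trapezoid(lambda x: f(x) * cmath.exp(-1j * omega * x), -6.0, 6.0, 600)
    return integral / math.sqrt(2 * math.pi)

def f3_hat(omega):
    """Angular frequency, non-unitary."""
    return trapezoid(lambda x: f(x) * cmath.exp(-1j * omega * x), -6.0, 6.0, 600)

# Forward relations from the table, checked at one angular frequency.
omega0 = 2.0
gap_13 = abs(f3_hat(omega0) - f1_hat(omega0 / (2 * math.pi)))
gap_23 = abs(f3_hat(omega0) - math.sqrt(2 * math.pi) * f2_hat(omega0))

# Each inverse formula, with its own normalization, should recover f(x0).
x0 = 0.3
from_f1 = trapezoid(lambda xi: f1_hat(xi) * cmath.exp(2j * math.pi * xi * x0), -4.0, 4.0, 400)
from_f2 = trapezoid(lambda w: f2_hat(w) * cmath.exp(1j * w * x0), -25.0, 25.0, 500) / math.sqrt(2 * math.pi)
from_f3 = trapezoid(lambda w: f3_hat(w) * cmath.exp(1j * w * x0), -25.0, 25.0, 500) / (2 * math.pi)

print(f"f(x0) = {f(x0):.9f}")
print(f"recovered: {from_f1.real:.9f}, {from_f2.real:.9f}, {from_f3.real:.9f}")
```

The nested quadrature is deliberately naive: it favours a direct reading of the formulas over speed.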

|||

{| class="wikitable" |

|||

|+ Generalization for {{math|''n''}}-dimensional functions |

|||

|- |

|||

! ordinary frequency {{mvar|ξ}} (Hz) |

|||

! unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_1}(\xi)\ &\triangleq\ \int_{\mathbb{R}^n} f(x) e^{-i 2\pi \xi\cdot x}\, dx = (2 \pi)^\frac{n}{2}\widehat{f_2}(2\pi \xi) = \widehat{f_3}(2\pi \xi) \\ |

|||

f(x) &= \int_{\mathbb{R}^n} \widehat{f_1}(\xi) e^{i 2\pi \xi\cdot x}\, d\xi \end{align}</math> |

|||

|- |

|||

! rowspan="2" | angular frequency {{mvar|ω}} (rad/s) |

|||

! unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_2}(\omega)\ &\triangleq\ \frac{1}{(2 \pi)^\frac{n}{2}} \int_{\mathbb{R}^n} f(x) e^{-i \omega\cdot x}\, dx = \frac{1}{(2 \pi)^\frac{n}{2}} \widehat{f_1} \! \left(\frac{\omega}{2 \pi}\right) = \frac{1}{(2 \pi)^\frac{n}{2}} \widehat{f_3}(\omega) \\ |

|||

f(x) &= \frac{1}{(2 \pi)^\frac{n}{2}} \int_{\mathbb{R}^n} \widehat{f_2}(\omega)e^{i \omega\cdot x}\, d\omega \end{align}</math> |

|||

|- |

|||

! non-unitary |

|||

| <math>\begin{align} |

|||

\widehat{f_3}(\omega) \ &\triangleq\ \int_{\mathbb{R}^n} f(x) e^{-i\omega\cdot x}\, dx = \widehat{f_1} \left(\frac{\omega}{2 \pi}\right) = (2 \pi)^\frac{n}{2} \widehat{f_2}(\omega) \\ |

|||

f(x) &= \frac{1}{(2 \pi)^n} \int_{\mathbb{R}^n} \widehat{f_3}(\omega) e^{i \omega\cdot x}\, d\omega \end{align}</math> |

|||

|} |

|||

== Background == |

|||

=== History === |

|||

{{Main|Fourier analysis#History|Fourier series#History}} |

|||

In 1822, Fourier claimed (see {{Slink|Joseph Fourier|The Analytic Theory of Heat}}) that any function, whether continuous or discontinuous, can be expanded into a series of sines.<ref>{{harvnb|Fourier|1822}}</ref> That important work was corrected and expanded upon by others to provide the foundation for the various forms of the Fourier transform used since. |

|||

[[File:unfasor.gif|thumb|right|Fig.1 When function <math>A \cdot e^{i 2\pi \xi t}</math> is depicted in the complex plane, the vector formed by its [[complex number|imaginary and real parts]] rotates around the origin. Its real part <math>y(t)</math> is a cosine wave.]] |

|||

=== Complex sinusoids === |

|||

In general, the coefficients <math>\widehat f(\xi)</math> are complex numbers, which have two equivalent forms (see [[Euler's formula]]): |

|||

<math display="block"> \widehat f(\xi) = \underbrace{A e^{i \theta}}_{\text{polar coordinate form}} |

|||

= \underbrace{A \cos(\theta) + i A \sin(\theta)}_{\text{rectangular coordinate form}}.</math> |

|||

The product with <math>e^{i 2 \pi \xi x}</math> ({{EquationNote|Eq.2}}) has these forms: |

|||

<math display="block">\begin{aligned}\widehat f(\xi)\cdot e^{i 2 \pi \xi x} |

|||

&= A e^{i \theta} \cdot e^{i 2 \pi \xi x}\\ |

|||

&= \underbrace{A e^{i (2 \pi \xi x+\theta)}}_{\text{polar coordinate form}}\\ |

|||

&= \underbrace{A\cos(2\pi \xi x +\theta) + i A\sin(2\pi \xi x +\theta)}_{\text{rectangular coordinate form}}.\end{aligned}</math> |

|||

It is noteworthy how easily the product was simplified using the polar form, and how easily the rectangular form was deduced by an application of Euler's formula. |
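The equivalence of the polar and rectangular forms can be confirmed with complex arithmetic. This is a small sketch in which the amplitude, phase, frequency and position values are arbitrary illustrative choices.

```python
import cmath
import math

# Arbitrary illustrative values for the coefficient A*exp(i*theta) and the sinusoid.
A, theta = 2.0, 0.75
xi, x = 3.0, 0.125

coefficient = cmath.rect(A, theta)                     # A * exp(i*theta)
product = coefficient * cmath.exp(2j * math.pi * xi * x)

angle = 2 * math.pi * xi * x + theta
polar_form = cmath.rect(A, angle)                      # A * exp(i*(2*pi*xi*x + theta))
rectangular_form = complex(A * math.cos(angle), A * math.sin(angle))

print(product, polar_form, rectangular_form)
```

All three printed values agree up to rounding, and the modulus of the product stays equal to the amplitude <math>A</math>.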

|||

=== Negative frequency === |

|||

{{See also|Negative frequency#Simplifying the Fourier transform|l1=Negative frequency § Simplifying the Fourier transform}} |

|||

Euler's formula introduces the possibility of negative <math>\xi,</math> and {{EquationNote|Eq.1}} is defined <math>\forall \xi \in \mathbb{R}.</math> Only certain complex-valued <math> f(x)</math> have transforms <math> \widehat f =0, \ \forall \ \xi < 0</math> (see [[Analytic signal]]; a simple example is <math> e^{i 2 \pi \xi_0 x}\ (\xi_0 > 0)</math>). Negative frequency is nevertheless necessary to characterize all other complex-valued <math> f(x),</math> found in [[signal processing]], [[partial differential equations]], [[radar]], [[nonlinear optics]], [[quantum mechanics]], and other fields. |

|||

For a real-valued <math> f(x),</math> {{EquationNote|Eq.1}} has the symmetry property <math>\widehat f(-\xi) = \widehat {f}^* (\xi)</math> (see {{slink||Conjugation}} below). This redundancy enables {{EquationNote|Eq.2}} to distinguish <math>f(x) = \cos(2 \pi \xi_0 x)</math> from <math>e^{i2 \pi \xi_0 x}.</math> But of course it cannot tell us the actual sign of <math>\xi_0,</math> because <math>\cos(2 \pi \xi_0 x)</math> and <math>\cos(2 \pi (-\xi_0) x)</math> are indistinguishable on the real number line. |
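The conjugate symmetry for real-valued functions, and its failure for a complex-valued one, can be illustrated numerically. This is a hedged sketch: the shifted Gaussian <code>real_signal</code>, its modulated version <code>complex_signal</code>, and the quadrature settings are assumptions chosen for the example.

```python
import cmath
import math

def real_signal(x):
    """A real-valued test function: a Gaussian centred at x = 0.4."""
    return math.exp(-math.pi * (x - 0.4) ** 2)

def complex_signal(x):
    """The same Gaussian multiplied by a complex sinusoid of frequency 1."""
    return real_signal(x) * cmath.exp(2j * math.pi * x)

def transform(func, xi, half_width=8.0, n=1600):
    """Trapezoidal approximation of the Eq.1 integral on [-half_width, half_width]."""
    h = 2 * half_width / n
    total = 0j
    for k in range(n + 1):
        x = -half_width + k * h
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * func(x) * cmath.exp(-2j * math.pi * xi * x)
    return total * h

xi = 0.5
# Real-valued input: the value at -xi is the complex conjugate of the value at xi.
real_gap = abs(transform(real_signal, -xi) - transform(real_signal, xi).conjugate())
# Complex-valued input: the same relation does not hold in general.
complex_gap = abs(transform(complex_signal, -xi) - transform(complex_signal, xi).conjugate())

print(f"real-valued signal:    |f_hat(-xi) - conj(f_hat(xi))| = {real_gap:.2e}")
print(f"complex-valued signal: |f_hat(-xi) - conj(f_hat(xi))| = {complex_gap:.3f}")
```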

|||

=== Fourier transform for periodic functions === |

|||

The Fourier transform of a periodic function cannot be defined using the integral formula directly: for the integral in {{EquationNote|Eq.1}} to be defined, the function must be [[Absolutely integrable function|absolutely integrable]], which a nonzero periodic function is not. Instead it is common to use [[Fourier series]]. It is possible to extend the definition to include periodic functions by viewing them as [[Distribution (mathematics)#Tempered distributions|tempered distributions]]. |

|||

This makes it possible to see a connection between the [[Fourier series]] and the Fourier transform for periodic functions that have a [[Convergence of Fourier series|convergent Fourier series]]. If <math>f(x)</math> is a [[periodic function]], with period <math>P</math>, that has a convergent Fourier series, then: |

|||

<math display="block"> |

|||

\widehat{f}(\xi) = \sum_{n=-\infty}^\infty c_n \cdot \delta \left(\xi - \tfrac{n}{P}\right), |

|||

</math> |

|||

where <math>c_n</math> are the Fourier series coefficients of <math>f</math>, and <math>\delta</math> is the [[Dirac delta function]]. In other words, the Fourier transform is a [[Dirac comb]] function whose ''teeth'' are multiplied by the Fourier series coefficients. |
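As a worked example (a standard case, added only as an illustration), take <math>f(x) = \cos\left(2\pi \tfrac{x}{P}\right).</math> Euler's formula gives <math>f(x) = \tfrac{1}{2} e^{i 2\pi \frac{1}{P} x} + \tfrac{1}{2} e^{-i 2\pi \frac{1}{P} x},</math> so the only non-zero Fourier series coefficients are <math>c_1 = c_{-1} = \tfrac{1}{2},</math> and the formula above reduces to two teeth:

<math display="block">\widehat{f}(\xi) = \tfrac{1}{2}\, \delta\left(\xi - \tfrac{1}{P}\right) + \tfrac{1}{2}\, \delta\left(\xi + \tfrac{1}{P}\right).</math>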

|||

=== Sampling the Fourier transform === |

|||

{{Broader|Poisson summation formula}} |

|||

The Fourier transform of an [[Absolutely integrable function|integrable]] function <math>f</math> can be sampled at regular intervals of arbitrary length <math>\tfrac{1}{P}.</math> These samples can be deduced from one cycle of a periodic function <math>f_P</math> which has [[Fourier series]] coefficients proportional to those samples by the [[Poisson summation formula]]: |

|||

<math display="block">f_P(x) \triangleq \sum_{n=-\infty}^{\infty} f(x+nP) = \frac{1}{P}\sum_{k=-\infty}^{\infty} \widehat f\left(\tfrac{k}{P}\right) e^{i2\pi \frac{k}{P} x}, \quad \forall k \in \mathbb{Z}</math> |

|||

The integrability of <math>f</math> ensures the periodic summation converges. Therefore, the samples <math>\widehat f\left(\tfrac{k}{P}\right)</math> can be determined by Fourier series analysis: |

|||

<math display="block">\widehat f\left(\tfrac{k}{P}\right) = \int_{P} f_P(x) \cdot e^{-i2\pi \frac{k}{P} x} \,dx.</math> |

|||

When <math>f(x)</math> has [[compact support]], <math>f_P(x)</math> has a finite number of terms within the interval of integration. When <math>f(x)</math> does not have compact support, numerical evaluation of <math>f_P(x)</math> requires an approximation, such as tapering <math>f(x)</math> or truncating the number of terms. |
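The sampling relation above can be checked numerically. The following is a minimal sketch, not a reference implementation: it assumes the Gaussian test function <math>f(x) = e^{-\pi x^2}</math> (whose transform under this article's convention is <math>e^{-\pi \xi^2}</math>), truncates the periodic summation to a finite number of terms, and uses a midpoint rule over one period. The function names and parameter values are illustrative choices.

```python
import cmath
import math


def f(x):
    # Gaussian test function; its transform in this convention is exp(-pi * xi**2).
    return math.exp(-math.pi * x * x)


def periodic_summation(x, period, terms=20):
    # f_P(x) = sum over n of f(x + n * P), truncated to |n| <= terms.
    return sum(f(x + n * period) for n in range(-terms, terms + 1))


def sample_via_fourier_series(k, period, steps=2000):
    # Midpoint-rule integral of f_P(x) * exp(-i 2 pi (k / P) x) over one period [0, P].
    dx = period / steps
    total = 0j
    for j in range(steps):
        x = (j + 0.5) * dx
        total += periodic_summation(x, period) * cmath.exp(-2j * math.pi * k * x / period)
    return total * dx


P = 2.0
for k in range(4):
    approx = sample_via_fourier_series(k, P)
    exact = math.exp(-math.pi * (k / P) ** 2)
    print(k, abs(approx - exact))  # differences are expected to be very small
```

Because this Gaussian decays quickly, truncating the summation is harmless here; for slowly decaying functions the number of retained terms would need more care, as the paragraph above notes.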

|||

== Units == |

|||

{{see also|Spectral density#Units}} |

|||

The frequency variable must have inverse units to the units of the original function's domain (typically named <math>t</math> or <math>x</math>). For example, if <math>t</math> is measured in seconds, <math>\xi</math> should be in cycles per second or [[hertz]]. If the scale of time is in units of <math>2\pi</math> seconds, then another Greek letter <math>\omega</math> is typically used instead to represent [[angular frequency]] (where <math>\omega=2\pi \xi</math>) in units of [[radian]]s per second. If using <math>x</math> for units of length, then <math>\xi</math> must be in inverse length, e.g., [[wavenumber]]s. That is to say, there are two versions of the real line: one which is the [[Range of a function|range]] of <math>t</math> and measured in units of <math>t,</math> and the other which is the range of <math>\xi</math> and measured in inverse units to the units of <math>t.</math> These two distinct versions of the real line cannot be equated with each other. Therefore, the Fourier transform goes from one space of functions to a different space of functions: functions which have a different domain of definition. |

|||

In general, <math>\xi</math> must always be taken to be a [[linear form]] on the space of its domain, which is to say that the second real line is the [[dual space]] of the first real line. See the article on [[linear algebra]] for a more formal explanation and for more details. This point of view becomes essential in generalizations of the Fourier transform to general [[symmetry group]]s, including the case of Fourier series. |

|||

That there is no one preferred way (often, one says "no canonical way") to compare the two versions of the real line which are involved in the Fourier transform—fixing the units on one line does not force the scale of the units on the other line—is the reason for the plethora of rival conventions on the definition of the Fourier transform. The various definitions resulting from different choices of units differ by various constants. |

|||

In other conventions, the Fourier transform has {{mvar|i}} in the exponent instead of {{math|−''i''}}, and vice versa for the inversion formula. This convention is common in modern physics<ref>{{harvnb|Arfken|1985}}</ref> and is the default for [https://www.wolframalpha.com Wolfram Alpha], and does not mean that the frequency has become negative, since there is no canonical definition of positivity for frequency of a complex wave. It simply means that <math>\hat f(\xi)</math> is the amplitude of the wave <math>e^{-i 2\pi \xi x}</math> instead of the wave <math>e^{i 2\pi \xi x}</math> (the former, with its minus sign, is often seen in the time dependence for [[Sinusoidal plane-wave solutions of the electromagnetic wave equation]], or in the [[Wave function#Time dependence|time dependence for quantum wave functions]]). Many of the identities involving the Fourier transform remain valid in those conventions, provided all terms that explicitly involve {{math|''i''}} have it replaced by {{math|−''i''}}. In [[Electrical engineering]] the letter {{math|''j''}} is typically used for the [[imaginary unit]] instead of {{math|''i''}} because {{math|''i''}} is used for current. |

|||

When using [[dimensionless units]], the constant factors might not even be written in the transform definition. For instance, in [[probability theory]], the characteristic function {{mvar|Φ}} of the probability density function {{mvar|f}} of a random variable {{mvar|X}} of continuous type is defined without a negative sign in the exponential, and since the units of {{mvar|x}} are ignored, there is no 2{{pi}} either: |

|||

<math display="block">\phi (\lambda) = \int_{-\infty}^\infty f(x) e^{i\lambda x} \,dx.</math> |

|||

(In probability theory, and in mathematical statistics, the use of the Fourier–Stieltjes transform is preferred, because so many random variables are not of continuous type, and do not possess a density function, and one must treat not functions but [[Distribution (mathematics)|distributions]], i.e., measures which possess "atoms".) |
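As an illustration of the characteristic-function convention (positive sign in the exponent, no factor of 2{{pi}}), the sketch below numerically integrates the standard normal density and compares the result with the known closed form <math>e^{-\lambda^2/2}</math>. The choice of density, the integration window and the step count are assumptions made only for this example.

```python
import cmath
import math


def normal_pdf(x):
    # Density of the standard normal distribution.
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def characteristic_function(pdf, lam, half_width=12.0, steps=6000):
    # phi(lambda) = integral of pdf(x) * exp(+i * lambda * x) dx,
    # approximated by the midpoint rule on [-half_width, half_width].
    dx = 2.0 * half_width / steps
    total = 0j
    for j in range(steps):
        x = -half_width + (j + 0.5) * dx
        total += pdf(x) * cmath.exp(1j * lam * x)
    return total * dx


for lam in (0.0, 0.5, 1.5):
    numeric = characteristic_function(normal_pdf, lam)
    closed_form = math.exp(-0.5 * lam * lam)
    print(lam, abs(numeric - closed_form))
```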

|||

From the higher point of view of [[character theory|group characters]], which is much more abstract, all these arbitrary choices disappear, as will be explained in the later section of this article, which treats the notion of the Fourier transform of a function on a [[locally compact abelian group|locally compact Abelian group]]. |

|||

== Properties == |

|||

Let <math>f(x)</math> and <math>h(x)</math> represent ''integrable functions'' [[Lebesgue-measurable]] on the real line satisfying: |

|||

<math display="block">\int_{-\infty}^\infty |f(x)| \, dx < \infty.</math> |

|||

We denote the Fourier transforms of these functions as <math>\hat f(\xi)</math> and <math>\hat h(\xi)</math> respectively. |

|||

=== Basic properties === |

||

The Fourier transform has the following basic properties:<ref name="Pinsky-2002">{{harvnb|Pinsky|2002}}</ref> |

|||

==== Linearity ==== |

|||

Given integrable functions ''f''(''x''), ''g''(''x''), and ''h''(''x'') denote their Fourier transforms by <math>\hat{f}(\xi)</math>, <math>\hat{g}(\xi)</math>, and <math>\hat{h}(\xi)</math> respectively. The Fourier transform has the following basic properties {{harv|Pinsky|2002}}. |

|||

<math display="block">a\ f(x) + b\ h(x)\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ a\ \widehat f(\xi) + b\ \widehat h(\xi);\quad \ a,b \in \mathbb C</math> |

|||

; Linearity |

|||

: For any [[complex number]]s ''a'' and ''b'', if ''h''(''x'') = ''aƒ''(''x'') + ''bg''(''x''), then  <math>\hat{h}(\xi)=a\cdot \hat{f}(\xi) + b\cdot\hat{g}(\xi).</math> |

|||

; Translation |

|||

: For any [[real number]] ''x''<sub>0</sub>, if ''h''(''x'') = ''ƒ''(''x'' − ''x''<sub>0</sub>), then  <math>\hat{h}(\xi)= e^{-2\pi i x_0\xi }\hat{f}(\xi).</math> |

|||

; Modulation |

|||

: For any [[real number]] ''ξ''<sub>0</sub>, if ''h''(''x'') = ''e''<sup>2''πixξ''<sub><small>0</small></sub></sup>''ƒ''(''x''), then  <math>\hat{h}(\xi) = \hat{f}(\xi-\xi_{0})</math>. |

|||

; Scaling |

|||

: For a non-zero [[real number]] ''a'', if ''h''(''x'') = ''ƒ''(''ax''), then  <math>\hat{h}(\xi)=\frac{1}{|a|}\hat{f}\left(\frac{\xi}{a}\right)</math>. The case ''a'' = −1 leads to the ''time-reversal'' property, which states: if ''h''(''x'') = ''ƒ''(−''x''), then  <math>\hat{h}(\xi)=\hat{f}(-\xi)</math>. |

|||

; Conjugation |

|||

: If <math>h(x)=\overline{f(x)}</math>, then  <math>\hat{h}(\xi) = \overline{\hat{f}(-\xi)}.</math> |

|||

:In particular, if ''ƒ'' is real, then one has the ''reality condition''  <math>\hat{f}(-\xi)=\overline{\hat{f}(\xi)}.</math> |

|||

; Convolution |

|||

: If <math>h(x)=\left(f*g\right)(x)</math>, then  <math> \hat{h}(\xi)=\hat{f}(\xi)\cdot \hat{g}(\xi).</math> |

|||

==== Time shifting ==== |

|||

=== Uniform continuity and the Riemann–Lebesgue lemma=== |

|||

[[File:Rectangular function.svg|thumb|The [[rectangular function]] is Lebesgue integrable.]] |

|||

[[File:Sinc function (normalized).svg|thumb|The [[sinc function]], the Fourier transform of the rectangular function, is bounded and continuous, but not Lebesgue integrable.]] |

|||

The Fourier transforms of integrable functions have additional properties that do not hold in general. The Fourier transforms of integrable functions ''ƒ'' are [[uniformly continuous]] and <math>\|\hat{f}\|_{\infty}\leq \|f\|_1</math> {{harv|Katznelson|1976}}. The Fourier transforms of integrable functions also satisfy the ''[[Riemann–Lebesgue lemma]]'', which states that {{harv|Stein|Weiss|1971}} |

|||

<math display="block">f(x-x_0)\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ e^{-i 2\pi x_0 \xi}\ \widehat f(\xi);\quad \ x_0 \in \mathbb R</math> |

|||

:<math>\hat{f}(\xi)\to 0\text{ as }|\xi|\to \infty.\,</math> |

|||

==== Frequency shifting ==== |

|||

The Fourier transform <math>\hat f</math> of an integrable function ''ƒ'' is bounded and continuous, but need not be integrable – for example, the Fourier transform of the [[rectangular function]], which is a [[step function]] (and hence integrable) is the [[sinc function]], which is not Lebesgue integrable, though it does have an improper integral: one has an analog to the [[alternating harmonic series]], which is a convergent sum but not [[absolutely convergent]]. |

|||

<math display="block">e^{i 2\pi \xi_0 x} f(x)\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ \widehat f(\xi - \xi_0);\quad \ \xi_0 \in \mathbb R</math> |

|||

It is not possible in general to write the ''inverse transform'' as a Lebesgue integral. However, when both ''ƒ'' and <math>\hat f</math> are integrable, the following inverse equality holds true for almost every ''x'': |

|||

==== Time scaling ==== |

|||

:<math>f(x) = \int_{-\infty}^\infty \hat f(\xi) e^{2 i \pi x \xi} \, d\xi.</math> |

|||

<math display="block">f(ax)\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ \frac{1}{|a|}\widehat{f}\left(\frac{\xi}{a}\right);\quad \ a \ne 0 </math> |

|||

Almost everywhere, ''ƒ'' is equal to the continuous function given by the right-hand side. If ''ƒ'' is given as a continuous function on the line, then equality holds for every ''x''. |

|||

The case <math>a=-1</math> leads to the ''time-reversal property'': |

|||

<math display="block">f(-x)\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ \widehat f (-\xi)</math> |
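The time-shifting and time-scaling rules can be spot-checked numerically. This is a rough sketch under stated assumptions: the test function is the Gaussian <math>e^{-\pi x^2}</math> with known transform <math>e^{-\pi \xi^2}</math>, the integral is approximated by a midpoint rule on a finite window, and the helper name <code>fourier_transform</code> and the values of <code>x0</code>, <code>a</code> and <code>xi</code> are arbitrary illustrative choices.

```python
import cmath
import math


def fourier_transform(func, xi, half_width=10.0, steps=4000):
    # Midpoint-rule approximation of the integral of func(x) * exp(-i 2 pi xi x) dx.
    dx = 2.0 * half_width / steps
    total = 0j
    for j in range(steps):
        x = -half_width + (j + 0.5) * dx
        total += func(x) * cmath.exp(-2j * math.pi * xi * x)
    return total * dx


def f(x):
    return math.exp(-math.pi * x * x)


def f_hat(xi):
    # Closed-form transform of f in this article's convention.
    return math.exp(-math.pi * xi * xi)


x0, a, xi = 0.75, -2.5, 0.6

# Time shifting: f(x - x0)  <->  exp(-i 2 pi x0 xi) * f_hat(xi)
shifted = fourier_transform(lambda x: f(x - x0), xi)
shift_rule = cmath.exp(-2j * math.pi * x0 * xi) * f_hat(xi)

# Time scaling: f(a x)  <->  f_hat(xi / a) / |a|
scaled = fourier_transform(lambda x: f(a * x), xi)
scale_rule = f_hat(xi / a) / abs(a)

print(abs(shifted - shift_rule), abs(scaled - scale_rule))
```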

|||

{{Annotated image |

|||

A consequence of the preceding result is that the Fourier transform is injective on ''L''<sup>1</sup>('''R'''). |

|||

| caption=The transform of an even-symmetric real-valued function <math>(f(t) = f_{RE})</math> is also an even-symmetric real-valued function <math>(\hat f_{RE}).</math> The time-shift, <math>(g(t) = g_{RE} + g_{RO}),</math> creates an imaginary component, <math>i\cdot \hat g_{IO}.</math> (See {{slink||Symmetry}}.) |

|||

| image=Fourier_unit_pulse.svg |

|||

| image-width = 300 |

|||

| annotations = |

|||

{{Annotation|20|40|<math>\scriptstyle f(t)</math>}} |

|||

{{Annotation|170|40|<math>\scriptstyle \widehat{f}(\omega)</math>}} |

|||

{{Annotation|20|140|<math>\scriptstyle g(t)</math>}} |

|||

{{Annotation|170|140|<math>\scriptstyle \widehat{g}(\omega)</math>}} |

|||

{{Annotation|130|80|<math>\scriptstyle t</math>}} |

|||

{{Annotation|280|85|<math>\scriptstyle \omega</math>}} |

|||

{{Annotation|130|192|<math>\scriptstyle t</math>}} |

|||

{{Annotation|280|180|<math>\scriptstyle \omega</math>}} |

|||

}} |

|||

==== Symmetry ==== |

|||

===The Plancherel theorem and Parseval's theorem=== |

|||

When the real and imaginary parts of a complex function are decomposed into their [[Even and odd functions#Even–odd decomposition|even and odd parts]], there are four components, denoted below by the subscripts RE, RO, IE, and IO. And there is a one-to-one mapping between the four components of a complex time function and the four components of its complex frequency transform:<ref name="ProakisManolakis1996">{{cite book|last1=Proakis|first1=John G. |last2=Manolakis|first2=Dimitris G.|author2-link= Dimitris Manolakis |title=Digital Signal Processing: Principles, Algorithms, and Applications|url=https://archive.org/details/digitalsignalpro00proa|url-access=registration|year=1996|publisher=Prentice Hall|isbn=978-0-13-373762-2|edition=3rd|page=[https://archive.org/details/digitalsignalpro00proa/page/291 291]}}</ref> |

|||

<math> |

|||

Let ''f''(''x'') and ''g''(''x'') be integrable, and let <math>\hat{f}(\xi)</math> and <math>\hat{g}(\xi)</math> be their Fourier transforms. If ''f''(''x'') and ''g''(''x'') are also square-integrable, then we have [[Parseval's theorem]] {{harv|Rudin|1987|loc=p. 187}}''':''' |

|||

\begin{array}{rlcccccccc} |

|||

\mathsf{Time\ domain} & f & = & f_{_{\text{RE}}} & + & f_{_{\text{RO}}} & + & i\ f_{_{\text{IE}}} & + & \underbrace{i\ f_{_{\text{IO}}}} \\ |

|||

&\Bigg\Updownarrow\mathcal{F} & &\Bigg\Updownarrow\mathcal{F} & &\ \ \Bigg\Updownarrow\mathcal{F} & &\ \ \Bigg\Updownarrow\mathcal{F} & &\ \ \Bigg\Updownarrow\mathcal{F}\\ |

|||

\mathsf{Frequency\ domain} & \widehat f & = & \widehat f_{_\text{RE}} & + & \overbrace{i\ \widehat f_{_\text{IO}}\,} & + & i\ \widehat f_{_\text{IE}} & + & \widehat f_{_\text{RO}} |

|||

\end{array} |

|||

</math> |

|||

From this, various relationships are apparent, for example''':''' |

|||

: <math>\int_{-\infty}^{\infty} f(x) \overline{g(x)} \, dx = \int_{-\infty}^\infty \hat{f}(\xi) \overline{\hat{g}(\xi)} \, d\xi,</math> |

|||

*The transform of a real-valued function <math>(f_{_{RE}}+f_{_{RO}})</math> is the ''[[Even and odd functions#Complex-valued functions|conjugate symmetric]]'' function <math>\hat f_{RE}+i\ \hat f_{IO}.</math> Conversely, a ''conjugate symmetric'' transform implies a real-valued time-domain. |

|||

*The transform of an imaginary-valued function <math>(i\ f_{_{IE}}+i\ f_{_{IO}})</math> is the ''[[Even and odd functions#Complex-valued functions|conjugate antisymmetric]]'' function <math>\hat f_{RO}+i\ \hat f_{IE},</math> and the converse is true. |

|||

*The transform of a ''[[Even and odd functions#Complex-valued functions|conjugate symmetric]]'' function <math>(f_{_{RE}}+i\ f_{_{IO}})</math> is the real-valued function <math>\hat f_{RE}+\hat f_{RO},</math> and the converse is true. |

|||

*The transform of a ''[[Even and odd functions#Complex-valued functions|conjugate antisymmetric]]'' function <math>(f_{_{RO}}+i\ f_{_{IE}})</math> is the imaginary-valued function <math>i\ \hat f_{IE}+i\hat f_{IO},</math> and the converse is true. |

|||

==== Conjugation ==== |

|||

where the bar denotes [[complex conjugation]]. |

|||

<math display="block">\bigl(f(x)\bigr)^*\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ \left(\widehat{f}(-\xi)\right)^*</math> |

|||

(Note: the ∗ denotes [[Complex conjugate|complex conjugation]].) |

|||

In particular, if <math>f</math> is '''real''', then <math>\widehat f</math> is [[Even and odd functions#Complex-valued functions|even symmetric]] (aka [[Hermitian function]]): |

|||

The [[Plancherel theorem]], which is equivalent to [[Parseval's theorem]], states {{harv|Rudin|1987|loc=p. 186}}''':''' |

|||

<math display="block">\widehat{f}(-\xi)=\bigl(\widehat f(\xi)\bigr)^*.</math> |

|||

And if <math>f</math> is purely imaginary, then <math>\widehat f</math> is [[Even and odd functions#Complex-valued functions|odd symmetric]]: |

|||

:<math>\int_{-\infty}^\infty \left| f(x) \right|^2\, dx = \int_{-\infty}^\infty \left| \hat{f}(\xi) \right|^2\, d\xi. </math> |

|||

<math display="block">\widehat f(-\xi) = -(\widehat f(\xi))^*.</math> |

|||

==== Real and imaginary parts ==== |

|||

The Plancherel theorem makes it possible to define the Fourier transform for functions in ''L''<sup>2</sup>('''R'''), as described in [[Fourier transform#Generalizations|Generalizations]] below. The Plancherel theorem has the interpretation in the sciences that the Fourier transform preserves the energy of the original quantity. It should be noted that depending on the author either of these theorems might be referred to as the Plancherel theorem or as Parseval's theorem. |

|||

<math display="block">\operatorname{Re}\{f(x)\}\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ |

|||

\tfrac{1}{2} \left( \widehat f(\xi) + \bigl(\widehat f (-\xi) \bigr)^* \right)</math> |

|||

<math display="block">\operatorname{Im}\{f(x)\}\ \ \stackrel{\mathcal{F}}{\Longleftrightarrow}\ \ |

|||

\tfrac{1}{2i} \left( \widehat f(\xi) - \bigl(\widehat f (-\xi) \bigr)^* \right)</math> |

|||

==== Zero frequency component ==== |

|||

See [[Pontryagin duality]] for a general formulation of this concept in the context of locally compact abelian groups. |

|||

Substituting <math>\xi = 0</math> in the definition, we obtain: |

|||

<math display="block">\widehat{f}(0) = \int_{-\infty}^{\infty} f(x)\,dx.</math> |

|||

The integral of <math>f</math> over its domain is known as the average value or [[DC bias]] of the function. |

|||

===Poisson summation formula=== |

|||

{{Main|Poisson summation formula}} |

|||

=== Uniform continuity and the Riemann–Lebesgue lemma === |

|||

The [[Poisson summation formula]] provides a link between the study of Fourier transforms and Fourier Series. Given an integrable function ''ƒ'' we can consider the periodization of ''ƒ'' given by: |

|||

[[File:Rectangular function.svg|thumb|The [[rectangular function]] is [[Lebesgue integrable]].]] |

|||

[[File:Sinc function (normalized).svg|thumb|The [[sinc function]], which is the Fourier transform of the rectangular function, is bounded and continuous, but not Lebesgue integrable.]] |

|||

The Fourier transform may be defined in some cases for non-integrable functions, but the Fourier transforms of integrable functions have several strong properties. |

|||

The Fourier transform <math>\hat{f}</math> of any integrable function <math>f</math> is [[uniformly continuous]] and<ref name="Katznelson-1976">{{harvnb|Katznelson|1976}}</ref> |

|||

:<math>\bar f(x)=\sum_{k\in\mathbb{Z}} f(x+k),</math> |

|||

<math display="block">\left\|\hat{f}\right\|_\infty \leq \left\|f\right\|_1</math> |

|||

By the ''[[Riemann–Lebesgue lemma]]'',<ref name="Stein-Weiss-1971">{{harvnb|Stein|Weiss|1971}}</ref> |

|||

where the summation is taken over the set of all [[integer]]s ''k''. The Poisson summation formula relates the Fourier series of <math>\bar f</math> to the Fourier transform of ''ƒ''. Specifically it states that the Fourier series of <math>\bar f</math> is given by: |

|||

<math display="block">\hat{f}(\xi) \to 0\text{ as }|\xi| \to \infty.</math> |

|||

However, <math>\hat{f}</math> need not be integrable. For example, the Fourier transform of the [[rectangular function]], which is integrable, is the [[sinc function]], which is not [[Lebesgue integrable]], because its [[improper integral]]s behave analogously to the [[alternating harmonic series]], in converging to a sum without being [[absolutely convergent]]. |

|||

:<math>\bar f(x) \sim \sum_{k\in\mathbb{Z}} \hat{f}(k)e^{2\pi i k x}.</math> |

|||

It is not generally possible to write the ''inverse transform'' as a [[Lebesgue integral]]. However, when both <math>f</math> and <math>\hat{f}</math> are integrable, the inverse equality |

|||

===Convolution theorem === |

|||

<math display="block">f(x) = \int_{-\infty}^\infty \hat f(\xi) e^{i 2\pi x \xi} \, d\xi</math> holds for almost every {{mvar|x}}. As a result, the Fourier transform is [[injective]] on {{math|[[Lp space|''L''<sup>1</sup>('''R''')]]}}. |

|||

{{Main|Convolution theorem}} |

|||

=== Plancherel theorem and Parseval's theorem === |

|||

The Fourier transform translates between [[convolution]] and multiplication of functions. If ''ƒ''(''x'') and ''g''(''x'') are integrable functions with Fourier transforms <math>\hat{f}(\xi)</math> and <math>\hat{g}(\xi)</math> respectively, then the Fourier transform of the convolution is given by the product of the Fourier transforms <math>\hat{f}(\xi)</math> and <math>\hat{g}(\xi)</math> (under other conventions for the definition of the Fourier transform a constant factor may appear). |

|||

{{main|Plancherel theorem|Parseval's theorem}} |

|||

Let {{math|''f''(''x'')}} and {{math|''g''(''x'')}} be integrable, and let {{math|''f̂''(''ξ'')}} and {{math|''ĝ''(''ξ'')}} be their Fourier transforms. If {{math|''f''(''x'')}} and {{math|''g''(''x'')}} are also [[square-integrable]], then the Parseval formula follows:<ref>{{harvnb|Rudin|1987|p=187}}</ref> |

|||

<math display="block">\langle f, g\rangle_{L^{2}} = \int_{-\infty}^{\infty} f(x) \overline{g(x)} \,dx = \int_{-\infty}^\infty \hat{f}(\xi) \overline{\hat{g}(\xi)} \,d\xi,</math> |

|||

where the bar denotes [[complex conjugation]]. |

|||

The [[Plancherel theorem]], which follows from the above, states that<ref>{{harvnb|Rudin|1987|p=186}}</ref> |

|||

This means that if''':''' |

|||

<math display="block">\|f\|^2_{L^{2}} = \int_{-\infty}^\infty \left| f(x) \right|^2\,dx = \int_{-\infty}^\infty \left| \hat{f}(\xi) \right|^2\,d\xi. </math> |

|||

Plancherel's theorem makes it possible to extend the Fourier transform, by a continuity argument, to a [[unitary operator]] on {{math|''L''<sup>2</sup>('''R''')}}. On {{math|''L''<sup>1</sup>('''R''') ∩ ''L''<sup>2</sup>('''R''')}}, this extension agrees with the original Fourier transform defined on {{math|''L''<sup>1</sup>('''R''')}}, thus enlarging the domain of the Fourier transform to {{math|''L''<sup>1</sup>('''R''') + ''L''<sup>2</sup>('''R''')}} (and consequently to {{math|{{math|''L''{{i sup|''p''}}}}('''R''')}} for {{math|1 ≤ ''p'' ≤ 2}}). Plancherel's theorem has the interpretation in the sciences that the Fourier transform preserves the [[energy]] of the original quantity. The terminology of these formulas is not quite standardised. Parseval's theorem was proved only for Fourier series, and was first proved by Lyapunov. But Parseval's formula makes sense for the Fourier transform as well, and so even though in the context of the Fourier transform it was proved by Plancherel, it is still often referred to as Parseval's formula, or Parseval's relation, or even Parseval's theorem. |
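The Parseval formula can be illustrated numerically by computing the inner product once in the time domain and once in the frequency domain. The sketch below is only an approximation under assumptions: two Gaussian-type test functions chosen for fast decay, a midpoint rule on a finite window for every integral, and helper names (<code>fourier_transform</code>, <code>inner_product</code>) that are not taken from any library.

```python
import cmath
import math


def fourier_transform(func, xi, half_width=6.0, steps=400):
    # Midpoint-rule approximation of the integral of func(x) * exp(-i 2 pi xi x) dx.
    dx = 2.0 * half_width / steps
    total = 0j
    for j in range(steps):
        x = -half_width + (j + 0.5) * dx
        total += func(x) * cmath.exp(-2j * math.pi * xi * x)
    return total * dx


def inner_product(u, v, half_width=6.0, steps=400):
    # <u, v> = integral of u(t) * conj(v(t)) dt, midpoint rule.
    dt = 2.0 * half_width / steps
    total = 0j
    for j in range(steps):
        t = -half_width + (j + 0.5) * dt
        total += u(t) * complex(v(t)).conjugate()
    return total * dt


def f(x):
    return math.exp(-math.pi * x * x)


def g(x):
    return math.exp(-2.0 * math.pi * (x - 0.3) ** 2)


time_side = inner_product(f, g)
freq_side = inner_product(
    lambda xi: fourier_transform(f, xi),
    lambda xi: fourier_transform(g, xi),
)
print(abs(time_side - freq_side))  # expected to be close to zero
```

Setting both arguments to the same function gives the corresponding check of the Plancherel identity for the squared norm.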

|||

:<math>h(x) = (f*g)(x) = \int_{-\infty}^\infty f(y)g(x - y)\,dy,</math> |

|||

See [[Pontryagin duality]] for a general formulation of this concept in the context of locally compact abelian groups. |

|||

where ∗ denotes the convolution operation, then''':''' |

|||

=== Convolution theorem === |

|||

:<math>\hat{h}(\xi) = \hat{f}(\xi)\cdot \hat{g}(\xi).</math> |

|||

{{Main|Convolution theorem}} |

|||

The Fourier transform translates between [[convolution]] and multiplication of functions. If {{math|''f''(''x'')}} and {{math|''g''(''x'')}} are integrable functions with Fourier transforms {{math|''f̂''(''ξ'')}} and {{math|''ĝ''(''ξ'')}} respectively, then the Fourier transform of the convolution is given by the product of the Fourier transforms {{math|''f̂''(''ξ'')}} and {{math|''ĝ''(''ξ'')}} (under other conventions for the definition of the Fourier transform a constant factor may appear). |

|||

In [[LTI system theory|linear time invariant (LTI) system theory]], it is common to interpret ''g''(''x'') as the [[impulse response]] of an LTI system with input ''ƒ''(''x'') and output ''h''(''x''), since substituting the [[Dirac delta function|unit impulse]] for ''ƒ''(''x'') yields ''h''(''x'') = ''g''(''x''). In this case,  <math>\hat{g}(\xi)</math>  represents the [[frequency response]] of the system. |

|||

This means that if: |

|||

Conversely, if ''ƒ''(''x'') can be decomposed as the product of two square integrable functions ''p''(''x'') and ''q''(''x''), then the Fourier transform of ''ƒ''(''x'') is given by the convolution of the respective Fourier transforms <math>\hat{p}(\xi)</math> and <math>\hat{q}(\xi)</math>. |

|||

<math display="block">h(x) = (f*g)(x) = \int_{-\infty}^\infty f(y)g(x - y)\,dy,</math> |

|||

where {{math|∗}} denotes the convolution operation, then: |

|||

<math display="block">\hat{h}(\xi) = \hat{f}(\xi)\, \hat{g}(\xi).</math> |

|||

In [[LTI system theory|linear time invariant (LTI) system theory]], it is common to interpret {{math|''g''(''x'')}} as the [[impulse response]] of an LTI system with input {{math|''f''(''x'')}} and output {{math|''h''(''x'')}}, since substituting the [[Dirac delta function|unit impulse]] for {{math|''f''(''x'')}} yields {{math|1=''h''(''x'') = ''g''(''x'')}}. In this case, {{math|''ĝ''(''ξ'')}} represents the [[frequency response]] of the system. |

|||

Conversely, if {{math|''f''(''x'')}} can be decomposed as the product of two square integrable functions {{math|''p''(''x'')}} and {{math|''q''(''x'')}}, then the Fourier transform of {{math|''f''(''x'')}} is given by the convolution of the respective Fourier transforms {{math|''p̂''(''ξ'')}} and {{math|''q̂''(''ξ'')}}. |
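A numerical spot check of the convolution theorem is sketched below. It assumes two rapidly decaying Gaussian test functions, evaluates the convolution integral by a midpoint rule on a fixed grid, and then compares the transform of the result with the product of the individual transforms at one frequency. Grid size, window and function names are illustrative assumptions.

```python
import cmath
import math

HALF_WIDTH = 6.0
STEPS = 400
DX = 2.0 * HALF_WIDTH / STEPS
GRID = [-HALF_WIDTH + (j + 0.5) * DX for j in range(STEPS)]


def f(x):
    return math.exp(-math.pi * x * x)


def g(x):
    return math.exp(-3.0 * math.pi * (x - 0.5) ** 2)


def convolve(x):
    # h(x) = integral of f(y) * g(x - y) dy, midpoint rule over GRID.
    return sum(f(y) * g(x - y) for y in GRID) * DX


def transform_on_grid(values, xi):
    # Midpoint-rule Fourier transform of samples given on GRID.
    return sum(v * cmath.exp(-2j * math.pi * xi * x) for v, x in zip(values, GRID)) * DX


h_values = [convolve(x) for x in GRID]
f_values = [f(x) for x in GRID]
g_values = [g(x) for x in GRID]

xi = 0.7
lhs = transform_on_grid(h_values, xi)
rhs = transform_on_grid(f_values, xi) * transform_on_grid(g_values, xi)
print(abs(lhs - rhs))  # expected to be close to zero
```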

|||

=== Cross-correlation theorem === |

||

{{Main|Cross-correlation}} |

{{Main|Cross-correlation|Wiener–Khinchin_theorem}} |

||

In an analogous manner, it can be shown that if ''h''(''x'') is the [[cross-correlation]] of '' |

In an analogous manner, it can be shown that if {{math|''h''(''x'')}} is the [[cross-correlation]] of {{math|''f''(''x'')}} and {{math|''g''(''x'')}}: |

||

<math display="block">h(x) = (f \star g)(x) = \int_{-\infty}^\infty \overline{f(y)}g(x + y)\,dy</math> |

|||

then the Fourier transform of {{math|''h''(''x'')}} is: |

|||

<math display="block">\hat{h}(\xi) = \overline{\hat{f}(\xi)} \, \hat{g}(\xi).</math> |

|||

As a special case, the [[autocorrelation]] of function {{math|''f''(''x'')}} is: |

|||

:<math>h(x)=(f\star g)(x) = \int_{-\infty}^\infty \overline{f(y)}\,g(x+y)\,dy</math> |

|||

<math display="block">h(x) = (f \star f)(x) = \int_{-\infty}^\infty \overline{f(y)}f(x + y)\,dy</math> |

|||

for which |

|||

<math display="block">\hat{h}(\xi) = \overline{\hat{f}(\xi)}\hat{f}(\xi) = \left|\hat{f}(\xi)\right|^2.</math> |
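The autocorrelation identity can be checked in the same spirit. The sketch below assumes a complex-valued test function (a shifted, modulated Gaussian, chosen so that the complex conjugate matters), approximates the autocorrelation integral by a midpoint rule on a grid, and compares its transform with the squared magnitude of the transform of the function. All names and parameter values are illustrative.

```python
import cmath
import math

HALF_WIDTH = 6.0
STEPS = 400
DX = 2.0 * HALF_WIDTH / STEPS
GRID = [-HALF_WIDTH + (j + 0.5) * DX for j in range(STEPS)]


def f(x):
    # Shifted Gaussian with a complex modulation.
    return math.exp(-math.pi * (x - 0.4) ** 2) * cmath.exp(2j * math.pi * 0.5 * x)


def autocorrelation(x):
    # h(x) = integral of conj(f(y)) * f(x + y) dy, midpoint rule over GRID.
    return sum(f(y).conjugate() * f(x + y) for y in GRID) * DX


def transform_on_grid(values, xi):
    # Midpoint-rule Fourier transform of samples given on GRID.
    return sum(v * cmath.exp(-2j * math.pi * xi * x) for v, x in zip(values, GRID)) * DX


h_values = [autocorrelation(x) for x in GRID]
f_values = [f(x) for x in GRID]

xi = 0.3
lhs = transform_on_grid(h_values, xi)
rhs = abs(transform_on_grid(f_values, xi)) ** 2
print(abs(lhs - rhs))  # expected to be close to zero
```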

|||

=== Differentiation === |

|||

then the Fourier transform of ''h''(''x'') is: |

|||

Suppose {{math|''f''(''x'')}} is an absolutely continuous differentiable function, and both {{math|''f''}} and its derivative {{math|''f′''}} are integrable. Then the Fourier transform of the derivative is given by |

|||

<math display="block">\widehat{f'\,}(\xi) = \mathcal{F}\left\{ \frac{d}{dx} f(x)\right\} = i 2\pi \xi\hat{f}(\xi).</math> |

|||

More generally, the Fourier transformation of the {{mvar|n}}th derivative {{math|''f''{{isup|(''n'')}}}} is given by |

|||

<math display="block">\widehat{f^{(n)}}(\xi) = \mathcal{F}\left\{ \frac{d^n}{dx^n} f(x) \right\} = (i 2\pi \xi)^n\hat{f}(\xi).</math> |

|||

Analogously, <math>\mathcal{F}^{-1}\left\{ \frac{d^n}{d\xi^n} \hat{f}(\xi)\right\} = (-i 2\pi x)^n f(x)</math>, so <math>\mathcal{F}\left\{ x^n f(x)\right\} = \left(\frac{i}{2\pi}\right)^n \frac{d^n}{d\xi^n} \hat{f}(\xi).</math> |

|||

By applying the Fourier transform and using these formulas, some [[ordinary differential equation]]s can be transformed into algebraic equations, which are much easier to solve. These formulas also give rise to the rule of thumb "{{math|''f''(''x'')}} is smooth [[if and only if]] {{math|''f̂''(''ξ'')}} quickly falls to 0 for {{math|{{abs|''ξ''}} → ∞}}." By using the analogous rules for the inverse Fourier transform, one can also say "{{math|''f''(''x'')}} quickly falls to 0 for {{math|{{abs|''x''}} → ∞}} if and only if {{math|''f̂''(''ξ'')}} is smooth." |
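The first-derivative rule can be verified numerically for a concrete function. This sketch assumes the Gaussian <math>f(x) = e^{-\pi x^2}</math>, whose derivative and transform are known in closed form, and approximates the transform of the derivative with a midpoint rule; the helper name and parameters are illustrative.

```python
import cmath
import math


def fourier_transform(func, xi, half_width=8.0, steps=4000):
    # Midpoint-rule approximation of the integral of func(x) * exp(-i 2 pi xi x) dx.
    dx = 2.0 * half_width / steps
    total = 0j
    for j in range(steps):
        x = -half_width + (j + 0.5) * dx
        total += func(x) * cmath.exp(-2j * math.pi * xi * x)
    return total * dx


def f_prime(x):
    # Derivative of f(x) = exp(-pi * x**2).
    return -2.0 * math.pi * x * math.exp(-math.pi * x * x)


xi = 0.8
numeric = fourier_transform(f_prime, xi)
# Differentiation rule: transform of f' equals (i 2 pi xi) * f_hat(xi),
# with f_hat(xi) = exp(-pi * xi**2) for this Gaussian.
predicted = 2j * math.pi * xi * math.exp(-math.pi * xi * xi)
print(abs(numeric - predicted))
```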

|||

===Eigenfunctions=== |

|||

One important choice of an orthonormal basis for ''L''<sup>2</sup>('''R''') is given by the Hermite functions |

|||

=== Eigenfunctions === |

|||

: <math>{\psi}_n(x) = \frac{2^{1/4}}{\sqrt{n!}} \, e^{-\pi x^2}H_n(2x\sqrt{\pi}),</math> |

|||

{{see also|Mehler kernel|Hermite polynomials#Hermite functions as eigenfunctions of the Fourier transform}} |

|||

The Fourier transform is a linear transform which has eigenfunctions obeying <math>\mathcal{F}[\psi] = \lambda \psi,</math> with <math> \lambda \in \mathbb{C}.</math> |

|||

A set of eigenfunctions is found by noting that the homogeneous differential equation |

|||

where <math>H_n(x)</math> are the "probabilist's" [[Hermite polynomial]]s, defined by ''H<sub>n</sub>''(''x'') = (−1)<sup>''n''</sup>exp(''x''<sup>2</sup>/2) D<sup>''n''</sup> exp(−''x''<sup>2</sup>/2). Under this convention for the Fourier transform, we have that |

|||

<math display="block">\left[ U\left( \frac{1}{2\pi}\frac{d}{dx} \right) + U( x ) \right] \psi(x) = 0</math> |

|||

leads to eigenfunctions <math>\psi(x)</math> of the Fourier transform <math>\mathcal{F}</math> as long as the form of the equation remains invariant under Fourier transform.<ref group=note>The operator <math>U\left( \frac{1}{2\pi}\frac{d}{dx} \right)</math> is defined by replacing <math>x</math> by <math>\frac{1}{2\pi}\frac{d}{dx}</math> in the [[Taylor series|Taylor expansion]] of <math>U(x).</math></ref> In other words, every solution <math>\psi(x)</math> and its Fourier transform <math>\hat\psi(\xi)</math> obey the same equation. Assuming [[Ordinary differential equation#Existence and uniqueness of solutions|uniqueness]] of the solutions, every solution <math>\psi(x)</math> must therefore be an eigenfunction of the Fourier transform. The form of the equation remains unchanged under Fourier transform if <math>U(x)</math> can be expanded in a power series in which for all terms the same factor of either one of <math>\pm 1, \pm i</math> arises from the factors <math>i^n</math> introduced by the [[#Differentiation|differentiation]] rules upon Fourier transforming the homogeneous differential equation because this factor may then be cancelled. The simplest allowable <math>U(x)=x</math> leads to the [[Normal distribution#Fourier transform and characteristic function|standard normal distribution]].<ref>{{harvnb|Folland|1992|p=216}}</ref> |

|||

More generally, a set of eigenfunctions is also found by noting that the [[#Differentiation|differentiation]] rules imply that the [[ordinary differential equation]] |

|||

: <math> \hat\psi_n(\xi) = (-i)^n {\psi}_n(\xi) .</math> |

|||

<math display="block">\left[ W\left( \frac{i}{2\pi}\frac{d}{dx} \right) + W(x) \right] \psi(x) = C \psi(x)</math> |

|||

with <math>C</math> constant and <math>W(x)</math> being a non-constant even function remains invariant in form when applying the Fourier transform <math>\mathcal{F}</math> to both sides of the equation. The simplest example is provided by <math>W(x) = x^2</math> which is equivalent to considering the Schrödinger equation for the [[Quantum harmonic oscillator#Natural length and energy scales|quantum harmonic oscillator]].<ref>{{harvnb|Wolf|1979|p=307ff}}</ref> The corresponding solutions provide an important choice of an orthonormal basis for {{math|[[Square-integrable function|''L''<sup>2</sup>('''R''')]]}} and are given by the "physicist's" [[Hermite polynomials#Hermite functions as eigenfunctions of the Fourier transform|Hermite functions]]. Equivalently one may use |

|||

<math display="block">\psi_n(x) = \frac{\sqrt[4]{2}}{\sqrt{n!}} e^{-\pi x^2}\mathrm{He}_n\left(2x\sqrt{\pi}\right),</math> |

|||

where {{math|He<sub>''n''</sub>(''x'')}} are the "probabilist's" [[Hermite polynomial]]s, defined as |

|||

<math display="block">\mathrm{He}_n(x) = (-1)^n e^{\frac{1}{2}x^2}\left(\frac{d}{dx}\right)^n e^{-\frac{1}{2}x^2}.</math> |

|||

Under this convention for the Fourier transform, we have that |

|||

In other words, the Hermite functions form a complete [[orthonormal]] system of [[eigenfunctions]] for the Fourier transform on ''L''<sup>2</sup>('''R''') {{harv|Pinsky|2002}}. However, this choice of eigenfunctions is not unique. There are only four different [[eigenvalue]]s of the Fourier transform (±1 and ±''i'') and any linear combination of eigenfunctions with the same eigenvalue gives another eigenfunction. As a consequence of this, it is possible to decompose ''L''<sup>2</sup>('''R''') as a direct sum of four spaces ''H''<sub>0</sub>, ''H''<sub>1</sub>, ''H''<sub>2</sub>, and ''H''<sub>3</sub> where the Fourier transform acts on ''H''<sub>''k''</sub> simply by multiplication by ''i''<sup>''k''</sup>. This approach to define the Fourier transform is due to N. Wiener {{harv|Duoandikoetxea|2001}}. The choice of Hermite functions is convenient because they are exponentially localized in both frequency and time domains, and thus give rise to the [[fractional Fourier transform]] used in time-frequency analysis {{Citation needed|date=October 2008}}. |

|||

<math display="block">\hat\psi_n(\xi) = (-i)^n \psi_n(\xi).</math> |

|||

In other words, the Hermite functions form a complete [[orthonormal]] system of [[eigenfunctions]] for the Fourier transform on {{math|''L''<sup>2</sup>('''R''')}}.<ref name="Pinsky-2002" /><ref>{{harvnb|Folland|1989|p=53}}</ref> However, this choice of eigenfunctions is not unique. Because <math>\mathcal{F}^4 = \mathrm{id}</math>, there are only four different [[eigenvalue]]s of the Fourier transform (the fourth roots of unity ±1 and ±{{mvar|i}}), and any linear combination of eigenfunctions with the same eigenvalue gives another eigenfunction.<ref>{{harvnb|Celeghini|Gadella|del Olmo|2021}}</ref> As a consequence, it is possible to decompose {{math|''L''<sup>2</sup>('''R''')}} as a direct sum of four spaces {{math|''H''<sub>0</sub>}}, {{math|''H''<sub>1</sub>}}, {{math|''H''<sub>2</sub>}}, and {{math|''H''<sub>3</sub>}}, where the Fourier transform acts on {{math|''H''<sub>''k''</sub>}} simply by multiplication by {{math|''i''<sup>''k''</sup>}}.
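The eigenvalue relation <math>\hat\psi_n = (-i)^n \psi_n</math> can be checked numerically. The following is a minimal sketch, not a reference implementation: it assumes NumPy is available, approximates the Fourier integral by a Riemann sum on a truncated grid, and uses the illustrative helper names <code>psi</code> and <code>fourier_transform</code>, which do not come from the cited sources.

```python
import math
import numpy as np
from numpy.polynomial.hermite_e import hermeval

def psi(n, x):
    """Hermite function psi_n built from the probabilist's polynomial He_n."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0  # selects He_n in the HermiteE series
    return (2 ** 0.25 / math.sqrt(math.factorial(n))
            * np.exp(-np.pi * x ** 2)
            * hermeval(2 * x * math.sqrt(math.pi), coeffs))

def fourier_transform(values, x, xi):
    """Riemann-sum approximation of the integral of f(x) exp(-2 pi i x xi) dx."""
    dx = x[1] - x[0]
    kernel = np.exp(-2j * np.pi * np.outer(xi, x))
    return kernel @ values * dx

# The grid is an assumption: wide enough that psi_n is negligible at the ends.
x = np.linspace(-8.0, 8.0, 4001)
xi = np.linspace(-2.0, 2.0, 41)

max_errors = []
for n in range(6):
    lhs = fourier_transform(psi(n, x), x, xi)  # numerical transform of psi_n
    rhs = (-1j) ** n * psi(n, xi)              # claimed eigenvalue relation
    max_errors.append(float(np.max(np.abs(lhs - rhs))))
```

Each entry of <code>max_errors</code> should be close to machine precision, because the integrand is smooth and decays rapidly, so the truncation and discretization errors are negligible on this grid.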

|||

== Fourier transform on Euclidean space == |

|||

Since the complete set of Hermite functions {{math|''ψ<sub>n</sub>''}} provides a resolution of the identity, they diagonalize the Fourier operator; that is, the Fourier transform can be represented as a sum of terms weighted by the above eigenvalues, and this sum can be evaluated explicitly:

|||

The Fourier transform can be defined in any number of dimensions ''n''. As in the one-dimensional case, there are many conventions; for an integrable function ''ƒ''(''x''), this article takes the definition:

|||

<math display="block">\mathcal{F}[f](\xi) = \int dx f(x) \sum_{n \geq 0} (-i)^n \psi_n(x) \psi_n(\xi) ~.</math> |

|||

This approach to defining the Fourier transform was first proposed by [[Norbert Wiener]].<ref name="Duoandikoetxea-2001">{{harvnb|Duoandikoetxea|2001}}</ref> Among other properties, Hermite functions decrease exponentially fast in both the frequency and time domains, and they are thus used to define a generalization of the Fourier transform, namely the [[fractional Fourier transform]] used in time–frequency analysis.<ref name="Boashash-2003">{{harvnb|Boashash|2003}}</ref> In [[physics]], this transform was introduced by [[Edward Condon]].<ref>{{harvnb|Condon|1937}}</ref> This change of basis functions is possible because the Fourier transform is a unitary transform when the right [[#Other conventions|conventions]] are used. Consequently, under the proper conditions it may be expected to result from a self-adjoint generator <math>N</math> via<ref>{{harvnb|Wolf|1979|p=320}}</ref>

|||

:<math>\hat{f}(\xi) = \mathcal{F}(f)(\xi) = \int_{\R^n} f(x) e^{-2\pi i x\cdot\xi} \, dx</math> |

|||

<math display="block">\mathcal{F}[\psi] = e^{-i t N} \psi.</math> |

|||

The operator <math>N</math> is the [[Quantum harmonic oscillator#Ladder operator method|number operator]] of the quantum harmonic oscillator written as<ref name="auto">{{harvnb|Wolf|1979|p=312}}</ref><ref>{{harvnb|Folland|1989|p=52}}</ref> |

|||

where ''x'' and ''ξ'' are ''n''-dimensional [[vector (mathematics)|vectors]], and {{nowrap|''x'' '''·''' ''ξ''}} is the [[dot product]] of the vectors. The dot product is sometimes written as <math>\left\langle x,\xi \right\rangle</math>. |

|||

<math display="block">N \equiv \frac{1}{2}\left(x - \frac{\partial}{\partial x}\right)\left(x + \frac{\partial}{\partial x}\right) = \frac{1}{2}\left(-\frac{\partial^2}{\partial x^2} + x^2 - 1\right).</math> |

|||

It can be interpreted as the [[symmetry in quantum mechanics|generator]] of [[Mehler kernel#Fractional Fourier transform|fractional Fourier transforms]] for arbitrary values of {{mvar|t}}, and of the conventional continuous Fourier transform <math>\mathcal{F}</math> for the particular value <math>t = \pi/2,</math> with the [[Mehler kernel#Physics version|Mehler kernel]] implementing the corresponding [[active and passive transformation#In abstract vector spaces|active transform]]. The eigenfunctions of <math> N</math> are the [[Hermite polynomials#Hermite functions|Hermite functions]] <math>\psi_n(x)</math> which are therefore also eigenfunctions of <math>\mathcal{F}.</math> |
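As a worked check of the two forms of <math>N</math> given above, the operator product can be applied to a smooth test function <math>f</math> (a symbol introduced here only for illustration):

<math display="block">\begin{align}
\left(x - \frac{\partial}{\partial x}\right)\left(x + \frac{\partial}{\partial x}\right) f &= x^2 f + x f' - (x f)' - f'' \\
&= x^2 f + x f' - f - x f' - f'' \\
&= \left(-\frac{\partial^2}{\partial x^2} + x^2 - 1\right) f.
\end{align}</math>

In particular, since <math>\left(e^{-x^2/2}\right)'' = (x^2 - 1)e^{-x^2/2}</math>, the Gaussian <math>e^{-x^2/2}</math> satisfies <math>N e^{-x^2/2} = 0</math>. More generally, if <math>N</math> has eigenvalue <math>n</math> on the <math>n</math>-th Hermite function, then at <math>t = \pi/2</math> the operator <math>e^{-itN}</math> multiplies it by <math>e^{-i\pi n/2} = (-i)^n</math>, in agreement with the eigenvalues of <math>\mathcal{F}</math> stated earlier.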

|||

All of the basic properties listed above hold for the ''n''-dimensional Fourier transform, as do Plancherel's and Parseval's theorems. When the function is integrable, the Fourier transform is still uniformly continuous and the Riemann–Lebesgue lemma holds {{harv|Stein|Weiss|1971}}.

|||

Upon extending the Fourier transform to [[distribution (mathematics)|distributions]] the [[Dirac comb#Fourier transform|Dirac comb]] is also an eigenfunction of the Fourier transform. |

|||

===Uncertainty principle=== |

|||

Generally speaking, the more concentrated ''f''(''x'') is, the more spread out its Fourier transform <math>\hat{f}(\xi)</math>  must be. In particular, the scaling property of the Fourier transform may be seen as saying: if we "squeeze" a function in ''x'', its Fourier transform "stretches out" in ''ξ''. It is not possible to arbitrarily concentrate both a function and its Fourier transform. |

|||

=== Inversion and periodicity === |

|||

The trade-off between the concentration of a function and that of its Fourier transform can be formalized as an '''uncertainty principle''' by viewing a function and its Fourier transform as [[conjugate variables]] with respect to the [[symplectic form]] on the [[time–frequency representation|time–frequency domain]]: from the point of view of the [[linear canonical transformation]], the Fourier transform is a rotation by 90° in the time–frequency domain, and it preserves the symplectic form.

|||

{{Further|Fourier inversion theorem|Fractional Fourier transform}} |

|||

Under suitable conditions on the function <math>f</math>, it can be recovered from its Fourier transform <math>\hat{f}</math>. Indeed, denoting the Fourier transform operator by <math>\mathcal{F}</math>, so <math>\mathcal{F} f := \hat{f}</math>, then for suitable functions, applying the Fourier transform twice simply flips the function: <math>\left(\mathcal{F}^2 f\right)(x) = f(-x)</math>, which can be interpreted as "reversing time". Since reversing time is two-periodic, applying this twice yields <math>\mathcal{F}^4(f) = f</math>, so the Fourier transform operator is four-periodic, and similarly the inverse Fourier transform can be obtained by applying the Fourier transform three times: <math>\mathcal{F}^3\left(\hat{f}\right) = f</math>. In particular the Fourier transform is invertible (under suitable conditions). |

|||

Suppose ''ƒ''(''x'') is an integrable and [[square-integrable]] function. Without loss of generality, assume that ''ƒ''(''x'') is normalized: |

|||

More precisely, defining the ''parity operator'' <math>\mathcal{P}</math> such that <math>(\mathcal{P} f)(x) = f(-x)</math>, we have: |

|||

:<math>\int_{-\infty}^\infty |f(x)|^2 \,dx=1.</math> |

|||

<math display="block">\begin{align} |

|||

\mathcal{F}^0 &= \mathrm{id}, \\ |

|||

\mathcal{F}^1 &= \mathcal{F}, \\ |

|||

\mathcal{F}^2 &= \mathcal{P}, \\ |

|||

\mathcal{F}^3 &= \mathcal{F}^{-1} = \mathcal{P} \circ \mathcal{F} = \mathcal{F} \circ \mathcal{P}, \\ |

|||

\mathcal{F}^4 &= \mathrm{id} |

|||

\end{align}</math> |

|||

These equalities of operators require careful definition of the space of functions in question, defining equality of functions (equality at every point? equality [[almost everywhere]]?) and defining equality of operators – that is, defining the topology on the function space and operator space in question. These are not true for all functions, but are true under various conditions, which are the content of the various forms of the [[Fourier inversion theorem]]. |
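The operator identities above have an exact finite-dimensional analogue that is easy to test. The sketch below is an illustration under stated assumptions, not a statement about the continuous transform: it uses NumPy's unitary discrete Fourier transform (<code>norm="ortho"</code>) on a random vector of length 16, and the index reversal <math>k \mapsto -k \bmod N</math> plays the role of the parity operator <math>\mathcal{P}</math>. The helper name <code>F</code> is chosen here only to mirror the notation.

```python
import numpy as np

def F(v):
    """Unitary DFT, standing in for the Fourier operator."""
    return np.fft.fft(v, norm="ortho")

rng = np.random.default_rng(0)
f = rng.standard_normal(16) + 1j * rng.standard_normal(16)

# Discrete parity: (P f)[k] = f[(-k) mod N].
parity_f = np.roll(f[::-1], 1)

f2 = F(F(f))        # expected to equal P f
f3 = F(F(F(f)))     # expected to equal the inverse transform of f
f4 = F(F(F(F(f))))  # expected to equal f

square_is_parity = np.allclose(f2, parity_f)
cube_is_inverse = np.allclose(f3, np.fft.ifft(f, norm="ortho"))
fourth_is_identity = np.allclose(f4, f)
```

All three flags should evaluate to <code>True</code>. In the discrete setting the identities hold for every vector, so the questions about function spaces and modes of equality raised above do not arise.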

|||

This fourfold periodicity of the Fourier transform is similar to a rotation of the plane by 90°, particularly as the two-fold iteration yields a reversal, and in fact this analogy can be made precise. While the Fourier transform can simply be interpreted as switching the time domain and the frequency domain, with the inverse Fourier transform switching them back, more geometrically it can be interpreted as a rotation by 90° in the [[time–frequency domain]] (considering time as the {{mvar|x}}-axis and frequency as the {{mvar|y}}-axis), and the Fourier transform can be generalized to the [[fractional Fourier transform]], which involves rotations by other angles. This can be further generalized to [[linear canonical transformation]]s, which can be visualized as the action of the [[special linear group]] {{math|[[SL2(R)|SL<sub>2</sub>('''R''')]]}} on the time–frequency plane, with the preserved symplectic form corresponding to the [[#Uncertainty principle|uncertainty principle]], below. This approach is particularly studied in [[signal processing]], under [[time–frequency analysis]]. |

|||

It follows from the [[Plancherel theorem]] that <math>\hat{f}(\xi)</math>  is also normalized. |

|||

=== Connection with the Heisenberg group === |

|||

The spread around ''x'' = 0 may be measured by the ''dispersion about zero'' {{harv|Pinsky|2002}} defined by |

|||

The [[Heisenberg group]] is a certain [[group (mathematics)|group]] of [[unitary operator]]s on the [[Hilbert space]] {{math|''L''<sup>2</sup>('''R''')}} of square-integrable complex-valued functions {{mvar|f}} on the real line, generated by the translations {{math|1=(''T<sub>y</sub> f'')(''x'') = ''f'' (''x'' + ''y'')}} and the multiplications by {{math|''e''<sup>''i''2π''ξx''</sup>}}, {{math|1=(''M<sub>ξ</sub> f'')(''x'') = ''e''<sup>''i''2π''ξx''</sup> ''f'' (''x'')}}. These operators do not commute, as their (group) commutator is

|||

<math display="block">\left(M^{-1}_\xi T^{-1}_y M_\xi T_yf\right)(x) = e^{i 2\pi\xi y}f(x)</math> |

|||

which is multiplication by the constant (independent of {{mvar|x}}) {{math|''e''<sup>''i''2π''ξy''</sup> ∈ ''U''(1)}} (the [[circle group]] of unit modulus complex numbers). As an abstract group, the Heisenberg group is the three-dimensional [[Lie group]] of triples {{math|(''x'', ''ξ'', ''t'') ∈ '''R'''<sup>2</sup> × ''U''(1)}}, with the group law

|||

<math display="block">\left(x_1, \xi_1, t_1\right) \cdot \left(x_2, \xi_2, t_2\right) = \left(x_1 + x_2, \xi_1 + \xi_2, t_1 t_2 e^{i 2\pi \left(x_1 \xi_1 + x_2 \xi_2 + x_1 \xi_2\right)}\right).</math> |

|||

Denote the Heisenberg group by {{math|''H''<sub>1</sub>}}. The above procedure describes not only the group structure, but also a standard [[unitary representation]] of {{math|''H''<sub>1</sub>}} on a Hilbert space, which we denote by {{math|''ρ'' : ''H''<sub>1</sub> → ''B''(''L''<sup>2</sup>('''R'''))}}. Define the linear automorphism of {{math|'''R'''<sup>2</sup>}} by |

|||

:<math>D_0(f)=\int_{-\infty}^\infty x^2|f(x)|^2\,dx.</math> |

|||

<math display="block">J \begin{pmatrix} |

|||

x \\ |

|||

\xi |

|||