{{short description|Artificial intelligence computer system made by IBM}}
{{Redirect|IBM Watson|the IBM Watson Laboratory|Thomas J. Watson Research Center}}
{{About|IBM's QA machine revealed in 2011|the generative AI tool released in 2023|IBM Watsonx}}

'''Watson''', named after IBM's founder, [[Thomas J. Watson]], is an [[artificial intelligence]] program developed by [[IBM]] and designed to answer questions posed in [[natural language]].<ref name="documentarystorm">[http://documentarystorm.com/science-tech/smartest-machine-on-earth/ Smartest Machine on Earth] Retrieved {{Nowrap|14 February 2011}}.</ref> It was developed as part of the DeepQA research project.<ref>[http://www.research.ibm.com/deepqa/deepqa.shtml The DeepQA Project]</ref> The program ran on a [[POWER7]] processor-based system. It competed on the television [[quiz show]] ''[[Jeopardy!]]'' as a test of its abilities; the competition aired in three ''Jeopardy!'' episodes on February 14–16, 2011, at 7 PM EST. Watson competed against [[Brad Rutter]], then the biggest all-time money winner on ''Jeopardy!'', and [[Ken Jennings]], the record holder for the longest championship streak.<ref>{{cite news|url=http://www.nytimes.com/2009/04/27/technology/27jeopardy.html?_r=2&hp|title=Computer Program to Take On ‘Jeopardy!’ |last=Markoff|first=John|date=2009-04-26|publisher=New York Times|accessdate=2009-04-27}}</ref><ref>{{cite web|url=http://gizmodo.com/5228887/ibm-prepping-soul+crushing-watson-computer-to-compete-on-jeopardy|title=IBM Prepping Soul-Crushing 'Watson' Computer to Compete on Jeopardy!|last=Loftus|first=Jack|date=2009-04-26|publisher=Gizmodo|accessdate=2009-04-27}}</ref>

{{Infobox custom computer
|Logo=IBM_Watson_Logo_2017.png
|Image=IBM Watson.PNG
|Storage=
|Website={{URL|https://www.ibm.com/watson|IBM Watson}}
|Emulators=
|Legacy=
|Purpose=
|ChartDate=
|ChartPosition=
|ChartName=
|Cost=
|Speed=80 [[Teraflop|teraFLOPS]]
|Memory=16 [[terabyte]]s of [[RAM]]
|Image_Size=
|Space=
|OS=
|Power=
|Architecture= 2,880 [[POWER7]] processor threads
|Location=[[Thomas J. Watson Research Center]], [[New York (state)|New York, USA]]
|Operators=[[IBM]]
|Sponsors=
|Dates=
|Caption=
|Alt=
|Sources=}}

'''IBM Watson''' is a computer system capable of [[question answering|answering questions]] posed in [[natural language]].<ref name="ibm">{{cite web |url=http://www.research.ibm.com/deepqa/faq.shtml |title=DeepQA Project: FAQ |work=IBM |access-date=February 11, 2011 |archive-date=June 29, 2011 |archive-url=https://web.archive.org/web/20110629122514/http://www.research.ibm.com/deepqa/faq.shtml |url-status=live }}</ref> It was developed as part of [[IBM]]'s DeepQA project by a research team led by [[principal investigator]] [[David Ferrucci]].<ref>{{Cite journal|last1=Ferrucci|first1=David|last2=Levas|first2=Anthony|last3=Bagchi|first3=Sugato|last4=Gondek|first4=David|last5=Mueller|first5=Erik T.|date=2013-06-01|title=Watson: Beyond Jeopardy!|journal=Artificial Intelligence|volume=199|pages=93–105|doi=10.1016/j.artint.2012.06.009|doi-access=free}}</ref> Watson was named after IBM's founder and first CEO, industrialist [[Thomas J. Watson]].<ref name="NYT_20110208">{{cite news |url=https://www.nytimes.com/2011/02/09/arts/television/09nova.html |title=Actors and Their Roles for $300, HAL? HAL! |first=Mike |last=Hale |newspaper=[[The New York Times]] |date=February 8, 2011 |access-date=February 11, 2011}}</ref><ref>{{cite web |url=http://www.research.ibm.com/deepqa/deepqa.shtml |title=The DeepQA Project |work=IBM Research |access-date=February 18, 2011 |archive-date=June 29, 2011 |archive-url=https://web.archive.org/web/20110629122438/http://www.research.ibm.com/deepqa/deepqa.shtml |url-status=live }}</ref>

==Technology==

IBM states:

<blockquote>

Watson is an application of advanced [[natural language processing]], [[information retrieval]], [[knowledge representation]] and [[automated reasoning|reasoning]], and [[machine learning]] technologies to the field of [[open domain question answering]]. At its core, Watson is built on IBM's DeepQA technology for hypothesis generation, massive evidence gathering, analysis, and scoring.<ref>{{cite web|url=http://www.research.ibm.com/deepqa/faq.shtml#3|title=What kind of technology is Watson based on?|publisher=IBM Corporation|accessdate=2011-02-11}}</ref>

The computer system was initially developed to answer questions on the popular quiz show ''[[Jeopardy!]]''<ref>{{cite web |title=Dave Ferrucci at Computer History Museum – How It All Began and What's Next |url=http://ibmresearchnews.blogspot.com/2011/12/dave-ferrucci-at-computer-history.html |work=IBM Research |date=December 1, 2011 |access-date=February 11, 2012 |archive-date=March 13, 2012 |archive-url=https://web.archive.org/web/20120313005431/http://ibmresearchnews.blogspot.com/2011/12/dave-ferrucci-at-computer-history.html |url-status=live }}</ref> and in 2011, the Watson computer system competed on ''Jeopardy!'' against champions [[Brad Rutter]] and [[Ken Jennings]],<ref name="NYT_20110208" /><ref>{{cite web |url=https://gizmodo.com/5228887/ibm-prepping-watson-computer-to-compete-on-jeopardy |title=IBM Prepping 'Watson' Computer to Compete on Jeopardy! |last=Loftus |first=Jack |work=[[Gizmodo]] |date=April 26, 2009 |access-date=September 18, 2017 |archive-date=July 31, 2017 |archive-url=https://web.archive.org/web/20170731231411/http://gizmodo.com/5228887/ibm-prepping-watson-computer-to-compete-on-jeopardy |url-status=live }}</ref> winning the first-place prize of US$1 million.<ref>{{cite web |title=IBM's "Watson" Computing System to Challenge All Time Greatest Jeopardy! Champions |url=http://www.jeopardy.com/news/watson1x7ap4.php |date=December 14, 2010 |work=[[Sony Pictures Television]] |archive-url=https://web.archive.org/web/20130616092431/http://www.jeopardy.com/news/watson1x7ap4.php |archive-date=June 16, 2013 }}</ref>

Watson is a workload optimized system designed for complex analytics, made possible by integrating massively parallel POWER7 processors and the IBM DeepQA software to answer Jeopardy! questions in under three seconds. Watson is made up of a cluster of ninety IBM Power 750 servers (plus additional I/O, network and cluster controller nodes in 10 racks) with a total of 2880 POWER7 processor cores and 16 terabytes of RAM. Each Power 750 server uses a 3.5 GHz POWER7 eight core processor, with four threads per core. The POWER7 processor's massively parallel processing capability is an ideal match for Watson's IBM DeepQA software, which is [[embarrassingly parallel]] (that is a workload that executes multiple threads in parallel).<ref>{{cite web|url=http://www.cs.umbc.edu/2011/02/is-watson-the-smartest-machine-on-earth/ |title=Is Watson the smartest machine on earth?|publisher=Computer Science and Electrical Engineering Department, UMBC|date=February 10, 2011|accessdate=2011-02-11}}</ref><!-- surely there's a more direct source of this presumed quote from IBM -->

</blockquote>
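An embarrassingly parallel workload is one that splits into tasks needing little or no communication with each other; scoring many candidate answers against their evidence is a natural example, since no score depends on another. The following is a minimal Python sketch of that idea, assuming a toy keyword-overlap scorer; `score_candidate`, `score_all`, and the evidence format are hypothetical illustrations, not IBM's DeepQA code.

```python
from concurrent.futures import ThreadPoolExecutor


def score_candidate(clue: str, candidate: str, evidence: dict[str, str]) -> float:
    """Hypothetical scorer: share of clue words that appear in the candidate's evidence text."""
    clue_words = set(clue.lower().split())
    evidence_words = set(evidence.get(candidate, "").lower().split())
    if not clue_words:
        return 0.0
    return len(clue_words & evidence_words) / len(clue_words)


def score_all(clue: str, candidates: list[str], evidence: dict[str, str],
              workers: int = 4) -> dict[str, float]:
    """Score every candidate concurrently; no task reads another task's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        scores = list(pool.map(lambda c: score_candidate(clue, c, evidence), candidates))
    return dict(zip(candidates, scores))
```

In standard CPython builds, threads do not run CPU-bound Python code in parallel because of the global interpreter lock, so a real deployment would spread such work across processes or machines (Watson spread its work across a server cluster); the thread pool only keeps the sketch short.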

In February 2013, IBM announced that Watson's first commercial application would be for [[utilization management]] decisions in lung cancer treatment, at [[Memorial Sloan Kettering Cancer Center]], New York City, in conjunction with WellPoint (now [[Elevance Health]]).<ref name=wellpoint/>

While primarily an IBM effort, the development team included faculty and students from [[Carnegie Mellon University]], [[University of Massachusetts]], [[University of Southern California]]/[[Information Sciences Institute]], [[University of Texas]], [[Massachusetts Institute of Technology]], [[University of Trento]], and [[Rensselaer Polytechnic Institute]].<ref>[http://www.aaai.org/ojs/index.php/aimagazine/article/view/2303?hpg1=bn Building Watson: An Overview of the DeepQA Project.] AI Magazine, Vol. 31, No. 3, 2010.</ref>

{{TOC limit|5}}

==Genesis==

An IBM executive had proposed that Watson compete on ''Jeopardy!'', but the suggestion was initially dismissed. In competitions run by the United States government, Watson's predecessors were able to answer no more than 70% of questions correctly and often took several minutes to come up with an answer. To compete successfully on ''Jeopardy!'', Watson would need to come up with answers in no more than a few seconds, and the problems posed by the game show were initially deemed impossible to solve.<ref name=NYT2010/>

==Description==

In initial tests run in 2006 by David Ferrucci, the senior manager of IBM's Semantic Analysis and Integration department, Watson was given 500 clues from past ''Jeopardy!'' programs. While the top real-life competitors buzzed in half the time and answered as many as 95% of questions correctly, Watson's first pass could get only about 15% right. In 2007, the IBM team was given three to five years and a staff of 15 people to develop a solution to the problems posed. The IBM team provided Watson with millions of documents, including dictionaries, encyclopedias, and other reference material, as well as Bibles, novels, and plays, that it could use to build its knowledge. Rather than relying on a single algorithm, Watson uses thousands of algorithms simultaneously to understand the question being asked and find the correct path to the answer.<ref>{{ cite web | url=http://www.pbs.org/wgbh/nova/tech/will-watson-win-jeopardy.html | title=PBS Nova ScienceNOW: Will Watson Win On Jeopardy!? | date=2011-01-20 | accessdate=2011-01-27 }} Three artificial-intelligence experts, including the leader of the Watson team, discuss the supercomputer’s prospects.</ref> The more algorithms that independently arrive at the same answer, the more likely Watson is to be correct. Once Watson comes up with a small number of potential solutions, it is able to check against its database to ascertain whether the solution makes sense. In a sequence of 20 mock games, human participants were able to use the six to eight seconds it takes to read the clue to decide whether to buzz in with the correct answer. During that time, Watson is also able to evaluate the answer and determine whether it is sufficiently confident in the result to buzz in.<ref name=NYT2010/>

|||

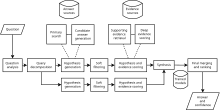

[[File:DeepQA.svg|thumb|The high-level architecture of IBM's DeepQA used in Watson<ref name="AIMagazine"/>]] |

Watson was created as a [[question answering]] (QA) computing system that IBM built to apply advanced [[natural language processing]], [[information retrieval]], [[knowledge representation]], [[automated reasoning]], and [[machine learning]] technologies to the field of [[open domain question answering]].<ref name=ibm/>

IBM stated that Watson uses "more than 100 different techniques to analyze natural language, identify sources, find and generate hypotheses, find and score evidence, and merge and rank hypotheses."<ref>{{cite web |title=Watson, A System Designed for Answers: The Future of Workload Optimized Systems Design |url=http://www-01.ibm.com/common/ssi/cgi-bin/ssialias?infotype=SA&subtype=WH&htmlfid=POW03061USEN |work=IBM Systems and Technology |date=February 2011 |page=3 |access-date=September 9, 2015 |archive-date=March 4, 2016 |archive-url=https://web.archive.org/web/20160304055519/http://www-01.ibm.com/common/ssi/cgi-bin/ssialias?infotype=SA&subtype=WH&htmlfid=POW03061USEN |url-status=live }}</ref>
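The stages IBM lists (analyze the question, identify sources, generate hypotheses, score evidence, merge and rank) can be illustrated with a deliberately tiny pipeline. This is a hedged sketch only: the stopword list, the `(candidate, passage)` corpus format, and every function name are hypothetical, and each stage is a single toy technique standing in for the many techniques Watson ran per stage.

```python
STOPWORDS = {"a", "an", "the", "this", "of", "in", "for", "is", "to"}


def analyze(question: str) -> list[str]:
    """Stage 1: reduce the question to lowercase content keywords."""
    return [w for w in question.lower().split() if w not in STOPWORDS]


def find_sources(keywords: list[str], passages: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Stage 2: keep (candidate, passage) pairs whose passage shares a keyword."""
    wanted = set(keywords)
    return [(c, p) for c, p in passages if wanted & set(p.lower().split())]


def score_evidence(keywords: list[str], passage: str) -> float:
    """Stage 4: fraction of the keywords that the passage supports."""
    words = set(passage.lower().split())
    return sum(k in words for k in keywords) / len(keywords)


def answer(question: str, passages: list[tuple[str, str]]) -> list[tuple[str, float]]:
    keywords = analyze(question)
    merged: dict[str, float] = {}
    # Stage 3: every retrieved pair proposes its candidate as a hypothesis
    for candidate, passage in find_sources(keywords, passages):
        score = score_evidence(keywords, passage)
        # Stage 5a: merge duplicate hypotheses, keeping the best evidence score
        merged[candidate] = max(merged.get(candidate, 0.0), score)
    # Stage 5b: rank hypotheses from most to least supported
    return sorted(merged.items(), key=lambda kv: kv[1], reverse=True)
```

Merging before ranking matters because the same candidate can be proposed by several passages; here the best-supported passage wins, which is one simple merge rule among many possible ones.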

By 2008, the developers had advanced to the point where Watson could compete with ''Jeopardy!'' champions. That year, IBM contacted ''Jeopardy!'' executive producer [[Harry Friedman]] about the possibility of having Watson compete as a contestant on the show. The show's producers agreed.<ref name=NYT2010/><ref>{{cite news |author=[[Brian Stelter]] |coauthors= |title=I.B.M. Supercomputer ‘Watson’ to Challenge ‘Jeopardy’ Stars |url=http://mediadecoder.blogs.nytimes.com/2010/12/14/i-b-m-supercomputer-watson-to-challenge-jeopardy-stars/?src=me&ref=business |quote=An I.B.M. supercomputer system named after the company’s founder, Thomas J. Watson Sr., is almost ready for a televised test: a bout of questioning on the quiz show “Jeopardy.” I.B.M. and the producers of “Jeopardy” will announce on Tuesday that the computer, “Watson,” will face the two most successful players in “Jeopardy” history, Ken Jennings and Brad Rutter, in three episodes that will be broadcast Feb. 14–16, 2011. |newspaper=[[New York Times]] |date=December 14, 2010 |accessdate=2010-12-14 }}</ref>

In recent years, Watson's capabilities have been extended and the way in which Watson works has been changed to take advantage of new deployment models (Watson on [[IBM Cloud]]), evolved machine learning capabilities, and optimized hardware available to developers and researchers. {{Citation needed|date=April 2022}}

==Performance==

{{Current|date=February 2011}}

By February 2010, Watson could beat human ''Jeopardy!'' contestants on a regular basis.<ref>[http://www.networkworld.com/news/2010/021010-ibm-jeopardy-game.html?hpg1=bn IBM's Jeopardy-playing machine can now beat human contestants] NetworkWorld, February 10, 2010</ref> IBM set up a mock set to mimic the one used on ''Jeopardy!'' in a conference room at one of its technology sites and had individuals, including former ''Jeopardy!'' contestants, participate in mock games against Watson, with Todd Alan Crain of ''[[The Onion]]'' playing host. Watson, located on another floor, was given the clues electronically and was able to buzz in and speak with an electronic voice when it gave its responses in ''Jeopardy!''{{'s}} question format.<ref name=NYT2010>{{cite news |author=[[Clive Thompson (journalist)|Clive Thompson]] |coauthors= |title=What Is I.B.M.’s Watson? |url=http://www.nytimes.com/2010/06/20/magazine/20Computer-t.html |quote= |work=[[New York Times]] |date= June 14, 2010|accessdate=2010-07-02 }}</ref>

===Software===

In a practice match before the press on January 13, 2011, Watson won a 15-question round against Ken Jennings and Brad Rutter with a score of $4,400 to Jennings's $3,400 and Rutter's $1,200, though Jennings and Watson were tied before the final $1,000 question.<ref name="PracticeMatch">{{cite web | url=http://www.zdnet.com/blog/btl/ibms-watson-wins-jeopardy-practice-round-can-humans-hang/43601 | title=IBM's Watson wins Jeopardy practice round: Can humans hang? | accessdate=2011-01-13 | author=Dignan, Larry | date=2011-01-13 | work=[[ZDNet]]}}</ref> None of the three players answered a question incorrectly; the match was decided by who could buzz in more quickly with their correct answer.<ref name="PracticeMatch2">{{cite web | url=http://money.cnn.com/2011/01/13/technology/ibm_jeopardy_watson/ | title=IBM's Jeopardy supercomputer beats humans in practice bout | accessdate=2011-01-13 | author=Pepitone, Julianne | date=2011-01-13 | publisher=CNNMoney}}</ref>

Watson uses IBM's DeepQA software and the Apache [[UIMA]] (Unstructured Information Management Architecture) framework implementation. The system was written in various languages, including [[Java (programming language)|Java]], [[C++]], and [[Prolog]], and runs on the [[SUSE Linux Enterprise Server]] 11 operating system using the Apache [[Hadoop]] framework to provide distributed computing.<ref name="contentpages">{{cite web|url=https://www.pcworld.com/article/219893/ibm_watson_vanquishes_human_jeopardy_foes.html|title=IBM Watson Vanquishes Human Jeopardy Foes|last=Jackson|first=Joab|date=February 17, 2011|work=[[PC World (magazine)|PC World]]|access-date=February 17, 2011|agency=IDG News|archive-date=February 20, 2011|archive-url=https://web.archive.org/web/20110220020908/http://www.pcworld.com/article/219893/ibm_watson_vanquishes_human_jeopardy_foes.html|url-status=live}}</ref><ref>{{cite web |last=Takahashi |first=Dean |url=https://venturebeat.com/2011/02/17/ibm-researcher-explains-what-watson-gets-right-and-wrong/ |title=IBM researcher explains what Watson gets right and wrong |work=VentureBeat |date=February 17, 2011 |access-date=February 18, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218132333/http://venturebeat.com/2011/02/17/ibm-researcher-explains-what-watson-gets-right-and-wrong/ |url-status=live }}</ref><ref>{{cite web |author=Novell |author-link=Novell |archive-url=https://web.archive.org/web/20110421061200/http://online.wsj.com/article/PR-CO-20110202-906855.html |url=https://online.wsj.com/article/PR-CO-20110202-906855.html |archive-date=April 21, 2011 |title=Watson Supercomputer to Compete on 'Jeopardy!' – Powered by SUSE Linux Enterprise Server on IBM POWER7 |work=The Wall Street Journal |date=February 2, 2011 |access-date=February 21, 2011}}</ref>

===Hardware===

The system is workload-optimized, integrating [[massively parallel]] [[POWER7]] processors and built on IBM's ''DeepQA'' technology,<ref name="Smartest Machine on Earth">{{cite web |url=http://www.cs.umbc.edu/2011/02/is-watson-the-smartest-machine-on-earth/ |title=Is Watson the smartest machine on earth? |work=Computer Science and Electrical Engineering Department, University of Maryland Baltimore County |date=February 10, 2011 |access-date=February 11, 2011 |archive-date=September 27, 2011 |archive-url=https://web.archive.org/web/20110927014129/http://www.cs.umbc.edu/2011/02/is-watson-the-smartest-machine-on-earth/ |url-status=live }}</ref> which it uses to generate hypotheses, gather massive evidence, and analyze data.<ref name=ibm/> Watson employs a cluster of ninety IBM Power 750 servers, each of which uses a 3.5 GHz [[POWER7]] eight-core processor, with four threads per core. In total, the system uses 2,880 POWER7 processor threads and 16 [[terabyte]]s of RAM.<ref name="Smartest Machine on Earth"/>
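The thread total above follows from the per-server figures: 90 servers × 8 cores × 4 threads per core gives 720 cores and 2,880 hardware threads. The IBM description quoted under Technology instead calls 2,880 a core count; the per-server figures given here do not by themselves account for that reading. A minimal Python check, assuming exactly one eight-core processor per server as this paragraph states:

```python
def total_threads(servers: int, cores_per_server: int, threads_per_core: int) -> int:
    """Hardware threads in a cluster of identical servers."""
    return servers * cores_per_server * threads_per_core


# Figures from the paragraph above; one eight-core POWER7 chip per server is assumed
SERVERS = 90
CORES_PER_SERVER = 8
THREADS_PER_CORE = 4

cores = SERVERS * CORES_PER_SERVER                                      # 720 cores
threads = total_threads(SERVERS, CORES_PER_SERVER, THREADS_PER_CORE)   # 2,880 threads
```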

According to IBM, "The goal is to have computers start to interact in natural human terms across a range of applications and processes, understanding the questions that humans ask and providing answers that humans can understand and justify."<ref>http://www.networkworld.com/news/2010/021010-ibm-jeopardy-game.html?page=2</ref>

According to [[John Rennie (editor)|John Rennie]], Watson can process 500 gigabytes (the equivalent of a million books) per second.<ref name=rennie>{{cite web |last=Rennie |first=John |url=http://blogs.plos.org/retort/2011/02/14/how-ibm%E2%80%99s-watson-computer-will-excel-at-jeopardy/ |title=How IBM's Watson Computer Excels at Jeopardy! |work=PLoS blogs |date=February 14, 2011 |access-date=February 19, 2011 |archive-date=February 22, 2011 |archive-url=https://web.archive.org/web/20110222031017/http://blogs.plos.org/retort/2011/02/14/how-ibm%E2%80%99s-watson-computer-will-excel-at-jeopardy/ |url-status=dead }}</ref> IBM master inventor and senior consultant Tony Pearson estimated Watson's hardware cost at about three million dollars.<ref>{{cite web |last=Mearian |first=Lucas |url=http://www.computerworld.com/s/article/9210381/Can_anyone_afford_an_IBM_Watson_supercomputer_Yes_?taxonomyId=67&pageNumber=2 |title=Can anyone afford an IBM Watson supercomputer? (Yes) |work=[[Computerworld]] |date=February 21, 2011 |access-date=February 21, 2011 |archive-date=December 12, 2013 |archive-url=https://web.archive.org/web/20131212014259/http://www.computerworld.com/s/article/9210381/Can_anyone_afford_an_IBM_Watson_supercomputer_Yes_?taxonomyId=67&pageNumber=2 |url-status=live }}</ref> Its [[Linpack]] performance stands at 80 [[Teraflop|teraFLOPS]], which is about half as fast as the cut-off line for the [[Top 500 Supercomputers]] list.<ref>{{cite web |title=Top500 List – November 2013 |work=Top500.org |url=http://www.top500.org/list/2013/11/ |access-date=2014-01-04 |archive-date=2013-12-31 |archive-url=https://web.archive.org/web/20131231183454/http://www.top500.org/list/2013/11/ |url-status=live }}</ref> According to Rennie, all content was stored in Watson's RAM for the ''Jeopardy!'' game because data stored on [[hard drive]]s would be too slow to compete with human ''Jeopardy!'' champions.<ref name=rennie/>
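Two quick consistency checks on the figures in this article: equating 500 gigabytes per second with a million books per second implies an average of about 500 kilobytes per book, and the four terabytes of game content mentioned in the History section would occupy a quarter of Watson's 16 terabytes of RAM. This sketch assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes); the helper names are illustrative.

```python
GB = 10**9   # decimal units assumed: 1 GB = 10^9 bytes
TB = 10**12  # 1 TB = 10^12 bytes


def bytes_per_book(rate_bytes_per_s: int, books_per_s: int) -> int:
    """Average book size implied by equating a data rate with a book rate."""
    return rate_bytes_per_s // books_per_s


def ram_share(content_bytes: int, ram_bytes: int) -> float:
    """Fraction of RAM that a given body of content would occupy."""
    return content_bytes / ram_bytes


per_book = bytes_per_book(500 * GB, 1_000_000)   # 500,000 bytes, about 500 KB
share = ram_share(4 * TB, 16 * TB)               # one quarter of the RAM
```

With binary units (GiB, TiB) the per-book figure shifts by a few percent, but the RAM share stays the same because both quantities scale together.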

Watson is based on commercially available IBM Power 750 servers that have been marketed since February 2010. IBM also intended to market the DeepQA software to large corporations, with a price in the millions of dollars, reflecting the $1 million needed to acquire the complete system that runs Watson. IBM expected the price to drop substantially within a decade as the technology improved.<ref name=NYT2010/>

===Data===

The sources of information for Watson include encyclopedias, [[dictionary|dictionaries]], [[thesaurus|thesauri]], [[newswire]] articles and [[literary work]]s. Watson also used databases, [[Taxonomy (general)|taxonomies]] and [[ontology (information science)|ontologies]] including [[DBpedia]], [[WordNet]] and [[YAGO (database)|Yago]].<ref>{{cite journal |title=The AI Behind Watson – The Technical Article |first=David |last=Ferrucci |display-authors=etal |url=http://www.aaai.org/Magazine/Watson/watson.php |journal=AI Magazine |issue=Fall 2010 |access-date=November 11, 2013 |archive-date=November 6, 2020 |archive-url=https://web.archive.org/web/20201106232453/https://www.aaai.org/Magazine/Watson/watson.php |url-status=live }}</ref> The IBM team provided Watson with millions of documents, including dictionaries, encyclopedias and other reference material, that it could use to build its knowledge.<ref name="nytmag">{{cite news|url=https://www.nytimes.com/2010/06/20/magazine/20Computer-t.html|title=Smarter Than You Think: What Is I.B.M.'s Watson?|last=Thompson|first=Clive|date=June 16, 2010|newspaper=[[The New York Times Magazine]]|access-date=February 18, 2011|author-link=Clive Thompson (journalist)|archive-date=June 5, 2011|archive-url=https://web.archive.org/web/20110605195202/http://www.nytimes.com/2010/06/20/magazine/20Computer-t.html|url-status=live}}</ref>
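Taxonomies such as WordNet and YAGO link terms through "is-a" (hypernym) relations, which lets a system ask whether a candidate answer is the kind of thing a clue asks for. The sketch below walks such links upward; the `TAXONOMY` dictionary is small hand-written, hypothetical data (not taken from WordNet or YAGO), and `is_a` is an illustration, not DeepQA's actual type-checking component.

```python
# Hypothetical toy taxonomy: each term maps to its parent ("is-a") category
TAXONOMY: dict[str, str] = {
    "chicago": "city",
    "toronto": "city",
    "city": "settlement",
    "settlement": "location",
    "lake michigan": "lake",
    "lake": "body of water",
    "body of water": "location",
}


def is_a(term: str, category: str, taxonomy: dict[str, str] = TAXONOMY) -> bool:
    """Return True if `category` is `term` itself or one of its ancestors.

    Terms are matched in lowercase; `category` is expected in lowercase too.
    """
    seen: set[str] = set()
    node = term.lower()
    while node is not None and node not in seen:  # `seen` guards against cycles
        if node == category:
            return True
        seen.add(node)
        node = taxonomy.get(node)  # None once we pass the top of the hierarchy
    return False
```

For a clue whose expected answer is a city, a check like `is_a("Chicago", "city")` would keep the candidate, while `is_a("Lake Michigan", "city")` would count against it.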

==Operation==

Watson parses questions into different keywords and sentence fragments in order to find statistically related phrases.<ref name=nytmag/> Watson's main innovation was not in the creation of a new [[algorithm]] for this operation, but rather its ability to quickly execute hundreds of proven [[Computational semantics|language analysis]] algorithms simultaneously.<ref name=nytmag/><ref>{{cite web |archive-url=https://web.archive.org/web/20110414214425/http://www.pbs.org/wgbh/nova/tech/will-watson-win-jeopardy.html |archive-date=April 14, 2011 |url=https://www.pbs.org/wgbh/nova/tech/will-watson-win-jeopardy.html |work=Nova ScienceNOW |title=Will Watson Win On Jeopardy!? |publisher=[[Public Broadcasting Service]] |date=January 20, 2011 |access-date=January 27, 2011}}</ref> The more algorithms that find the same answer independently, the more likely Watson is to be correct. Once Watson has a small number of potential solutions, it is able to check against its database to ascertain whether the solution makes sense or not.<ref name=nytmag/>
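The idea that agreement among independent algorithms raises confidence can be shown with a simple vote count. This is a minimal sketch, assuming each algorithm contributes one proposed answer and that confidence is just the share of algorithms that agree; the `agreement` function is hypothetical, and Watson's actual evidence scoring was considerably more elaborate than a vote.

```python
from collections import Counter


def agreement(proposals: list[str]) -> list[tuple[str, float]]:
    """Rank answers by the fraction of independent algorithms that proposed them."""
    if not proposals:
        return []
    # Normalize case and surrounding spaces so trivially different strings agree
    counts = Counter(p.strip().lower() for p in proposals)
    total = len(proposals)
    return [(answer, n / total) for answer, n in counts.most_common()]
```

For example, if four algorithms return `["Chicago", "chicago", "Toronto", "Chicago "]`, the sketch ranks `chicago` first with a share of 0.75 and `toronto` second with 0.25.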

===Comparison with human players===

[[File:Watson Jeopardy.jpg|thumb|[[Ken Jennings]], Watson, and [[Brad Rutter]] in their ''[[Jeopardy!]]'' exhibition match]]

Watson's basic working principle is to parse keywords in a clue while searching for related terms as responses. This gives Watson some advantages and disadvantages compared with human ''Jeopardy!'' players.<ref name=cnet /> Watson can read, analyze, and learn from natural language, which gives it the ability to make human-like decisions.<ref>{{Cite journal |last1=Russo-Spena |first1=Tiziana |last2=Mele |first2=Cristina |last3=Marzullo |first3=Marialuisa |date=2018 |title=Practising Value Innovation through Artificial Intelligence: The IBM Watson Case |journal=Journal of Creating Value |language=en |volume=5 |issue=1 |pages=11–24 |doi=10.1177/2394964318805839 |s2cid=56759835 |issn=2394-9643|doi-access=free }}</ref> Nevertheless, Watson has deficiencies in [[natural language understanding|understanding]] the context of the clues. As a result, human players usually generate responses faster than Watson, especially to short clues.<ref name=nytmag /> Watson's programming prevents it from using the popular tactic of buzzing before it is sure of its response.<ref name=nytmag /> However, Watson has consistently better [[reaction time]] on the buzzer once it has generated a response, and is immune to human players' psychological tactics, such as jumping between categories on every clue.<ref name=nytmag /><ref name=jenning />

In a sequence of 20 mock games of ''Jeopardy!'', human participants were able to use the six to seven seconds that Watson needed to hear the clue and decide whether to signal to respond.<ref name=nytmag/> During that time, Watson also had to evaluate the response and determine whether it was sufficiently confident in the result to signal.<ref name=nytmag/> Part of the system used to win the ''Jeopardy!'' contest was the electronic circuitry that receives the "ready" signal and then examines whether Watson's confidence level was high enough to activate the buzzer. Given the speed of this circuitry compared to the speed of human reaction times, Watson's reaction time was faster than that of the human contestants except when the human anticipated (instead of reacted to) the ready signal.<ref name=david>{{cite web |url=https://www.ibm.com/blogs/research/2011/01/how-watson-sees-hears-and-speaks-to-play-jeopardy/ |title=How Watson "sees," "hears," and "speaks" to play Jeopardy! |last=Gondek |first=David |date=January 10, 2011 |work=IBM Research News |access-date=February 21, 2011}}</ref> After signaling, Watson speaks with an electronic voice and gives the responses in ''Jeopardy!''{{'s}} question format.<ref name=nytmag/> Watson's voice was synthesized from recordings that actor Jeff Woodman made for an IBM [[Speech synthesis|text-to-speech]] program in 2004.<ref>{{cite web |last=Avery |first=Lise |date=February 14, 2011 |title=Interview with Actor Jeff Woodman, Voice of IBM's Watson Computer |url=http://www.anythinggoesradio.com/Interviews/JeffWoodman_02_14_11.MP3 |work=Anything Goes!! |format=MP3 |access-date=February 15, 2011 |archive-date=September 21, 2019 |archive-url=https://web.archive.org/web/20190921013845/http://www.anythinggoesradio.com/Interviews/JeffWoodman_02_14_11.MP3 |url-status=live }}</ref>
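The buzzer logic described above combines two conditions: the "ready" signal must have arrived, and Watson's confidence must be high enough. A minimal sketch of that gate follows; the `should_buzz` function and its default threshold of 0.5 are hypothetical, since the article does not say how Watson's confidence cut-off was set.

```python
def should_buzz(ready_signal: bool, confidence: float, threshold: float = 0.5) -> bool:
    """Hypothetical gate: buzz only after the ready signal and only when confident enough.

    `confidence` and `threshold` are assumed to lie in [0, 1]; 0.5 is an arbitrary default.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not ready_signal:
        # Watson did not try to anticipate the signal, so it never buzzes early
        return False
    return confidence >= threshold
```

Keeping the two checks separate mirrors the description: the signal check handles timing, while the threshold decides whether a generated response is worth giving at all.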

The ''Jeopardy!'' staff used different means to notify Watson and the human players when to buzz,<ref name=david /> which was critical in many rounds.<ref name=jenning /> The humans were notified by a light, which took them tenths of a second to [[perception|perceive]].<ref>{{cite web |url=http://www.fon.hum.uva.nl/rob/Courses/InformationInSpeech/CDROM/Literature/LOTwinterschool2006/biae.clemson.edu/bpc/bp/Lab/110/reaction.htm |title=A Literature Review on Reaction Time |first=Robert J. |last=Kosinski |work=Clemson University |year=2008 |access-date=January 10, 2016 |archive-date=March 17, 2016 |archive-url=https://web.archive.org/web/20160317055637/http://www.fon.hum.uva.nl/rob/Courses/InformationInSpeech/CDROM/Literature/LOTwinterschool2006/biae.clemson.edu/bpc/bp/Lab/110/reaction.htm |url-status=live }}</ref>{{sfnp|Baker|2011|p=174}} Watson was notified by an electronic signal and could activate the buzzer within about eight milliseconds.{{sfnp|Baker|2011|p=178}} The humans tried to compensate for the perception delay by anticipating the light,<ref name=strachan /> but the variation in the anticipation time was generally too great to fall within Watson's response time.<ref name=jenning /> Watson did not attempt to anticipate the notification signal.{{sfnp|Baker|2011|p=174}}<ref name=strachan />
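Why anticipation rarely beat Watson can be seen with a small simulation. Assume a human's press time, measured in milliseconds after the ready signal, varies randomly around a target; the human wins only by pressing inside the narrow window between the signal and Watson's roughly eight-millisecond response. The normal distribution, the example means and spreads, and the rule that presses before the signal simply do not count are all simplifying assumptions, not details of the real game hardware.

```python
import random

WATSON_DELAY_MS = 8.0  # approximate buzzer delay reported for Watson


def first_to_buzz(human_press_ms: float, watson_delay_ms: float = WATSON_DELAY_MS) -> str:
    """Times are ms after the ready signal; presses before 0 are treated as not counting."""
    if 0.0 <= human_press_ms < watson_delay_ms:
        return "human"
    return "watson"


def human_win_rate(mean_ms: float, sd_ms: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often a normally distributed press beats Watson."""
    rng = random.Random(seed)
    wins = sum(first_to_buzz(rng.gauss(mean_ms, sd_ms)) == "human" for _ in range(trials))
    return wins / trials
```

Under these assumptions a player aiming at the middle of the window with a spread of 50 ms wins only about 6% of races, while a hypothetical player with a 1 ms spread would win almost every time, which illustrates why the variation in anticipation time mattered more than its average.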

==History==

===Development===

Since [[Deep Blue (chess computer)|Deep Blue]]'s victory over [[Garry Kasparov]] in chess in 1997, IBM had been on the hunt for a new challenge. In 2004, IBM Research manager Charles Lickel, over dinner with coworkers, noticed that the restaurant they were in had fallen silent. He soon discovered the cause of the evening's lull: [[Ken Jennings]], who was then in the middle of his successful 74-game run on ''[[Jeopardy!]]''. Nearly the entire restaurant had piled toward the televisions, mid-meal, to watch ''Jeopardy!''. Intrigued by the quiz show as a possible challenge for IBM, Lickel passed the idea on, and in 2005, IBM Research executive [[Paul Horn (computer scientist)|Paul Horn]] supported Lickel, pushing for someone in his department to take up the challenge of playing ''Jeopardy!'' with an IBM system. Though he initially had trouble finding any research staff willing to take on what looked to be a much more complex challenge than the wordless game of chess, eventually David Ferrucci took him up on the offer.{{sfnp|Baker|2011|pp=6–8}} In competitions managed by the United States government, Watson's predecessor, a system named Piquant, was usually able to respond correctly to only about 35% of clues and often required several minutes to respond.{{sfnp|Baker|2011|p=30}}<ref>{{Cite conference |last1=Radev |first1=Dragomir R. |last2=Prager |first2=John |last3=Samn |first3=Valerie |title=Ranking potential answers to natural language questions |book-title=Proceedings of the 6th Conference on Applied Natural Language Processing |year=2000 |url=http://clair.si.umich.edu/~radev/papers/anlp00.pdf |conference= |access-date=2011-02-23 |archive-date=2011-08-26 |archive-url=https://web.archive.org/web/20110826180039/http://clair.si.umich.edu/~radev/papers/anlp00.pdf |url-status=dead }}</ref><ref>{{Cite conference |last1=Prager |first1=John |last2=Brown |first2=Eric |last3=Coden |first3=Annie |last4=Radev |first4=Dragomir R. 
|title=Question-answering by predictive annotation |book-title=Proceedings, 23rd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval |date=July 2000 |url=http://clair.si.umich.edu/~radev/papers/sigir00.pdf |conference= |access-date=2011-02-23 |archive-date=2011-08-23 |archive-url=https://web.archive.org/web/20110823221004/http://clair.si.umich.edu/~radev/papers/sigir00.pdf |url-status=dead }}</ref> To compete successfully on ''Jeopardy!'', Watson would need to respond in no more than a few seconds, and at that time, the problems posed by the game show were deemed to be impossible to solve.<ref name=nytmag/>

|||

In initial tests run during 2006 by David Ferrucci, the senior manager of IBM's Semantic Analysis and Integration department, Watson was given 500 clues from past ''Jeopardy!'' programs. While the best real-life competitors buzzed in half the time and responded correctly to as many as 95% of clues, Watson's first pass could get only about 15% correct. During 2007, the IBM team was given three to five years and a staff of 15 people to solve the problems.<ref name=nytmag/> [[John E. Kelly III]] succeeded Paul Horn as head of [[IBM Research]] in 2007.<ref name="Leopold2007">{{cite news |title=IBM's Paul Horn retires, Kelly named research chief |last1=Leopold |first1=George |url=https://www.eetimes.com/ibms-paul-horn-retires-kelly-named-research-chief/ |work=[[EE Times]] |date=July 18, 2007 |access-date=May 27, 2020 |archive-date=June 3, 2020 |archive-url=https://web.archive.org/web/20200603054131/https://www.eetimes.com/ibms-paul-horn-retires-kelly-named-research-chief/ |url-status=live }}</ref> ''[[InformationWeek]]'' described Kelly as "the father of Watson" and credited him for encouraging the system to compete against humans on ''Jeopardy!''.<ref name="Babcock">{{cite news |title=IBM Cognitive Colloquium Spotlights Uncovering Dark Data |last1=Babcock |first1=Charles |url=https://www.informationweek.com/cloud/software-as-a-service/ibm-cognitive-colloquium-spotlights-uncovering-dark-data/d/d-id/1322647 |work=[[InformationWeek]] |date=October 14, 2015 |access-date=May 27, 2020 |archive-date=June 3, 2020 |archive-url=https://web.archive.org/web/20200603054133/https://www.informationweek.com/cloud/software-as-a-service/ibm-cognitive-colloquium-spotlights-uncovering-dark-data/d/d-id/1322647 |url-status=live }}</ref> By 2008, the developers had advanced Watson such that it could compete with ''Jeopardy!'' champions.<ref name=nytmag/> By February 2010, Watson could beat human ''Jeopardy!'' contestants on a regular basis.<ref name=networld>{{cite web |last=Brodkin 
|first=Jon |url=http://www.networkworld.com/news/2010/021010-ibm-jeopardy-game.html?hpg1=bn |title=IBM's Jeopardy-playing machine can now beat human contestants |work=[[Network World]] |date=February 10, 2010 |access-date=February 19, 2011 |url-status=dead |archive-url=https://web.archive.org/web/20130603034018/http://www.networkworld.com/news/2010/021010-ibm-jeopardy-game.html?hpg1=bn |archive-date=June 3, 2013 }}</ref>

During the game, Watson had access to 200 million pages of structured and unstructured content consuming four [[terabyte]]s of [[disk storage]]<ref name="contentpages" /> including the full text of the 2011 edition of Wikipedia,<ref name="atlantic20110217">{{cite web|url=https://www.theatlantic.com/technology/archive/2011/02/is-it-time-to-welcome-our-new-computer-overlords/71388/|title=Is It Time to Welcome Our New Computer Overlords?|last=Zimmer|first=Ben|author-link=Ben Zimmer|date=February 17, 2011|work=[[The Atlantic]]|access-date=February 17, 2011|archive-date=August 29, 2018|archive-url=https://web.archive.org/web/20180829072116/https://www.theatlantic.com/technology/archive/2011/02/is-it-time-to-welcome-our-new-computer-overlords/71388/|url-status=live}}</ref> but was not connected to the Internet.<ref>{{cite web|url=https://www.npr.org/2011/01/08/132769575/Can-A-Computer-Become-A-Jeopardy-Champ|title=Can a Computer Become a Jeopardy! Champ?|last=Raz|first=Guy|date=January 28, 2011|work=[[National Public Radio]]|access-date=February 18, 2011|archive-date=February 28, 2011|archive-url=https://web.archive.org/web/20110228020641/http://www.npr.org/2011/01/08/132769575/Can-A-Computer-Become-A-Jeopardy-Champ|url-status=live}}</ref><ref name="nytmag" /> For each clue, Watson's three most probable responses were displayed on the television screen. Watson consistently outperformed its human opponents on the game's signaling device, but had trouble in a few categories, notably those having short clues containing only a few words.{{Citation needed|date=April 2022}}

Although the system is primarily an IBM effort, Watson's development involved faculty and graduate students from [[Rensselaer Polytechnic Institute]], [[Carnegie Mellon University]], [[University of Massachusetts Amherst]], the [[University of Southern California]]'s [[Information Sciences Institute]], the [[University of Texas at Austin]], the [[Massachusetts Institute of Technology]], and the [[University of Trento]],<ref name="AIMagazine">{{cite journal |last=Ferrucci |first=D. |url=http://www.aaai.org/ojs/index.php/aimagazine/article/view/2303/2165 |title=Building Watson: An Overview of the DeepQA Project |journal=AI Magazine |volume=31 |pages=59–79 |number=3 |year=2010 |access-date=February 19, 2011 |display-authors=etal |doi=10.1609/aimag.v31i3.2303 |archive-date=December 28, 2017 |archive-url=https://web.archive.org/web/20171228231436/https://www.aaai.org/ojs/index.php/aimagazine/article/view/2303/2165 |url-status=live |doi-access=free }}</ref> as well as students from [[New York Medical College]].<ref>{{cite journal |title=Medical Students Offer Expertise to IBM's ''Jeopardy!''-Winning Computer Watson as It Pursues a New Career in Medicine |url=http://www.nymc.edu/OfficesAndServices/PublicRelations/Assets/June2012_InTouch.pdf |journal=InTouch |volume=18 |publisher=[[New York Medical College]] |date=June 2012 |page=4 |archive-url=https://web.archive.org/web/20121123173835/http://www.nymc.edu/OfficesAndServices/PublicRelations/Assets/June2012_InTouch.pdf |archive-date=2012-11-23 |url-status=dead}}</ref> Among the team of IBM programmers who worked on Watson was 2001 ''[[Who Wants to Be a Millionaire (American game show)|Who Wants to Be a Millionaire?]]'' top prize winner Ed Toutant, who himself had appeared on ''Jeopardy!'' in 1989 (winning one game).<ref>{{Cite web|url=https://www.statesman.com/news/20181116/millionaire-quiz-whiz-toutant-had-passion-for-trivia-austins-arts-scene|title='Millionaire' quiz whiz Toutant had passion for trivia, Austin's arts 
scene|access-date=2021-09-23|archive-date=2021-09-23|archive-url=https://web.archive.org/web/20210923220949/https://www.statesman.com/news/20181116/millionaire-quiz-whiz-toutant-had-passion-for-trivia-austins-arts-scene|url-status=live}}</ref>

===''Jeopardy!''===

====Preparation====

[[File:IBMWatson.jpg|thumb|Watson demo at an IBM booth at a trade show]]

In 2008, IBM representatives communicated with ''Jeopardy!'' executive producer [[Harry Friedman]] about the possibility of having Watson compete against [[Ken Jennings]] and [[Brad Rutter]], two of the most successful contestants on the show, and the program's producers agreed.<ref name=nytmag/><ref>{{cite news |first=Brian |last=Stelter |author-link=Brian Stelter |title=I.B.M. Supercomputer 'Watson' to Challenge 'Jeopardy' Stars |url=http://mediadecoder.blogs.nytimes.com/2010/12/14/i-b-m-supercomputer-watson-to-challenge-jeopardy-stars/ |newspaper=[[The New York Times]] |date=December 14, 2010 |access-date=December 14, 2010}}</ref> Watson's differences with human players had generated conflicts between IBM and ''Jeopardy!'' staff during the planning of the competition.<ref name=cnet>{{cite web |last=Needleman |first=Rafe |url=http://chkpt.zdnet.com/chkpt/1pcast.roundtable/http://podcast-files.cnet.com/podcast/cnet_roundtable_021811.mp3 |title=Reporters' Roundtable: Debating the robobrains |work=[[CNET]] |date=February 18, 2011 |access-date=February 18, 2011 }}{{dead link|date=July 2024|bot=medic}}{{cbignore|bot=medic}}</ref> IBM repeatedly expressed concerns that the show's writers would exploit Watson's cognitive deficiencies when writing the clues, thereby turning the game into a [[Turing test]]. To address that concern, a third party randomly picked the clues from previously written shows that were never broadcast.<ref name=cnet/> ''Jeopardy!'' staff also raised concerns over Watson's reaction time on the buzzer. Originally, Watson signaled electronically, but show staff requested that it press a button physically, as the human contestants would.{{sfnp|Baker|2011|p=171}} Even with a robotic "finger" pressing the buzzer, Watson remained faster than its human competitors. Ken Jennings noted, "If you're trying to win on the show, the buzzer is all", and that Watson "can knock out a microsecond-precise buzz every single time with little or no variation.
Human reflexes can't compete with computer circuits in this regard."<ref name=jenning>{{cite news |title=Jeopardy! Champ Ken Jennings |newspaper=[[The Washington Post]] |date=February 15, 2011 |url=https://live.washingtonpost.com/jeopardy-ken-jennings.html |access-date=February 15, 2011 |archive-date=February 14, 2011 |archive-url=https://web.archive.org/web/20110214030622/http://live.washingtonpost.com/jeopardy-ken-jennings.html |url-status=live }}</ref><ref name=strachan>{{cite news|first=Alex |last=Strachan |title=For Jennings, it's a man vs. man competition |newspaper=[[The Vancouver Sun]] |date=February 12, 2011 |archive-url=https://web.archive.org/web/20110221235755/http://www.vancouversun.com/entertainment/Jennings%2Bcompetition/4270840/story.html |archive-date=February 21, 2011 |url=https://vancouversun.com/entertainment/Jennings+competition/4270840/story.html |access-date=February 15, 2011 |url-status=dead }}</ref><ref name=flatow>{{cite web |last=Flatow |first=Ira |title=IBM Computer Faces Off Against 'Jeopardy' Champs |work=[[Talk of the Nation]] |publisher=[[National Public Radio]] |date=February 11, 2011 |url=https://www.npr.org/2011/02/11/133686004/IBM-Computer-Faces-Off-Against-Jeopardy-Champs |access-date=February 15, 2011 |archive-date=February 17, 2011 |archive-url=https://web.archive.org/web/20110217061619/http://www.npr.org/2011/02/11/133686004/IBM-Computer-Faces-Off-Against-Jeopardy-Champs |url-status=live }}</ref> [[Stephen L. Baker|Stephen Baker]], a journalist who recorded Watson's development in his book ''Final Jeopardy'', reported that the conflict between IBM and ''Jeopardy!'' became so serious in May 2010 that the competition was almost cancelled.<ref name=cnet/> As part of the preparation, IBM constructed a mock set in a conference room at one of its technology sites to model the one used on ''Jeopardy!''. 
Human players, including former ''Jeopardy!'' contestants, also participated in mock games against Watson with Todd Alan Crain of ''[[The Onion]]'' playing host.<ref name=nytmag/> About 100 test matches were conducted, with Watson winning 65% of the games.<ref>{{cite news |last=Sostek |first=Anya |url=http://www.post-gazette.com/pg/11044/1125163-96.stm |title=Human champs of 'Jeopardy!' vs. Watson the IBM computer: a close match |newspaper=Pittsburgh Post Gazette |date=February 13, 2011 |access-date=February 19, 2011 |archive-date=February 17, 2011 |archive-url=https://web.archive.org/web/20110217141158/http://www.post-gazette.com/pg/11044/1125163-96.stm |url-status=live }}</ref>

To provide a physical presence in the televised games, Watson was represented by an "[[Avatar (computing)|avatar]]" of a globe, inspired by the IBM "smarter planet" symbol. Jennings described the computer's avatar as a "glowing blue ball crisscrossed by 'threads' of thought—42 threads, to be precise",<ref name="threads">{{cite web |url=http://www.slate.com/id/2284721/ |title=My Puny Human Brain |last=Jennings |first=Ken |author-link=Ken Jennings |work=[[Slate (magazine)|Slate]] |publisher=Newsweek Interactive Co. LLC |date=February 16, 2011 |access-date=February 17, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218104345/http://www.slate.com/id/2284721/ |url-status=live }}</ref> and stated that the number of thought threads in the avatar was an [[in-joke]] referencing the [[Phrases from The Hitchhiker's Guide to the Galaxy#Answer to the Ultimate Question of Life, the Universe, and Everything (42)|significance]] of the [[42 (number)|number 42]] in [[Douglas Adams]]' ''[[Hitchhiker's Guide to the Galaxy]]''.<ref name="threads"/> [[Joshua Davis (web designer)|Joshua Davis]], the artist who designed the avatar for the project, explained to Stephen Baker that there were 36 triggerable states that Watson was able to use throughout the game to show its confidence in responding to a clue correctly; he had hoped to find 42, to add another level to the ''Hitchhiker's Guide'' reference, but he was unable to pinpoint enough game states.{{sfnp|Baker|2011|p=117}}

A practice match was recorded on January 13, 2011, and the official matches were recorded on January 14, 2011. All participants maintained secrecy about the outcome until the match was broadcast in February.{{sfnp|Baker|2011|pp=232–258}}

====Practice match====

In a practice match before the press on January 13, 2011, Watson won a 15-question round against Ken Jennings and Brad Rutter with a score of $4,400 to Jennings's $3,400 and Rutter's $1,200, though Jennings and Watson were tied before the final $1,000 question. None of the three players responded incorrectly to a clue.<ref name="PracticeMatch">{{cite web |url=https://www.zdnet.com/article/ibms-watson-wins-jeopardy-practice-round-can-humans-hang/ |title=IBM's Watson wins Jeopardy practice round: Can humans hang? |access-date=January 13, 2011 |last=Dignan |first=Larry |date=January 13, 2011 |work=[[ZDnet]] |archive-date=January 13, 2011 |archive-url=https://web.archive.org/web/20110113191635/http://www.zdnet.com/blog/btl/ibms-watson-wins-jeopardy-practice-round-can-humans-hang/43601 |url-status=live }}</ref>

====First match====

The first round was broadcast February 14, 2011, and the second round, on February 15, 2011. The right to choose the first category had been determined by a draw won by Rutter.<ref name="1920sJeopardy Challenge">{{Cite episode |title=The IBM Challenge Day 1 |series=Jeopardy |series-link=Jeopardy! |airdate=February 14, 2011 |season=27 |number=23}}</ref> Watson, represented by a computer monitor display and artificial voice, responded correctly to the second clue and then selected the fourth clue of the first category, a deliberate strategy to find the Daily Double as quickly as possible.<ref name="Strategy">{{cite web |url=http://ibmresearchnews.blogspot.com/2011/02/knowing-what-it-knows-selected-nuances.html |title=Knowing what it knows: selected nuances of Watson's strategy |last=Lenchner |first=Jon |publisher=[[IBM]] |work=IBM Research News |date=February 3, 2011 |access-date=February 16, 2011 |archive-date=February 16, 2011 |archive-url=https://web.archive.org/web/20110216211148/http://ibmresearchnews.blogspot.com/2011/02/knowing-what-it-knows-selected-nuances.html |url-status=live }}</ref> Watson's guess at the Daily Double location was correct. At the end of the first round, Watson was tied with Rutter at $5,000; Jennings had $2,000.<ref name="1920sJeopardy Challenge" />

Watson's performance was characterized by some quirks. In one instance, Watson repeated a reworded version of an incorrect response offered by Jennings. (Jennings said "What are the '20s?" in reference to the 1920s. Then Watson said "What is 1920s?") Because Watson could not recognize other contestants' responses, it did not know that Jennings had already given the same response. In another instance, Watson was initially given credit for a response of "What is a leg?" after Jennings incorrectly responded "What is: he only had one hand?" to a clue about [[George Eyser]] (the correct response was, "What is: he's missing a leg?"). Because Watson, unlike a human, could not have been responding to Jennings's mistake, it was decided that this response was incorrect. The broadcast version of the episode was edited to omit Trebek's original acceptance of Watson's response.<ref>{{cite web |url=https://arstechnica.com/media/news/2011/02/ibms-watson-tied-for-1st-in-jeopardy-almost-sneaks-wrong-answer-by-trebek.ars |title=Jeopardy: IBM's Watson almost sneaks wrong answer by Trebek |last=Johnston |first=Casey |work=Ars Technica |date=February 15, 2011 |access-date=February 15, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218025702/http://arstechnica.com/media/news/2011/02/ibms-watson-tied-for-1st-in-jeopardy-almost-sneaks-wrong-answer-by-trebek.ars |url-status=live }}</ref> Watson also demonstrated complex wagering strategies on the Daily Doubles, with one bet at $6,435 and another at $1,246.<ref name="Computer crushes the competition on 'Jeopardy!'"/> Gerald Tesauro, one of the IBM researchers who worked on Watson, explained that Watson's wagers were based on its confidence level for the category and a complex [[Regression analysis|regression model]] called the Game State Evaluator.<ref>{{cite web |last=Tesauro |first=Gerald |url=http://ibmresearchnews.blogspot.com/2011/02/watsons-wagering-strategies.html |title=Watson's wagering 
strategies |publisher=[[IBM]] |work=IBM Research News |date=February 13, 2011 |access-date=February 18, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218174326/http://ibmresearchnews.blogspot.com/2011/02/watsons-wagering-strategies.html |url-status=live }}</ref>

Watson took a commanding lead in Double Jeopardy!, correctly responding to both Daily Doubles. Watson responded to the second Daily Double correctly with a 32% confidence score.<ref name="Computer crushes the competition on 'Jeopardy!'">{{cite web |archive-url=https://web.archive.org/web/20110219023019/https://www.google.com/hostednews/ap/article/ALeqM5jwVBxDQvVKEwk_czuv8Q4jxdU1Sg?docId=2e3e918f552b4599b013b4cc473d96af |archive-date=February 19, 2011 |url=https://www.google.com/hostednews/ap/article/ALeqM5jwVBxDQvVKEwk_czuv8Q4jxdU1Sg?docId=2e3e918f552b4599b013b4cc473d96af |title=Computer crushes the competition on 'Jeopardy!' |agency=Associated Press |date=February 15, 2011 |access-date=February 19, 2011}}</ref>

However, during the Final Jeopardy! round, Watson was the only contestant to miss the clue in the category U.S. Cities ("Its [[O'Hare International Airport|largest airport]] was named for a [[Edward O'Hare|World War II hero]]; its [[Midway International Airport|second largest]], for a [[Battle of Midway|World War II battle]]"). Rutter and Jennings gave the correct response of Chicago, but Watson's response was "What is [[Toronto]]?????" with five question marks appended indicating a lack of confidence.<ref name="Computer crushes the competition on 'Jeopardy!'"/><ref name="TO">{{cite web |author=Staff |url=https://www.ctvnews.ca/ibm-s-computer-wins-jeopardy-but-toronto-1.608022 |title=IBM's computer wins 'Jeopardy!' but... Toronto? |work=[[CTV News]] |date=February 15, 2011 |access-date=February 15, 2011 |archive-date=November 27, 2012 |archive-url=https://web.archive.org/web/20121127193239/http://www.ctvnews.ca/ibm-s-computer-wins-jeopardy-but-toronto-1.608022 |url-status=live }}</ref><ref name="TOinUS">{{cite web |archive-url=https://web.archive.org/web/20110220044730/http://www.theglobeandmail.com/news/world/americas/for-watson-jeopardy-victory-was-elementary/article1910735/ |archive-date=February 20, 2011 |url=https://www.theglobeandmail.com/news/world/americas/for-watson-jeopardy-victory-was-elementary/article1910735/ |title=For Watson, Jeopardy! victory was elementary |last1=Robertson |first1=Jordan |last2=Borenstein |first2=Seth |agency=[[The Associated Press]] |newspaper=[[The Globe and Mail]] |date=February 16, 2011 |access-date=February 17, 2011 }}</ref> Ferrucci offered reasons why Watson would appear to have guessed a Canadian city: categories only weakly suggest the type of response desired, the phrase "U.S. 
city" did not appear in the question, there are [[Toronto (disambiguation)#United States|cities named Toronto in the U.S.]], and Toronto in Ontario has an [[American League]] baseball team.<ref>{{cite web |last=Hamm |first=Steve |url=http://asmarterplanet.com/blog/2011/02/watson-on-jeopardy-day-two-the-confusion-over-an-airport-clue.html |title=Watson on Jeopardy! Day Two: The Confusion over an Airport Clue |work=A Smarter Planet Blog |date=February 15, 2011 |access-date=February 21, 2011 |archive-date=October 24, 2011 |archive-url=https://web.archive.org/web/20111024233808/http://asmarterplanet.com/blog/2011/02/watson-on-jeopardy-day-two-the-confusion-over-an-airport-clue.html |url-status=live }}</ref> [[Chris Welty]], who also worked on Watson, suggested that it may not have been able to correctly parse the second part of the clue, "its second largest, for a World War II battle" (which was not a standalone clause despite following a [[semicolon]], and required context to understand that it was referring to a second-largest ''airport'').<ref name="ArsTechnica2">{{cite web |url=https://arstechnica.com/media/news/2011/02/creators-watson-has-no-speed-advantage-as-it-crushes-humans-in-jeopardy.ars |title=Creators: Watson has no speed advantage as it crushes humans in ''Jeopardy'' |first=Casey |last=Johnston |work=Ars Technica |date=February 15, 2011 |access-date=February 21, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218231452/http://arstechnica.com/media/news/2011/02/creators-watson-has-no-speed-advantage-as-it-crushes-humans-in-jeopardy.ars |url-status=live }}</ref> [[Eric Nyberg]], a professor at Carnegie Mellon University and a member of the development team, stated that the error occurred because Watson did not possess the comparative knowledge to discard that potential response as not viable.<ref name="TOinUS" /> Although not displayed to the audience as with non-Final Jeopardy!
questions, Watson's second choice was Chicago. Both Toronto and Chicago were well below Watson's confidence threshold, at 14% and 11% respectively. Watson wagered only $947 on the question.<ref>{{cite web | url=https://www.youtube.com/watch?v=lI-M7O_bRNg | title=IBM Watson: Final Jeopardy! And the Future of Watson | website=YouTube | date=16 February 2011 }}</ref>

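The role of a confidence threshold can be illustrated with a minimal sketch. It is not IBM's implementation: it assumes, hypothetically, that every candidate response carries a single confidence value between 0 and 1 and that one fixed buzz threshold applies (the value 0.50 and the names <code>Candidate</code>, <code>BUZZ_THRESHOLD</code> and <code>choose_response</code> are invented for illustration). In regular play the sketch responds only when the top candidate clears the threshold; in Final Jeopardy! a response is mandatory, so it gives the top candidate anyway and flags the low confidence with extra question marks, as Watson did with "Toronto?????".

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical buzz threshold; Watson's actual threshold is not stated in this article.
BUZZ_THRESHOLD = 0.50


@dataclass
class Candidate:
    response: str
    confidence: float  # assumed to lie in [0, 1]


def choose_response(candidates: list[Candidate], final_jeopardy: bool) -> Optional[str]:
    """Return the response to give, or None to stay silent (illustrative only)."""
    if not candidates:
        return None
    # Rank candidates from most to least confident.
    ranked = sorted(candidates, key=lambda c: c.confidence, reverse=True)
    best = ranked[0]
    if best.confidence >= BUZZ_THRESHOLD:
        return f"What is {best.response}?"
    if final_jeopardy:
        # A Final Jeopardy! response is mandatory, so answer anyway
        # and signal the low confidence with extra question marks.
        return f"What is {best.response}?????"
    # In regular play, a low-confidence clue is simply not buzzed in on.
    return None


clue = [Candidate("Toronto", 0.14), Candidate("Chicago", 0.11)]
print(choose_response(clue, final_jeopardy=True))   # What is Toronto?????
print(choose_response(clue, final_jeopardy=False))  # None
```

With the 14% and 11% figures reported above, both candidates fall below the assumed threshold, so the sketch would stay silent in regular play but is forced to give "Toronto" in Final Jeopardy!.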

The game ended with Jennings at $4,800, Rutter at $10,400, and Watson at $35,734.<ref name="Computer crushes the competition on 'Jeopardy!'"/>

====Second match====

During the introduction, Trebek (a Canadian native) joked that he had learned Toronto was a U.S. city, and Watson's error in the first match prompted an IBM engineer to wear a [[Toronto Blue Jays]] jacket to the recording of the second match.<ref name="Trebekintro">{{cite web|archive-url=https://web.archive.org/web/20110220044949/http://www.vancouversun.com/business/technology/Computer%2Bcreams%2Bhuman%2BJeopardy%2Bchampions/4300293/story.html |archive-date=February 20, 2011 |url=https://vancouversun.com/business/technology/Computer+creams+human+Jeopardy+champions/4300293/story.html |title=Computer creams human Jeopardy! champions |last=Oberman |first=Mira |agency=[[Agence France-Presse]] |work=[[Vancouver Sun]] |date=February 17, 2011 |access-date=February 17, 2011 |url-status=dead }}</ref>

In the first round, Jennings was finally able to choose a Daily Double clue,<ref>{{cite web |url=https://arstechnica.com/media/news/2011/02/bug-lets-humans-grab-daily-double-as-watson-triumphs-on-jeopardy.ars |title=Bug lets humans grab Daily Double as Watson triumphs on Jeopardy |last=Johnston |first=Casey |work=Ars Technica |date=February 17, 2011 |access-date=February 21, 2011 |archive-date=February 21, 2011 |archive-url=https://web.archive.org/web/20110221032847/http://arstechnica.com//media//news//2011//02//bug-lets-humans-grab-daily-double-as-watson-triumphs-on-jeopardy.ars |url-status=live }}</ref> while in the Double Jeopardy! round Watson responded incorrectly to a Daily Double clue for the first time.<ref name=forbes>{{cite web |url=https://blogs.forbes.com/bruceupbin/2011/02/16/watson-wins-it-all-with-367-bet/ |title=IBM's Supercomputer Watson Wins It All With $367 Bet |last=Upbin |first=Bruce |work=[[Forbes]] |date=February 17, 2011 |access-date=February 21, 2011 |archive-date=February 21, 2011 |archive-url=https://web.archive.org/web/20110221100903/http://blogs.forbes.com/bruceupbin/2011/02/16/watson-wins-it-all-with-367-bet/ |url-status=live }}</ref> After the first round, Watson was in second place for the first time in the competition, after Rutter and Jennings briefly managed to increase their scores before Watson could respond.<ref name=forbes/><ref>{{cite web |last=Oldenburg |first=Ann |title=Ken Jennings: 'My puny brain' did just fine on 'Jeopardy!' |url=http://content.usatoday.com/communities/entertainment/post/2011/02/ken-jennings-my-puny-brain-did-just-fine-on-jeopardy-/1 |work=[[USA Today]] |date=February 17, 2011 |access-date=February 21, 2011 |archive-date=February 20, 2011 |archive-url=https://web.archive.org/web/20110220043823/http://content.usatoday.com/communities/entertainment/post/2011/02/ken-jennings-my-puny-brain-did-just-fine-on-jeopardy-/1 |url-status=live }}</ref> Nonetheless, Watson won the match with a combined two-game score of $77,147, ahead of Jennings with $24,000 and Rutter with $21,600.<ref>{{cite episode |title=Show 6088 – The IBM Challenge, Day 2 |series=Jeopardy! |date=February 16, 2011 |network=Syndicated}}</ref>

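The final figures are two-game totals, each player's first-match score plus their second-match score. The short sketch below recovers the second-match scores by subtraction, using only the first-match and combined figures given in this section; the dictionary names are illustrative.

```python
# Scores reported in this section, in US dollars.
first_match = {"Watson": 35_734, "Jennings": 4_800, "Rutter": 10_400}
combined = {"Watson": 77_147, "Jennings": 24_000, "Rutter": 21_600}

# Second-match score = combined two-game total minus first-match score.
second_match = {name: combined[name] - first_match[name] for name in combined}

for name, score in second_match.items():
    print(f"{name}: ${score:,}")
# Watson: $41,413
# Jennings: $19,200
# Rutter: $11,200
```

Because the match was decided on the combined total, Watson's lead after the first match carried over into the second.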

====Final outcome====

The prizes for the competition were $1 million for first place (Watson), $300,000 for second place (Jennings), and $200,000 for third place (Rutter). As promised, IBM donated 100% of Watson's winnings to charity, with 50% of those winnings going to [[World Vision]] and 50% going to [[World Community Grid]].<ref>{{cite web |url=http://www.worldcommunitygrid.org/about_us/viewNewsArticle.do?articleId=148 |title=World Community Grid to benefit from Jeopardy! competition |work=[[World Community Grid]] |date=February 4, 2011 |access-date=February 19, 2011 |archive-date=January 14, 2012 |archive-url=https://web.archive.org/web/20120114010952/http://www.worldcommunitygrid.org/about_us/viewNewsArticle.do?articleId=148 |url-status=live }}</ref> Similarly, Jennings and Rutter donated 50% of their winnings to their respective charities.<ref>{{cite web |url=http://www-03.ibm.com/press/us/en/pressrelease/33373.wss |title=Jeopardy! And IBM Announce Charities To Benefit From Watson Competition |work=IBM Corporation |date=January 13, 2011 |access-date=February 19, 2011 |archive-date=November 10, 2021 |archive-url=https://web.archive.org/web/20211110221256/https://newsroom.ibm.com/ |url-status=dead }}</ref>

In acknowledgement of IBM and Watson's achievements, Jennings made an additional remark in his Final Jeopardy! response: "I for one welcome our new computer overlords", paraphrasing [[overlord meme|a joke]] from ''The Simpsons''.<ref name="overlord">{{cite web |url=https://www.bbc.co.uk/news/technology-12491688 |title=IBM's Watson supercomputer crowned Jeopardy king |work=BBC News |date=February 17, 2011 |access-date=February 17, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218052011/http://www.bbc.co.uk/news/technology-12491688 |url-status=live }}</ref><ref name="overlord2">{{cite news |url=https://www.nytimes.com/2011/02/17/science/17jeopardy-watson.html |title=Computer Wins on 'Jeopardy!': Trivial, It's Not |last=Markoff |first=John |newspaper=The New York Times |location=[[Yorktown Heights, New York]] |date=February 16, 2011 |access-date=February 17, 2011 |archive-date=October 22, 2014 |archive-url=https://web.archive.org/web/20141022023202/http://www.nytimes.com/2011/02/17/science/17jeopardy-watson.html |url-status=live }}</ref> Jennings later wrote an article for ''[[Slate (magazine)|Slate]]'', in which he stated: <blockquote>IBM has bragged to the media that Watson's question-answering skills are good for more than annoying Alex Trebek. The company sees a future in which fields like [[medical diagnosis]], [[business analytics]], and [[technical support|tech support]] are automated by question-answering software like Watson. Just as factory jobs were eliminated in the 20th century by new assembly-line robots, Brad and I were the first [[Knowledge worker|knowledge-industry workers]] put out of work by the new generation of 'thinking' machines. 'Quiz show contestant' may be the first job made redundant by Watson, but I'm sure it won't be the last.<ref name="threads" /></blockquote>

====Philosophy====

Philosopher [[John Searle]] argues that Watson, despite its impressive capabilities, cannot actually think.<ref name=SearleWSJ>{{cite news |url=https://www.wsj.com/articles/SB10001424052748703407304576154313126987674 |first=John |last=Searle |title=Watson Doesn't Know It Won on 'Jeopardy!' |newspaper=The Wall Street Journal |date=February 23, 2011 |access-date=July 26, 2011 |archive-date=November 10, 2021 |archive-url=https://web.archive.org/web/20211110221317/https://www.wsj.com/articles/SB10001424052748703407304576154313126987674 |url-status=live }}</ref> Drawing on his [[Chinese room]] [[thought experiment]], Searle claims that Watson, like other computational machines, is capable only of manipulating symbols, but has no ability to understand the meaning of those symbols; however, Searle's experiment has its [[Chinese room#Replies|detractors]].<ref>{{cite news |last=Lohr |first=Steve |url=https://www.nytimes.com/2011/12/06/science/creating-artificial-intelligence-based-on-the-real-thing.html |title=Creating AI based on the real thing |date=December 5, 2011 |newspaper=[[The New York Times]] |access-date=February 26, 2017 |archive-date=November 10, 2021 |archive-url=https://web.archive.org/web/20211110221227/https://www.nytimes.com/2011/12/06/science/creating-artificial-intelligence-based-on-the-real-thing.html |url-status=live }}</ref>

====Match against members of the United States Congress====

On February 28, 2011, Watson played an untelevised exhibition match of ''Jeopardy!'' against members of the [[United States House of Representatives]]. In the first round, [[Rush D. Holt, Jr.]] (D-NJ, a former ''Jeopardy!'' contestant), who was challenging the computer with [[Bill Cassidy]] (R-LA, later Senator from Louisiana), led with Watson in second place. However, across all matches, the final score was $40,300 for Watson and $30,000 for the congressional players combined.<ref name="House Match">{{cite web |archive-url=https://web.archive.org/web/20110307032033/https://www.google.com/hostednews/ap/article/ALeqM5jQVgg2q1jaW9UdEZn-5s-D2x-sEw?docId=b8984784c9d4454db01523b8d99b6d8e |archive-date=March 7, 2011 |url=https://www.google.com/hostednews/ap/article/ALeqM5jQVgg2q1jaW9UdEZn-5s-D2x-sEw?docId=b8984784c9d4454db01523b8d99b6d8e |title=NJ congressman tops 'Jeopardy' computer Watson |agency=Associated Press |date=March 2, 2011 |access-date=March 2, 2011}}</ref>

IBM's Christopher Padilla said of the match, "The technology behind Watson represents a major advancement in computing. In the data-intensive environment of government, this type of technology can help organizations make better decisions and improve how government helps its citizens."<ref name="House Match"/>

==Current and future applications==

{{Advert section|date=April 2019}}

According to IBM, "The goal is to have computers start to interact in natural human terms across a range of applications and processes, understanding the questions that humans ask and providing answers that humans can understand and justify."<ref name="networld" /> Robert C. Weber, IBM's [[general counsel]], has suggested that Watson may be used for legal research.<ref>{{cite web |first=Robert C. |last=Weber |url=http://www.law.com/jsp/nlj/PubArticleNLJ.jsp?id=1202481662966&slreturn=1&hbxlogin=1 |title=Why 'Watson' matters to lawyers |work=The National Law Journal |date=February 14, 2011 |access-date=February 18, 2011 |archive-date=November 10, 2021 |archive-url=https://web.archive.org/web/20211110221407/https://www.law.com/nationallawjournal/almID/1202481662966%26slreturn%3D1%26hbxlogin%3D1/?slreturn=20211010171407 |url-status=live }}</ref> The company also intends to use Watson in other information-intensive fields, such as telecommunications, financial services, and government.<ref>{{cite web |last=Nay |first=Chris |url=http://asmarterplanet.com/blog/2011/09/putting-watson-to-work-interview-with-ibm-gm-of-watson-solutions-manoj-saxena.html |title=Putting Watson to work: Interview with GM of Watson Solutions Manoj Saxena |date=September 6, 2011 |work=Smarter Planet Blog |publisher=IBM |access-date=November 12, 2013 |archive-date=November 12, 2013 |archive-url=https://web.archive.org/web/20131112173110/http://asmarterplanet.com/blog/2011/09/putting-watson-to-work-interview-with-ibm-gm-of-watson-solutions-manoj-saxena.html |url-status=live }}</ref>

Watson is based on commercially available IBM Power 750 servers that have been marketed since February 2010.<ref name="nytmag" />

Commentator Rick Merritt said that "there's another really important reason why it is strategic for IBM to be seen very broadly by the American public as a company that can tackle tough computer problems. A big slice of [IBM's profit] comes from selling to the U.S. government some of the biggest, most expensive systems in the world."<ref name="times">{{cite web |first=Rick |last=Merritt |url=http://www.eetimes.com/electronics-news/4213145/IBM-playing-Jeopardy-with-tax-dollars |title=IBM playing Jeopardy with tax dollars |work=EE Times |date=February 14, 2011 |access-date=February 19, 2011 |archive-date=February 18, 2011 |archive-url=https://web.archive.org/web/20110218174023/http://www.eetimes.com/electronics-news/4213145/IBM-playing-Jeopardy-with-tax-dollars |url-status=live }}</ref>

In 2013, it was reported that three companies were working with IBM to create apps embedded with Watson technology. Fluid is developing an app for retailers, one called "The North Face", which is designed to provide advice to online shoppers. Welltok is developing an app designed to give people advice on ways to engage in activities to improve their health. MD Buyline is developing an app for the purpose of advising medical institutions on equipment procurement decisions.<ref>{{cite web |url=https://www.internetretailer.com/2013/12/03/ibms-watson-computer-helps-shoppers-new-app |title=IBM's Watson computer helps shoppers via a new app |first=Amy |last=Dusto |date=December 3, 2013 |work=Internet Retailer |access-date=January 10, 2016 |archive-date=June 12, 2016 |archive-url=https://web.archive.org/web/20160612025939/https://www.internetretailer.com/2013/12/03/ibms-watson-computer-helps-shoppers-new-app |url-status=live }}</ref><ref>{{cite web |url=http://mobihealthnews.com/27414/with-watson-api-launch-ibm-turns-to-welltok-for-patients-md-buyline-for-docs/ |title=With Watson API launch, IBM turns to WellTok for patients, MD Buyline for docs |first=Jonah |last=Comstock |date=November 15, 2013 |work=MobiHealthNews |access-date=January 10, 2016 |archive-date=March 4, 2016 |archive-url=https://web.archive.org/web/20160304084226/http://mobihealthnews.com/27414/with-watson-api-launch-ibm-turns-to-welltok-for-patients-md-buyline-for-docs/ |url-status=live }}</ref> |

In November 2013, IBM announced it would make Watson's [[API]] available to software application providers, enabling them to build apps and services that embed Watson's capabilities. To build out its base of partners who create applications on the Watson platform, IBM consulted a network of venture capital firms, which advised IBM on which of their portfolio companies might be a logical fit for what IBM calls the Watson Ecosystem. At the time of the announcement, roughly 800 organizations and individuals had signed up with IBM with an interest in creating applications that could use the Watson platform.<ref>{{cite web |url=https://www.forbes.com/sites/bruceupbin/2013/11/14/ibm-opens-up-watson-as-a-web-service/ |title=IBM Opens Up Its Watson Cognitive Computer For Developers Everywhere |first=Bruce |last=Upbin |date=November 14, 2013 |work=Forbes |access-date=January 10, 2016 |archive-date=January 26, 2016 |archive-url=https://web.archive.org/web/20160126185213/http://www.forbes.com/sites/bruceupbin/2013/11/14/ibm-opens-up-watson-as-a-web-service/ |url-status=live }}</ref>

On January 30, 2013, it was announced that [[Rensselaer Polytechnic Institute]] would receive a successor version of Watson, which would be housed at the institute's technology park and be available to researchers and students.<ref>{{cite web |url=http://news.rpi.edu/luwakkey/3126 |title=IBM's Watson to Join Research Team at Rensselaer |work=Rensselaer Polytechnic Institute |date=January 30, 2013 |access-date=October 1, 2013 |archive-date=October 4, 2013 |archive-url=https://web.archive.org/web/20131004215358/http://news.rpi.edu/luwakkey/3126 |url-status=live }}</ref> By summer 2013, Rensselaer had become the first university to receive a Watson computer.<ref>{{cite web |url=http://www.cicu.org/sites/default/files/publication_reports/2013ISMagazine_FINAL_0.pdf |title=The Independent Sector: Cultural, Economic and Social Contributions of New York's 100+, Not-for-Profit Colleges and Universities |work=Commission on Independent Colleges and Universities |date=Summer 2013 |page=12 |access-date=October 1, 2013 |archive-date=October 4, 2013 |archive-url=https://web.archive.org/web/20131004213932/http://www.cicu.org/sites/default/files/publication_reports/2013ISMagazine_FINAL_0.pdf |url-status=live }}</ref> |

On February 6, 2014, it was reported that IBM planned to invest $100 million in a 10-year initiative to use Watson and other IBM technologies to help countries in Africa address development problems, beginning with healthcare and education.<ref>{{cite web |url=https://www.reuters.com/article/us-ibm-africa-idUSBREA1507H20140206 |title=IBM starts rolling out Watson supercomputer in Africa |first=Tim |last=Cocks |date=February 6, 2014 |work=Reuters |access-date=January 10, 2016 |archive-date=March 8, 2016 |archive-url=https://web.archive.org/web/20160308023823/http://www.reuters.com/article/us-ibm-africa-idUSBREA1507H20140206 |url-status=live }}</ref>

On June 3, 2014, three new Watson Ecosystem partners were chosen from more than 400 business concepts submitted by teams spanning 18 industries from 43 countries. "These bright and enterprising organizations have discovered innovative ways to apply Watson that can deliver demonstrable business benefits", said Steve Gold, vice president, IBM Watson Group. The winners were Majestyk Apps with their adaptive educational platform, FANG (Friendly Anthropomorphic Networked Genome);<ref>{{cite web |url=http://majestykapps.com/blog/ibm-watson-finalists/ |title=IBM Watson Mobile Developers Challenge Finalists: Majestyk |work=Majestyk Apps |first=Donald |last=Coolidge |date=May 29, 2014 |access-date=January 10, 2016 |archive-date=September 19, 2015 |archive-url=https://web.archive.org/web/20150919122811/http://majestykapps.com/blog/ibm-watson-finalists/ |url-status=live }}</ref><ref>{{cite web |url=https://www.flickr.com/photos/ibm_media/14359875853/ |title=Majestyk Apps – An IBM Watson Mobile Developer Challenge Winner |date=June 3, 2014 |work=Flickr |access-date=January 10, 2016 |archive-date=September 27, 2015 |archive-url=https://web.archive.org/web/20150927103308/https://www.flickr.com/photos/ibm_media/14359875853/ |url-status=live }}</ref> Red Ant with their retail sales trainer;<ref>{{cite web |url=https://www.flickr.com/photos/ibm_media/14153092500/ |title=Red Ant – An IBM Watson Mobile Developer Challenge Winner |date=June 3, 2014 |work=Flickr |access-date=January 10, 2016 |archive-date=December 28, 2015 |archive-url=https://web.archive.org/web/20151228210206/https://www.flickr.com/photos/ibm_media/14153092500 |url-status=live }}</ref> and GenieMD<ref>{{cite web |url=https://www.flickr.com/photos/ibm_media/14152996019/ |title=GenieMD – An IBM Watson Mobile Developer Challenge Winner |date=June 3, 2014 |work=Flickr |access-date=January 10, 2016 |archive-date=September 22, 2015 
|archive-url=https://web.archive.org/web/20150922123835/https://www.flickr.com/photos/ibm_media/14152996019 |url-status=live }}</ref> with their medical recommendation service.<ref>{{cite web |url=http://www-03.ibm.com/press/us/en/pressrelease/44057.wss |title=IBM Announces Watson Mobile Developer Challenge Winners |date=June 3, 2014 |work=IBM News |access-date=January 10, 2016 |archive-date=January 19, 2016 |archive-url=https://web.archive.org/web/20160119022211/https://www-03.ibm.com/press/us/en/pressrelease/44057.wss |url-status=dead }}</ref> |

On July 9, 2014, [[Genesys Telecommunications Laboratories]] announced plans to integrate Watson to improve its customer experience platform, citing the sheer volume of customer data to be analyzed.<ref>{{cite web |url=http://www.enterpriseappstoday.com/crm/genesys-to-put-ibms-watson-to-work.html |title=Genesys to Put IBM's Watson to Work |work=Enterprise Apps Today |first=Ann |last=All |date=July 9, 2014 |access-date=January 10, 2016 |archive-date=March 7, 2016 |archive-url=https://web.archive.org/web/20160307104326/http://www.enterpriseappstoday.com/crm/genesys-to-put-ibms-watson-to-work.html |url-status=live }}</ref>

Watson has been integrated with databases, including that of ''Bon Appétit'' magazine, to power a recipe-generating platform.<ref>{{cite web |url=http://www.fastcodesign.com/3032501/ibms-watson-is-now-a-cooking-app-with-infinite-recipes |title=IBM's Watson Is Now A Cooking App With Infinite Recipes |first=Mark |last=Wilson |work=fastcodesign.com |date=June 30, 2014 |access-date=January 10, 2016 |archive-date=February 28, 2016 |archive-url=https://web.archive.org/web/20160228232611/http://www.fastcodesign.com/3032501/ibms-watson-is-now-a-cooking-app-with-infinite-recipes |url-status=live }}</ref>

Watson is being used by Decibel, a music discovery startup, in its app MusicGeek, which uses the supercomputer to provide music recommendations to its users. Watson has also been used in the hospitality industry: Go Moment uses Watson for its Rev1 app, which gives hotel staff a way to respond quickly to guests' questions.<ref>{{cite web |last=Hardawar |first=Devindra |title=IBM's big bet on Watson is paying off with more apps and DNA analysis |url=https://www.engadget.com/2015/05/05/ibm-watson-apps-dna/ |work=Engadget |date=5 May 2015 |access-date=July 2, 2015 |archive-date=July 9, 2015 |archive-url=https://web.archive.org/web/20150709024739/http://www.engadget.com/2015/05/05/ibm-watson-apps-dna/ |url-status=live }}</ref> Arria NLG has built an app that helps energy companies stay within regulatory guidelines by making it easier for managers to make sense of thousands of pages of legal and technical jargon.

OmniEarth, Inc. uses Watson computer vision services to analyze satellite and aerial imagery, along with other municipal data, to infer water usage on a property-by-property basis, helping districts in California improve water conservation efforts.<ref>{{cite web |last=Griggs |first=Mary Beth |url=http://www.popsci.com/how-watson-supercomputer-can-see-water-waste-in-drought-stricken-california |title=IBM Watson can help find water wasters in drought-stricken California |date=May 20, 2016 |work=Popular Science |access-date=July 4, 2016 |archive-date=June 23, 2016 |archive-url=https://web.archive.org/web/20160623223951/http://www.popsci.com/how-watson-supercomputer-can-see-water-waste-in-drought-stricken-california |url-status=live }}</ref> |

In September 2016, Condé Nast started using Watson to help build and strategize social influencer campaigns for brands. Using software built by IBM and Influential, Condé Nast's clients can identify which influencers' demographics, personality traits, and other attributes best align with a marketer and its target audience.<ref>{{Cite news |url=http://www.adweek.com/news/technology/cond-nast-has-started-using-ibms-watson-find-influencers-brands-173243 |title=Condé Nast Has Started Using IBM's Watson to Find Influencers for Brands |author=Marty Swant |publisher=Adweek |date=6 September 2016 |access-date=8 September 2016 |archive-date=8 September 2016 |archive-url=https://web.archive.org/web/20160908113129/http://www.adweek.com/news/technology/cond-nast-has-started-using-ibms-watson-find-influencers-brands-173243 |url-status=live }}</ref>

In February 2017, Rare Carat, a New York City-based startup and [[e-commerce]] platform for buying diamonds and diamond rings, introduced an IBM Watson-powered [[chatbot]] called "Rocky" to guide novice buyers through the process of purchasing a diamond. As part of the IBM Global Entrepreneur Program, Rare Carat received assistance from IBM in developing the Rocky chatbot.<ref>{{cite news |date=February 28, 2017 |title=Rare Carat Releases World's First Artificial Intelligence Jeweler Using IBM Watson Technology |url=https://www.rarecarat.com/blog/press-release-rare-carat-releases-world-s-first-artificial-intelligence-jeweler-using-ibm-watson-technology |agency=PRNewswire |access-date=August 22, 2017 |archive-date=August 22, 2017 |archive-url=https://web.archive.org/web/20170822100744/https://www.rarecarat.com/blog/press-release-rare-carat-releases-world-s-first-artificial-intelligence-jeweler-using-ibm-watson-technology |url-status=live }}</ref><ref>{{cite news |date=February 15, 2017 |title=Rare Carat's Watson-powered chatbot will help you put a diamond ring on it |url=https://techcrunch.com/2017/02/15/rare-carats-watson-powered-chat-bot-will-help-you-put-a-diamond-ring-on-it/ |agency=TechCrunch |access-date=August 22, 2017 |archive-date=August 22, 2017 |archive-url=https://web.archive.org/web/20170822133420/https://techcrunch.com/2017/02/15/rare-carats-watson-powered-chat-bot-will-help-you-put-a-diamond-ring-on-it/ |url-status=live }}</ref><ref>{{cite news |date=March 10, 2017 |title=10 ways you may have already used IBM Watson |url=https://venturebeat.com/2017/03/10/10-ways-you-may-have-already-used-ibm-watson/ |agency=VentureBeat |access-date=August 22, 2017 |archive-date=August 22, 2017 |archive-url=https://web.archive.org/web/20170822101320/https://venturebeat.com/2017/03/10/10-ways-you-may-have-already-used-ibm-watson/ |url-status=live }}</ref>

In May 2017, IBM partnered with the [[Pebble Beach Company]] to use Watson as a [[concierge]].<ref>{{Cite web|url=https://venturebeat.com/2017/05/09/ibm-watson-to-help-pebble-beach-create-a-virtual-concierge-for-guests/|title=IBM Watson to help Pebble Beach create a virtual concierge for guests|date=2017-05-09|website=VentureBeat|access-date=2017-05-10|archive-date=2017-05-09|archive-url=https://web.archive.org/web/20170509175332/https://venturebeat.com/2017/05/09/ibm-watson-to-help-pebble-beach-create-a-virtual-concierge-for-guests/|url-status=live}}</ref> Watson technology was added to an app developed by Pebble Beach and used to guide visitors around the resort. The mobile app was designed by IBM iX, is hosted on the IBM Cloud, and uses Watson's Conversation application programming interface.

In November 2017, the exhibit ''Experience Voices of Another Time'' opened at the National Museum of Anthropology in Mexico City, using IBM Watson to offer visitors an alternative way to experience the museum.<ref>{{cite AV media |date=October 31, 2017 |title=IBM Watson: artificial intelligence arrives at the Museum of Anthropology |via=Youtube |url=https://www.youtube.com/watch?v=e52xa2s5HkM | archive-url=https://web.archive.org/web/20190921013845/https://www.youtube.com/watch?v=e52xa2s5HkM| archive-date=2019-09-21 | url-status=dead|access-date=May 11, 2018 |publisher=Aban Tech }}</ref>

===Healthcare=== |

{{See also|IBM Watson Health}} |