Response bias: Difference between revisions

As brilliantly written as this is, it reads more like an essay rather than an encyclopedic article. Various fixes mainly with piped links and straight quotes, superb writing, though |

mNo edit summary |

||

| (78 intermediate revisions by 51 users not shown) | |||

| Line 1: | Line 1: | ||

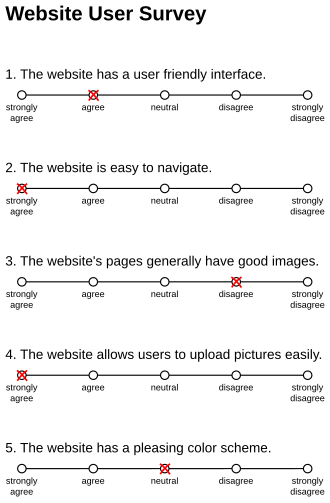

| ⚫ | {{Short description|Type of bias}}{{Distinguish|sampling bias}}[[File:Example Likert Scale.svg|thumb|upright=1.5|alt=Example Likert Scale.| A survey using a [[Likert Scale|Likert]] style response set. This is one example of a type of survey that can be highly vulnerable to the effects of response bias.]] |

||

{{essay|date=April 2014}} |

|||

'''Response bias''' is a general term for a wide range of tendencies for participants to respond inaccurately or falsely to questions. These biases are prevalent in research involving participant [[self-report study|self-report]], such as [[structured interview]]s or [[statistical survey|surveys]].<ref name="Furnham">{{cite journal|doi=10.1016/0191-8869(86)90014-0|title=Response bias, social desirability and dissimulation|journal=Personality and Individual Differences|volume=7|issue=3|pages=385–400|year=1986|last1=Furnham|first1=Adrian}}</ref> Response biases can have a large impact on the validity of [[questionnaire]]s or surveys.<ref name="Furnham"/><ref name="Nederhof">{{cite journal|doi=10.1002/ejsp.2420150303|title=Methods of coping with social desirability bias: A review|journal=European Journal of Social Psychology|volume=15|issue=3|pages=263–280|year=1985|last1=Nederhof|first1=Anton J.}}</ref> |

|||

| ⚫ | |||

'''Response bias''' is a general term for a wide range of [[cognitive bias]]es that influence the responses of participants away from an accurate or truthful response. These biases are most prevalent in the types of studies and research that involve participant [[self-report study|self-report]], such as [[structured interview]]s or [[statistical survey|surveys]].<ref name="Furnham"> Furnham, A (1986). Response bias, social desirability and dissimulation. ''Personality and individual differences 7.'' 385-400 </ref> Response biases can have a large impact on the validity of the [[questionnaire]] or survey to which the participant is responding.<ref name="Furnham"/> <ref name="Nederhof"> Nederhof, A (1985). Methods of coping with social desirability bias: a review. ''European Journal of Social Psychology 15.'' 263-280 </ref> This bias can be induced or caused by a number of factors, all relating to the idea that human subjects do not respond passively to [[Stimulus (psychology)|stimuli]], but rather actively integrate multiple sources of information to generate a response in a given situation.<ref name="Orne 1962"> Orne, M (1962). On the social psychology of the psychological experiment: with particular reference to demand characteristics and their implications. ''American Psychologist 17.'' 776-783 </ref> Because of this, almost any aspect of an experimental condition may be able to bias a respondent in some form or another. For example, the phrasing of questions in surveys, the demeanor of the researcher, the way the experiment is conducted, or the desires of the participant to be a good experimental subject and to provide socially desirable responses may bias the response of the participant in some way.<ref name="Furnham"/><ref name="Nederhof"/><ref name="Orne 1962"/><ref name="Kalton"> Kalton, G, Schuman, H, (1982). The effect of the question on survey responses: a review. ''J. R. statist. Soc. 154.'' 42-73 </ref> All of these "artifacts" of survey and self-report research may have the potential to damage the [[construct validity|validity]] of a measure or study.<ref name="Nederhof"/> Compounding this issue is that surveys affected by response bias still often have high [[reliability (psychometrics)|reliability]].<ref name="Gove 1977"> Gove, WR; Geerken, MR (1977) Response bias in surveys of mental health: an empirical investigation. ''American journal of Sociology 82.'' 1289-1317 </ref> This insidious combination can lure researchers into a false sense of security about the conclusions they draw.<ref name="Gove 1977"/> The effect of this bias means that it is possible that some study results are due to a systematic response bias rather than the [[Hypothesis|hypothesized]] effect, which can have a profound effect on [[Psychology|psychological]] and other types of research using questionnaires or surveys.<ref name="Gove 1977"/> It is therefore important for researchers to be aware of response bias and the effect it can have on their research so that they can attempt to prevent it from impacting their findings in a negative manner. |

|||

Response bias can be induced or caused by numerous factors, all relating to the idea that human subjects do not respond passively to [[Stimulus (psychology)|stimuli]], but rather actively integrate multiple sources of information to generate a response in a given situation.<ref name="Orne 1962">{{cite journal|doi=10.1037/h0043424|title=On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications|journal=American Psychologist|volume=17|issue=11|pages=776–783|year=1962|last1=Orne|first1=Martin T.|s2cid=7975753}}</ref> Because of this, almost any aspect of an experimental condition may potentially bias a respondent. For example, the phrasing of questions in surveys, the demeanor of the researcher, the way the experiment is conducted, or the desires of the participant to be a good experimental subject and to provide socially desirable responses may affect the response in some way.<ref name="Furnham"/><ref name="Nederhof"/><ref name="Orne 1962"/><ref name="Kalton">{{cite journal|last1=Kalton|first1=Graham|last2=Schuman|first2=Howard|title=The Effect of the Question on Survey Responses: A Review|journal=Journal of the Royal Statistical Society. Series A (General)|date=1982|volume=145|issue=1|pages=42–73|doi=10.2307/2981421|jstor=2981421|url=https://deepblue.lib.umich.edu/bitstream/2027.42/146916/1/rssa04317.pdf|hdl=2027.42/146916|s2cid=151566559 |hdl-access=free}}</ref> All of these "artifacts" of survey and self-report research may have the potential to damage the [[construct validity|validity]] of a measure or study.<ref name="Nederhof"/> Compounding this issue is that surveys affected by response bias still often have high [[reliability (psychometrics)|reliability]], which can lure researchers into a false sense of security about the conclusions they draw.<ref name="Gove 1977">{{cite journal|jstor=2777936|pmid=889001|year=1977|last1=Gove|first1=W. R.|title=Response bias in surveys of mental health: An empirical investigation|journal=American Journal of Sociology|volume=82|issue=6|pages=1289–1317|last2=Geerken|first2=M. R.|doi=10.1086/226466|s2cid=40008515}}</ref> |

|||

| ⚫ | |||


||

Because of response bias, it is possible that some study results are due to a systematic response bias rather than the [[Hypothesis|hypothesized]] effect, which can have a profound effect on [[Psychology|psychological]] and other types of research using questionnaires or surveys.<ref name="Gove 1977"/> It is therefore important for researchers to be aware of response bias and the effect it can have on their research so that they can attempt to prevent it from impacting their findings in a negative manner. |

|||

===Arguments against response bias=== |

|||


||

| ⚫ | |||

===Arguments for response bias=== |

|||

| ⚫ | Awareness of response bias has been present in [[psychology]] and [[sociology]] literature for some time because self-reporting features significantly in those fields of research. However, researchers were initially unwilling to admit the degree to which such biases affect, and potentially invalidate, research using these types of measures.<ref name="Gove 1977"/> Some researchers believed that the biases present in a group of subjects cancel out when the group is large enough.<ref>Hyman, H; 1954. [https://books.google.com/books?id=Lj0OAQAAIAAJ ''Interviewing in Social Research.''] Chicago: University of Chicago Press.</ref> This would mean that the impact of response bias is random noise, which washes out if enough participants are included in the study.<ref name="Gove 1977"/> However, at the time this argument was proposed, effective methodological tools that could test it were not available.<ref name="Gove 1977"/> Once newer methodologies were developed, researchers began to investigate the impact of response bias.<ref name="Gove 1977"/> From this renewed research, two opposing sides arose. |

||


||

| ⚫ | The first group supports Hyman's belief that although response bias exists, it often has minimal effect on participant response, and no large steps need to be taken to mitigate it.<ref name="Gove 1977"/><ref name="Clancy 1974">{{cite journal|last1=Clancy|first1=Kevin|last2=Gove|first2=Walter|title=Sex Differences in Mental Illness: An Analysis of Response Bias in Self-Reports|journal=American Journal of Sociology|date=1974|volume=80|issue=1|pages=205–216|jstor=2776967|doi=10.1086/225767|s2cid=46255353}}</ref><ref name="Campbell 1976">Campbell, A. Converse, P. Rodgers; 1976. [https://books.google.com/books?id=h_QWAwAAQBAJ ''The Quality of American Life: Perceptions, Evaluations and Satisfaction.''] New York: Russell Sage.</ref> These researchers hold that although there is significant literature identifying response bias as influencing the responses of study participants, these studies do not in fact provide [[empirical evidence]] that this is the case.<ref name="Gove 1977"/> They subscribe to the idea that the effects of this bias wash out with large enough samples, and that it is not a systematic problem in [[mental health]] research.<ref name="Gove 1977"/><ref name="Clancy 1974"/> These studies also call into question earlier research that investigated response bias on the basis of their [[List of psychological research methods|research methodologies]]. 
For example, they mention that many of the studies had very small [[Sample (statistics)|sample sizes]], or that in studies looking at [[Social desirability bias|social desirability]], a subtype of response bias, the researchers had no way to [[Quantification (science)|quantify]] the desirability of the statements used in the study.<ref name="Gove 1977"/> Additionally, some have argued that what researchers may believe to be artifacts of response bias, such as differences in responding between men and women, may in fact be actual differences between the two groups.<ref name="Clancy 1974"/> Several other studies also found evidence that response bias is not as big a problem as it may seem. The first found that when the responses of participants were compared with and without controls for response bias, their answers to the surveys were not [[Statistical significance|different]].<ref name="Clancy 1974"/> Two other studies found that although the bias may be present, its effects are extremely small, with little to no impact on the responses of participants.<ref name="Campbell 1976"/><ref name="Gove 1976">{{cite journal|doi=10.1016/0037-7856(76)90118-9|pmid=1006342|title=Response bias in community surveys of mental health: Systematic bias or random noise?|journal=Social Science & Medicine |volume=10|issue=9–10|pages=497–502|year=1976|last1=Gove|first1=Walter R.|last2=McCorkel|first2=James|last3=Fain|first3=Terry|last4=Hughes|first4=Michael D.}}</ref> |

||

===Conclusion=== |

|||


||

| ⚫ | The second group argues against Hyman's point, saying that response bias has a significant effect, and that researchers need to take steps to reduce response bias in order to conduct sound research.<ref name="Furnham"/><ref name="Nederhof"/> They argue that the impact of response bias is a [[systematic error]] inherent to this type of research and that it needs to be addressed in order for studies to be able to produce accurate results. In psychology, there are many studies exploring the impact of response bias in many different settings and with many different [[Variable and attribute (research)|variables]]. For example, some studies have found effects of response bias in the reporting of [[Major depressive disorder|depression]] in elderly patients.<ref name="Knauper 1994">{{cite journal|doi=10.1016/0022-3956(94)90026-4|pmid=7932277|title=Diagnosing major depression in the elderly: Evidence for response bias in standardized diagnostic interviews?|journal=Journal of Psychiatric Research|volume=28|issue=2|pages=147–164|year=1994|last1=Knäuper|first1=Bärbel|last2=Wittchen|first2=Hans-Ulrich}}</ref> Other researchers have found that there are serious issues when a given survey or questionnaire has responses that may seem desirable or undesirable to report, and that a person's responses to certain questions can be biased by their culture.<ref name="Nederhof"/><ref name="Fischer 2004">{{cite journal|doi=10.1177/0022022104264122|title=Standardization to Account for Cross-Cultural Response Bias: A Classification of Score Adjustment Procedures and Review of Research in JCCP|journal=Journal of Cross-Cultural Psychology|volume=35|issue=3|pages=263–282 |year=2004|last1=Fischer|first1=Ronald|s2cid=32046329}}</ref> Additionally, there is support for the idea that simply being part of an experiment can have dramatic effects on how participants act, thus biasing anything that they may do in a research or experimental setting when it comes to 
self-reporting.<ref name="Orne 1962"/> One of the most influential studies was one which found that social desirability bias, a type of response bias, can account for as much as 10–70% of the [[variance]] in participant response.<ref name="Nederhof"/> Essentially, because of several findings that illustrate the dramatic effects response bias has on the outcomes of self-report research, this side supports the idea that steps need to be taken to mitigate the effects of response bias to maintain the accuracy of research. |

||

| ⚫ | While both sides have support in the literature, there appears to be greater empirical support for the significance of response bias.<ref name="Furnham"/><ref name="Nederhof"/><ref name="Orne 1962"/><ref name="Fischer 2004"/><ref name="Reese 2013">{{cite journal|doi=10.1002/jclp.21946|pmid=23349082|title=The Influence of Demand Characteristics and Social Desirability on Clients' Ratings of the Therapeutic Alliance|journal=Journal of Clinical Psychology|volume=69|issue=7|pages=696–709|year=2013|last1=Reese|first1=Robert J.|last2=Gillaspy|first2=J. Arthur|last3=Owen|first3=Jesse J.|last4=Flora|first4=Kevin L.|last5=Cunningham|first5=Linda C.|last6=Archie|first6=Danielle|last7=Marsden|first7=Troymichael}}</ref><ref name="Cronbach">{{cite journal|doi=10.1037/h0054677|title=Studies of acquiescence as a factor in the true-false test|journal=Journal of Educational Psychology|volume=33|issue=6|pages=401–415|year=1942|last1=Cronbach|first1=L. J.}}</ref> To add strength to the claims of those who argue the importance of response bias, many of the studies that reject the significance of response bias report multiple methodological issues in their studies. For example, they have extremely small samples that are not representative of the population as a whole, they only considered a small subset of potential variables that could be affected by response bias, and their measurements were conducted over the phone with poorly worded statements.<ref name="Gove 1977"/><ref name="Clancy 1974"/> |

||

| ⚫ | |||

==Types of response bias== |

|||

===Acquiescence bias=== |


||

{{Main|Acquiescence bias}} |


||

| ⚫ | Acquiescence bias, which is also referred to as "yea-saying", is a category of response bias in which respondents to a [[Sample survey|survey]] have a tendency to agree with all the questions in a [[Psychological testing|measure]].<ref name="Watson">{{cite journal|doi=10.1177/0049124192021001003|title=Correcting for Acquiescent Response Bias in the Absence of a Balanced Scale: An Application to Class Consciousness|journal=Sociological Methods & Research|volume=21|pages=52–88|year=1992|last1=Watson|first1=D.|s2cid=122977362}}</ref><ref name="Psychlopedia">[https://web.archive.org/web/20091015050748/http://www.psych-it.com.au/Psychlopedia/article.asp?id=154 Moss, Simon. (2008). Acquiescence bias]</ref> This bias in responding may represent a form of dishonest reporting because the participant automatically endorses any statements, even if the result is contradictory responses.<ref name="Knowles 1997">{{cite journal|doi=10.1006/jrpe.1997.2180|title=Acquiescent Responding in Self-Reports: Cognitive Style or Social Concern?|journal=Journal of Research in Personality|volume=31|issue=2|pages=293–301|year=1997|last1=Knowles|first1=Eric S.|last2=Nathan|first2=Kobi T.}}</ref><ref name="Meisenberg 2008">{{cite journal|doi=10.1016/j.paid.2008.01.010|title=Are acquiescent and extreme response styles related to low intelligence and education?|journal=Personality and Individual Differences|volume=44|issue=7|pages=1539–1550|year=2008|last1=Meisenberg|first1=Gerhard|last2=Williams|first2=Amandy}}</ref> For example, a [[Research participant|participant]] could endorse the statement "I prefer to spend time with others" and then, later in the survey, also endorse the contradictory statement "I prefer to spend time alone". 
This is a distinct problem for self-report research because it does not allow a researcher to understand or gather accurate data from any type of question that asks for a participant to endorse or reject statements.<ref name="Knowles 1997"/> Researchers have approached this issue by thinking about the bias in two different ways. The first deals with the idea that participants are trying to be agreeable, in order to avoid the disapproval of the researcher.<ref name="Knowles 1997"/> A second cause for this type of bias was proposed by [[Lee Cronbach]], when he argued that it is likely due to a problem in the [[Cognition|cognitive processes]] of the participant, instead of the motivation to please the researcher.<ref name="Cronbach"/> He argues that it may be due to biases in memory where an individual recalls information that supports endorsement of the statement, and ignores contradicting information.<ref name="Cronbach"/> |

||

| ⚫ | Researchers have several methods to try to reduce this form of bias. Primarily, they attempt to make balanced response sets in a given measure, meaning that there are equal numbers of positively and negatively worded questions.<ref name="Knowles 1997"/><ref name="Podsakoff 2003">{{cite journal|doi=10.1037/0021-9010.88.5.879|pmid=14516251|title=Common method biases in behavioral research: A critical review of the literature and recommended remedies|journal=Journal of Applied Psychology|volume=88|issue=5|pages=879–903|year=2003|last1=Podsakoff|first1=Philip M.|last2=MacKenzie|first2=Scott B.|last3=Lee|first3=Jeong-Yeon|last4=Podsakoff|first4=Nathan P.|hdl=2027.42/147112|s2cid=5281538|hdl-access=free}}</ref> This means that if a researcher were hoping to examine a certain trait with a given questionnaire, half of the questions would have a "yes" response to identify the trait, and the other half would have a "no" response to identify the trait.<ref name="Podsakoff 2003"/> |
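The scoring of a balanced measure can be illustrated with a minimal sketch. It assumes a hypothetical four-item sociability scale rated from 1 to 5 rather than yes/no items; the item names, the keying, and the two helper functions are invented for illustration and do not come from the cited sources. Negatively keyed items are reverse-scored, so a respondent who agrees with everything lands at the midpoint of the trait score while showing a conspicuously high raw agreement level.

```python
# Hypothetical balanced scale: two items keyed toward the trait (+1)
# and two keyed against it (-1). All names here are illustrative.
SCALE_MIN, SCALE_MAX = 1, 5
ITEM_KEYS = {
    "enjoys_company": +1,
    "prefers_being_alone": -1,
    "seeks_out_groups": +1,
    "avoids_gatherings": -1,
}


def trait_score(responses):
    """Mean item score after reverse-scoring the negatively keyed items."""
    scored = [
        value if ITEM_KEYS[item] > 0 else SCALE_MIN + SCALE_MAX - value
        for item, value in responses.items()
    ]
    return sum(scored) / len(scored)


def raw_agreement(responses):
    """Mean response before reverse-scoring; on a balanced scale a value
    near the top of the range may suggest yea-saying."""
    return sum(responses.values()) / len(responses)


# A respondent who strongly agrees with every statement, including
# contradictory ones.
yea_sayer = {item: 5 for item in ITEM_KEYS}
print(trait_score(yea_sayer), raw_agreement(yea_sayer))
```

Because the contradictory endorsements cancel after reverse-scoring, indiscriminate agreement no longer inflates the trait score, which is the rationale for balancing the item wording.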

||

| ⚫ | |||


||

Nay-saying is the opposite form of this bias. It occurs when a participant always chooses to deny or not endorse any statements in a survey or measure. This has a similar effect of invalidating any kinds of endorsements that participants may make over the course of the experiment. |


||

===Courtesy bias=== |

|||

''Courtesy bias'' is a type of response bias that occurs when some individuals tend not to fully state their unhappiness with a service or product in an attempt to be polite or [[courteous]] toward the questioner.<ref>{{cite web |title=Courtesy Bias |url=https://www.alleydog.com/glossary/definition.php?term=Courtesy+Bias |website=alleydog.com |access-date=8 May 2020}}</ref> It is a common bias in [[qualitative research]] methodology. |

|||

In a study on disrespect and abuse during facility based [[childbirth]], courtesy bias was found to be one of the causes of potential underreporting of those behaviors at hospitals and clinics.<ref>{{cite journal |last1=Sando |first1=David |last2=Abuya |first2=Timothy |last3=Asefa |first3=Anteneh |last4=Banks |first4=Kathleen P. |last5=Freedman |first5=Lynn P. |last6=Kujawski |first6=Stephanie |last7=Markovitz |first7=Amanda |last8=Ndwiga |first8=Charity |last9=Ramsey |first9=Kate |last10=Ratcliffe |first10=Hannah |last11=Ugwu |first11=Emmanuel O. |last12=Warren |first12=Charlotte E. |last13=Jolivet |first13=R. Rima |title=Methods used in prevalence studies of disrespect and abuse during facility based childbirth: lessons learned |journal=Reproductive Health |year=2017 |volume=14 |issue=1 |page=127 |doi=10.1186/s12978-017-0389-z |pmid=29020966 |pmc=5637332 |doi-access=free }}</ref> Evidence has been found that some cultures are especially prone to the courtesy bias, leading respondents to say what they believe the questioner wants to hear. 
This bias has been found in Asian and in [[Hispanic]] cultures.<ref>{{cite book |last1=Hakim |first1=Catherine |title=Models of the Family in Modern Societies: Ideals and Realities: Ideals and Realities |date=12 January 2018 |publisher=Routledge |isbn=9781351771481 |url=https://books.google.com/books?id=e-pGDwAAQBAJ&q=%22Courtesy+bias+is+a%22}}</ref> The term courtesy bias has also been used in a similar sense to describe people in [[East Asia]], who frequently tend to exhibit [[acquiescence bias]].<ref>{{cite web |title=Conducting Culturally Sensitive Psychosocial Research |url=https://www.netce.com/coursecontent.php?courseid=1597 |website=netce.com |access-date=19 July 2020}}</ref> As with most [[data collection]], courtesy bias has been found to be a concern with phone survey respondents.<ref>{{cite journal |last1=Diamond-Smith |first1=Nadia |last2=Treleaven |first2=Emily |last3=Omoluabi |first3=Elizabeth |last4=Liu |first4=Jenny |title=Comparing simulated client experiences with phone survey self-reports for measuring the quality of family planning counseling: The case of depot medroxyprogesterone acetate – subcutaneous (DMPA-SC) in Nigeria |journal=Gates Open Research |year=2019 |volume=3 |page=1092 |doi=10.12688/gatesopenres.12935.1 |url=https://dqo52087pnd5x.cloudfront.net/manuscripts/14038/9e82fda5-bdc4-4ac9-a2d6-fc29e62bd999_12935_-_nadia_diamond-smith.pdf?doi=10.12688/gatesopenres.12935.1&numberOfBrowsableCollections=3&numberOfBrowsableInstitutionalCollections=0&numberOfBrowsableGateways=5|doi-access=free }}</ref> |

|||

Researchers attempt to create a good interview environment in order to minimize courtesy bias. Interviewers need to emphasize that both positive and negative experiences are important to share, in order to enhance learning and minimize the bias as much as possible.<ref>{{cite web |title=Gestational diabetes mellitus |url=https://www.worlddiabetesfoundation.org/sites/default/files/GDM%20Review.pdf |website=worlddiabetesfoundation.org |access-date=19 July 2020}}</ref> |

|||

===Demand characteristics=== |


||

{{Main|Demand characteristics}} |


||

Demand characteristics refer to a type of response bias where participants alter their response or behavior simply because they are part of an experiment.<ref name="Orne 1962"/> This arises because participants are actively engaged in the experiment, and may try to figure out the purpose, or adopt certain [[Human behavior|behaviors]] they believe belong in an experimental setting. [[Martin Orne]] was one of the first to identify this type of bias, and developed several theories to address its cause.<ref>{{Cite book |chapter-url=https://books.google.com/books?id=zAMeF0JOtY0C&pg=PA110 |title=Artifacts in Behavioral Research |last=Orne |first=Martin T. |date=2009 |publisher=Oxford University Press |isbn=978-0-19-538554-0 |editor-last=Rosenthal |editor-first=Robert |pages=110–137 |chapter=Demand Characteristics and the Concept of Quasi-Controls |doi=10.1093/acprof:oso/9780195385540.003.0005 |editor-last2=Rosnow |editor-first2=Ralph L.}}</ref> His research points to the idea that participants enter a certain type of social interaction when engaging in an experiment, and this special social interaction drives participants to [[Consciousness|consciously]] and [[Unconscious mind|unconsciously]] alter their behaviors.<ref name="Orne 1962"/> There are several ways that this bias can influence participants and their responses in an experimental setting. One of the most common relates to the motivations of the participant. Many people choose to volunteer to be in studies because they believe that experiments are important. 
This drives participants to be "good subjects" and fulfill their role in the experiment properly, because they believe that their proper participation is vital to the success of the study.<ref name="Orne 1962"/><ref name="Nichols 2008">{{cite journal|doi=10.3200/GENP.135.2.151-166|pmid=18507315|title=The Good-Subject Effect: Investigating Participant Demand Characteristics|journal=The Journal of General Psychology|volume=135|issue=2|pages=151–165|year=2008|last1=Nichols|first1=Austin Lee|last2=Maner|first2=Jon K.|s2cid=26488916}}</ref> Thus, in an attempt to productively participate, the subject may try to gain knowledge of the hypothesis being tested in the experiment and alter their behavior in an attempt to support that [[hypothesis]]. Orne conceptualized this change by saying that the experiment may appear to a participant as a problem, and it is his or her job to find the solution to that problem, which would be behaving in a way that would lend support to the experimenter's hypothesis.<ref name="Orne 1962"/> Alternatively, a participant may try to discover the hypothesis simply to provide faulty information and wreck the hypothesis.<ref name="Nichols 2008"/> Both of these results are harmful because they prevent the experimenters from gathering accurate data and making sound conclusions. |

|||


Outside of participant motivation, there are other factors that influence the appearance of demand characteristics in a study. Many of these factors relate to the unique nature of the experimental setting itself. For example, participants in studies are more likely to put up with uncomfortable or tedious tasks simply because they are in an experiment.<ref name="Orne 1962"/> Additionally, the [[Nonverbal communication|mannerisms]] of the experimenter, such as the way they greet the participant, or the way they interact with the participant during the course of the experiment may inadvertently bias how the participant responds during the course of the experiment.<ref name="Orne 1962"/><ref name="Cook 1970" /> Also, prior experiences of being in an experiment, or rumors of the experiment that participants may hear can greatly bias the way they respond.<ref name="Orne 1962"/><ref name="Nichols 2008"/><ref name="Cook 1970" /> Outside of an experiment, these types of past experiences and mannerisms may have significant effects on how patients rank the effectiveness of their [[Mental health professional|therapist]].<ref name="Reese 2013"/> Many of the ways therapists go about collecting client feedback involve self-reporting measures, which can be highly influenced by response bias.<ref name="Reese 2013"/> Participants may be biased if they fill out these measures in front of their therapist, or somehow feel compelled to answer in an affirmative manner because they believe their therapy should be working.<ref name="Reese 2013"/> In this case, the therapists would not be able to gain accurate feedback from their clients, and be unable to improve their therapy or accurately tailor further treatment to what the participants need.<ref name="Reese 2013"/> All of these different examples may have significant effects on the responses of participants, driving them to respond in ways that do not reflect their actual beliefs or actual mindset, which negatively impacts conclusions drawn from those surveys.<ref name="Orne 1962"/> |

||


While demand characteristics cannot be completely removed from an experiment, there are steps that researchers can take to minimize the impact they may have on the results.<ref name="Orne 1962"/> One way to mitigate response bias is to use deception to prevent the participant from discovering the true hypothesis of the experiment<ref name="Cook 1970">{{cite journal|doi=10.1037/h0028849|title=Demand characteristics and three conceptions of the frequently deceived subject|journal=Journal of Personality and Social Psychology|volume=14|issue=3|pages=185–194|year=1970|last1=Cook|first1=Thomas D.|display-authors=etal}}</ref> and then [[Debriefing|debrief]] the participants.<ref name="Cook 1970"/> For example, research has demonstrated that repeated deception and debriefing is useful in preventing participants from becoming familiar with the experiment, and that participants do not significantly alter their behaviors after being deceived and debriefed multiple times.<ref name="Cook 1970"/> Another way that researchers attempt to reduce demand characteristics is by being as [[Neutrality (philosophy)|neutral]] as possible, or training those conducting the experiment to be as neutral as possible.<ref name="Nichols 2008"/> For example, studies show that extensive one-on-one contact between the experimenter and the participant makes it more difficult to be neutral, and go on to suggest that this type of interaction should be limited when designing an experiment.<ref name="Podsakoff 2003"/><ref name="Nichols 2008"/> Another way to prevent demand characteristics is to use [[Blind experiment|blinded]] experiments with [[placebo]]s or [[Treatment and control groups|control groups]].<ref name="Orne 1962"/><ref name="Podsakoff 2003"/> This prevents the experimenter from biasing the participant, because the researcher does not know in which way the participant should respond. 
Although not perfect, these methods can significantly reduce the effect of demand characteristics on a study, thus making the conclusions drawn from the experiment more likely to accurately reflect what they were intended to measure.<ref name="Nichols 2008"/> |

||

===Extreme responding=== |


||

Extreme responding is a form of response bias that drives respondents to only select the most extreme options or answers available.<ref name="Furnham"/><ref name="Meisenberg 2008"/> For example, in a survey utilizing a [[Likert scale]] with potential responses ranging from one to five, the respondent may only give answers as ones or fives. Another example is if the participant only answered questionnaires with "strongly agree" or "strongly disagree" in a survey with that type of response style. There are several reasons for why this bias may take hold in a group of participants. One example ties the development of this type of bias in respondents to their cultural identity.<ref name="Meisenberg 2008"/> This explanation states that people from certain cultures are more likely to respond in an extreme manner as compared to others. For example, research has found that those from the Middle East and Latin America are more prone to be affected by extremity response, whereas those from East Asia and Western Europe are less likely to be affected.<ref name="Meisenberg 2008"/> A second explanation for this type of response bias relates to the education level of the participants.<ref name="Meisenberg 2008"/> Research has indicated that those with lower intelligence, measured by an analysis of [[Intelligence quotient|IQ]] and school achievement, are more likely to be affected by extremity response.<ref name="Meisenberg 2008"/> Another way that this bias can be introduced is through the wording of questions in a survey or questionnaire.<ref name="Furnham"/> Certain topics or the wording of a question may drive participants to respond in an extreme manner, especially if it relates to the motivations or beliefs of the participant.<ref name="Furnham"/> |

|||

The opposite of this bias occurs when participants only select intermediate or mild responses as answers.<ref name="Furnham"/> |

|||

=== Question order bias === |

|||

The opposite of this bias occurs when participants only select intermediate or mild responses as answers.<ref name="Furnham"/> In this type of bias, a participant would strongly prefer to select a 3 on a scale ranging from 1 to 5, or the mildest response in a survey asking for their feelings on a topic, such as "neutral", "slightly agree", or "slightly disagree". |

|||

Question order bias, or "order effects bias", is a type of response bias where a respondent may react differently to questions based on the order in which questions appear in a survey or interview.<ref>{{Cite journal |last=Blankenship |first=Albert |date=1942 |title=Psychological Difficulties in Measuring Consumer Preference |journal=Journal of Marketing |language=en |volume=6 |issue=4, part 2 |pages=66–75 |doi=10.1177/002224294200600420.1 |jstor=1246085|s2cid=167331418 }}</ref> Question order bias is different from "response order bias" that addresses specifically the order of the set of responses within a survey question.<ref>{{Cite journal |last1=Israel |first1=Glenn D. |last2=Taylor |first2=C.L. |date=1990 |title=Can response order bias evaluations? |journal=Evaluation and Program Planning |language=en |volume=13 |issue=4 |pages=365–371 |doi=10.1016/0149-7189(90)90021-N}}</ref> There are many ways that questionnaire items that appear earlier in a survey can affect responses to later questions. One way is when a question creates a "norm of reciprocity or fairness" as identified in the 1950 work of [[Herbert Hyman]] and Paul Sheatsley.<ref>{{Cite book|last1=Hyman |last2= Sheatsley|first1=H. H. |first2= P. B.|editor-last=Payne|editor-first=J. C. |date=1950|chapter=The Current Status of American Public Opinion|title=The Teaching of Contemporary Affairs: Twenty-first Yearbook of the National Council of Social Studies|pages=11–34|oclc=773251346}}</ref> In their research they asked two questions. One was asked on whether the United States should allow reporters from communist countries to come to the U.S. and send back news as they saw it; and another question was asked on whether a communist country like Russia should let American newspaper reporters come in and send back news as they saw it to America. In the study, the percentage of “yes” responses to the question allowing communist reporters increased by 37 percentage points depending on the order. 
Similarly, results for the American reporters item increased by 24 percentage points. When either of the items was asked second, the context for the item was changed as a result of the answer to the first, and the responses to the second were more in line with what would be considered fair, based on the previous response.<ref name=":0">{{Cite book|title=Encyclopedia of Survey Research Methods|last=Lavrakas|first=Paul J.|publisher=SAGE Publications, Inc|year=2008|isbn=9781412918084|location=Thousand Oaks|pages=664–665}}</ref> Another ordering effect depends on the framing of the question. If a respondent is first asked about their general interest in a subject, their reported interest may be higher than if they are first asked technical or knowledge-based questions about the subject.<ref name=":0" /> The part-whole contrast effect is yet another ordering effect. When general and specific questions are asked in different orders, results for the specific item are generally unaffected, whereas those for the general item can change significantly.<ref name=":0" /> Question order biases occur primarily in survey or questionnaire settings. Some strategies to limit the effects of question order bias include randomizing the question order and grouping questions by topic so that they unfold in a logical order.<ref>{{Cite news|url=http://www.pewresearch.org/methodology/u-s-survey-research/questionnaire-design/|title=Questionnaire design|date=2015-01-29|work=Pew Research Center|access-date=2017-11-18|language=en-US}}</ref> |
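As a minimal sketch of the randomization strategy, each respondent can be given an independently shuffled question order so that no single ordering dominates the sample. The question identifiers, the function name, and the use of one integer seed per respondent are illustrative assumptions, not part of the cited sources.

```python
import random

def randomized_order(questions, respondent_seed):
    """Return a per-respondent shuffled copy of the question list (hypothetical helper)."""
    rng = random.Random(respondent_seed)  # seeded so the order can be reproduced later
    order = list(questions)               # copy, so the master list stays untouched
    rng.shuffle(order)
    return order

# Hypothetical question identifiers, used only for illustration.
questions = ["q_general_interest", "q_technical_1", "q_technical_2", "q_overall_rating"]

for respondent_id in range(3):
    print(respondent_id, randomized_order(questions, respondent_seed=respondent_id))
```

Storing the seed (or the resulting order) alongside each respondent's answers would allow question position to be examined as a factor during analysis.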

|||

===Social desirability bias=== |


||

{{Main|Social desirability bias}} |


||

Social desirability bias is a type of response bias that influences a participant to deny undesirable traits, and ascribe to themselves traits that are socially desirable.<ref name="Nederhof"/> In essence, it is a bias that drives an individual to answer in a way that makes them look more favorable to the experimenter.<ref name="Furnham"/><ref name="Nederhof"/> This bias can take many forms. Some individuals may over-report good behavior, while others may under-report bad, or undesirable behavior.<ref name="Furnham"/> A critical aspect of how this bias can come to affect the responses of participants relates to the norms of the society in which the research is taking place.<ref name="Nederhof"/> For example, social desirability bias could play a large role if conducting research about an individual's tendency to use drugs. Those in a community where drug use is seen as acceptable or popular may exaggerate their own drug use, whereas those from a community where drug use is looked down upon may choose to under-report their own use. This type of bias is much more prevalent in questions that draw on a subject's opinion, like when asking a participant to evaluate or rate something, because there generally is not one correct answer, and the respondent has multiple ways they could answer the question.<ref name="Kalton"/> Overall, this bias can be very problematic for self-report researchers, especially if the topic they are looking at is controversial.<ref name="Furnham"/> The distortions created by respondents answering in a socially desirable manner can have profound effects on the validity of self-report research.<ref name="Nederhof"/> Without being able to control for or deal with this bias, researchers are unable to determine if the effects they are measuring are due to individual differences, or from a desire to [[Conformity|conform to the societal norms]] present in the population they are studying. 
Therefore, researchers strive to employ strategies aimed at mitigating social desirability bias so that they can draw valid conclusions from their research.<ref name="Furnham"/> |

|||


Several strategies exist to limit the effect of social desirability bias. In 1985, Anton Nederhof compiled a list of techniques and methodological strategies for researchers to use to mitigate the effects of social desirability bias in their studies.<ref name="Nederhof"/> Most of these strategies involve deceiving the subject, or are related to the way questions in surveys and questionnaires are presented to those in a study. A condensed list of these strategies is given below: |

||

*'''Ballot-box method''': This method allows a subject to anonymously self-complete a questionnaire and submit it to a locked "ballot box", thereby concealing their responses from an interviewer and affording the participant an additional layer of assured concealment from perceived social repercussion.<ref name=":1">{{Cite journal |last1=Bova |first1=Christopher S. |last2=Aswani |first2=Shankar |last3=Farthing |first3=Matthew W. |last4=Potts |first4=Warren M. |date=2018 |title=Limitations of the random response technique and a call to implement the ballot box method for estimating recreational angler compliance using surveys |journal=Fisheries Research |language=en |volume=208 |pages=34–41 |doi=10.1016/j.fishres.2018.06.017|s2cid=92793552 }}</ref> |

|||

*'''Forced-choice items:''' This technique hopes to generate questions that are equal in desirability to hopefully prevent a socially desirable response in one direction or another.<ref name="Nederhof"/> |

|||


*'''Forced-choice items''': This technique hopes to generate questions that are equal in desirability to prevent a socially desirable response in one direction or another.<ref name="Nederhof" /> |

||


*'''Neutral questions''': The goal of this strategy is to use questions that are rated as neutral by a wide range of participants so that socially desirable responding does not apply.<ref name="Nederhof"/> |

||

*'''[[Randomized response|Randomized response technique]]''': This technique allows participants to answer a question that is randomly selected from a set of questions. The researcher in this technique does not know which question the subject responds to, so subjects are more likely to answer truthfully. Researchers can then use statistics to interpret the anonymous data.<ref name="Nederhof"/> |

|||


*'''Self-administered questionnaires''': This strategy involves isolating the participant before they begin answering the survey or questionnaire to hopefully remove any social cues the researcher may present to the participant.<ref name="Nederhof"/> |

||


*'''Bogus-pipeline''': This technique involves a form of deception, where researchers convince a subject through a series of rigged demonstrations that a machine can accurately determine if a participant is being truthful when responding to certain questions. After the participant completes the survey or questionnaire, they are debriefed. This is a rare technique, and does not see much use because of the cost, time commitment and because it is a one-use only technique for each participant.<ref name="Nederhof"/> |

||


*'''Selection interviewers''': This strategy allows participants to select the person or persons who will be conducting the interview or presiding over the experiment. The hope is that, with a higher degree of rapport, subjects will be more likely to answer honestly.<ref name="Nederhof"/> |

||


*'''Proxy subjects''': Instead of asking a person directly, this strategy questions someone who is close to or knows the target individual well. This technique is generally limited to questions about behavior, and is not adequate for asking about attitudes or beliefs.<ref name="Nederhof"/> |

||


The degree of effectiveness for each of these techniques or strategies differs depending on the situation and the question asked.<ref name="Nederhof"/> To reduce social desirability bias across a wide range of situations, it has been suggested that researchers use a combination of these techniques.<ref name="Furnham"/><ref name="Nederhof"/> When selecting the best method for reducing social desirability bias, validation should not rest on a "more is better" assumption (a higher stated prevalence of the behavior of interest), as this is a "weak validation" that does not always guarantee the best results. Instead, "ground-truthed" comparisons of observed data to stated data should reveal the most accurate method.<ref name=":1" /> |
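The statistical step of the randomized response technique listed above can be illustrated with a short sketch. It assumes Warner's design, one common variant that the list does not spell out: with a known probability `p` a respondent answers whether a sensitive statement applies to them, and otherwise answers whether its negation applies. The function name and the example numbers are hypothetical.

```python
def warner_estimate(yes_count, n, p):
    """Estimate the share of respondents to whom the sensitive statement applies.

    Assumed model (Warner's design): each respondent privately answers the
    sensitive statement with probability p and its negation with probability
    1 - p, so that
        P(yes) = p * pi + (1 - p) * (1 - pi)
    Solving for pi gives
        pi = (P(yes) - (1 - p)) / (2 * p - 1)
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if p == 0.5:
        raise ValueError("p = 0.5 makes the answers uninformative")
    observed_yes_share = yes_count / n
    return (observed_yes_share - (1 - p)) / (2 * p - 1)

# Hypothetical numbers: 380 "yes" answers out of 1000, with p = 0.7.
print(round(warner_estimate(yes_count=380, n=1000, p=0.7), 3))
```

Because the researcher never learns which statement a given respondent answered, only the aggregate share can be recovered, and the estimate is noisier than a direct question with the same sample size.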

||

==Related terminology== |


||


*[[Non-response bias]] is not the opposite of response bias and is not a type of cognitive bias: it occurs in a [[statistical survey]] if those who respond to the survey differ in the outcome variable from those who do not respond. |

||


*[[Response rate (survey)|Response rate]] is not a cognitive bias, but rather refers to the proportion of people in the sample who complete the survey. |

||

==Highly vulnerable areas== |


||

Some areas or topics that are highly vulnerable to the various types of response bias include: |


||


*alcoholism<ref>{{cite journal|doi=10.15288/jsa.1987.48.410|pmid=3312821|title=Verbal report methods in clinical research on alcoholism: Response bias and its minimization|journal=Journal of Studies on Alcohol|volume=48|issue=5|pages=410–424|year=1987|last1=Babor|first1=T F|last2=Stephens|first2=R S|last3=Marlatt|first3=G A}}</ref><ref>{{cite journal|doi=10.15288/jsa.1993.54.334|pmid=8487543|title=Validity and reliability of self-reported drinking behavior: Dealing with the problem of response bias|journal=Journal of Studies on Alcohol|volume=54|issue=3|pages=334–344|year=1993|last1=Embree|first1=B G|last2=Whitehead|first2=P C}}</ref> |

||


*sexual violence |

|||


==See also== |


||


* [[List of cognitive biases]] |

||

* [[Compound question]] |


||

* [[Heckman correction]] |

|||

* [[Loaded question]] |


||

* [[Misinformation effect]], similar effect for memory instead of opinion. |


||

* [[Opinion poll#Potential for inaccuracy|Opinion poll]] |


||

* [[Randomized response]] |

|||

* [[List of cognitive biases]] |

|||

* [[Total survey error]] |

|||

==Notes== |

|||


==Further reading== |

|||

*{{cite journal |last1=Blair |first1=G. |last2=Coppock |first2=A. |last3=Moor |first3=M. |year=2020 |doi=10.1017/S0003055420000374 |title=When to Worry about Sensitivity Bias: A Social Reference Theory and Evidence from 30 Years of List Experiments |journal=American Political Science Review |volume=114 |issue=4 |pages=1297–1315 |doi-access=free }} |

|||

==External links== |


||

* [http://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=9613 Estimation of Response Bias in the NHES:95 Adult Education Survey] |


||

* [http://www.srs.fs.usda.gov/pubs/20254 Effects of road sign wording on visitor survey - non-response bias] |


||


{{Biases}} |


||


[[Category:Experimental bias]] |

||


[[Category:Survey methodology]] |

||

{{Statistics-stub}} |

|||

Latest revision as of 22:54, 10 December 2024

Response bias is a general term for a wide range of tendencies for participants to respond inaccurately or falsely to questions. These biases are prevalent in research involving participant self-report, such as structured interviews or surveys.[1] Response biases can have a large impact on the validity of questionnaires or surveys.[1][2]

Response bias can be induced or caused by numerous factors, all relating to the idea that human subjects do not respond passively to stimuli, but rather actively integrate multiple sources of information to generate a response in a given situation.[3] Because of this, almost any aspect of an experimental condition may potentially bias a respondent. Examples include the phrasing of questions in surveys, the demeanor of the researcher, the way the experiment is conducted, and the desire of the participant to be a good experimental subject and to provide socially desirable responses, any of which may affect the response in some way.[1][2][3][4] All of these "artifacts" of survey and self-report research have the potential to damage the validity of a measure or study.[2] Compounding this issue is that surveys affected by response bias still often have high reliability, which can lure researchers into a false sense of security about the conclusions they draw.[5]

Because of response bias, it is possible that some study results are due to a systematic response bias rather than the hypothesized effect, which can have a profound effect on psychological and other types of research using questionnaires or surveys.[5] It is therefore important for researchers to be aware of response bias and the effect it can have on their research so that they can attempt to prevent it from impacting their findings in a negative manner.

History of research

Awareness of response bias has been present in psychology and sociology literature for some time because self-reporting features significantly in those fields of research. However, researchers were initially unwilling to admit the degree to which these biases affect, and potentially invalidate, research utilizing these types of measures.[5] Some researchers believed that the biases present in a group of subjects cancel out when the group is large enough.[6] This would mean that the impact of response bias is random noise, which washes out if enough participants are included in the study.[5] However, at the time this argument was proposed, effective methodological tools that could test it were not available.[5] Once newer methodologies were developed, researchers began to investigate the impact of response bias.[5] From this renewed research, two opposing sides arose.

The first group supports Hyman's belief that although response bias exists, it often has minimal effect on participant response, and no large steps need to be taken to mitigate it.[5][7][8] These researchers hold that although there is significant literature identifying response bias as influencing the responses of study participants, these studies do not in fact provide empirical evidence that this is the case.[5] They subscribe to the idea that the effects of this bias wash out with large enough samples, and that it is not a systematic problem in mental health research.[5][7] These studies also call into question earlier research that investigated response bias on the basis of their research methodologies. For example, they mention that many of the studies had very small sample sizes, or that in studies looking at social desirability, a subtype of response bias, the researchers had no way to quantify the desirability of the statements used in the study.[5] Additionally, some have argued that what researchers may believe to be artifacts of response bias, such as differences in responding between men and women, may in fact be actual differences between the two groups.[7] Several other studies also found evidence that response bias is not as big of a problem as it may seem. The first found that when comparing the responses of participants, with and without controls for response bias, their answers to the surveys were not different.[7] Two other studies found that although the bias may be present, the effects are extremely small, having little to no impact towards dramatically changing or altering the responses of participants.[8][9]

The second group argues against Hyman's point, saying that response bias has a significant effect, and that researchers need to take steps to reduce response bias in order to conduct sound research.[1][2] They argue that the impact of response bias is a systematic error inherent to this type of research and that it needs to be addressed in order for studies to be able to produce accurate results. In psychology, there are many studies exploring the impact of response bias in many different settings and with many different variables. For example, some studies have found effects of response bias in the reporting of depression in elderly patients.[10] Other researchers have found that there are serious issues when a given survey or questionnaire contains responses that may seem desirable or undesirable to report, and that a person's responses to certain questions can be biased by their culture.[2][11] Additionally, there is support for the idea that simply being part of an experiment can have dramatic effects on how participants act, thus biasing anything that they may do in a research or experimental setting when it comes to self-reporting.[3] One of the most influential studies found that social desirability bias, a type of response bias, can account for as much as 10–70% of the variance in participant response.[2] Essentially, because of several findings that illustrate the dramatic effects response bias has on the outcomes of self-report research, this side supports the idea that steps need to be taken to mitigate the effects of response bias to maintain the accuracy of research.

While both sides have support in the literature, there appears to be greater empirical support for the significance of response bias.[1][2][3][11][12][13] To add strength to the claims of those who argue the importance of response bias, many of the studies that reject the significance of response bias report multiple methodological issues in their studies. For example, they have extremely small samples that are not representative of the population as a whole, they only considered a small subset of potential variables that could be affected by response bias, and their measurements were conducted over the phone with poorly worded statements.[5][7]

Types

Acquiescence bias

Acquiescence bias, which is also referred to as "yea-saying", is a category of response bias in which respondents to a survey have a tendency to agree with all the questions in a measure.[14][15] This bias in responding may represent a form of dishonest reporting because the participant automatically endorses any statements, even if the result is contradictory responses.[16][17] For example, a participant could endorse the statement "I prefer to spend time with others" but then, later in the survey, also endorse "I prefer to spend time alone", even though the two statements contradict each other. This is a distinct problem for self-report research because it does not allow a researcher to understand or gather accurate data from any type of question that asks for a participant to endorse or reject statements.[16] Researchers have approached this issue by thinking about the bias in two different ways. The first deals with the idea that participants are trying to be agreeable, in order to avoid the disapproval of the researcher.[16] A second cause for this type of bias was proposed by Lee Cronbach, who argued that it is likely due to a problem in the cognitive processes of the participant, rather than the motivation to please the researcher.[13] He argued that it may be due to biases in memory, where an individual recalls information that supports endorsement of the statement and ignores contradicting information.[13]

Researchers have several methods to try and reduce this form of bias. Primarily, they attempt to make balanced response sets in a given measure, meaning that there are a balanced number of positively and negatively worded questions.[16][18] This means that if a researcher was hoping to examine a certain trait with a given questionnaire, half of the questions would have a "yes" response to identify the trait, and the other half would have a "no" response to identify the trait.[18]
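The effect of a balanced response set can be sketched in a few lines. The example assumes a hypothetical four-item measure answered on a 1-to-5 agreement scale, in which half the items are reverse-keyed (agreement indicates the absence of the trait). The names and numbers are illustrative and not taken from the cited studies.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point agreement scale

def reverse_score(answer):
    """Flip an answer on the scale: 5 becomes 1, 4 becomes 2, and so on."""
    return SCALE_MIN + SCALE_MAX - answer

def trait_score(answers, reverse_keyed):
    """Average the answers after flipping the reverse-keyed items."""
    scored = [
        reverse_score(a) if index in reverse_keyed else a
        for index, a in enumerate(answers)
    ]
    return mean(scored)

def raw_agreement(answers):
    """Mean answer before any flipping: a rough indicator of yea-saying."""
    return mean(answers)

reverse_keyed = {1, 3}             # hypothetical: items 1 and 3 are negatively worded
yea_sayer = [5, 5, 5, 5]           # agrees with everything, even contradictory items
consistent_high = [5, 1, 4, 2]     # agrees with positive items, rejects negative ones

print(trait_score(yea_sayer, reverse_keyed), raw_agreement(yea_sayer))
print(trait_score(consistent_high, reverse_keyed), raw_agreement(consistent_high))
```

On a balanced measure, indiscriminate agreement pulls the trait score toward the scale midpoint instead of inflating it, while the raw agreement level stays conspicuously high, which gives the researcher something to inspect.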

Nay-saying is the opposite form of this bias. It occurs when a participant always chooses to deny or not endorse any statements in a survey or measure. This has a similar effect of invalidating any kinds of endorsements that participants may make over the course of the experiment.

Courtesy bias

Courtesy bias is a type of response bias in which some individuals tend not to fully state their unhappiness with a service or product, in an attempt to be polite or courteous toward the questioner.[19] It is a common bias in qualitative research methodology.

In a study on disrespect and abuse during facility-based childbirth, courtesy bias was found to be one of the causes of potential underreporting of those behaviors at hospitals and clinics.[20] Evidence has been found that some cultures are especially prone to courtesy bias, leading respondents to say what they believe the questioner wants to hear. This bias has been found in Asian and in Hispanic cultures.[21] The term is closely related to acquiescence bias, which people in East Asia frequently tend to exhibit.[22] As with most data collection, courtesy bias has been found to be a concern among phone survey respondents.[23]

Attempts have been made to create a good interview environment in order to minimize courtesy bias. It needs to be emphasized to respondents that both positive and negative experiences are important to share, in order to enhance learning and minimize the bias as much as possible.[24]

Demand characteristics

Demand characteristics refer to a type of response bias where participants alter their response or behavior simply because they are part of an experiment.[3] This arises because participants are actively engaged in the experiment, and may try to figure out the purpose, or adopt certain behaviors they believe belong in an experimental setting. Martin Orne was one of the first to identify this type of bias, and developed several theories to address its cause.[25] His research points to the idea that participants enter a certain type of social interaction when engaging in an experiment, and this special social interaction drives participants to consciously and unconsciously alter their behaviors.[3] There are several ways that this bias can influence participants and their responses in an experimental setting. One of the most common relates to the motivations of the participant. Many people choose to volunteer to be in studies because they believe that experiments are important. This drives participants to be "good subjects" and fulfill their role in the experiment properly, because they believe that their proper participation is vital to the success of the study.[3][26] Thus, in an attempt to productively participate, the subject may try to gain knowledge of the hypothesis being tested in the experiment and alter their behavior in an attempt to support that hypothesis. Orne conceptualized this change by saying that the experiment may appear to a participant as a problem, and it is his or her job to find the solution to that problem, which would be behaving in a way that would lend support to the experimenter's hypothesis.[3] Alternatively, a participant may try to discover the hypothesis simply to provide faulty information and wreck the hypothesis.[26] Both of these results are harmful because they prevent the experimenters from gathering accurate data and making sound conclusions.

Outside of participant motivation, there are other factors that influence the appearance of demand characteristics in a study. Many of these factors relate to the unique nature of the experimental setting itself. For example, participants in studies are more likely to put up with uncomfortable or tedious tasks simply because they are in an experiment.[3] Additionally, the mannerisms of the experimenter, such as the way they greet the participant, or the way they interact with the participant during the course of the experiment may inadvertently bias how the participant responds during the course of the experiment.[3][27] Also, prior experiences of being in an experiment, or rumors of the experiment that participants may hear can greatly bias the way they respond.[3][26][27] Outside of an experiment, these types of past experiences and mannerisms may have significant effects on how patients rank the effectiveness of their therapist.[12] Many of the ways therapists go about collecting client feedback involve self-reporting measures, which can be highly influenced by response bias.[12] Participants may be biased if they fill out these measures in front of their therapist, or somehow feel compelled to answer in an affirmative manner because they believe their therapy should be working.[12] In this case, the therapists would not be able to gain accurate feedback from their clients, and be unable to improve their therapy or accurately tailor further treatment to what the participants need.[12] All of these different examples may have significant effects on the responses of participants, driving them to respond in ways that do not reflect their actual beliefs or actual mindset, which negatively impacts conclusions drawn from those surveys.[3]

While demand characteristics cannot be completely removed from an experiment, there are steps that researchers can take to minimize the impact they may have on the results.[3] One way to mitigate response bias is to use deception to prevent the participant from discovering the true hypothesis of the experiment[27] and then debrief the participants.[27] For example, research has demonstrated that repeated deception and debriefing is useful in preventing participants from becoming familiar with the experiment, and that participants do not significantly alter their behaviors after being deceived and debriefed multiple times.[27] Another way that researchers attempt to reduce demand characteristics is by being as neutral as possible, or training those conducting the experiment to be as neutral as possible.[26] For example, studies show that extensive one-on-one contact between the experimenter and the participant makes it more difficult to be neutral, and go on to suggest that this type of interaction should be limited when designing an experiment.[18][26] Another way to prevent demand characteristics is to use blinded experiments with placebos or control groups.[3][18] This prevents the experimenter from biasing the participant, because the researcher does not know in which way the participant should respond. Although not perfect, these methods can significantly reduce the effect of demand characteristics on a study, thus making the conclusions drawn from the experiment more likely to accurately reflect what they were intended to measure.[26]

Extreme responding

Extreme responding is a form of response bias that drives respondents to select only the most extreme options or answers available.[1][17] For example, in a survey using a Likert scale with potential responses ranging from one to five, the respondent may only give answers of one or five. Another example is a participant who answers only "strongly agree" or "strongly disagree" in a survey with that type of response style. There are several reasons why this bias may take hold in a group of participants. One explanation ties the development of this type of bias in respondents to their cultural identity.[17] This explanation states that people from certain cultures are more likely than others to respond in an extreme manner. For example, research has found that those from the Middle East and Latin America are more prone to be affected by extremity response, whereas those from East Asia and Western Europe are less likely to be affected.[17] A second explanation for this type of response bias relates to the education level of the participants.[17] Research has indicated that those with lower intelligence, measured by an analysis of IQ and school achievement, are more likely to be affected by extremity response.[17] Another way that this bias can be introduced is through the wording of questions in a survey or questionnaire.[1] Certain topics or the wording of a question may drive participants to respond in an extreme manner, especially if it relates to the motivations or beliefs of the participant.[1]

The opposite of this bias occurs when participants only select intermediate or mild responses as answers.[1]
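
As a rough illustration of how extreme responding and its opposite might be quantified, the following Python sketch computes, for a single respondent on a five-point Likert scale, the share of answers at the scale endpoints and the share at the midpoint. The function names and the example answers are hypothetical and are not taken from the cited sources; real studies may use more elaborate indices.

```python
def extreme_share(answers, low=1, high=5):
    """Fraction of answers that sit at either endpoint of the scale."""
    if not answers:
        raise ValueError("answers must not be empty")
    return sum(1 for a in answers if a in (low, high)) / len(answers)


def midpoint_share(answers, low=1, high=5):
    """Fraction of answers at the scale midpoint (assumes an odd number of options)."""
    if not answers:
        raise ValueError("answers must not be empty")
    mid = (low + high) / 2
    return sum(1 for a in answers if a == mid) / len(answers)


# Hypothetical respondents, invented for illustration only.
respondent_a = [1, 5, 5, 1, 5, 1, 1, 5]  # answers only with ones and fives
respondent_b = [3, 3, 2, 3, 4, 3, 3, 3]  # answers only with mild options
```

A respondent whose endpoint share is close to 1 across many unrelated items would be a candidate for extreme responding, while a high midpoint share points toward the opposite pattern. Neither number alone shows bias, since a respondent may genuinely hold strong or moderate views.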

Question order bias

Question order bias, or "order effects bias", is a type of response bias where a respondent may react differently to questions based on the order in which they appear in a survey or interview.[28] Question order bias is different from "response order bias", which specifically concerns the order of the set of responses within a survey question.[29] There are many ways that questionnaire items that appear earlier in a survey can affect responses to later questions. One way is when a question creates a "norm of reciprocity or fairness", as identified in the 1950 work of Herbert Hyman and Paul Sheatsley.[30] In their research they asked two questions. One asked whether the United States should allow reporters from communist countries to come to the U.S. and send back news as they saw it; the other asked whether a communist country like Russia should let American newspaper reporters come in and send back news as they saw it to America. In the study, the percentage of "yes" responses to the question about allowing communist reporters increased by 37 percentage points depending on the order. Similarly, results for the American reporters item increased by 24 percentage points. When either of the items was asked second, the context for the item was changed as a result of the answer to the first, and the responses to the second were more in line with what would be considered fair, based on the previous response.[31] Another way that order can alter responses depends on the framing of the question. If respondents are first asked about their general interest in a subject, their reported interest may be higher than if they are first posed technical or knowledge-based questions about the subject.[31] The part-whole contrast effect is yet another ordering effect. When general and specific questions are asked in different orders, results for the specific item are generally unaffected, whereas those for the general item can change significantly.[31] Question order biases occur primarily in survey or questionnaire settings. Strategies to limit the effects of question order bias include randomization and grouping questions by topic so that they unfold in a logical order.[32]
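
The two strategies mentioned above can be combined in more than one way. The following Python sketch shows one possible arrangement, offered as an assumption and not as a procedure from the cited sources: questions stay grouped by topic and keep their order inside each topic, while the order of the topic blocks is randomized for each respondent. The function name `randomized_block_order` and the per-respondent seed are hypothetical.

```python
import random


def randomized_block_order(blocks, seed):
    """Return the question order for one respondent.

    blocks: list of lists, each inner list holding the questions of one topic
            in their intended logical order.
    seed:   per-respondent value, so the same respondent always gets the same order.
    """
    rng = random.Random(seed)
    shuffled = list(blocks)   # shallow copy, so the caller's list is left untouched
    rng.shuffle(shuffled)     # randomize which topic comes first, second, ...
    # Flatten the blocks; questions inside a topic keep their original order.
    return [question for block in shuffled for question in block]
```

Because different respondents see the topics in different orders, any effect of an earlier topic on a later one is spread across the sample and is not applied in the same direction to everyone. It does not remove order effects between questions inside the same topic.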

Social desirability bias

Social desirability bias is a type of response bias that influences a participant to deny undesirable traits and to ascribe to themselves traits that are socially desirable.[2] In essence, it is a bias that drives an individual to answer in a way that makes them look more favorable to the experimenter.[1][2] This bias can take many forms. Some individuals may over-report good behavior, while others may under-report bad or undesirable behavior.[1] A critical aspect of how this bias can come to affect the responses of participants relates to the norms of the society in which the research is taking place.[2] For example, social desirability bias could play a large role in research about an individual's tendency to use drugs. Those in a community where drug use is seen as acceptable or popular may exaggerate their own drug use, whereas those from a community where drug use is looked down upon may choose to under-report their own use. This type of bias is much more prevalent in questions that draw on a subject's opinion, such as when a participant is asked to evaluate or rate something, because there generally is not one correct answer and the respondent has multiple ways to answer the question.[4] Overall, this bias can be very problematic for self-report researchers, especially if the topic they are studying is controversial.[1] The distortions created by respondents answering in a socially desirable manner can have profound effects on the validity of self-report research.[2] Without being able to control for or deal with this bias, researchers are unable to determine whether the effects they are measuring are due to individual differences or to a desire to conform to the societal norms present in the population they are studying. Therefore, researchers strive to employ strategies aimed at mitigating social desirability bias so that they can draw valid conclusions from their research.[1]

Several strategies exist to limit the effect of social desirability bias. In 1985, Anton Nederhof compiled a list of techniques and methodological strategies for researchers to use to mitigate the effects of social desirability bias in their studies.[2] Most of these strategies involve deceiving the subject, or are related to the way questions in surveys and questionnaires are presented to those in a study. A condensed list of these strategies follows:

- Ballot-box method: This method allows a subject to anonymously self-complete a questionnaire and submit it to a locked "ballot box", thereby concealing their responses from an interviewer and affording the participant an additional layer of assured concealment from perceived social repercussion.[33]

- Forced-choice items: This technique hopes to generate questions that are equal in desirability to prevent a socially desirable response in one direction or another.[2]

- Neutral questions: The goal of this strategy is to use questions that are rated as neutral by a wide range of participants so that socially desirable responding does not apply.[2]

- Randomized response technique: This technique allows participants to answer a question that is randomly selected from a set of questions. The researcher in this technique does not know which question the subject responds to, so subjects are more likely to answer truthfully. Researchers can then use statistics to interpret the anonymous data.[2]

- Self-administered questionnaires: This strategy involves isolating the participant before they begin answering the survey or questionnaire to hopefully remove any social cues the researcher may present to the participant.[2]

- Bogus-pipeline: This technique involves a form of deception, where researchers convince a subject through a series of rigged demonstrations that a machine can accurately determine if a participant is being truthful when responding to certain questions. After the participant completes the survey or questionnaire, they are debriefed. This is a rare technique, and does not see much use because of the cost, time commitment and because it is a one-use only technique for each participant.[2]

- Selection of interviewers: This strategy allows participants to select the person or persons who will be conducting the interview or presiding over the experiment. The hope is that, with a higher degree of rapport, subjects will be more likely to answer honestly.[2]

- Proxy subjects: Instead of asking a person directly, this strategy questions someone who is close to or knows the target individual well. This technique is generally limited to questions about behavior, and is not adequate for asking about attitudes or beliefs.[2]
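
To illustrate how statistics can recover a prevalence from randomized responses, the following Python sketch uses Warner's classic two-statement design. This is one well-known variant, chosen here as an assumption and not described in the text above. A private random device gives each respondent the sensitive statement ("I have the trait") with probability p, and its negation otherwise. The researcher sees only "yes" or "no". If λ is the observed share of "yes" answers and π the true prevalence, then λ = pπ + (1 − p)(1 − π), which can be solved for π. The function names and parameter values are hypothetical.

```python
import random


def warner_estimate(yes_share, p):
    """Estimate the prevalence of the sensitive trait from the share of "yes" answers.

    p is the probability that a respondent receives the sensitive statement
    and not its negation. At p = 0.5 the answers carry no information.
    """
    if p == 0.5:
        raise ValueError("p = 0.5 makes the prevalence unidentifiable")
    return (yes_share - (1 - p)) / (2 * p - 1)


def simulate_yes_share(true_prevalence, p, n, seed=0):
    """Simulate n truthful respondents and return the share answering "yes"."""
    rng = random.Random(seed)
    yes = 0
    for _ in range(n):
        has_trait = rng.random() < true_prevalence
        gets_sensitive_statement = rng.random() < p
        # A truthful respondent agrees with the statement drawn for them.
        agrees = has_trait if gets_sensitive_statement else not has_trait
        yes += agrees
    return yes / n
```

Calling `warner_estimate(simulate_yes_share(0.3, 0.7, 50000), 0.7)` should return a value close to the simulated prevalence of 0.3, even though no individual answer reveals whether that respondent has the trait. The estimate becomes noisier as p approaches 0.5, which is the price paid for stronger privacy.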

The degree of effectiveness of each of these techniques or strategies differs depending on the situation and the question asked.[2] To reduce social desirability bias across a wide range of situations, it has been suggested that researchers use a combination of these techniques.[1][2] When selecting the best method for reducing social desirability bias, validation should not rest on a "more is better" assumption (that a higher stated prevalence of the behavior of interest indicates a better method), as this is a "weak validation" that does not always guarantee the best results. Instead, "ground-truthed" comparisons of observed data with stated data should reveal the most accurate method.[33]

Related terminology

- Non-response bias is not the opposite of response bias and is not a type of cognitive bias: it occurs in a statistical survey if those who respond to the survey differ in the outcome variable from those who do not respond.

- Response rate is not a cognitive bias, but rather refers to the proportion of those asked to take the survey who complete it.

Highly vulnerable areas

Some areas or topics that are highly vulnerable to the various types of response bias include:

See also

- List of cognitive biases

- Compound question

- Loaded question

- Misinformation effect, similar effect for memory instead of opinion.

- Opinion poll

- Randomized response

- Total survey error

Notes

- ^ a b c d e f g h i j k l m n Furnham, Adrian (1986). "Response bias, social desirability and dissimulation". Personality and Individual Differences. 7 (3): 385–400. doi:10.1016/0191-8869(86)90014-0.

- ^ a b c d e f g h i j k l m n o p q r s t u Nederhof, Anton J. (1985). "Methods of coping with social desirability bias: A review". European Journal of Social Psychology. 15 (3): 263–280. doi:10.1002/ejsp.2420150303.

- ^ a b c d e f g h i j k l m n Orne, Martin T. (1962). "On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications". American Psychologist. 17 (11): 776–783. doi:10.1037/h0043424. S2CID 7975753.

- ^ a b Kalton, Graham; Schuman, Howard (1982). "The Effect of the Question on Survey Responses: A Review" (PDF). Journal of the Royal Statistical Society. Series A (General). 145 (1): 42–73. doi:10.2307/2981421. hdl:2027.42/146916. JSTOR 2981421. S2CID 151566559.

- ^ a b c d e f g h i j k Gove, W. R.; Geerken, M. R. (1977). "Response bias in surveys of mental health: An empirical investigation". American Journal of Sociology. 82 (6): 1289–1317. doi:10.1086/226466. JSTOR 2777936. PMID 889001. S2CID 40008515.

- ^ Hyman, H. (1954). Interviewing in Social Research. Chicago: University of Chicago Press.

- ^ a b c d e Clancy, Kevin; Gove, Walter (1974). "Sex Differences in Mental Illness: An Analysis of Response Bias in Self-Reports". American Journal of Sociology. 80 (1): 205–216. doi:10.1086/225767. JSTOR 2776967. S2CID 46255353.

- ^ a b Campbell, A.; Converse, P.; Rodgers, W. (1976). The Quality of American Life: Perceptions, Evaluations and Satisfaction. New York: Russell Sage.

- ^ Gove, Walter R.; McCorkel, James; Fain, Terry; Hughes, Michael D. (1976). "Response bias in community surveys of mental health: Systematic bias or random noise?". Social Science & Medicine. 10 (9–10): 497–502. doi:10.1016/0037-7856(76)90118-9. PMID 1006342.

- ^ a b Knäuper, Bärbel; Wittchen, Hans-Ulrich (1994). "Diagnosing major depression in the elderly: Evidence for response bias in standardized diagnostic interviews?". Journal of Psychiatric Research. 28 (2): 147–164. doi:10.1016/0022-3956(94)90026-4. PMID 7932277.

- ^ a b Fischer, Ronald (2004). "Standardization to Account for Cross-Cultural Response Bias: A Classification of Score Adjustment Procedures and Review of Research in JCCP". Journal of Cross-Cultural Psychology. 35 (3): 263–282. doi:10.1177/0022022104264122. S2CID 32046329.

- ^ a b c d e Reese, Robert J.; Gillaspy, J. Arthur; Owen, Jesse J.; Flora, Kevin L.; Cunningham, Linda C.; Archie, Danielle; Marsden, Troymichael (2013). "The Influence of Demand Characteristics and Social Desirability on Clients' Ratings of the Therapeutic Alliance". Journal of Clinical Psychology. 69 (7): 696–709. doi:10.1002/jclp.21946. PMID 23349082.

- ^ a b c Cronbach, L. J. (1942). "Studies of acquiescence as a factor in the true-false test". Journal of Educational Psychology. 33 (6): 401–415. doi:10.1037/h0054677.

- ^ Watson, D. (1992). "Correcting for Acquiescent Response Bias in the Absence of a Balanced Scale: An Application to Class Consciousness". Sociological Methods & Research. 21: 52–88. doi:10.1177/0049124192021001003. S2CID 122977362.

- ^ Moss, Simon. (2008). Acquiescence bias

- ^ a b c d Knowles, Eric S.; Nathan, Kobi T. (1997). "Acquiescent Responding in Self-Reports: Cognitive Style or Social Concern?". Journal of Research in Personality. 31 (2): 293–301. doi:10.1006/jrpe.1997.2180.

- ^ a b c d e f Meisenberg, Gerhard; Williams, Amandy (2008). "Are acquiescent and extreme response styles related to low intelligence and education?". Personality and Individual Differences. 44 (7): 1539–1550. doi:10.1016/j.paid.2008.01.010.

- ^ a b c d Podsakoff, Philip M.; MacKenzie, Scott B.; Lee, Jeong-Yeon; Podsakoff, Nathan P. (2003). "Common method biases in behavioral research: A critical review of the literature and recommended remedies". Journal of Applied Psychology. 88 (5): 879–903. doi:10.1037/0021-9010.88.5.879. hdl:2027.42/147112. PMID 14516251. S2CID 5281538.

- ^ "Courtesy Bias". alleydog.com. Retrieved 8 May 2020.