Interpolation: Difference between revisions

→Functional interpolation: Fixed typo Tags: Mobile edit Mobile web edit |

|||

| (139 intermediate revisions by 82 users not shown) | |||

| Line 1: | Line 1: | ||

{{Short description|Method for estimating new data within known data points}} |

|||

{{Other uses}} |

{{Other uses}} |

||

{{distinguish|Interpellation (disambiguation){{!}}Interpellation}} |

|||

{{more footnotes|date=October 2016}} |

{{more footnotes|date=October 2016}} |

||

In the [[mathematics|mathematical]] field of [[numerical analysis]], '''interpolation''' is a method of constructing new [[data points]] |

In the [[mathematics|mathematical]] field of [[numerical analysis]], '''interpolation''' is a type of [[estimation]], a method of constructing (finding) new [[data points]] based on the range of a [[discrete set]] of known data points.<ref>{{cite EB1911 |wstitle=Interpolation |volume=14 |pages=706–710 |first=William Fleetwood |last=Sheppard |author-link=William Fleetwood Sheppard}}</ref><ref>{{Cite book|last=Steffensen|first=J. F.|url=https://www.worldcat.org/oclc/867770894|title=Interpolation|date=2006|isbn=978-0-486-15483-1|edition=Second|location=Mineola, N.Y.|oclc=867770894}}</ref> |

||

In [[engineering]] and [[science]], one often has a number of data points, obtained by [[sampling (statistics)|sampling]] or [[experimentation]], which represent the values of a function for a limited number of values of the independent variable. It is often required to '''interpolate''' |

In [[engineering]] and [[science]], one often has a number of data points, obtained by [[sampling (statistics)|sampling]] or [[experimentation]], which represent the values of a function for a limited number of values of the [[Dependent and independent variables|independent variable]]. It is often required to '''interpolate'''; that is, estimate the value of that function for an intermediate value of the independent variable. |

||

A |

A closely related problem is the [[function approximation|approximation]] of a complicated function by a simple function. Suppose the formula for some given function is known, but too complicated to evaluate efficiently. A few data points from the original function can be interpolated to produce a simpler function which is still fairly close to the original. The resulting gain in simplicity may outweigh the loss from interpolation error and make the calculation faster. |

||

| ⚫ | [[File:Splined epitrochoid.svg|300px|thumb|An interpolation of a finite set of points on an [[epitrochoid]]. The points in red are connected by blue interpolated [[spline (mathematics)|spline curves]] deduced only from the red points. The interpolated curves have polynomial formulas much simpler than that of the original epitrochoid curve.]] |

||

| ⚫ | |||

| ⚫ | |||

==Example== |

==Example== |

||

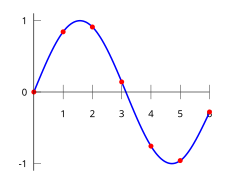

This table gives some values of an unknown function <math>f(x)</math>. |

|||

[[File:Interpolation Data.svg|right|thumb|230px|Plot of the data points as given in the table |

[[File:Interpolation Data.svg|right|thumb|230px|Plot of the data points as given in the table]] |

||

{| cellpadding=0 cellspacing=0 |

{| cellpadding=0 cellspacing=0 |

||

|width="20px"| |

|width="20px"| |

||

! <math>x</math> |

|||

! ''x'' |

|||

|width="10px"| |

|width="10px"| |

||

!colspan=3 align=center| |

!colspan=3 align=center| <math>f(x)</math> |

||

|- |

|- |

||

| || 0 || ||align=right| 0 |

| || 0 || ||align=right| 0 |

||

| Line 35: | Line 35: | ||

| || 6 || ||align=right| −0 || . || 2794 |

| || 6 || ||align=right| −0 || . || 2794 |

||

|} |

|} |

||

Interpolation provides a means of estimating the function at intermediate points, such as |

Interpolation provides a means of estimating the function at intermediate points, such as <math>x=2.5.</math> |

||

We describe some [[algorithm|methods]] of interpolation, differing in such properties as: accuracy, cost, number of data points needed, and [[smooth function|smoothness]] of the resulting [[interpolant]] function. |

|||

There are many different interpolation methods, some of which are described below. Some of the concerns to take into account when choosing an appropriate [[algorithm]] are: How accurate is the method? How expensive is it? How [[smooth function|smooth]] is the interpolant? How many data points are needed? |

|||

{{clear}} |

{{clear}} |

||

===Piecewise constant interpolation=== |

===Piecewise constant interpolation=== |

||

[[File:Piecewise constant.svg|thumb|right|Piecewise constant interpolation, or [[nearest-neighbor interpolation]] |

[[File:Piecewise constant.svg|thumb|right|Piecewise constant interpolation, or [[nearest-neighbor interpolation]]]] |

||

{{ |

{{Further|Nearest-neighbor interpolation}} |

||

The simplest interpolation method is to locate the nearest data value, and assign the same value. In simple problems, this method is unlikely to be used, as linear interpolation (see below) is almost as easy, but in higher-dimensional [[multivariate interpolation]], this could be a favourable choice for its speed and simplicity. |

The simplest interpolation method is to locate the nearest data value, and assign the same value. In simple problems, this method is unlikely to be used, as [[linear]] interpolation (see below) is almost as easy, but in higher-dimensional [[multivariate interpolation]], this could be a favourable choice for its speed and simplicity. |

||

{{clear}} |

{{clear}} |

||

| Line 49: | Line 49: | ||

[[File:Interpolation example linear.svg|right|thumb|230px|Plot of the data with linear interpolation superimposed]] |

[[File:Interpolation example linear.svg|right|thumb|230px|Plot of the data with linear interpolation superimposed]] |

||

{{Main|Linear interpolation}} |

{{Main|Linear interpolation}} |

||

One of the simplest methods is |

One of the simplest methods is linear interpolation (sometimes known as lerp). Consider the above example of estimating ''f''(2.5). Since 2.5 is midway between 2 and 3, it is reasonable to take ''f''(2.5) midway between ''f''(2) = 0.9093 and ''f''(3) = 0.1411, which yields 0.5252. |

||

Generally, linear interpolation takes two data points, say (''x''<sub>''a''</sub>,''y''<sub>''a''</sub>) and (''x''<sub>''b''</sub>,''y''<sub>''b''</sub>), and the interpolant is given by: |

Generally, linear interpolation takes two data points, say (''x''<sub>''a''</sub>,''y''<sub>''a''</sub>) and (''x''<sub>''b''</sub>,''y''<sub>''b''</sub>), and the interpolant is given by: |

||

:<math> y = y_a + \left( y_b-y_a \right) \frac{x-x_a}{x_b-x_a} \text{ at the point } \left( x,y \right) </math> |

:<math> y = y_a + \left( y_b-y_a \right) \frac{x-x_a}{x_b-x_a} \text{ at the point } \left( x,y \right) </math> |

||

:<math> \frac{y-y_a}{y_b-y_a} = \frac{x-x_a}{x_b-x_a} </math> |

:<math> \frac{y-y_a}{y_b-y_a} = \frac{x-x_a}{x_b-x_a} </math> |

||

:<math> \frac{y-y_a}{x-x_a} = \frac{y_b-y_a}{x_b-x_a} </math> |

:<math> \frac{y-y_a}{x-x_a} = \frac{y_b-y_a}{x_b-x_a} </math> |

||

This previous equation states that the slope of the new line between <math> (x_a,y_a) </math> and <math> (x,y) </math> is the same as the slope of the line between <math> (x_a,y_a) </math> and <math> (x_b,y_b) </math> |

This previous equation states that the slope of the new line between <math> (x_a,y_a) </math> and <math> (x,y) </math> is the same as the slope of the line between <math> (x_a,y_a) </math> and <math> (x_b,y_b) </math> |

||

| Line 69: | Line 66: | ||

:<math> |f(x)-g(x)| \le C(x_b-x_a)^2 \quad\text{where}\quad C = \frac18 \max_{r\in[x_a,x_b]} |g''(r)|. </math> |

:<math> |f(x)-g(x)| \le C(x_b-x_a)^2 \quad\text{where}\quad C = \frac18 \max_{r\in[x_a,x_b]} |g''(r)|. </math> |

||

In words, the error is proportional to the square of the distance between the data points. The error in some other methods, including polynomial interpolation and spline interpolation (described below), is proportional to higher powers of the distance between the data points. These methods also produce smoother interpolants. |

In words, the error is proportional to the square of the distance between the data points. The error in some other methods, including [[polynomial interpolation]] and spline interpolation (described below), is proportional to higher powers of the distance between the data points. These methods also produce smoother interpolants. |

||

{{clear}} |

{{clear}} |

||

===Polynomial interpolation=== |

===Polynomial interpolation=== |

||

[[File:Interpolation example polynomial.svg|right|thumb|230px|Plot of the data with polynomial interpolation applied]] |

[[File:Interpolation example polynomial.svg|right|thumb|230px|Plot of the data with polynomial interpolation applied]] |

||

| ⚫ | |||

Polynomial interpolation is a generalization of linear interpolation. Note that the linear interpolant is a [[linear function]]. We now replace this interpolant with a [[polynomial]] of higher [[degree of a polynomial|degree]]. |

|||

Consider again the problem given above. The following sixth degree polynomial goes through all the seven points: |

Consider again the problem given above. The following sixth degree polynomial goes through all the seven points: |

||

:<math> f(x) = -0.0001521 x^6 - 0.003130 x^5 + 0.07321 x^4 - 0.3577 x^3 + 0.2255 x^2 + 0.9038 x. </math> |

:<math> f(x) = -0.0001521 x^6 - 0.003130 x^5 + 0.07321 x^4 - 0.3577 x^3 + 0.2255 x^2 + 0.9038 x. </math> |

||

<!-- Coefficients are 0, 0.903803333333334, 0.22549749999997, -0.35772291666664, 0.07321458333332, -0.00313041666667, -0.00015208333333. --> |

<!-- Coefficients are 0, 0.903803333333334, 0.22549749999997, -0.35772291666664, 0.07321458333332, -0.00313041666667, -0.00015208333333. --> |

||

Substituting ''x'' = 2.5, we find that ''f''(2.5) = 0. |

Substituting ''x'' = 2.5, we find that ''f''(2.5) ≈ 0.59678. |

||

Generally, if we have ''n'' data points, there is exactly one polynomial of degree at most ''n''−1 going through all the data points. The interpolation error is proportional to the distance between the data points to the power ''n''. Furthermore, the interpolant is a polynomial and thus infinitely differentiable. So, we see that polynomial interpolation overcomes most of the problems of linear interpolation. |

Generally, if we have ''n'' data points, there is exactly one polynomial of degree at most ''n''−1 going through all the data points. The interpolation error is proportional to the distance between the data points to the power ''n''. Furthermore, the interpolant is a polynomial and thus infinitely differentiable. So, we see that polynomial interpolation overcomes most of the problems of linear interpolation. |

||

| Line 86: | Line 83: | ||

However, polynomial interpolation also has some disadvantages. Calculating the interpolating polynomial is computationally expensive (see [[Computational complexity theory|computational complexity]]) compared to linear interpolation. Furthermore, polynomial interpolation may exhibit oscillatory artifacts, especially at the end points (see [[Runge's phenomenon]]). |

However, polynomial interpolation also has some disadvantages. Calculating the interpolating polynomial is computationally expensive (see [[Computational complexity theory|computational complexity]]) compared to linear interpolation. Furthermore, polynomial interpolation may exhibit oscillatory artifacts, especially at the end points (see [[Runge's phenomenon]]). |

||

Polynomial interpolation can estimate local maxima and minima that are outside the range of the samples, unlike linear interpolation. For example, the interpolant above has a local maximum at ''x'' ≈ 1.566, ''f''(''x'') ≈ 1.003 and a local minimum at ''x'' ≈ 4.708, ''f''(''x'') ≈ −1.003. However, these maxima and minima may exceed the theoretical range of the |

Polynomial interpolation can estimate local maxima and minima that are outside the range of the samples, unlike linear interpolation. For example, the interpolant above has a local maximum at ''x'' ≈ 1.566, ''f''(''x'') ≈ 1.003 and a local minimum at ''x'' ≈ 4.708, ''f''(''x'') ≈ −1.003. However, these maxima and minima may exceed the theoretical range of the function; for example, a function that is always positive may have an interpolant with negative values, and whose inverse therefore contains false [[division by zero|vertical asymptotes]]. |

||

More generally, the shape of the resulting curve, especially for very high or low values of the independent variable, may be contrary to commonsense |

More generally, the shape of the resulting curve, especially for very high or low values of the independent variable, may be contrary to commonsense; that is, to what is known about the experimental system which has generated the data points. These disadvantages can be reduced by using spline interpolation or restricting attention to [[Chebyshev polynomials]]. |

||

{{clear}} |

{{clear}} |

||

| Line 95: | Line 92: | ||

{{Main|Spline interpolation}} |

{{Main|Spline interpolation}} |

||

Linear interpolation uses a linear function for each of intervals [''x''<sub>''k''</sub>,''x''<sub>''k+1''</sub>]. Spline interpolation uses low-degree polynomials in each of the intervals, and chooses the polynomial pieces such that they fit smoothly together. The resulting function is called a spline. |

|||

For instance, the [[natural cubic spline]] is [[piecewise]] cubic and twice continuously differentiable. Furthermore, its second derivative is zero at the end points. The natural cubic spline interpolating the points in the table above is given by |

For instance, the [[natural cubic spline]] is [[piecewise]] cubic and twice continuously differentiable. Furthermore, its second derivative is zero at the end points. The natural cubic spline interpolating the points in the table above is given by |

||

| Line 110: | Line 107: | ||

In this case we get ''f''(2.5) = 0.5972. |

In this case we get ''f''(2.5) = 0.5972. |

||

Like polynomial interpolation, spline interpolation incurs a smaller error than linear interpolation |

Like polynomial interpolation, spline interpolation incurs a smaller error than linear interpolation, while the interpolant is smoother and easier to evaluate than the high-degree polynomials used in polynomial interpolation. However, the global nature of the basis functions leads to ill-conditioning. This is completely mitigated by using splines of compact support, such as are implemented in Boost.Math and discussed in Kress.<ref>{{cite book|last1=Kress|first1=Rainer|title=Numerical Analysis|url=https://archive.org/details/springer_10.1007-978-1-4612-0599-9|year=1998|publisher=Springer |isbn=9781461205999}}</ref> |

||

{{Clear}} |

{{Clear}} |

||

| ⚫ | |||

| ⚫ | |||

| ⚫ | |||

| ⚫ | [[Gaussian process]] is a powerful non-linear interpolation tool. Many popular interpolation tools are actually equivalent to particular Gaussian processes. Gaussian processes can be used not only for fitting an interpolant that passes exactly through the given data points but also for regression |

||

Depending on the underlying discretisation of fields, different interpolants may be required. In contrast to other interpolation methods, which estimate functions on target points, mimetic interpolation evaluates the integral of fields on target lines, areas or volumes, depending on the type of field (scalar, vector, pseudo-vector or pseudo-scalar). |

|||

A key feature of mimetic interpolation is that [[vector calculus identities]] are satisfied, including [[Stokes' theorem]] and the [[divergence theorem]]. As a result, mimetic interpolation conserves line, area and volume integrals.<ref>{{Cite journal |last1=Pletzer |first1=Alexander |last2=Hayek |first2=Wolfgang |date=2019-01-01 |title=Mimetic Interpolation of Vector Fields on Arakawa C/D Grids |url=https://journals.ametsoc.org/view/journals/mwre/147/1/mwr-d-18-0146.1.xml |journal=Monthly Weather Review |language=EN |volume=147 |issue=1 |pages=3–16 |doi=10.1175/MWR-D-18-0146.1 |bibcode=2019MWRv..147....3P |s2cid=125214770 |issn=1520-0493 |access-date=2022-06-07 |archive-date=2022-06-07 |archive-url=https://web.archive.org/web/20220607041035/https://journals.ametsoc.org/view/journals/mwre/147/1/mwr-d-18-0146.1.xml |url-status=live }}</ref> Conservation of line integrals might be desirable when interpolating the [[electric field]], for instance, since the line integral gives the [[electric potential]] difference at the endpoints of the integration path.<ref>{{Citation |last1=Stern |first1=Ari |title=Geometric Computational Electrodynamics with Variational Integrators and Discrete Differential Forms |date=2015 |url=http://link.springer.com/10.1007/978-1-4939-2441-7_19 |work=Geometry, Mechanics, and Dynamics |volume=73 |pages=437–475 |editor-last=Chang |editor-first=Dong Eui |place=New York, NY |publisher=Springer New York |doi=10.1007/978-1-4939-2441-7_19 |isbn=978-1-4939-2440-0 |access-date=2022-06-15 |last2=Tong |first2=Yiying |last3=Desbrun |first3=Mathieu |last4=Marsden |first4=Jerrold E. |series=Fields Institute Communications |s2cid=15194760 |editor2-last=Holm |editor2-first=Darryl D. 
|editor3-last=Patrick |editor3-first=George |editor4-last=Ratiu |editor4-first=Tudor|arxiv=0707.4470 }}</ref> Mimetic interpolation ensures that the error of estimating the line integral of an electric field is the same as the error obtained by interpolating the potential at the end points of the integration path, regardless of the length of the integration path. |

|||

| ⚫ | |||

[[Linear interpolation|Linear]], [[Bilinear interpolation|bilinear]] and [[trilinear interpolation]] are also considered mimetic, even if it is the field values that are conserved (not the integral of the field). Apart from linear interpolation, area weighted interpolation can be considered one of the first mimetic interpolation methods to have been developed.<ref>{{Cite journal |last=Jones |first=Philip |title=First- and Second-Order Conservative Remapping Schemes for Grids in Spherical Coordinates |journal=Monthly Weather Review |year=1999 |volume=127 |issue=9 |pages=2204–2210|doi=10.1175/1520-0493(1999)127<2204:FASOCR>2.0.CO;2 |bibcode=1999MWRv..127.2204J |s2cid=122744293 |doi-access=free }}</ref> |

|||

==Functional interpolation== |

|||

The [[Theory of functional connections|Theory of Functional Connections]] (TFC) is a mathematical framework specifically developed for [https://www.mdpi.com/journal/mathematics/sections/functional_interpolation functional interpolation]. Given any interpolant that satisfies a set of constraints, TFC derives a functional that represents the entire family of interpolants satisfying those constraints, including those that are discontinuous or partially defined. These functionals identify the subspace of functions where the solution to a constrained optimization problem resides. Consequently, TFC transforms constrained optimization problems into equivalent unconstrained formulations. This transformation has proven highly effective in the solution of [[Differential equation|differential equations]]. TFC achieves this by constructing a constrained functional (a function of a free function), that inherently satisfies given constraints regardless of the expression of the free function. This simplifies solving various types of equations and significantly improves the efficiency and accuracy of methods like [[Physics-informed neural networks|Physics-Informed Neural Networks]] (PINNs). TFC offers advantages over traditional methods like [[Lagrange multiplier|Lagrange multipliers]] and [[spectral method]]s by directly addressing constraints analytically and avoiding iterative procedures, although it cannot currently handle inequality constraints. |

|||

==Function approximation== |

|||

Interpolation is a common way to approximate functions. Given a function <math>f:[a,b] \to \mathbb{R}</math> with a set of points <math>x_1, x_2, \dots, x_n \in [a, b]</math> one can form a function <math>s: [a,b] \to \mathbb{R}</math> such that <math>f(x_i)=s(x_i)</math> for <math>i=1, 2, \dots, n</math> (that is, that <math>s</math> interpolates <math>f</math> at these points). In general, an interpolant need not be a good approximation, but there are well-known and often reasonable conditions where it will be. For example, if <math>f\in C^4([a,b])</math> (four times continuously differentiable) then [[spline interpolation|cubic spline interpolation]] has an error bound given by <math>\|f-s\|_\infty \leq C \|f^{(4)}\|_\infty h^4</math> where <math>h = \max_{i=1,2, \dots, n-1} |x_{i+1}-x_i|</math> and <math>C</math> is a constant.<ref>{{cite journal |last1=Hall |first1=Charles A. |last2=Meyer |first2=Weston W. |title=Optimal Error Bounds for Cubic Spline Interpolation |journal=Journal of Approximation Theory |date=1976 |volume=16 |issue=2 |pages=105–122 |doi=10.1016/0021-9045(76)90040-X |doi-access=free }}</ref> |

|||

| ⚫ | |||

| ⚫ | [[Gaussian process]] is a powerful non-linear interpolation tool. Many popular interpolation tools are actually equivalent to particular Gaussian processes. Gaussian processes can be used not only for fitting an interpolant that passes exactly through the given data points but also for regression; that is, for fitting a curve through noisy data. In the geostatistics community Gaussian process regression is also known as [[Kriging]]. |

||

==Other forms== |

|||

Other forms of interpolation can be constructed by picking a different class of interpolants. For instance, rational interpolation is '''interpolation''' by [[rational function]]s using [[Padé approximant]], and [[trigonometric interpolation]] is interpolation by [[trigonometric polynomial]]s using [[Fourier series]]. Another possibility is to use [[wavelet]]s. |

Other forms of interpolation can be constructed by picking a different class of interpolants. For instance, rational interpolation is '''interpolation''' by [[rational function]]s using [[Padé approximant]], and [[trigonometric interpolation]] is interpolation by [[trigonometric polynomial]]s using [[Fourier series]]. Another possibility is to use [[wavelet]]s. |

||

The [[Whittaker–Shannon interpolation formula]] can be used if the number of data points is infinite. |

The [[Whittaker–Shannon interpolation formula]] can be used if the number of data points is infinite or if the function to be interpolated has compact support. |

||

Sometimes, we know not only the value of the function that we want to interpolate, at some points, but also its derivative. This leads to [[Hermite interpolation]] problems. |

Sometimes, we know not only the value of the function that we want to interpolate, at some points, but also its derivative. This leads to [[Hermite interpolation]] problems. |

||

| Line 127: | Line 138: | ||

==In higher dimensions== |

==In higher dimensions== |

||

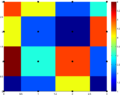

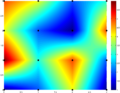

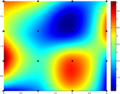

{{comparison_of_1D_and_2D_interpolation.svg|250px|}} |

{{comparison_of_1D_and_2D_interpolation.svg|250px|}} |

||

{{ |

{{Main|Multivariate interpolation}} |

||

Multivariate interpolation is the interpolation of functions of more than one variable. |

Multivariate interpolation is the interpolation of functions of more than one variable. |

||

Methods include [[bilinear interpolation]] and [[bicubic interpolation]] in two dimensions, and [[trilinear interpolation]] in three dimensions. |

Methods include [[nearest-neighbor interpolation]], [[bilinear interpolation]] and [[bicubic interpolation]] in two dimensions, and [[trilinear interpolation]] in three dimensions. |

||

They can be applied to gridded or scattered data. Mimetic interpolation generalizes to <math>n</math> dimensional spaces where <math>n > 3</math>.<ref>{{Cite book |last=Whitney |first=Hassler |title=Geometric Integration Theory |publisher=Dover Books on Mathematics |year=1957 |isbn=978-0486445830}}</ref><ref>{{Cite journal |last1=Pletzer |first1=Alexander |last2=Fillmore |first2=David |title=Conservative interpolation of edge and face data on n dimensional structured grids using differential forms |journal=Journal of Computational Physics |year=2015 |volume=302 |pages=21–40 |doi=10.1016/j.jcp.2015.08.029 |bibcode=2015JCoPh.302...21P |doi-access=free }}</ref> |

|||

They can be applied to gridded or scattered data. |

|||

<gallery> |

<gallery> |

||

| Line 138: | Line 149: | ||

</gallery> |

</gallery> |

||

== |

==In digital signal processing== |

||

In the domain of digital signal processing, the term interpolation refers to the process of converting a sampled digital signal (such as a sampled audio signal) to that of a higher sampling rate ([[Upsampling]]) using various digital filtering techniques ( |

In the domain of digital signal processing, the term interpolation refers to the process of converting a sampled digital signal (such as a sampled audio signal) to that of a higher sampling rate ([[Upsampling]]) using various digital filtering techniques (for example, convolution with a frequency-limited impulse signal). In this application there is a specific requirement that the harmonic content of the original signal be preserved without creating aliased harmonic content of the original signal above the original [[Nyquist frequency|Nyquist limit]] of the signal (that is, above fs/2 of the original signal sample rate). An early and fairly elementary discussion on this subject can be found in Rabiner and Crochiere's book ''Multirate Digital Signal Processing''.<ref>{{Cite book |title=R.E. Crochiere and L.R. Rabiner. (1983). Multirate Digital Signal Processing. Englewood Cliffs, NJ: Prentice–Hall. |isbn=0136051626 |last1=Crochiere |first1=Ronald E. |last2=Rabiner |first2=Lawrence R. |date=1983 |publisher=Prentice-Hall }}</ref> |

||

==Related concepts== |

==Related concepts== |

||

| Line 147: | Line 158: | ||

[[Approximation theory]] studies how to find the best approximation to a given function by another function from some predetermined class, and how good this approximation is. This clearly yields a bound on how well the interpolant can approximate the unknown function. |

[[Approximation theory]] studies how to find the best approximation to a given function by another function from some predetermined class, and how good this approximation is. This clearly yields a bound on how well the interpolant can approximate the unknown function. |

||

==Generalization== |

|||

| ⚫ | If we consider <math>x</math> as a variable in a [[topological space]], and the function <math>f(x)</math> mapping to a [[Banach space]], then the problem is treated as "interpolation of operators".<ref>Colin Bennett, Robert C. Sharpley, ''Interpolation of Operators'', Academic Press 1988</ref> The classical results about interpolation of operators are the [[Riesz–Thorin theorem]] and the [[Marcinkiewicz theorem]]. There are also many other subsequent results. |

||

==See also== |

==See also== |

||

{{Div col| |

{{Div col|colwidth=30em}} |

||

* [[Barycentric coordinate system |

* [[Barycentric coordinate system|Barycentric coordinates]] – for interpolating within a triangle or tetrahedron |

||

| ⚫ | |||

* [[Brahmagupta's interpolation formula]] |

* [[Brahmagupta's interpolation formula]] |

||

* [[ |

* [[Discretization]] |

||

* [[Fractal compression#Fractal interpolation|Fractal interpolation]] |

|||

* [[Imputation (statistics)]] |

* [[Imputation (statistics)]] |

||

* [[Lagrange polynomial|Lagrange interpolation]] |

* [[Lagrange polynomial|Lagrange interpolation]] |

||

* [[Missing data]] |

* [[Missing data]] |

||

| ⚫ | |||

* [[Newton–Cotes formulas]] |

* [[Newton–Cotes formulas]] |

||

* [[ |

* [[Radial basis function interpolation]] |

||

* [[Simple rational approximation]] |

* [[Simple rational approximation]] |

||

* [[Smoothing]] |

|||

{{ |

{{div col end}} |

||

==References== |

==References== |

||

{{Reflist}} |

|||

<references /> |

|||

==External links== |

==External links== |

||

{{Commons category}} |

|||

* Online tools for [http://tools.timodenk.com/linear-interpolation linear], [http://tools.timodenk.com/quadratic-interpolation quadratic], [http://tools.timodenk.com/cubic-spline-interpolation cubic spline], and [http://tools.timodenk.com/polynomial-interpolation polynomial] interpolation with visualisation and [[JavaScript]] source code. |

|||

* Online tools for [http://tools.timodenk.com/linear-interpolation linear] {{Webarchive|url=https://web.archive.org/web/20160918103516/http://tools.timodenk.com/linear-interpolation |date=2016-09-18 }}, [http://tools.timodenk.com/quadratic-interpolation quadratic] {{Webarchive|url=https://web.archive.org/web/20160918102633/http://tools.timodenk.com/quadratic-interpolation |date=2016-09-18 }}, [http://tools.timodenk.com/cubic-spline-interpolation cubic spline] {{Webarchive|url=https://web.archive.org/web/20160820175607/http://tools.timodenk.com/cubic-spline-interpolation |date=2016-08-20 }}, and [http://tools.timodenk.com/polynomial-interpolation polynomial] {{Webarchive|url=https://web.archive.org/web/20160918102129/http://tools.timodenk.com/polynomial-interpolation |date=2016-09-18 }} interpolation with visualisation and [[JavaScript]] source code. |

|||

* [http://sol.gfxile.net/interpolation/index.html Sol Tutorials - Interpolation Tricks] |

* [http://sol.gfxile.net/interpolation/index.html Sol Tutorials - Interpolation Tricks] {{Webarchive|url=https://web.archive.org/web/20210131063823/http://sol.gfxile.net/interpolation/index.html |date=2021-01-31 }} |

||

* [http://www.boost.org/doc/libs/release/libs/math/doc/html/math_toolkit/barycentric.html Barycentric rational interpolation in Boost.Math] |

|||

* [http://www.boost.org/doc/libs/release/libs/math/doc/html/math_toolkit/sf_poly/chebyshev.html Interpolation via the Chebyshev transform in Boost.Math] |

|||

{{Authority control}} |

|||

[[Category:Interpolation| ]] |

[[Category:Interpolation| ]] |

||

Latest revision as of 04:45, 12 December 2024

This article includes a list of general references, but it lacks sufficient corresponding inline citations. (October 2016) |

In the mathematical field of numerical analysis, interpolation is a type of estimation, a method of constructing (finding) new data points within the range of a discrete set of known data points.[1][2]

In engineering and science, one often has a number of data points, obtained by sampling or experimentation, which represent the values of a function for a limited number of values of the independent variable. It is often required to interpolate; that is, estimate the value of that function for an intermediate value of the independent variable.

A closely related problem is the approximation of a complicated function by a simple function. Suppose the formula for some given function is known, but too complicated to evaluate efficiently. A few data points from the original function can be interpolated to produce a simpler function which is still fairly close to the original. The resulting gain in simplicity may outweigh the loss from interpolation error and make the calculation faster.

Example

This table gives some values of an unknown function f(x).

| x | f(x) |
|---|------|
| 0 | 0 |
| 1 | 0.8415 |
| 2 | 0.9093 |
| 3 | 0.1411 |
| 4 | −0.7568 |
| 5 | −0.9589 |
| 6 | −0.2794 |

Interpolation provides a means of estimating the function at intermediate points, such as x = 2.5.

We describe some methods of interpolation, differing in such properties as: accuracy, cost, number of data points needed, and smoothness of the resulting interpolant function.

Piecewise constant interpolation


The simplest interpolation method is to locate the nearest data value, and assign the same value. In simple problems, this method is unlikely to be used, as linear interpolation (see below) is almost as easy, but in higher-dimensional multivariate interpolation, this could be a favourable choice for its speed and simplicity.
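The nearest-value rule can be sketched in a few lines of Python. This is only an illustration using the table above; the function name `nearest_neighbor` and the tie-breaking rule (the left sample wins when the query is exactly midway) are assumptions made here, not part of the article.

```python
# Sample data from the table above: x and f(x).
xs = [0, 1, 2, 3, 4, 5, 6]
ys = [0.0, 0.8415, 0.9093, 0.1411, -0.7568, -0.9589, -0.2794]


def nearest_neighbor(x, xs, ys):
    """Return the sample value whose x-coordinate is closest to x.

    Ties resolve to the left sample because min() keeps the first
    minimum it encounters; that rule is an arbitrary choice.
    """
    best = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
    return ys[best]


print(nearest_neighbor(2.4, xs, ys))  # uses the sample at x = 2
print(nearest_neighbor(2.6, xs, ys))  # uses the sample at x = 3
```

The resulting interpolant is a step function, so it jumps between neighbouring sample values instead of varying continuously.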

Linear interpolation


One of the simplest methods is linear interpolation (sometimes known as lerp). Consider the above example of estimating f(2.5). Since 2.5 is midway between 2 and 3, it is reasonable to take f(2.5) midway between f(2) = 0.9093 and f(3) = 0.1411, which yields 0.5252.

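Generally, linear interpolation takes two data points, say $(x_a, y_a)$ and $(x_b, y_b)$, and the interpolant at the point $(x, y)$ is given by:

$$y = y_a + (y_b - y_a)\,\frac{x - x_a}{x_b - x_a}, \qquad \text{or equivalently} \qquad \frac{y - y_a}{x - x_a} = \frac{y_b - y_a}{x_b - x_a}.$$

This last equation states that the slope of the new line between $(x_a, y_a)$ and $(x, y)$ is the same as the slope of the line between $(x_a, y_a)$ and $(x_b, y_b)$.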

Linear interpolation is quick and easy, but it is not very precise. Another disadvantage is that the interpolant is not differentiable at the data points $x_k$.
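The calculation above can be sketched in a few lines of Python. The helper names `lerp` and `interp_linear` are illustrative assumptions, and the data are the sample values from the example table.

```python
from bisect import bisect_right

def lerp(xa, ya, xb, yb, x):
    """Value at x of the straight line through (xa, ya) and (xb, yb)."""
    return ya + (yb - ya) * (x - xa) / (xb - xa)

def interp_linear(xs, ys, x):
    """Piecewise linear interpolant through sorted samples (xs, ys)."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x is outside the range of the data")
    # Index i of the interval [xs[i], xs[i + 1]] that contains x.
    i = min(bisect_right(xs, x) - 1, len(xs) - 2)
    return lerp(xs[i], ys[i], xs[i + 1], ys[i + 1], x)

xs = [0, 1, 2, 3, 4, 5, 6]
ys = [0.0, 0.8415, 0.9093, 0.1411, -0.7568, -0.9589, -0.2794]

print(round(interp_linear(xs, ys, 2.5), 4))  # midway between f(2) and f(3)
```

For $x = 2.5$ this reproduces the value 0.5252 obtained above by averaging $f(2)$ and $f(3)$.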

The following error estimate shows that linear interpolation is not very precise. Denote the function which we want to interpolate by $g$, and suppose that $x$ lies between $x_a$ and $x_b$ and that $g$ is twice continuously differentiable. Then the linear interpolation error is

$$|f(x) - g(x)| \leq C (x_b - x_a)^2 \quad \text{where} \quad C = \frac{1}{8} \max_{r \in [x_a, x_b]} |g''(r)|.$$

In words, the error is proportional to the square of the distance between the data points. The error in some other methods, including polynomial interpolation and spline interpolation (described below), is proportional to higher powers of the distance between the data points. These methods also produce smoother interpolants.
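The bound can be checked numerically. The sketch below assumes, purely for illustration, that the underlying function is $g(x) = \sin x$ (the tabulated values agree with the sine function to four decimals, but the article treats the function as unknown). It compares the actual error at $x = 2.5$ with the bound $\tfrac{1}{8}\max|g''|\,(x_b - x_a)^2$ on the interval $[2, 3]$.

```python
import math

def linear_error_bound(max_abs_second_derivative, xa, xb):
    """Upper bound (1/8) * max|g''| * (xb - xa)^2 on the linear interpolation error."""
    return max_abs_second_derivative * (xb - xa) ** 2 / 8

g = math.sin  # assumed underlying function, for illustration only
xa, xb, x = 2.0, 3.0, 2.5

# Linear interpolant through (xa, g(xa)) and (xb, g(xb)), evaluated at x.
f_x = g(xa) + (g(xb) - g(xa)) * (x - xa) / (xb - xa)
actual_error = abs(f_x - g(x))

# g'' = -sin, and |sin| is decreasing on [2, 3], so its maximum there is sin(2).
bound = linear_error_bound(abs(math.sin(xa)), xa, xb)

print(f"actual error = {actual_error:.4f}, bound = {bound:.4f}")
```

Under this assumption the actual error (about 0.073) stays below the bound (about 0.114), as the estimate requires.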

Polynomial interpolation


Polynomial interpolation is a generalization of linear interpolation. Note that the linear interpolant is a linear function. We now replace this interpolant with a polynomial of higher degree.

Consider again the problem given above. The following sixth degree polynomial goes through all the seven points:

$$f(x) = -0.0001521x^6 - 0.003130x^5 + 0.07321x^4 - 0.3577x^3 + 0.2255x^2 + 0.9038x.$$

Substituting x = 2.5, we find that f(2.5) ≈ 0.59678.

Generally, if we have n data points, there is exactly one polynomial of degree at most n−1 going through all the data points. The interpolation error is proportional to the distance between the data points to the power n. Furthermore, the interpolant is a polynomial and thus infinitely differentiable. So, we see that polynomial interpolation overcomes most of the problems of linear interpolation.
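One way to evaluate the unique interpolating polynomial without computing its coefficients is the Lagrange form. The following is a minimal sketch under that choice (the article does not prescribe a particular algorithm, and the function name `lagrange_eval` is an illustrative assumption), using the sample values from the example table.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate at x the polynomial of degree <= n-1 through the n points (xs, ys)."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        # Lagrange basis polynomial: equals 1 at xj and 0 at every other node.
        basis = 1.0
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total

xs = [0, 1, 2, 3, 4, 5, 6]
ys = [0.0, 0.8415, 0.9093, 0.1411, -0.7568, -0.9589, -0.2794]

print(round(lagrange_eval(xs, ys, 2.5), 4))
```

Evaluated directly from the four-decimal table values, this gives about 0.5965 at $x = 2.5$, close to the value quoted above; a small difference is expected when a result is computed from rounded polynomial coefficients instead.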

However, polynomial interpolation also has some disadvantages. Calculating the interpolating polynomial is computationally expensive (see computational complexity) compared to linear interpolation. Furthermore, polynomial interpolation may exhibit oscillatory artifacts, especially at the end points (see Runge's phenomenon).

Polynomial interpolation can estimate local maxima and minima that are outside the range of the samples, unlike linear interpolation. For example, the interpolant above has a local maximum at x ≈ 1.566, f(x) ≈ 1.003 and a local minimum at x ≈ 4.708, f(x) ≈ −1.003. However, these maxima and minima may exceed the theoretical range of the function; for example, a function that is always positive may have an interpolant that takes negative values, so that the reciprocal of the interpolant has false vertical asymptotes.

More generally, the shape of the resulting curve, especially for very high or low values of the independent variable, may be contrary to common sense; that is, to what is known about the experimental system which has generated the data points. These disadvantages can be reduced by using spline interpolation or restricting attention to Chebyshev polynomials.
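The oscillation near the end points can be reproduced with the classic test function $1/(1 + 25x^2)$ on $[-1, 1]$. The sketch below is illustrative only: the test function, the choice of 11 nodes, and the helper names are assumptions, and Chebyshev nodes are used as one common way of "restricting attention to Chebyshev polynomials". It compares the maximum error of the interpolating polynomial for equally spaced nodes and for Chebyshev nodes.

```python
import math

def lagrange_eval(xs, ys, x):
    """Evaluate the interpolating polynomial through (xs, ys) at x (Lagrange form)."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0
        for m, xm in enumerate(xs):
            if m != j:
                basis *= (x - xm) / (xj - xm)
        total += yj * basis
    return total

def runge(x):
    """Classic test function for Runge's phenomenon."""
    return 1.0 / (1.0 + 25.0 * x * x)

def max_error(nodes, samples=2001):
    """Largest |interpolant - function| on a fine grid over [-1, 1]."""
    values = [runge(t) for t in nodes]
    grid = [-1.0 + 2.0 * k / (samples - 1) for k in range(samples)]
    return max(abs(lagrange_eval(nodes, values, t) - runge(t)) for t in grid)

n = 11  # number of nodes, i.e. a degree-10 interpolant
equispaced = [-1.0 + 2.0 * k / (n - 1) for k in range(n)]
chebyshev = [math.cos((2 * k + 1) * math.pi / (2 * n)) for k in range(n)]

err_equi = max_error(equispaced)
err_cheb = max_error(chebyshev)
print(f"equispaced nodes: {err_equi:.3f}, Chebyshev nodes: {err_cheb:.3f}")
```

With equally spaced nodes the error near the end points is larger than the function itself, while clustering the nodes towards the end points keeps it much smaller.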

Spline interpolation


Linear interpolation uses a linear function for each of the intervals $[x_k, x_{k+1}]$. Spline interpolation uses low-degree polynomials in each of the intervals, and chooses the polynomial pieces such that they fit smoothly together. The resulting function is called a spline.

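For instance, the natural cubic spline is piecewise cubic and twice continuously differentiable. Furthermore, its second derivative is zero at the end points. The natural cubic spline interpolating the points in the table above is given by

$$f(x) = \begin{cases}
-0.1522x^3 + 0.9937x, & \text{if } x \in [0,1], \\
-0.01258x^3 - 0.4189x^2 + 1.4126x - 0.1396, & \text{if } x \in [1,2], \\
0.1403x^3 - 1.3359x^2 + 3.2467x - 1.3623, & \text{if } x \in [2,3], \\
0.1579x^3 - 1.4945x^2 + 3.7225x - 1.8381, & \text{if } x \in [3,4], \\
0.05375x^3 - 0.2450x^2 - 1.2756x + 4.8259, & \text{if } x \in [4,5], \\
-0.1871x^3 + 3.3673x^2 - 19.3370x + 34.9282, & \text{if } x \in [5,6].
\end{cases}$$

In this case we get f(2.5) = 0.5972.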

Like polynomial interpolation, spline interpolation incurs a smaller error than linear interpolation, while the interpolant is smoother and easier to evaluate than the high-degree polynomials used in polynomial interpolation. However, the global nature of the basis functions leads to ill-conditioning. This is completely mitigated by using splines of compact support, such as are implemented in Boost.Math and discussed in Kress.[3]
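A natural cubic spline can be computed by solving a tridiagonal system for the second derivatives at the data points. The following is a minimal pure-Python sketch of that standard construction; the function name `natural_cubic_spline` is an illustrative assumption, and the data are the sample values from the example table.

```python
from bisect import bisect_right

def natural_cubic_spline(xs, ys):
    """Return a function that evaluates the natural cubic spline through (xs, ys)."""
    n = len(xs)
    h = [xs[i + 1] - xs[i] for i in range(n - 1)]

    # Tridiagonal system for the second derivatives m[0..n-1].
    # The first and last rows impose the natural conditions m[0] = m[n-1] = 0.
    lower = [0.0] + [h[i - 1] for i in range(1, n - 1)] + [0.0]
    diag = [1.0] + [2.0 * (h[i - 1] + h[i]) for i in range(1, n - 1)] + [1.0]
    upper = [0.0] + [h[i] for i in range(1, n - 1)] + [0.0]
    rhs = [0.0] + [
        6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
        for i in range(1, n - 1)
    ] + [0.0]

    # Thomas algorithm: forward elimination, then back substitution.
    for i in range(1, n):
        w = lower[i] / diag[i - 1]
        diag[i] -= w * upper[i - 1]
        rhs[i] -= w * rhs[i - 1]
    m = [0.0] * n
    m[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        m[i] = (rhs[i] - upper[i] * m[i + 1]) / diag[i]

    def spline(x):
        # Locate the interval [xs[i], xs[i + 1]] containing x (clamped at the ends).
        i = min(max(bisect_right(xs, x) - 1, 0), n - 2)
        a, b = xs[i + 1] - x, x - xs[i]
        return ((m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * h[i])
                + (ys[i] - m[i] * h[i] ** 2 / 6.0) * a / h[i]
                + (ys[i + 1] - m[i + 1] * h[i] ** 2 / 6.0) * b / h[i])

    return spline

xs = [0, 1, 2, 3, 4, 5, 6]
ys = [0.0, 0.8415, 0.9093, 0.1411, -0.7568, -0.9589, -0.2794]
s = natural_cubic_spline(xs, ys)
print(round(s(2.5), 4))
```

Computed directly from the four-decimal table values, this sketch gives about 0.5962 at $x = 2.5$, slightly different from the 0.5972 quoted above, presumably because that figure comes from rounded coefficients.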

Mimetic interpolation

Depending on the underlying discretisation of fields, different interpolants may be required. In contrast to other interpolation methods, which estimate functions on target points, mimetic interpolation evaluates the integral of fields on target lines, areas or volumes, depending on the type of field (scalar, vector, pseudo-vector or pseudo-scalar).

A key feature of mimetic interpolation is that vector calculus identities are satisfied, including Stokes' theorem and the divergence theorem. As a result, mimetic interpolation conserves line, area and volume integrals.[4] Conservation of line integrals might be desirable when interpolating the electric field, for instance, since the line integral gives the electric potential difference at the endpoints of the integration path.[5] Mimetic interpolation ensures that the error of estimating the line integral of an electric field is the same as the error obtained by interpolating the potential at the end points of the integration path, regardless of the length of the integration path.

Linear, bilinear and trilinear interpolation are also considered mimetic, even if it is the field values that are conserved (not the integral of the field). Apart from linear interpolation, area weighted interpolation can be considered one of the first mimetic interpolation methods to have been developed.[6]

Functional interpolation

The Theory of Functional Connections (TFC) is a mathematical framework specifically developed for functional interpolation. Given any interpolant that satisfies a set of constraints, TFC derives a functional that represents the entire family of interpolants satisfying those constraints, including those that are discontinuous or partially defined. These functionals identify the subspace of functions where the solution to a constrained optimization problem resides. Consequently, TFC transforms constrained optimization problems into equivalent unconstrained formulations. This transformation has proven highly effective in the solution of differential equations. TFC achieves this by constructing a constrained functional (a function of a free function) that inherently satisfies the given constraints regardless of the expression of the free function. This simplifies solving various types of equations and significantly improves the efficiency and accuracy of methods like Physics-Informed Neural Networks (PINNs). TFC offers advantages over traditional methods like Lagrange multipliers and spectral methods by directly addressing constraints analytically and avoiding iterative procedures, although it cannot currently handle inequality constraints.
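To illustrate the idea of a constrained functional, the sketch below uses a simple univariate form for two point constraints $y(x_0) = y_0$ and $y(x_1) = y_1$: a free function $g$ is corrected by linear "switching" terms so that the constraints hold for any choice of $g$. This particular expression and the name `constrained_expression` are assumptions chosen for illustration, not a statement of how any TFC software is implemented.

```python
import math

def constrained_expression(g, x0, y0, x1, y1):
    """Return y(x) = g(x) + w0(x) (y0 - g(x0)) + w1(x) (y1 - g(x1)).

    The weights w0 and w1 are linear in x with w0(x0) = 1, w0(x1) = 0 and
    w1(x0) = 0, w1(x1) = 1, so y(x0) = y0 and y(x1) = y1 for any free function g.
    """
    def y(x):
        w0 = (x1 - x) / (x1 - x0)
        w1 = (x - x0) / (x1 - x0)
        return g(x) + w0 * (y0 - g(x0)) + w1 * (y1 - g(x1))
    return y

# Three very different free functions all yield interpolants of the same constraints.
free_functions = [math.sin, math.exp, lambda x: x ** 3 - 2.0]
candidates = [constrained_expression(g, 0.0, 1.0, 2.0, -1.0) for g in free_functions]

for y in candidates:
    print(round(y(0.0), 12), round(y(2.0), 12))  # always the constrained values 1 and -1
```

Because every choice of $g$ satisfies the constraints, an optimizer can search over $g$ without handling the constraints explicitly.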

Function approximation

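Interpolation is a common way to approximate functions. Given a function $f:[a,b]\to\mathbb{R}$ with a set of points $x_1, x_2, \dots, x_n \in [a,b]$ one can form a function $s:[a,b]\to\mathbb{R}$ such that $f(x_i) = s(x_i)$ for $i = 1, 2, \dots, n$ (that is, $s$ interpolates $f$ at these points). In general, an interpolant need not be a good approximation, but there are well known and often reasonable conditions where it will be. For example, if $f \in C^4([a,b])$ (four times continuously differentiable) then cubic spline interpolation has an error bound given by $\|f - s\|_\infty \leq C \|f^{(4)}\|_\infty h^4$ where $h = \max_{i=1,2,\dots,n-1} |x_{i+1} - x_i|$ and $C$ is a constant.[7]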

Via Gaussian processes

A Gaussian process is a powerful non-linear interpolation tool. Many popular interpolation tools are actually equivalent to particular Gaussian processes. Gaussian processes can be used not only for fitting an interpolant that passes exactly through the given data points but also for regression; that is, for fitting a curve through noisy data. In the geostatistics community Gaussian process regression is also known as kriging.
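As a minimal sketch of noise-free Gaussian process interpolation, the posterior mean can be written as a weighted sum of kernel functions centred at the data points, with weights obtained from a linear solve. The squared-exponential kernel, the length scale of 1, the small diagonal `jitter`, and all function names below are assumptions made for illustration; the data are the sample values from the example table.

```python
import math

def rbf(a, b, length_scale=1.0):
    """Squared-exponential covariance between inputs a and b."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x

def gp_interpolant(xs, ys, length_scale=1.0, jitter=1e-10):
    """Posterior mean of a zero-mean GP conditioned on (almost) noise-free data."""
    K = [[rbf(a, b, length_scale) + (jitter if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    alpha = solve(K, ys)  # weights alpha = K^{-1} y

    def mean(x):
        return sum(w * rbf(x, xi, length_scale) for w, xi in zip(alpha, xs))
    return mean

xs = [0, 1, 2, 3, 4, 5, 6]
ys = [0.0, 0.8415, 0.9093, 0.1411, -0.7568, -0.9589, -0.2794]
gp_mean = gp_interpolant(xs, ys)
print(gp_mean(2.5))
```

Replacing the tiny jitter with a larger noise variance turns the same construction into regression, where the curve no longer passes exactly through the data.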

Other forms

Other forms of interpolation can be constructed by picking a different class of interpolants. For instance, rational interpolation is interpolation by rational functions using Padé approximants, and trigonometric interpolation is interpolation by trigonometric polynomials using Fourier series. Another possibility is to use wavelets.

The Whittaker–Shannon interpolation formula can be used if the number of data points is infinite or if the function to be interpolated has compact support.

Sometimes, we know not only the value of the function that we want to interpolate, at some points, but also its derivative. This leads to Hermite interpolation problems.

When each data point is itself a function, it can be useful to see the interpolation problem as a partial advection problem between each data point. This idea leads to the displacement interpolation problem used in transportation theory.

In higher dimensions

Figure: Black and red/yellow/green/blue dots correspond to the interpolated point and neighbouring samples, respectively. Their heights above the ground correspond to their values.

Multivariate interpolation is the interpolation of functions of more than one variable. Methods include nearest-neighbor interpolation, bilinear interpolation and bicubic interpolation in two dimensions, and trilinear interpolation in three dimensions. They can be applied to gridded or scattered data. Mimetic interpolation generalizes to $n$ dimensional spaces where $n > 3$.[8][9]

Figure panels: nearest neighbor, bilinear, and bicubic interpolation.
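Bilinear interpolation on a single rectangular grid cell can be sketched as two linear interpolations along $x$ followed by one along $y$. The function name `bilinear` and its argument order are illustrative assumptions.

```python
def bilinear(x, y, x0, x1, y0, y1, f00, f10, f01, f11):
    """Bilinear interpolation inside the cell [x0, x1] x [y0, y1].

    f00, f10, f01, f11 are the values at (x0, y0), (x1, y0), (x0, y1), (x1, y1).
    """
    tx = (x - x0) / (x1 - x0)
    ty = (y - y0) / (y1 - y0)
    bottom = f00 + tx * (f10 - f00)  # interpolate along x on the edge y = y0
    top = f01 + tx * (f11 - f01)     # interpolate along x on the edge y = y1
    return bottom + ty * (top - bottom)  # then interpolate along y

# Corner values of f(x, y) = 1 + 2x + 3y + 4xy on the unit square.
print(bilinear(0.3, 0.7, 0.0, 1.0, 0.0, 1.0, 1.0, 3.0, 4.0, 10.0))
```

The example function is itself bilinear, so the interpolant reproduces it exactly (up to rounding): the printed value agrees with $1 + 2(0.3) + 3(0.7) + 4(0.3)(0.7) = 4.54$.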

In digital signal processing

In the domain of digital signal processing, the term interpolation refers to the process of converting a sampled digital signal (such as a sampled audio signal) to one with a higher sampling rate (upsampling) using various digital filtering techniques (for example, convolution with a frequency-limited impulse signal). In this application there is a specific requirement that the harmonic content of the original signal be preserved without creating aliased harmonic content above the original Nyquist limit of the signal (that is, above $f_s/2$, where $f_s$ is the original sample rate). An early and fairly elementary discussion on this subject can be found in Rabiner and Crochiere's book Multirate Digital Signal Processing.[10]
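A minimal sketch of this kind of upsampling inserts zeros between the original samples and then convolves with a band-limited impulse. The Hann-windowed sinc kernel, the default half width of 8 original samples, and the function names are assumptions chosen for illustration; practical designs use more carefully specified filters.

```python
import math

def windowed_sinc_kernel(factor, half_width=8):
    """Hann-windowed sinc taps with cutoff at the original Nyquist frequency."""
    n_half = factor * half_width
    taps = []
    for k in range(-n_half, n_half + 1):
        t = k / factor  # time measured in original sample periods
        sinc = 1.0 if k == 0 else math.sin(math.pi * t) / (math.pi * t)
        window = 0.5 * (1.0 + math.cos(math.pi * k / n_half))
        taps.append(sinc * window)
    return taps

def upsample(signal, factor, half_width=8):
    """Raise the sampling rate by an integer factor (zero-stuffing + low-pass filter)."""
    stuffed = [0.0] * (len(signal) * factor)
    stuffed[::factor] = signal  # original samples, with zeros in between
    taps = windowed_sinc_kernel(factor, half_width)
    n_half = factor * half_width
    out = []
    for n in range(len(stuffed)):
        acc = 0.0
        for k, tap in enumerate(taps):
            idx = n - (k - n_half)  # symmetric kernel centred on output sample n
            if 0 <= idx < len(stuffed):
                acc += tap * stuffed[idx]
        out.append(acc)
    return out

# A slow sine wave sampled at 64 points, upsampled by a factor of 4.
signal = [math.sin(2 * math.pi * 0.05 * n) for n in range(64)]
up = upsample(signal, 4)
print(len(up), up[4 * 30 + 2], math.sin(2 * math.pi * 0.05 * 30.5))
```

Because the kernel is zero at every nonzero multiple of the upsampling factor, the original samples are reproduced unchanged and only the new in-between samples are estimated.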

Related concepts

The term extrapolation refers to estimating data points outside the range of known data points.

In curve fitting problems, the constraint that the interpolant has to go exactly through the data points is relaxed. It is only required to approach the data points as closely as possible (within some other constraints). This requires parameterizing the potential interpolants and having some way of measuring the error. In the simplest case this leads to least squares approximation.
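In the simplest case, the family of candidate curves is the set of straight lines and the error measure is the sum of squared residuals. The following minimal sketch uses the closed-form least-squares solution; the function name `fit_line` and the small data set are made up for illustration.

```python
def fit_line(xs, ys):
    """Least-squares straight line y = slope * x + intercept through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical noisy measurements scattered around a straight line.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]
slope, intercept = fit_line(xs, ys)
residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
print(slope, intercept, residuals)
```

Unlike an interpolant, the fitted line does not pass through the data points: the residuals are nonzero, and only their sum of squares is minimized.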

Approximation theory studies how to find the best approximation to a given function by another function from some predetermined class, and how good this approximation is. This clearly yields a bound on how well the interpolant can approximate the unknown function.

Generalization

If we consider $x$ as a variable in a topological space, and the function $f(x)$ mapping to a Banach space, then the problem is treated as "interpolation of operators".[11] The classical results about interpolation of operators are the Riesz–Thorin theorem and the Marcinkiewicz theorem. There are also many other subsequent results.

See also

- Barycentric coordinates – for interpolating within a triangle or tetrahedron

- Brahmagupta's interpolation formula

- Discretization

- Fractal interpolation

- Imputation (statistics)

- Lagrange interpolation

- Missing data

- Newton–Cotes formulas

- Radial basis function interpolation

- Simple rational approximation

- Smoothing

References

- Sheppard, William Fleetwood (1911). "Interpolation". In Chisholm, Hugh (ed.). Encyclopædia Britannica. Vol. 14 (11th ed.). Cambridge University Press. pp. 706–710.

- Steffensen, J. F. (2006). Interpolation (Second ed.). Mineola, N.Y. ISBN 978-0-486-15483-1. OCLC 867770894.

- Kress, Rainer (1998). Numerical Analysis. Springer. ISBN 9781461205999.

- Pletzer, Alexander; Hayek, Wolfgang (2019-01-01). "Mimetic Interpolation of Vector Fields on Arakawa C/D Grids". Monthly Weather Review. 147 (1): 3–16. Bibcode:2019MWRv..147....3P. doi:10.1175/MWR-D-18-0146.1. ISSN 1520-0493. S2CID 125214770. Archived from the original on 2022-06-07. Retrieved 2022-06-07.

- Stern, Ari; Tong, Yiying; Desbrun, Mathieu; Marsden, Jerrold E. (2015), Chang, Dong Eui; Holm, Darryl D.; Patrick, George; Ratiu, Tudor (eds.), "Geometric Computational Electrodynamics with Variational Integrators and Discrete Differential Forms", Geometry, Mechanics, and Dynamics, Fields Institute Communications, vol. 73, New York, NY: Springer New York, pp. 437–475, arXiv:0707.4470, doi:10.1007/978-1-4939-2441-7_19, ISBN 978-1-4939-2440-0, S2CID 15194760, retrieved 2022-06-15

- Jones, Philip (1999). "First- and Second-Order Conservative Remapping Schemes for Grids in Spherical Coordinates". Monthly Weather Review. 127 (9): 2204–2210. Bibcode:1999MWRv..127.2204J. doi:10.1175/1520-0493(1999)127<2204:FASOCR>2.0.CO;2. S2CID 122744293.

- Hall, Charles A.; Meyer, Weston W. (1976). "Optimal Error Bounds for Cubic Spline Interpolation". Journal of Approximation Theory. 16 (2): 105–122. doi:10.1016/0021-9045(76)90040-X.

- Whitney, Hassler (1957). Geometric Integration Theory. Dover Books on Mathematics. ISBN 978-0486445830.

- Pletzer, Alexander; Fillmore, David (2015). "Conservative interpolation of edge and face data on n dimensional structured grids using differential forms". Journal of Computational Physics. 302: 21–40. Bibcode:2015JCoPh.302...21P. doi:10.1016/j.jcp.2015.08.029.

- Crochiere, Ronald E.; Rabiner, Lawrence R. (1983). Multirate Digital Signal Processing. Englewood Cliffs, NJ: Prentice-Hall. ISBN 0136051626.

- Bennett, Colin; Sharpley, Robert C. (1988). Interpolation of Operators. Academic Press.

External links

- Online tools for linear, quadratic, cubic spline, and polynomial interpolation with visualisation and JavaScript source code.

- Sol Tutorials - Interpolation Tricks

- Barycentric rational interpolation in Boost.Math

- Interpolation via the Chebyshev transform in Boost.Math
