Artificial intelligence: Difference between revisions

Reverting possible vandalism by Special:Contributions/193.1.82.214 to version by 134.121.178.124. If this is a mistake, report it. Thanks, ClueBot. (89043) (Bot) |


Revision as of 16:02, 27 November 2007

The modern definition of artificial intelligence (or AI) is "the study and design of intelligent agents", where an intelligent agent is a system that perceives its environment and takes actions that maximize its chances of success.[1] John McCarthy, who coined the term in 1956,[2] defines it as "the science and engineering of making intelligent machines."[3] Other names for the field have been proposed, such as computational intelligence,[4] synthetic intelligence[4][5] or computational rationality.[6] The term artificial intelligence is also used to describe a property of machines or programs: the intelligence that the system demonstrates.

AI research uses tools and insights from many fields, including computer science, psychology, philosophy, neuroscience, cognitive science, linguistics, operations research, economics, control theory, probability, optimization and logic.[7] AI research also overlaps with tasks such as robotics, control systems, scheduling, data mining, logistics, speech recognition, facial recognition and many others.[8]

Perspectives on AI

The rise and fall of AI in public perception

The field was born at a conference on the campus of Dartmouth College in the summer of 1956.[9] Those who attended would become the leaders of AI research for many decades, especially John McCarthy, Marvin Minsky, Allen Newell and Herbert Simon, who founded AI laboratories at MIT, CMU and Stanford. They and their students wrote programs that were, to most people, simply astonishing:[10] computers were solving word problems in algebra, proving logical theorems and speaking English.[11] By the mid-1960s their research was heavily funded by DARPA,[12] and they were optimistic about the future of the new field:

- 1965, H. A. Simon: "machines will be capable, within twenty years, of doing any work a man can do"[13]

- 1967, Marvin Minsky: "Within a generation ... the problem of creating 'artificial intelligence' will substantially be solved."[14]

These predictions, and many like them, would not come true. The researchers had failed to recognize the difficulty of some of the problems they faced: the lack of raw computer power,[15] the intractable combinatorial explosion of their algorithms,[16] the difficulty of representing commonsense knowledge and doing commonsense reasoning,[17] the incredible difficulty of perception and motion[18] and the failings of logic.[19] In 1974, in response to the criticism of England's Sir James Lighthill and ongoing pressure from Congress to fund more productive projects, DARPA cut off all undirected, exploratory research in AI. This was the first AI Winter.[20]

In the early 1980s, the field was revived by the commercial success of expert systems, and by 1985 the market for AI had reached more than a billion dollars.[21] Minsky and others warned the community that enthusiasm for AI had spiraled out of control and that disappointment was sure to follow.[22] Minsky was right. Beginning with the collapse of the Lisp Machine market in 1987, AI once again fell into disrepute, and a second, more lasting AI Winter began.[23]

In the 1990s AI achieved its greatest successes, albeit somewhat behind the scenes. Artificial intelligence was adopted throughout the technology industry, providing the heavy lifting for logistics, data mining, medical diagnosis and many other areas.[24] The success was due to several factors: the incredible power of computers today (see Moore's law), a greater emphasis on solving specific subproblems, the creation of new ties between AI and other fields working on similar problems, and above all a new commitment by researchers to solid mathematical methods and rigorous scientific standards.[25]

1961-65 -- A. L. Samuel developed a program that learned to play checkers at master level.

1965 -- J. A. Robinson introduced resolution as an inference method in logic.

1965 -- Work on DENDRAL was begun at Stanford University by J. Lederberg, Edward Feigenbaum and Carl Djerassi. DENDRAL is an expert system that discovers molecular structure given only information about the constituents of the compound and mass spectra data. DENDRAL was the first knowledge-based expert system to be developed.

1968 -- Work on MACSYMA was initiated at MIT by Carl Engleman, William Martin and Joel Moses. MACSYMA is a large interactive program that solves numerous types of mathematical problems. Written in LISP, MACSYMA was a continuation of earlier work on SIN, a program for solving indefinite integration problems.

References on early work in AI include Pamela McCorduck's Machines Who Think (1979) and Newell and Simon's Human Problem Solving (1972).

The philosophy of AI

The strong AI vs. weak AI debate ("can a man-made artifact be conscious?") is still a hot topic amongst AI philosophers. This involves philosophy of mind and the mind-body problem. Most notably Roger Penrose in his book The Emperor's New Mind and John Searle with his "Chinese room" thought experiment argue that true consciousness cannot be achieved by formal logic systems, while Douglas Hofstadter in Gödel, Escher, Bach and Daniel Dennett in Consciousness Explained argue in favour of functionalism. In many strong AI supporters' opinions, artificial consciousness is considered the holy grail of artificial intelligence. Edsger Dijkstra famously opined that the debate had little importance: "The question of whether a computer can think is no more interesting than the question of whether a submarine can swim."

Epistemology, the study of knowledge, also makes contact with AI, as engineers find themselves debating similar questions to philosophers about how best to represent and use knowledge and information (e.g., semantic networks).

AI in myth and fiction

In science fiction AI is often portrayed as an upcoming power trying to overthrow human authority, usually in the form of futuristic humanoid robots. Best known examples include the films The Terminator and The Matrix, as well as TV shows such as the re-imagined Battlestar Galactica series.

Another common theme is humanity's suspicion and hatred of AIs and the AIs' attempts to gain human acceptance. Films include Bicentennial Man, A.I. Artificial Intelligence and The Iron Giant. This concept is also explored in the Uncanny Valley hypothesis.

Isaac Asimov wrote stories where engineers understood these potential problems and designed their robots accordingly. Positive examples of AIs include Robby from Forbidden Planet, R2-D2, C-3PO and Data (Star Trek). A negative example is the movie I, Robot, based on Asimov's stories, in which an AI positronic brain develops "her" own radical understanding of the "three laws of robotics." The book I, Robot does not take this view.

The inevitability of the integration of AI into human society is also argued by some science and futurist writers such as Kevin Warwick and Hans Moravec, and is a theme of the manga Ghost in the Shell.

The future of AI

AI research

Artificial intelligence is a young science and is still a fragmented collection of subfields. At present, there is no established unifying theory that links the subfields into a coherent whole.

Problems of AI research


Approaches to AI research


Conventional AI mostly involves methods now classified as machine learning, characterized by formalism and statistical analysis. This is also known as symbolic AI, logical AI, neat AI and Good Old Fashioned Artificial Intelligence (GOFAI). (Also see semantics.) Methods include:

- Expert systems: apply reasoning capabilities to reach a conclusion. An expert system can process large amounts of known information and provide conclusions based on them.

- Case-based reasoning: stores a set of problems and their answers in an organized data structure; each stored problem-answer pair is called a case. When presented with a new problem, a case-based reasoning system finds the case in its knowledge base that is most closely related to the new problem and presents that case's solution as output, with suitable modifications.[26]

- Bayesian networks

- Behavior-based AI: a modular method of building AI systems by hand.
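The retrieval step of case-based reasoning described in the list above can be sketched in a few lines of Python. This is only an illustrative sketch: the `Case` class, the `similarity` measure (fraction of matching features) and the troubleshooting cases are assumptions made for the example, not part of any particular system, and the adaptation step ("suitable modifications") is left out.

```python
from dataclasses import dataclass


@dataclass
class Case:
    problem: dict   # feature name -> value (hypothetical representation)
    solution: str


def similarity(a: dict, b: dict) -> float:
    """Fraction of features (over the union of both problems) that match."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    matches = sum(1 for k in keys if a.get(k) == b.get(k))
    return matches / len(keys)


def retrieve(case_base: list, problem: dict) -> Case:
    """Return the stored case most closely related to the new problem."""
    return max(case_base, key=lambda case: similarity(case.problem, problem))


# A tiny, made-up knowledge base of troubleshooting cases.
case_base = [
    Case({"symptom": "no_power", "device": "laptop"}, "check the charger"),
    Case({"symptom": "no_sound", "device": "laptop"}, "check the audio driver"),
    Case({"symptom": "no_power", "device": "desktop"}, "check the power supply"),
]

best = retrieve(case_base, {"symptom": "no_power", "device": "desktop"})
```

A real system would normally use a richer similarity measure and would adapt the retrieved solution before presenting it.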

Computational intelligence involves iterative development or learning (e.g., parameter tuning in connectionist systems). Learning is based on empirical data and is associated with non-symbolic AI, scruffy AI and soft computing. Subjects in computational intelligence as defined by IEEE Computational Intelligence Society mainly include:

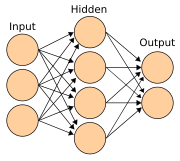

- Neural networks: trainable systems with very strong pattern recognition capabilities.

- Fuzzy systems: techniques for reasoning under uncertainty that have been widely used in modern industrial and consumer product control systems; they are capable of working with concepts such as 'hot', 'cold', 'warm' and 'boiling'.

- Evolutionary computation: applies biologically inspired concepts such as populations, mutation and survival of the fittest to generate increasingly better solutions to the problem. These methods most notably divide into evolutionary algorithms (e.g., genetic algorithms) and swarm intelligence (e.g., ant algorithms).
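As a rough illustration of the evolutionary computation item above, the following Python sketch runs a very small genetic algorithm on a toy problem (maximizing the number of 1s in a bit string). The function names, the toy fitness function and all parameter values are assumptions chosen for the example; practical genetic algorithms differ in many details.

```python
import random


def fitness(bits):
    """Toy fitness: the number of 1s in the bit string."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    history = [max(fitness(ind) for ind in population)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        survivors = ranked[: pop_size // 2]   # survival of the fittest
        children = [ranked[0][:]]             # elitism: keep the best unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, n_bits)    # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - b if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = children
        history.append(max(fitness(ind) for ind in population))
    return max(population, key=fitness), history


best, history = evolve()
```

Because the best individual is copied unchanged into each new generation, the best fitness recorded in `history` never decreases from one generation to the next.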

With hybrid intelligent systems, attempts are made to combine these two groups. Expert inference rules can be generated through neural networks, or production rules can be derived from statistical learning, as in ACT-R or CLARION (see References below). It is thought that the human brain uses multiple techniques to both formulate and cross-check results. Thus, systems integration is seen as promising and perhaps necessary for true AI, especially the integration of symbolic and connectionist models (e.g., as advocated by Ron Sun).

Conventional AI research focuses on attempts to mimic human intelligence through symbol manipulation and symbolically structured knowledge bases. This approach limits the situations to which conventional AI can be applied. Lotfi Zadeh stated that "we are also in possession of computational tools which are far more effective in the conception and design of intelligent systems than the predicate-logic-based methods which form the core of traditional AI." These techniques, which include fuzzy logic, have become known as soft computing. These often biologically inspired methods stand in contrast to conventional AI and compensate for the shortcomings of symbolicism.[27] These two methodologies have also been labeled as neats vs. scruffies, with neats emphasizing the use of logic and formal representation of knowledge while scruffies take an application-oriented heuristic bottom-up approach.[28]

Tools of AI research


Production systems

Many expert systems are organized collections of if-then statements, called productions. These can include stochastic elements, producing intrinsic variation, or rely on variation produced in response to a dynamic environment.

They are built around automated inference engines including forward reasoning and backwards reasoning. Based on certain conditions ("if") the system infers certain consequences ("then").
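Forward reasoning over if-then productions can be sketched as follows. This is a minimal illustration, assuming each production is represented as a pair of a tuple of conditions and a single conclusion; the rule set and fact names are invented for the example.

```python
def forward_chain(rules, facts):
    """Apply if-then productions repeatedly until no new fact can be inferred."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule if every condition ("if") is a known fact
            # and the consequence ("then") has not been inferred yet.
            if conclusion not in facts and set(conditions) <= facts:
                facts.add(conclusion)
                changed = True
    return facts


# Hypothetical productions: (conditions, conclusion).
rules = [
    (("has_fur", "gives_milk"), "mammal"),
    (("mammal", "eats_meat"), "carnivore"),
    (("carnivore", "has_stripes"), "tiger"),
]

derived = forward_chain(
    rules, {"has_fur", "gives_milk", "eats_meat", "has_stripes"}
)
```

Backward reasoning would instead start from a goal such as `"tiger"` and work back through the rules to the facts that would support it.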

Classifiers

In the simplest case, AI applications can be divided into two types: classifiers ("if shiny then diamond") and controllers ("if shiny then pick up"). Controllers do however also classify conditions before inferring actions, and therefore classification forms a central part of most AI systems.

Classifiers make use of pattern recognition for condition matching. In many cases this means finding the closest match rather than an exact one.

Classifiers are functions that can be tuned according to examples, making them very attractive for use in AI. These examples are known as observations or patterns. In supervised learning, each pattern belongs to a certain predefined class. A class can be seen as a decision that has to be made. All the observations combined with their class labels are known as a data set.

When a new observation is received, that observation is classified based on previous experience. A classifier can be trained in various ways; there are mainly statistical and machine learning approaches.
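One simple way to classify a new observation from previous experience is the k-nearest neighbor rule, sketched below in Python. The two-feature data set (for example, shininess and hardness scores) and its class labels are made up for illustration, and `knn_classify` is a hypothetical helper name rather than a library function.

```python
import math
from collections import Counter


def knn_classify(data_set, observation, k=3):
    """Label an observation by majority vote among its k closest patterns."""
    neighbours = sorted(
        data_set, key=lambda item: math.dist(item[0], observation)
    )[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Each entry pairs an observation (a feature vector) with its class label.
data_set = [
    ((0.90, 0.80), "diamond"),
    ((0.80, 0.90), "diamond"),
    ((0.85, 0.95), "diamond"),
    ((0.20, 0.10), "pebble"),
    ((0.10, 0.30), "pebble"),
    ((0.30, 0.20), "pebble"),
]

label = knn_classify(data_set, (0.80, 0.85))
```

Here "training" amounts to storing the labelled data set; other classifiers, such as neural networks or decision trees, instead tune internal parameters from the same kind of examples.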

A wide range of classifiers are available, each with its strengths and weaknesses. Classifier performance depends greatly on the characteristics of the data to be classified. There is no single classifier that works best on all given problems; this is also referred to as the "no free lunch" theorem. Various empirical tests have been performed to compare classifier performance and to find the characteristics of data that determine classifier performance. Determining a suitable classifier for a given problem is however still more an art than science.

The most widely used classifiers are the neural network, support vector machine, k-nearest neighbor algorithm, Gaussian mixture model, naive Bayes classifier, and decision tree. The performance of these classifiers has been compared over a wide range of classification tasks[29] in order to find data characteristics that determine classifier performance; to fully describe the relationship between data characteristics and classifier performance, however, remains an intriguing task.

Neural networks

Techniques and technologies in AI which have been directly derived from neuroscience include neural networks, Hebbian learning and the relatively new field of Hierarchical Temporal Memory which simulates the architecture of the neocortex.
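For illustration, the basic Hebbian learning rule is commonly summarized as strengthening a connection in proportion to the joint activity of the units on either side of it. The Python sketch below assumes a single linear unit and a hypothetical `learning_rate` parameter; it is a simplified textbook form, not a description of any specific system mentioned above.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Strengthen each weight in proportion to input activity times output activity."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]


# Only the connection from the active input is strengthened.
new_weights = hebbian_update([0.0, 0.0], [1.0, 0.0], output=1.0)
```

In this plain form the weights can only grow when activities are positive, which is why practical variants usually add some form of normalization or decay.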

Specialized languages

AI research has led to many advances in programming languages including the first list processing language by Allen Newell et al., Lisp dialects, Planner, Actors, the Scientific Community Metaphor, production systems, and rule-based languages.

GOFAI research is often done in programming languages such as Prolog or Lisp. Matlab and Lush (a numerical dialect of Lisp) include many specialist probabilistic libraries for Bayesian systems. AI research often emphasises rapid development and prototyping, using such interpreted languages to empower rapid command-line testing and experimentation. Real-time systems are however likely to require dedicated optimized software.

Notable examples include the languages LISP and Prolog, which were invented for AI research but are now used for non-AI tasks. Hacker culture first sprang from AI laboratories, in particular the MIT AI Lab, home at various times to such luminaries as John McCarthy, Marvin Minsky, Seymour Papert (who developed Logo there) and Terry Winograd (who abandoned AI after developing SHRDLU).

Research challenges

The 800 million-Euro EUREKA Prometheus Project on driverless cars (1987-1995) showed that fast autonomous vehicles, notably those of Ernst Dickmanns and his team, can drive long distances (over 100 miles) in traffic, automatically recognizing and tracking other cars through computer vision, passing slower cars in the left lane. But the challenge of safe door-to-door autonomous driving in arbitrary environments will require additional research.

The DARPA Grand Challenge was a race for a $2 million prize where cars had to drive themselves over a hundred miles of challenging desert terrain without any communication with humans, using GPS, computers and a sophisticated array of sensors. In 2005, the winning vehicles completed all 132 miles (212 km) of the course in just under seven hours. This was the first in a series of challenges aimed at a congressional mandate stating that by 2015 one-third of the operational ground combat vehicles of the US Armed Forces should be unmanned.[30] For November 2007, DARPA introduced the DARPA Urban Challenge, which will involve a sixty-mile course through an urban area. DARPA has secured prize money for the challenge of $2 million for first place, $1 million for second and $500,000 for third.

A popular challenge amongst AI research groups is the RoboCup and FIRA annual international robot soccer competitions. Hiroaki Kitano has formulated the International RoboCup Federation challenge: "In 2050 a team of fully autonomous humanoid robot soccer players shall win the soccer game, comply with the official rule of the FIFA, against the winner of the most recent World Cup."[31]

A lesser known challenge to promote AI research is the annual Arimaa challenge match. The challenge offers a $10,000 prize until the year 2020 to develop a program that plays the board game Arimaa and defeats a group of selected human opponents.

In the post-dot-com boom era, some search engine websites use a simple form of AI to provide answers to questions entered by the visitor. Questions such as "What is the tallest building?" can be entered into the search engine's input form, and a list of answers will be returned. AskWiki is an example of this sort of search engine.

Applications of artificial intelligence

Business

Banks use artificial intelligence systems to organize operations, invest in stocks, and manage properties. In August 2001, robots beat humans in a simulated financial trading competition (BBC News, 2001).[32] A medical clinic can use artificial intelligence systems to organize bed schedules, make a staff rotation, and provide medical information. Many practical applications are dependent on artificial neural networks, networks that pattern their organization in mimicry of a brain's neurons, which have been found to excel in pattern recognition. Financial institutions have long used such systems to detect charges or claims outside of the norm, flagging these for human investigation. Neural networks are also being widely deployed in homeland security, speech and text recognition, medical diagnosis (such as in Concept Processing technology in EMR software), data mining, and e-mail spam filtering.

Robots have become common in many industries. They are often given jobs that are considered dangerous to humans. Robots have also proven effective in very repetitive jobs, where a lapse in concentration may lead to mistakes or accidents, and in other jobs that humans may find degrading. General Motors uses around 16,000 robots for tasks such as painting, welding, and assembly. Japan is the world leader in using and producing robots. In 1995, 700,000 robots were in use worldwide, over 500,000 of which were from Japan.[33]

Toys and games

The 1990s saw some of the first attempts to mass-produce basic artificial intelligence aimed at domestic use, for education or leisure. This prospered greatly with the Digital Revolution and helped introduce people, especially children, to a life of dealing with various types of AI, specifically in the form of Tamagotchis and Giga Pets, the Internet (basic search engine interfaces are one simple form) and the first widely released robot, Furby. A mere year later an improved type of domestic robot was released in the form of Aibo, a robotic dog with intelligent features and autonomy.

List of applications

- Typical problems to which AI methods are applied


- Computer vision, Virtual reality and Image processing

- Diagnosis (artificial intelligence)

- Game theory and Strategic planning

- Game artificial intelligence and Computer game bot

- Natural language processing, Translation and Chatterbots

- Non-linear control and Robotics

- Other fields in which AI methods are implemented

- Artificial life

- Automated reasoning

- Automation

- Biologically-inspired computing

- Colloquis

- Concept mining

- Data mining

- Knowledge representation

- Semantic Web

- E-mail spam filtering


- Lists of researchers, projects & publications

See also

- Main list: List of basic artificial intelligence topics

- History of artificial intelligence

- AI effect

- AI winter

- Artificial intelligence systems integration

- Association for the Advancement of Artificial Intelligence

- Autonomous foraging

- Cognitive science

- Embodied agent

- Fifth generation computer

- Friendly artificial intelligence

- Generative systems

- German Research Centre for Artificial Intelligence

- Intelligent system

- Intelligent agent

- International Joint Conference on Artificial Intelligence

- Loebner prize


- Nanotechnology

- Neuromancer

- Nouvelle AI

- PEAS

- Personhood

- Predictive analytics

- Robot

- Singularitarianism

- Three Laws of Robotics

- Transhuman

Notes

- ^ Textbooks that define AI this way include Poole, Mackworth & Goebel 1998, p. 1 and Russell & Norvig 2003, preface (who prefer the term "rational agent") and write "The whole-agent view is now widely accepted in the field" (Russell & Norvig 2003, p. 55)

- ^ Although there is some controversy on this point (see Crevier 1993, p. 50), McCarthy states unequivocally "I came up with the term" in a c|net interview. (See Getting Machines to Think Like Us.)

- ^ See WHAT IS ARTIFICIAL INTELLIGENCE? by John McCarthy

- ^ a b Poole, Mackworth & Goebel 1998, p. 1

- ^ Law 1994

- ^ Russell & Norvig 2003, p. 17

- ^ Russell & Norvig 2003, pp. 5–16

- ^ See AI Topics: applications

- ^ Crevier 1993, pp. 47–49 and Russell & Norvig 2003, p. 17

- ^ Russell and Norvig write "it was astonishing whenever a computer did anything kind of smartish." Russell & Norvig 2003, p. 18

- ^ Crevier 1993, pp. 52–107, Moravec 1988, p. 9 and Russell & Norvig 2003, pp. 18–21. The programs described are Daniel Bobrow's STUDENT, Newell and Simon's Logic Theorist and Terry Winograd's SHRDLU.

- ^ Crevier 1993, pp. 64–65

- ^ Simon 1965, p. 96 quoted in Crevier 1993, p. 109

- ^ Minsky 1967, p. 2 quoted in Crevier 1993, p. 109

- ^ Crevier 1993, pp. 146–148, Buchanan 2005, p. 56, Moravec 1976

- ^ Russell & Norvig 2003, pp. 9, 21–22

- ^ Crevier 1993, pp. 113–114, Moravec 1988, p. 13, Lenat 1989 (Introduction) and Russell & Norvig 2003, p. 21

- ^ Moravec 1988, pp. 15–16 and see Moravec's paradox

- ^ McCarthy & Hayes 1969, Crevier 1993, pp. 117–119 and see the frame problem, qualification problem and ramification problem.

- ^ Crevier 1993, pp. 115–117, Russell & Norvig 2003, p. 22, NRC 1999 under "Shift to Applied Research Increases Investment." and also see Howe, J. "Artificial Intelligence at Edinburgh University : a Perspective"

- ^ Crevier 1993, pp. 161–162, 197–203 and Russell & Norvig 2003, p. 24

- ^ Crevier 1993, p. 203

- ^ Crevier 1993, pp. 209–210

- ^ Russell & Norvig 2003, p. 28, NRC 1999 under "Artificial Intelligence in the 90s"

- ^ Russell & Norvig 2003, pp. 25–26

- ^ Hammond, Kristian J. Case-based planning: viewing planning as a memory task. Academic Press Perspectives In Artificial Intelligence; Vol 1. Pages: 277. 1989. ISBN 0-12-322060-2

- ^ J.-S. R. Jang, C.-T. Sun, E. Mizutani, (foreword L. Zadeh) "Neuro-Fuzzy and Soft Computing," Prentice Hall, 1997

- ^ G.F. Luger, W.A. Stubblefield "Artificial Intelligence and the Design of Expert Systems"

- ^ http://www.patternrecognition.co.za/publications/cvdwalt_data_characteristics_classifiers.pdf

- ^ Congressional Mandate DARPA

- ^ The RoboCup2003 Presents: Humanoid Robots playing Soccer PRESS RELEASE: 2 June 2003

- ^ "Robots beat humans in trading battle". BBC News, Business. The British Broadcasting Corporation. August 8 2001. Retrieved 2006-11-02.

- ^ "Robot," Microsoft® Encarta® Online Encyclopedia 2006

References

- Brooks, Rodney (1990), "Elephants Don't Play Chess" (PDF), Robotics and Autonomous Systems, 6: 3–15, retrieved 30 August 2007

- Buchanan, Bruce G. (2005), "A (Very) Brief History of Artificial Intelligence" (PDF), AI Magazine, pp. 53–60, retrieved 30 August 2007

- Crevier, Daniel (1993). AI: The Tumultuous Search for Artificial Intelligence. New York, NY: BasicBooks. ISBN 0-465-02997-3.

- Lenat, Douglas (1989), Building Large Knowledge-Based Systems, Addison-Wesley

- Law, Diane (1994), Searle, Subsymbolic Functionalism and Synthetic Intelligence (PDF)

- Lighthill, Professor Sir James (1973), "Artificial Intelligence: A General Survey", Artificial Intelligence: a paper symposium, Science Research Council

- McCarthy, John; Hayes, P. J. (1969), "Some philosophical problems from the standpoint of artificial intelligence", Machine Intelligence, 4: 463–502

- Minsky, Marvin (1967), Computation: Finite and Infinite Machines, Englewood Cliffs, N.J.: Prentice-Hall

- Moravec, Hans (1976), The Role of Raw Power in Intelligence

- Moravec, Hans (1988), Mind Children, Harvard University Press

- NRC (1999), "Developments in Artificial Intelligence", Funding a Revolution: Government Support for Computing Research, National Academy Press, retrieved 30 August 2007

- Newell, Allen; Simon, H. A. (1963), "GPS: A Program that Simulates Human Thought", in Feigenbaum, E.A.; Feldman, J. (eds.), Computers and Thought, McGraw-Hill

- Poole, David; Mackworth, Alan; Goebel, Randy (1998), Computational Intelligence: A Logical Approach, Oxford University Press

- Russell, Stuart J.; Norvig, Peter (2003), Artificial Intelligence: A Modern Approach (2nd ed.), Upper Saddle River, New Jersey: Prentice Hall, ISBN 0-13-790395-2

- Samuel, Arthur L. (1959). "Some studies in machine learning using the game of checkers". IBM Journal of Research and Development. 3 (3): 210–219. ISSN 0018-8646. Retrieved 2007-08-20.

- Searle, John (1980), "Minds, Brains and Programs", Behavioral and Brain Sciences, 3 (3): 417–457

- Simon, H. A. (1965), The Shape of Automation for Men and Management, New York: Harper & Row

- Weizenbaum, Joseph (1976). Computer Power and Human Reason. San Francisco: W.H. Freeman & Company. ISBN 0716704641.

Further reading

- R. Sun & L. Bookman, (eds.), Computational Architectures Integrating Neural and Symbolic Processes. Kluwer Academic Publishers, Needham, MA. 1994.

External links



- AI with Neural Networks

- AI-Tools, the Open Source AI community homepage

- Artificial Intelligence Directory, a directory of Web resources related to artificial intelligence

- The Association for the Advancement of Artificial Intelligence

- Freeview Video 'Machines with Minds' by the Vega Science Trust and the BBC/OU

- Heuristics and artificial intelligence in finance and investment

- John McCarthy's frequently asked questions about AI

- Jonathan Edwards looks at AI (BBC audio)

- Generation5 - Large artificial intelligence portal with articles and news.

- Mindmakers.org, an online organization for people building large scale A.I. systems

- Ray Kurzweil's website dedicated to AI including prediction of future development in AI

- AI articles on the Accelerating Future blog

- AI Genealogy Project

- Artificial intelligence library and other useful links

- International Journal of Computational Intelligence

- International Journal of Intelligent Technology

- AI definitions at Labor Law Talk