BeeGFS: Difference between revisions


Tobias.goetz (talk | contribs) |

||

| Line 46: | Line 46: | ||

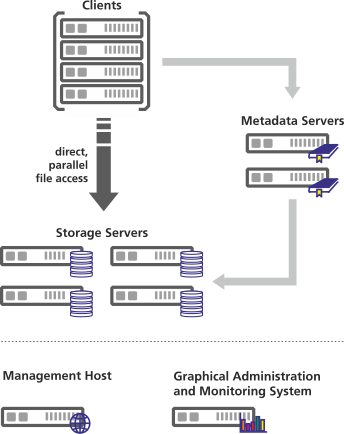

FhGFS runs on any Linux machine and consists of several components that include services for clients, metadata servers and storage servers. In addition, there is a service for the management host as well as one for a graphical administration and monitoring system. |

FhGFS runs on any Linux machine and consists of several components that include services for clients, metadata servers and storage servers. In addition, there is a service for the management host as well as one for a graphical administration and monitoring system. |

||

<gallery mode=nolines widths=" |

<gallery mode=nolines widths="344px" heights="433px"> |

||

File: |

File:BeeGFS_System_Architecture_Overview.svg|FhGFS System Overview |

||

</gallery> |

</gallery> |

||

| Line 64: | Line 64: | ||

FhGFS on-demand allows the creation of FhGFS on a set of nodes with one single command line. Possible use cases for the tool are manifold, a few include setting up a dedicated parallel file system for a cluster job, cloud computing or for fast and easy temporary setups for testing purposes. |

FhGFS on-demand allows the creation of FhGFS on a set of nodes with one single command line. Possible use cases for the tool are manifold, a few include setting up a dedicated parallel file system for a cluster job, cloud computing or for fast and easy temporary setups for testing purposes. |

||

==Benchmarks== |

==Benchmarks== |

||

Revision as of 16:25, 7 July 2014

| FhGFS Logo | |
|---|---|
| Developer(s) | Fraunhofer ITWM |
| Stable release | 2014.01-r1 (feature)[1] / March 10, 2014 |
| Operating system | Linux |
| Type | Distributed file system |
| Website | www.itwm.fraunhofer.de, fhgfs.com |

FhGFS is a parallel file system developed and optimized for high-performance computing. It uses a distributed metadata architecture for scalability and flexibility, and its primary design focus is data throughput.

FhGFS is short for Fraunhofer Parallel File System (or Fraunhofer FS) and is developed at the Fraunhofer Institute for Industrial Mathematics (ITWM) in Kaiserslautern, Germany. It can be downloaded from the project's website and used free of charge[3]. Fraunhofer also offers professional support for the software.

History & usage

FhGFS started in 2005 as an in-house development at Fraunhofer ITWM to replace the existing file system on the institute’s new compute cluster and to be used in a production environment.

In 2007, the first beta version of the software was announced during ISC07 in Dresden, Germany, and introduced to the public during SC07 in Reno, NV. One year later, the first stable major release became available.

Because FhGFS is free of charge, the number of active installations is unknown. However, around 100 customers worldwide use FhGFS with support from Fraunhofer. Among them are academic users such as universities and research facilities[4] as well as for-profit companies in finance and the oil & gas industry.

Notable installations include several TOP500 computers, such as the Loewe-CSC[5] cluster at the Goethe University of Frankfurt, Germany (#22 on installation), the Vienna Scientific Cluster[6] at the University of Vienna, Austria (#56 on installation), and the Abel[7] cluster at the University of Oslo, Norway (#96 on installation).

Key concepts & features

Fraunhofer ITWM developed FhGFS around three key concepts: scalability, flexibility and good usability.

FhGFS runs on any Linux machine and consists of several components that include services for clients, metadata servers and storage servers. In addition, there is a service for the management host as well as one for a graphical administration and monitoring system.

Figure: FhGFS System Overview

To run FhGFS, at least one instance each of the metadata server and the storage server is required. FhGFS also allows multiple instances of each service, so that the load from a large number of clients can be distributed. Because each component scales individually, the system as a whole is scalable.

File contents are distributed over several storage servers using striping, i.e. each file is split into chunks of a given size and these chunks are distributed over the existing storage servers. The size of these chunks can be defined by the file system administrator. The metadata is likewise distributed over several metadata servers on a directory level, with each server storing a part of the complete file system tree. This approach allows fast access to the data.
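The striping idea can be illustrated with a minimal sketch. It assumes plain round-robin placement of chunks, which is an illustrative choice and not necessarily how FhGFS selects storage servers; the server names, the 512 KiB chunk size, and the function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChunkLocation:
    chunk_index: int      # position of the chunk within the file
    target: str           # storage server assumed to hold the chunk
    offset_in_chunk: int  # byte offset inside that chunk


def locate(offset: int, chunk_size: int, targets: list[str]) -> ChunkLocation:
    """Map a byte offset in a file to a chunk and a storage server.

    Assumption: chunks are placed round-robin over `targets`.
    """
    if offset < 0 or chunk_size <= 0 or not targets:
        raise ValueError("need offset >= 0, chunk_size > 0 and at least one target")
    chunk_index, offset_in_chunk = divmod(offset, chunk_size)
    target = targets[chunk_index % len(targets)]
    return ChunkLocation(chunk_index, target, offset_in_chunk)


def chunks_per_target(file_size: int, chunk_size: int, targets: list[str]) -> dict[str, int]:
    """Count how many chunks of a file land on each storage server."""
    total_chunks = -(-file_size // chunk_size)  # ceiling division
    counts = {target: 0 for target in targets}
    for index in range(total_chunks):
        counts[targets[index % len(targets)]] += 1
    return counts


# Hypothetical setup: three storage servers and a 512 KiB chunk size.
TARGETS = ["storage01", "storage02", "storage03"]
CHUNK_SIZE = 512 * 1024

print(locate(4 * CHUNK_SIZE + 10, CHUNK_SIZE, TARGETS))
print(chunks_per_target(10 * CHUNK_SIZE, CHUNK_SIZE, TARGETS))
```

Under this assumption, a large sequential read touches all storage servers in turn, which is why aggregate throughput can grow with the number of servers. A smaller chunk size spreads small files over more servers, while a larger one reduces the number of requests per file.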

Clients as well as metadata or storage servers can be added to an existing system without any downtime. The client itself is a lightweight kernel module that does not require any kernel patches. The servers run on top of an existing local file system. There are no restrictions on the type of underlying file system as long as it supports POSIX; the recommendation is to use ext4 for the metadata servers and XFS for the storage servers. Both servers run in userspace.

There is also no strict requirement for dedicated hardware for individual services. The design allows a file system administrator to start the services in any combination on a given set of machines and to expand in the future. A common way for FhGFS users to take advantage of this is to combine metadata servers and storage servers on the same machines.

FhGFS supports various network interconnects with dynamic failover, such as Ethernet or InfiniBand, as well as many different Linux distributions and kernels (from 2.6.16 to the latest vanilla). The software has a simple setup and startup mechanism using init scripts. For users who prefer a graphical interface over the command line, a Java-based GUI (AdMon) is available. Besides managing and administering the FhGFS installation, the GUI monitors the FhGFS state and offers several monitoring options that help identify performance issues within the system.

FhGFS on-demand

FhGFS on-demand allows the creation of an FhGFS instance on a set of nodes with a single command line. The tool has many possible use cases, including setting up a dedicated parallel file system for a cluster job, cloud computing, and fast and easy temporary setups for testing purposes.

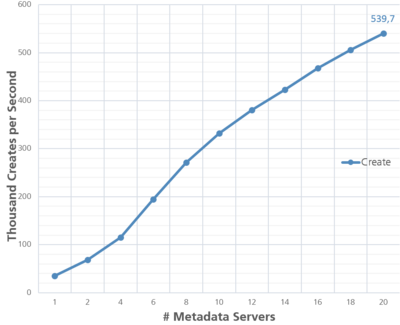

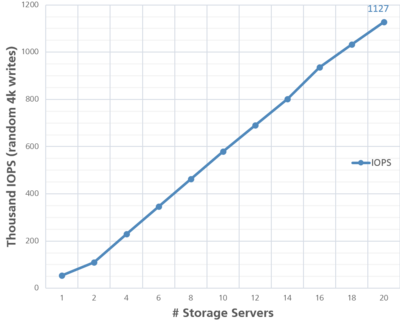

Benchmarks

The following benchmarks were performed on Fraunhofer Seislab, a test and experimental cluster at Fraunhofer ITWM with 25 nodes (20 compute + 5 storage) and three-tier storage: 1 TB RAM, 20 TB SSD, 120 TB HDD. Single-node performance on the local file system without FhGFS is 1,332 MB/s (write) and 1,317 MB/s (read).

The nodes are equipped with 2x Intel Xeon X5660, 48 GB RAM, 4x Intel 510 Series SSD (RAID 0), ext4 and QDR InfiniBand, and run Scientific Linux 6.3, kernel 2.6.32-279 and FhGFS 2012.10-beta1.
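As a rough point of reference for reading throughput results, the single-node figures above can be turned into an idealized upper bound. The sketch below assumes that each of the 5 storage nodes can deliver the quoted local single-node throughput and that neither the network nor the clients limit the transfer; both are simplifying assumptions, and the function names are hypothetical.

```python
def ideal_aggregate_throughput(per_server_mb_s: float, storage_servers: int) -> float:
    """Upper bound on throughput if every storage server scales perfectly."""
    if per_server_mb_s < 0 or storage_servers <= 0:
        raise ValueError("need per_server_mb_s >= 0 and storage_servers > 0")
    return per_server_mb_s * storage_servers


def scaling_efficiency(measured_mb_s: float, per_server_mb_s: float, storage_servers: int) -> float:
    """Fraction of the ideal bound that a measured result reaches."""
    return measured_mb_s / ideal_aggregate_throughput(per_server_mb_s, storage_servers)


# Single-node local file system figures quoted above, 5 storage nodes.
write_bound = ideal_aggregate_throughput(1332, 5)  # 5 * 1332 = 6660 MB/s
read_bound = ideal_aggregate_throughput(1317, 5)   # 5 * 1317 = 6585 MB/s
print(f"ideal write bound: {write_bound} MB/s, ideal read bound: {read_bound} MB/s")
```

A measured aggregate value can be passed to `scaling_efficiency` to see how close a run comes to this bound; the closer the ratio is to 1, the less overhead the parallel file system adds on top of the local disks.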

Figure: Read/Write Throughput

Figure: File Creates

Figure: IOPS

Future

To move FhGFS out of research and into a business environment, Fraunhofer started a spin-off in January 2014. The new company, called ThinkParQ[8], will take over sales, customer service, and professional support. As part of this process, FhGFS will be renamed BeeGFS®.[9]

While ThinkParQ will take over customer support and sales, Fraunhofer ITWM will continue to drive the further development of the software. The focus will stay on improvements and new features for HPC systems and applications.

The new company has its main office in Kaiserslautern, Germany.

FhGFS and exascale

Fraunhofer ITWM is participating in the Dynamic-Exascale Entry Platform – Extended Reach (DEEP-ER) project of the European Union[10], which addresses the growing gap between compute speed and I/O bandwidth as well as system resiliency for large-scale systems.

Some of the aspects that FhGFS developers are working on within the scope of this project are:

- support for tiered storage,

- POSIX interface extensions,

- fault tolerance / high availability (HA), and

- improved monitoring and diagnostic tools.

The plan is to keep the POSIX interface for backward compatibility, but also to give applications more control over how the file system handles aspects such as data placement and coherency through API extensions.

References

1. "Latest stable FhGFS release". March 10, 2014. Retrieved March 10, 2014.

2. "HPC-Wire about first official FraunhoferFS release". November 9, 2007. Retrieved March 1, 2011.

3. "FhGFS End-User License Agreement (EULA)". Fraunhofer ITWM. February 22, 2012. Retrieved March 15, 2014.

4. "FraunhoferFS High-Performance Parallel File System". ClusterVision eNews. November 2012. Retrieved March 17, 2014.

5. "... And Fraunhofer". StorageNewsletter.com. June 18, 2010. Retrieved March 17, 2014.

6. "VSC-2". Top500 List. June 20, 2011. Retrieved March 17, 2014.

7. "Abel". Top500 List. June 18, 2012. Retrieved March 17, 2014.

8. "ThinkParQ website". Retrieved March 17, 2014.

9. Rich Brueckner (March 13, 2014). "Fraunhofer to Spin Off Renamed BeeGFS File System". insideHPC. Retrieved March 17, 2014.

10. "DEEP-ER Project Website". Retrieved March 17, 2014.