Synthesizer: Difference between revisions



Revision as of 23:54, 12 March 2015

A sound synthesizer (often abbreviated as "synthesizer" or "synth", also spelled "synthesiser") is an electronic musical instrument that generates electric signals converted to sound through loudspeakers or headphones. Synthesizers may either imitate other instruments or generate new timbres. They are often played with a keyboard, but they can be controlled via a variety of other input devices, including music sequencers, instrument controllers, fingerboards, guitar synthesizers, wind controllers, and electronic drums. Synthesizers without built-in controllers are often called sound modules, and are controlled via MIDI or CV/Gate.

Synthesizers use various methods to generate signals. Among the most popular waveform synthesis techniques are subtractive synthesis, additive synthesis, wavetable synthesis, frequency modulation synthesis, phase distortion synthesis, physical modeling synthesis and sample-based synthesis. Other less common synthesis types (see Types of synthesis) include subharmonic synthesis, a form of additive synthesis via subharmonics (used by the mixture trautonium), and granular synthesis, sample-based synthesis based on grains of sound, generally resulting in soundscapes or clouds.

History



The beginnings of the synthesizer are difficult to trace, as there is confusion between sound synthesizers and arbitrary electric/electronic musical instruments.[1][2]

Early electric instruments

One of the earliest electric musical instruments, the musical telegraph, was invented in 1876 by American electrical engineer Elisha Gray. He accidentally discovered that a self-vibrating electromagnetic circuit could generate sound, and invented a basic single-note oscillator. This musical telegraph used steel reeds whose oscillations were created by electromagnets and transmitted over a telegraph line. Gray also built a simple loudspeaker device into later models, consisting of a vibrating diaphragm in a magnetic field, to make the oscillator audible.[3][4]

This instrument was a remote electromechanical musical instrument that used telegraphy and electric buzzers to generate sound of a fixed timbre. Though it lacked an arbitrary sound-synthesis function, some have erroneously called it the first synthesizer.[1][2]

Early additive synthesizer – Tonewheel organs

In 1897, Thaddeus Cahill invented the Telharmonium (or Dynamophone), which used dynamos (early electric generators)[5] and was capable of additive synthesis, like the Hammond organ invented in 1934. However, Cahill's business was unsuccessful for various reasons (the size of the system, the rapid evolution of electronics, crosstalk issues on the telephone line, etc.), and similar but more compact instruments were subsequently developed, such as electronic and tonewheel organs.

Emergence of electronics and early electronic instruments

In 1906, American engineer Lee De Forest ushered in the "electronics age".[6] He invented the first amplifying vacuum tube, called the Audion tube. This led to new entertainment technologies, including radio and sound films. These new technologies also influenced the music industry, and resulted in various early electronic musical instruments that used vacuum tubes, including:

- Audion piano by Lee De Forest in 1915[7]

- Theremin by Léon Theremin in 1920[8]

- Ondes Martenot by Maurice Martenot in 1928

- Trautonium by Friedrich Trautwein in 1929

Most of these early instruments used "heterodyne circuits" to produce audio frequencies, and were limited in their synthesis capabilities. The Ondes Martenot and Trautonium were developed continuously for several decades, eventually acquiring qualities similar to those of later synthesizers.

Graphical sound

In the 1920s, Arseny Avraamov developed various systems of graphic sonic art,[9] and similar graphical sound systems were developed around the world, such as those described in Holzer 2010.[10] In 1938, Soviet engineer Yevgeny Murzin designed a compositional tool called ANS, one of the earliest real-time additive synthesizers using optoelectronics. Although his idea of reconstructing a sound from its visible image was apparently simple, the instrument was not realized until 20 years later, in 1958, as Murzin was "an engineer who worked in areas unrelated to music" (Kreichi 1997).[11]

Subtractive synthesis & polyphonic synthesizer

In the 1930s and 1940s, the basic elements required for the modern analog subtractive synthesizers — audio oscillators, audio filters, envelope controllers, and various effects units — had already appeared and were utilized in several electronic instruments.

The earliest polyphonic synthesizers were developed in Germany and the United States. The Warbo Formant Organ, developed by Harald Bode in Germany in 1937, was a four-voice key-assignment keyboard with two formant filters and a dynamic envelope controller;[12][13] it was possibly manufactured commercially by a factory in Dachau, according to 120 Years of Electronic Music.[14][verification needed] The Hammond Novachord, released in 1939, was an electronic keyboard that used twelve sets of top-octave oscillators with octave dividers to generate sound, with vibrato, a resonator filter bank and a dynamic envelope controller. During the three years that Hammond manufactured this model, 1,069 units were shipped, but production was discontinued at the start of World War II.[15][16] Both instruments were forerunners of the later electronic organs and polyphonic synthesizers.

Monophonic electronic keyboards


Georges Jenny built his first ondioline in France in 1941.

Other innovations

In the late 1940s, Canadian inventor and composer Hugh Le Caine invented the Electronic Sackbut, which provided the earliest real-time control of three aspects of sound (volume, pitch and timbre), corresponding to today's touch-sensitive keyboard, pitch and modulation controllers. The controllers were initially implemented as a multidimensional pressure keyboard in 1945, then changed to a group of dedicated controllers operated by the left hand in 1948.[17]

In Japan, as early as 1935, Yamaha released the Magna organ,[18] a multi-timbral keyboard instrument based on electrically blown free reeds with pickups.[19] It may have been similar to the electrostatic reed organs developed by Frederick Albert Hoschke in 1934 and then manufactured by Everett and Wurlitzer until 1961.

However, at least one Japanese musician was not satisfied with the situation at that time. In 1949, composer Minao Shibata discussed the concept of "a musical instrument with very high performance" that can "synthesize any kind of sound waves" and is "...operated very easily," predicting that with such an instrument, "...the music scene will be changed drastically."[neutrality is disputed][20][21]

Electronic music studios as sound synthesizers

After World War II, electronic music including electroacoustic music and musique concrète was created by contemporary composers, and numerous electronic music studios were established around the world, especially in Bonn, Cologne, Paris and Milan. These studios were typically filled with electronic equipment including oscillators, filters, tape recorders, audio consoles etc., and the whole studio functioned as a "sound synthesizer".

Origin of the term "sound synthesizer"

In 1951–1952, RCA produced a machine called the Electronic Music Synthesizer; however, it was more accurately a composition machine, because it did not produce sounds in real time.[22] RCA then developed the first programmable sound synthesizer, the RCA Mark II Sound Synthesizer, installing it at the Columbia-Princeton Electronic Music Center in 1957.[23] Prominent composers including Vladimir Ussachevsky, Otto Luening, Milton Babbitt, Halim El-Dabh, Bülent Arel, Charles Wuorinen, and Mario Davidovsky used the RCA Synthesizer extensively in various compositions.[24]

From modular synthesizer to popular music

In 1959–1960, Harald Bode developed a modular synthesizer and sound processor,[25][26] and in 1961, he wrote a paper exploring the concept of a self-contained portable modular synthesizer using newly emerging transistor technology.[27] He also served as AES session chairman on music and electronics for the fall conventions in 1962 and 1964.[28] His ideas were adopted by Donald Buchla and Robert Moog in the United States, and Paul Ketoff in Italy,[29][30][31] at about the same time.[32] Among them, Moog is known as the first synthesizer designer to popularize the voltage control technique in analog electronic musical instruments.[32]

Robert Moog built his first prototype between 1963 and 1964, and was then commissioned by the Alwin Nikolais Dance Theater of New York,[33][34] while Donald Buchla was commissioned by Morton Subotnick.[35][36] In the late 1960s and 1970s, the development of miniaturized solid-state components allowed synthesizers to become self-contained, portable instruments, as proposed by Harald Bode in 1961. By the early 1980s, companies were selling compact, modestly priced synthesizers to the public. This, along with the development of Musical Instrument Digital Interface (MIDI), made it easier to integrate and synchronize synthesizers and other electronic instruments for use in musical composition. In the 1990s, synthesizer emulations began to appear in computer software, known as software synthesizers. Later, VST and other plugins were able to emulate classic hardware synthesizers to a moderate degree.

The synthesizer had a considerable effect on 20th-century music.[37] Micky Dolenz of The Monkees bought one of the first Moog synthesizers. The band was the first to release an album featuring a Moog, Pisces, Aquarius, Capricorn & Jones Ltd. (1967),[38] which reached #1 in the charts. The Perrey and Kingsley album The In Sound From Way Out!, which used the Moog and tape loops, was released in 1966. A few months later, both the Rolling Stones' "2000 Light Years from Home" and the title track of the Doors' 1967 album Strange Days also featured a Moog, played by Brian Jones and Paul Beaver respectively. In the same year, Bruce Haack built a homemade synthesizer, which he demonstrated on Mister Rogers' Neighborhood. The synthesizer included a sampler that recorded, stored, played and looped sounds controlled by switches, light sensors and human skin contact. Wendy Carlos's Switched-On Bach (1968), recorded using Moog synthesizers, also influenced numerous musicians of that era and is one of the most popular recordings of classical music ever made,[39] alongside the records of Isao Tomita (particularly Snowflakes are Dancing in 1974), who in the early 1970s utilized synthesizers to create new artificial sounds (rather than simply mimicking real instruments)[40] and made significant advances in analog synthesizer programming.[41]

The sound of the Moog reached the mass market with Simon and Garfunkel's Bookends in 1968 and The Beatles' Abbey Road the following year; hundreds of other popular recordings subsequently used synthesizers, most famously the portable Minimoog. Electronic music albums by Beaver and Krause, Tonto's Expanding Head Band, The United States of America, and White Noise reached a sizable cult audience, and progressive rock musicians such as Richard Wright of Pink Floyd and Rick Wakeman of Yes were soon using the new portable synthesizers extensively. Stevie Wonder and Herbie Hancock also contributed strongly to the popularisation of synthesizers in Black American music.[42][43] Other early users included Emerson, Lake & Palmer's Keith Emerson, Todd Rundgren, Pete Townshend, and The Crazy World of Arthur Brown's Vincent Crane. In Europe, the first number-one single to feature a Moog prominently was Chicory Tip's 1972 hit Son of My Father.[44]

Polyphonic keyboards and the digital revolution

In 1978, the success of the Prophet-5, a polyphonic and microprocessor-controlled keyboard synthesizer, strongly aided the shift of synthesizers towards their familiar modern shape, away from large modular units and towards smaller keyboard instruments.[45] This form factor helped accelerate the integration of synthesizers into popular music, a shift that had been lent powerful momentum by the Minimoog, and also later the ARP Odyssey.[46] Earlier polyphonic electronic instruments of the 1970s, rooted in string synthesizers before advancing to multi-synthesizers incorporating monosynths and more, gradually fell out of fashion in the wake of these newer, note-assigned polyphonic keyboard synthesizers.[47] These polyphonic synthesizers were mainly manufactured in the United States and Japan from the mid-1970s to the early 1980s, and included the Yamaha CS-80 (1976), Oberheim's Polyphonic and OB-X (1975 and 1979), Sequential Circuits' Prophet-5 (1978), and Roland's Jupiter-4 and Jupiter-8 (1978 and 1981).

By the end of the 1970s, digital synthesizers and digital samplers had arrived on the market around the world (and are still sold today),[note 1] as the result of preceding research and development.[note 1] Compared with analog synthesizer sounds, the digital sounds produced by these new instruments tended to have a number of different characteristics: clear attack and sound outlines, carrying sounds, rich overtones with inharmonic contents, and complex motion of sound textures, amongst others. While these new instruments were expensive, these characteristics meant musicians were quick to adopt them, especially in the United Kingdom[48] and the United States. This encouraged a trend towards producing music using digital sounds,[note 2] and laid the foundations for the development of the inexpensive digital instruments popular in the next decade (see below). Relatively successful instruments, each selling more than several hundred units per series, included the NED Synclavier (1977), Fairlight CMI (1979), E-mu Emulator (1981), and PPG Wave (1981).[note 1][48][49][50][51]

In 1983, however, Yamaha's revolutionary DX7 digital synthesizer[52][53] swept through popular music, leading to the adoption and development of digital synthesizers in many varying forms during the 1980s, and the rapid decline of analog synthesizer technology. In 1987, Roland released the D-50 synthesizer, which combined existing sample-based synthesis[note 3] with onboard digital effects,[54] while Korg's even more popular M1 (1988) heralded the era of the workstation synthesizer, based on ROM sample sounds for composing and sequencing whole songs, rather than traditional sound synthesis per se.[55]

Throughout the 1990s, the popularity of electronic dance music employing analog sounds, the appearance of digital analog modelling synthesizers to recreate these sounds, and the development of the Eurorack modular synthesiser system, initially introduced with the Doepfer A-100 and since adopted by other manufacturers, all contributed to the resurgence of interest in analog technology. The turn of the century also saw improvements in technology that led to the popularity of digital software synthesizers.[56] In the 2010s, new analog synthesizers, both in keyboard and modular form, have been released alongside current digital hardware instruments.[57]

Impact on popular music


In the 1970s, Jean-Michel Jarre, Larry Fast, and Vangelis released successful synthesizer-led instrumental albums. Over time, this helped influence the emergence of synthpop, a subgenre of new wave, in the late 1970s. The work of German electronic bands such as Kraftwerk and Tangerine Dream, British acts Gary Numan and David Bowie and the Japanese Yellow Magic Orchestra was also influential in the development of the genre.[58] Gary Numan's 1979 hits "Are 'Friends' Electric?" and "Cars" made heavy use of synthesizers.[59][60] OMD's "Enola Gay" (1980) used distinctive electronic percussion and a synthesized melody. Soft Cell used a synthesized melody on their 1981 hit "Tainted Love".[58] Nick Rhodes, keyboardist of Duran Duran, used various synthesizers including the Roland Jupiter-4 and Jupiter-8.[61]

Chart hits include Depeche Mode's "Just Can't Get Enough" (1981),[58] The Human League's "Don't You Want Me"[62] and Giorgio Moroder's "Flashdance... What a Feeling" (1983) for Irene Cara. Other notable synthpop groups included New Order, Visage, Japan, Ultravox,[58] Spandau Ballet, Culture Club, Eurythmics, Yazoo, Thompson Twins, A Flock of Seagulls, Heaven 17, Erasure, Soft Cell, Blancmange, Pet Shop Boys, Bronski Beat, Kajagoogoo, ABC, Naked Eyes, Devo, and the early work of Tears for Fears and Talk Talk. Giorgio Moroder, Howard Jones, Kitaro, Stevie Wonder, Peter Gabriel, Thomas Dolby, Kate Bush, Dónal Lunny and Frank Zappa also all made use of synthesizers.

The synthesizer became one of the most important instruments in the music industry.[58]

Types of synthesis

Additive synthesis builds sounds by adding together waveforms (which are usually harmonically related). Early analog examples of additive synthesizers are the Telharmonium and Hammond organ. To implement real-time additive synthesis, wavetable synthesis is useful for reducing required hardware/processing power,[63] and is commonly used in low-end MIDI instruments (such as educational keyboards) and low-end sound cards.
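As a rough illustration of adding harmonically related waveforms, the following Python sketch sums sine partials at integer multiples of a fundamental. The function name `additive_tone`, the 44.1 kHz sample rate and the amplitude list are assumptions chosen for the example, not taken from any particular instrument.

```python
import math

def additive_tone(fundamental_hz, harmonic_amps, n_samples, sample_rate=44100):
    """Sum sine-wave partials at integer multiples of the fundamental.

    harmonic_amps[k] is the amplitude of harmonic k + 1 (illustrative layout).
    """
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(
            amp * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
            for k, amp in enumerate(harmonic_amps)
        )
        samples.append(value)
    return samples

# Four harmonics with amplitudes 1, 1/2, 1/3, 1/4: a rough sawtooth-like spectrum.
amps = [1.0, 0.5, 1 / 3, 0.25]
tone = additive_tone(220.0, amps, n_samples=441)
```

Changing the entries of `amps` changes the timbre without changing the pitch, which is the basic idea behind drawbar-style additive control.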

Subtractive synthesis is based on filtering harmonically rich waveforms. Due to its simplicity, it is the basis of early synthesizers such as the Moog synthesizer. Subtractive synthesizers use a simple acoustic model that assumes an instrument can be approximated by a simple signal generator (producing sawtooth waves, square waves, etc.) followed by a filter. The combination of simple modulation routings (such as pulse width modulation and oscillator sync), along with the physically unrealistic lowpass filters, is responsible for the "classic synthesizer" sound commonly associated with "analog synthesis"—a term which is often mistakenly used when referring to software synthesizers using subtractive synthesis.
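The "signal generator followed by a filter" model can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the sawtooth is naive (not band-limited), and the filter is a simple first-order low-pass rather than the resonant multi-pole filters found in classic hardware. The function names are hypothetical.

```python
import math

def sawtooth(freq_hz, n_samples, sample_rate=44100):
    """Naive (non-band-limited) sawtooth in the range [-1, 1)."""
    return [2.0 * ((freq_hz * n / sample_rate) % 1.0) - 1.0
            for n in range(n_samples)]

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """First-order low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

# A harmonically rich source, then "subtract" high frequencies with the filter.
raw = sawtooth(110.0, 4410)
filtered = one_pole_lowpass(raw, cutoff_hz=500.0)
```

Lowering `cutoff_hz` removes more of the upper harmonics and rounds off the sharp edge of each sawtooth cycle.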

FM synthesis (frequency modulation synthesis) is a process that usually involves the use of at least two signal generators (sine-wave oscillators, commonly referred to as "operators" in FM-only synthesizers) to create and modify a voice. Often, this is done through the analog or digital generation of a signal that modulates the tonal and amplitude characteristics of a base carrier signal. FM synthesis was pioneered by John Chowning, who patented the idea and sold it to Yamaha. Unlike the exponential relationship between voltage-in-to-frequency-out and multiple waveforms in classical 1-volt-per-octave synthesizer oscillators, Chowning-style FM synthesis uses a linear voltage-in-to-frequency-out relationship and sine-wave oscillators. The resulting complex waveform may have many component frequencies, and there is no requirement that they all bear a harmonic relationship. Sophisticated FM synths such as the Yamaha DX7 series can have 6 operators per voice; some synths with FM can also use filters and variable amplifier types to alter the signal's characteristics into a sonic voice that either roughly imitates acoustic instruments or creates sounds that are unique. FM synthesis is especially valuable for metallic or clangorous noises such as bells, cymbals, or other percussion.
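A minimal two-operator sketch in Python may help make the carrier/modulator relationship concrete. It uses the common textbook form sin(2πf_c t + I·sin(2πf_m t)), in which the modulator is applied to the carrier's phase; the function name `fm_tone` and the parameter values are assumptions for illustration, and real FM instruments add envelopes and more operators.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, n_samples, sample_rate=44100):
    """Two-operator FM: a sine modulator varies the phase of a sine carrier.

    `index` (the modulation index) controls how strong the sidebands are.
    """
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return out

# A non-integer carrier-to-modulator ratio gives inharmonic, bell-like partials.
bell = fm_tone(carrier_hz=200.0, modulator_hz=280.0, index=5.0, n_samples=4410)
```

With `index=0.0` the output is a plain sine wave at the carrier frequency; raising the index spreads energy into sidebands spaced at multiples of the modulator frequency.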

Phase distortion synthesis is a method implemented on Casio CZ synthesizers. It is quite similar to FM synthesis but avoids infringing on the Chowning FM patent. It can be categorized as both modulation synthesis (along with FM synthesis), and distortion synthesis along with waveshaping synthesis.

Granular synthesis is a type of synthesis based on manipulating very small sample slices.

Physical modeling synthesis is the synthesis of sound by using a set of equations and algorithms to simulate a real instrument, or some other physical source of sound. This involves modeling components of musical objects and creating systems that define action, filters, envelopes and other parameters over time. Various models can also be combined, e.g. the model of a violin with characteristics of a pedal steel guitar and the action of a piano hammer. When an initial set of parameters is run through the physical simulation, the simulated sound is generated. Although physical modeling was not a new concept in acoustics and synthesis, it was not until the development of the Karplus-Strong algorithm and the increase in DSP power in the late 1980s that commercial implementations became feasible. The quality and speed of physical modeling on computers improves with higher processing power.

Sample-based synthesis involves recording a real instrument as a digitized waveform, and then playing back its recordings at different speeds (pitches) to produce different tones. This technique is referred to as "sampling". Most samplers designate a part of the sample for each component of the ADSR envelope, repeating that section while changing the volume according to the envelope. This allows samplers to vary the envelope while playing the same note. See also Wavetable synthesis, Vector synthesis.
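The plucked-string idea usually associated with the Karplus-Strong algorithm can be sketched briefly in Python: a short delay line is filled with noise and then repeatedly averaged, so that the sound decays and loses high frequencies much as a string does. This is a simplified sketch, not a commercial implementation; the `decay` factor, the seeded random generator and the function name are assumptions for the example.

```python
import random

def karplus_strong(freq_hz, n_samples, sample_rate=44100, decay=0.996, seed=0):
    """Plucked-string sketch: a noise burst circulating in an averaged delay line."""
    rng = random.Random(seed)                  # seeded so the result is repeatable
    period = int(sample_rate / freq_hz)        # delay-line length sets the pitch
    buf = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(n_samples):
        i = n % period
        out.append(buf[i])
        nxt = buf[(i + 1) % period]
        # Averaging adjacent samples acts as a gentle low-pass filter;
        # `decay` slightly below 1 makes the tone die away.
        buf[i] = decay * 0.5 * (buf[i] + nxt)
    return out

pluck = karplus_strong(441.0, 44100)
```

The first pass through the buffer is pure noise (the "pluck"); each later pass is smoother and quieter than the one before.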
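Playing a recording back at a different speed can be sketched in Python as reading through the stored samples with a fractional step and interpolating between neighbours. The function names and the use of linear interpolation are assumptions for illustration; real samplers typically use better interpolation and add looping.

```python
def speed_for_semitones(semitones):
    """Playback-speed ratio for a pitch shift in equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)

def play_at_speed(sample, speed):
    """Resample `sample` by stepping through it `speed` samples at a time,
    using linear interpolation between neighbouring values."""
    out = []
    pos = 0.0
    while pos < len(sample) - 1:
        i = int(pos)
        frac = pos - i
        out.append((1.0 - frac) * sample[i] + frac * sample[i + 1])
        pos += speed
    return out

recording = [0.0, 1.0, 2.0, 3.0, 4.0]        # stand-in for a digitized waveform
octave_up = play_at_speed(recording, speed_for_semitones(12))    # twice as fast
octave_down = play_at_speed(recording, speed_for_semitones(-12)) # half speed
```

Note that raising the pitch this way also shortens the sound, which is one reason samplers loop part of the recording during the sustain phase.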

Analysis/resynthesis is a form of synthesis that uses a series of bandpass filters or Fourier transforms to analyze the harmonic content of a sound. The results are then used to resynthesize the sound using a band of oscillators. The vocoder, linear predictive coding, and some forms of speech synthesis are based on analysis/resynthesis.

Essynth is a mathematical model for interactive sound synthesis based on evolutionary computation and uses genetic operators and fitness functions to create sound.

Imitative synthesis

Sound synthesis can be used to mimic acoustic sound sources. Generally, a sound that does not change over time includes a fundamental partial or harmonic, and any number of partials. Synthesis may attempt to mimic the amplitude and pitch of the partials in an acoustic sound source.

When natural sounds are analyzed in the frequency domain (as on a spectrum analyzer), the spectra of their sounds exhibit amplitude spikes at each of the fundamental tone's harmonics corresponding to resonant properties of the instruments (spectral peaks that are also referred to as formants). Some harmonics may have higher amplitudes than others. The specific set of harmonic-vs-amplitude pairs is known as a sound's harmonic content. A synthesized sound requires accurate reproduction of the original sound in both the frequency domain and the time domain. A sound does not necessarily have the same harmonic content throughout the duration of the sound. Typically, high-frequency harmonics die out more quickly than the lower harmonics.

In most conventional synthesizers, for purposes of re-synthesis, recordings of real instruments are composed of several components representing the acoustic responses of different parts of the instrument, the sounds produced by the instrument during different parts of a performance, or the behavior of the instrument under different playing conditions (pitch, intensity of playing, fingering, etc.).

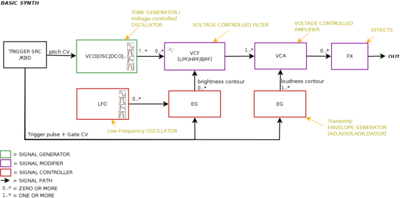

Components

Synthesizers generate sound through various analog and digital techniques. Early synthesizers were based on analog hardware, but many modern synthesizers use a combination of DSP software and hardware or else are purely software-based (see softsynth). Digital synthesizers often emulate classic analog designs. Sound is controllable by the operator by means of circuits or virtual stages that may include:

- Electronic oscillators – create raw sounds with a timbre that depends upon the waveform generated. Voltage-controlled oscillators (VCOs) and digital oscillators may be used. Harmonic additive synthesis models sounds directly from pure sine waves, somewhat in the manner of an organ, while frequency modulation and phase distortion synthesis use one oscillator to modulate another. Subtractive synthesis depends upon filtering a harmonically rich oscillator waveform. Sample-based and granular synthesis use one or more digitally recorded sounds in place of an oscillator.

- Voltage-controlled filter (VCF) – "shapes" the sound generated by the oscillators in the frequency domain, often under the control of an envelope or LFO. These are essential to subtractive synthesis.

- Voltage-controlled amplifier (VCA) – After the signal generated by one (or a mix of more) VCOs has been modified by filters and LFOs, and its waveform has been shaped (contoured) by an ADSR envelope generator, it then passes on to one or more voltage-controlled amplifiers (VCAs). A VCA is a preamp that boosts (amplifies) the electronic signal before passing it on to an external or built-in power amplifier, as well as a means to control its amplitude (volume) using an attenuator. The gain of the VCA is affected by a control voltage (CV), coming from an envelope generator, an LFO, the keyboard or some other source.[64]

- ADSR envelopes – provide envelope modulation to "shape" the volume or harmonic content of the produced note in the time domain, with the principal parameters being attack, decay, sustain and release. These are used in most forms of synthesis. ADSR control is provided by envelope generators.

- Low frequency oscillator (LFO) – an oscillator of adjustable frequency that can be used to modulate the sound rhythmically, for example to create tremolo or vibrato or to control a filter's operating frequency. LFOs are used in most forms of synthesis.

- Other sound processing effects such as ring modulators may be encountered.

Filter

Electronic filters are particularly important in subtractive synthesis, being designed to pass some frequency regions through unattenuated while significantly attenuating ("subtracting") others. The low-pass filter is most frequently used, but band-pass filters, band-reject filters and high-pass filters are also sometimes available.

The filter may be controlled with a second ADSR envelope. An "envelope modulation" ("env mod") parameter on many synthesizers with filter envelopes determines how much the envelope affects the filter. If turned all the way down, the filter produces a flat sound with no envelope. When turned up, the envelope becomes more noticeable, expanding the minimum and maximum range of the filter.

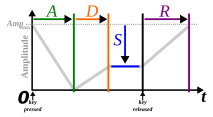

ADSR envelope


When an acoustic musical instrument produces sound, the loudness and spectral content of the sound change over time in ways that vary from instrument to instrument. The "attack" and "decay" of a sound have a great effect on the instrument's sonic character.[65] Sound synthesis techniques often employ an envelope generator that controls a sound's parameters at any point in its duration. Most often this is an "ADSR" (Attack Decay Sustain Release) envelope, which may be applied to overall amplitude control, filter frequency, etc. The envelope may be a discrete circuit or module, or implemented in software. The contour of an ADSR envelope is specified using four parameters:

- Attack time is the time taken for initial run-up of level from nil to peak, beginning when the key is first pressed.

- Decay time is the time taken for the subsequent run down from the attack level to the designated sustain level.

- Sustain level is the level during the main sequence of the sound's duration, until the key is released.

- Release time is the time taken for the level to decay from the sustain level to zero after the key is released.
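The four parameters above can be turned into a level-versus-time function. The Python sketch below uses straight-line segments and assumes that, if the key is released before the sustain phase is reached, the release starts from whatever level the envelope had at that moment. The function name `adsr_level` and its argument names are hypothetical, and real envelope generators often use exponential rather than linear segments.

```python
def adsr_level(t, attack, decay, sustain, release, key_off):
    """Envelope level (0 to 1) at time t seconds; the key is pressed at t = 0
    and released at t = key_off. `sustain` is a level, the others are times."""
    def held_level(time):
        if time < attack:                       # rise from nil to peak
            return time / attack
        if time < attack + decay:               # fall from peak to sustain level
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain                          # hold while the key is down

    if t < 0:
        return 0.0
    if t < key_off:
        return held_level(t)
    if release <= 0:
        return 0.0
    start = held_level(key_off)                 # level at the moment of release
    elapsed = t - key_off
    return max(0.0, start * (1.0 - elapsed / release))

# Attack 0.1 s, decay 0.2 s, sustain level 0.5, release 0.4 s, key held for 1 s.
levels = [adsr_level(t, 0.1, 0.2, 0.5, 0.4, key_off=1.0)
          for t in (0.0, 0.05, 0.1, 0.5, 1.2, 2.0)]
```

Multiplying each audio sample by the level at its time applies the envelope to amplitude; feeding the same level to a filter cutoff instead gives a filter envelope.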

An early implementation of ADSR can be found on the Hammond Novachord of 1939 (which predates the first Moog synthesizer by about 25 years). A seven-position rotary knob set preset ADS parameters for all 72 notes; a pedal controlled release time.[15] The notion of ADSR was specified by Vladimir Ussachevsky (then head of the Columbia-Princeton Electronic Music Center) in 1965 while suggesting improvements for Bob Moog's pioneering work on synthesizers. The parameters were originally notated (T1, T2, Esus, T3); ARP later simplified these to the current form (attack time, decay time, sustain level, release time).[66]

Some electronic musical instruments allow the ADSR envelope to be inverted, which results in opposite behavior compared to the normal ADSR envelope. During the attack phase, the modulated sound parameter fades from the maximum amplitude to zero then, during the decay phase, rises to the value specified by the sustain parameter. After the key has been released the sound parameter rises from sustain amplitude back to maximum amplitude.

A common variation of the ADSR on some synthesizers, such as the Korg MS-20, was ADSHR (attack, decay, sustain, hold, release). By adding a "hold" parameter, the system allowed notes to be held at the sustain level for a fixed length of time before decaying. The General Instrument AY-3-8912 sound chip included a hold time parameter only; the sustain level was not programmable. Another common variation in the same vein is the AHDSR (attack, hold, decay, sustain, release) envelope, in which the "hold" parameter controls how long the envelope stays at full volume before entering the decay phase. Multiple attack, decay and release settings may be found on more sophisticated models.

Certain synthesizers also allow for a delay parameter before the attack. Modern synthesizers like the Dave Smith Instruments Prophet '08 have DADSR (delay, attack, decay, sustain, release) envelopes. The delay setting determines the length of silence between hitting a note and the start of the attack. Some software synthesizers, such as Image-Line's 3xOSC (included with their DAW FL Studio), have DAHDSR (delay, attack, hold, decay, sustain, release) envelopes.
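The extra delay and hold stages can be illustrated by walking through the stages in order while the key is held. The sketch below is an assumption-laden simplification rather than any particular instrument's behavior: segments are linear, the peak level is 1.0, and the release stage is left out for brevity.

```python
def dahdsr_level_while_held(t, delay, attack, hold, decay, sustain):
    """Envelope level t seconds after the key press, while the key stays down.

    delay, attack, hold and decay are stage lengths in seconds;
    sustain is a level between 0.0 and 1.0.
    """
    if t < delay:                 # silence before the attack begins
        return 0.0
    t -= delay
    if t < attack:                # rise to the peak
        return t / attack
    t -= attack
    if t < hold:                  # stay at full level
        return 1.0
    t -= hold
    if t < decay:                 # fall to the sustain level
        return 1.0 - (1.0 - sustain) * t / decay
    return sustain
```

Setting `delay` and `hold` to zero reduces this to the held portion of a plain ADSR envelope.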

LFO

A low-frequency oscillator (LFO) generates an electronic signal, usually below 20 Hz. LFO signals create a periodic control signal or sweep, often used in vibrato, tremolo and other effects. In certain genres of electronic music, the LFO signal can control the cutoff frequency of a VCF to make a rhythmic wah-wah sound, or the signature dubstep wobble bass.
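As a rough illustration of how an LFO acts as a control signal, the sketch below uses a sine LFO to modulate pitch (vibrato) and gain (tremolo). The function names, default rates and depths are assumptions chosen for the example, not values taken from any instrument.

```python
import math

def lfo(t, rate_hz, depth=1.0):
    """Sine LFO value in the range [-depth, +depth] at time t (seconds)."""
    return depth * math.sin(2.0 * math.pi * rate_hz * t)

def vibrato_frequency(t, base_hz, rate_hz=5.0, depth_cents=30.0):
    """Oscillator frequency with the pitch swept up and down by the LFO."""
    cents = lfo(t, rate_hz, depth_cents)
    return base_hz * 2.0 ** (cents / 1200.0)   # 1200 cents per octave

def tremolo_gain(t, rate_hz=4.0, depth=0.5):
    """Amplitude multiplier swinging between (1 - depth) and 1."""
    return 1.0 - depth * 0.5 * (1.0 + lfo(t, rate_hz))
```

Routing the same `lfo` value to a filter's cutoff frequency instead of pitch or gain gives the rhythmic filter sweep described above.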

Patch

A synthesizer patch (some manufacturers chose the term program) is a sound setting. Modular synthesizers used cables ("patch cords") to connect the different sound modules together. Since these machines had no memory to save settings, musicians wrote down the locations of the patch cables and knob positions on a "patch sheet" (which usually showed a diagram of the synthesizer). Ever since, an overall sound setting for any type of synthesizer has been referred to as a patch.

In the mid-to-late 1970s, patch memory (allowing storage and loading of "patches" or "programs") began to appear in synths like the Oberheim Four-voice (1975/1976)[67] and Sequential Circuits Prophet-5 (1977/1978). After MIDI was introduced in 1983, more and more synthesizers could import or export patches via MIDI System Exclusive (SysEx) messages. When a synthesizer patch is uploaded to a personal computer that has patch editing software installed, the user can alter the parameters of the patch and download it back to the synthesizer. Because there is no standard patch language, it is rare that a patch generated on one synthesizer can be used on a different model. However, manufacturers sometimes design a family of synthesizers to be compatible.

Control interfaces

Modern synthesizers often look like small pianos, though with many additional knob and button controls. These are integrated controllers, where the sound synthesis electronics are integrated into the same package as the controller. However, many early synthesizers were modular and keyboardless, while most modern synthesizers may be controlled via MIDI, allowing other means of playing such as:

- Fingerboards (ribbon controllers) and touchpads

- Wind controllers

- Guitar-style interfaces

- Drum pads

- Music sequencers

- Non-contact interfaces akin to theremins

- Tangible interfaces such as the Reactable and AudioCubes

- Various auxiliary input devices, including wheels for pitch bend and modulation, footpedals for expression and sustain, breath controllers, beam controllers, etc.

Fingerboard controller

Right: Mixture Trautonium (replica of 1952)

A ribbon controller or other violin-like user interface may be used to control synthesizer parameters. The idea dates to Léon Theremin's 1922 first concept[68] and his 1932 Fingerboard Theremin and Keyboard Theremin,[69][70] Maurice Martenot's 1928 Ondes Martenot (sliding a metal ring),[71] Friedrich Trautwein's 1929 Trautonium (finger pressure), and was also later utilized by Robert Moog.[72][73][74] The ribbon controller has no moving parts. Instead, a finger pressed down and moved along it creates an electrical contact at some point along a pair of thin, flexible longitudinal strips whose electric potential varies from one end to the other. Older fingerboards used a long wire pressed to a resistive plate. A ribbon controller is similar to a touchpad, but a ribbon controller only registers linear motion. Although it may be used to operate any parameter that is affected by control voltages, a ribbon controller is most commonly associated with pitch bending.

Fingerboard-controlled instruments include the Trautonium (1929), Hellertion (1929) and Heliophon (1936),[75][76][77] Electro-Theremin (Tannerin, late 1950s), Persephone (2004), and the Swarmatron (2004). A ribbon controller is used as an additional controller in the Yamaha CS-80 and CS-60, the Korg Prophecy and Korg Trinity series, the Kurzweil synthesizers, Moog synthesizers, and others.

Rock musician Keith Emerson used a ribbon controller with the Moog modular synthesizer from 1970 onward. In the late 1980s, keyboards in the synth lab at Berklee College of Music were equipped with membrane-thin, ribbon-style controllers that output MIDI. They functioned as MIDI managers, with their programming language printed on their surface, and as expression/performance tools. Designed by Jeff Tripp of Perfect Fretworks Co., they were known as Tripp Strips. Such ribbon controllers can serve as a main MIDI controller instead of a keyboard, as with the Continuum instrument.

Wind controllers

Wind controllers (and wind synthesizers) are convenient for woodwind and brass players, being designed to imitate those instruments. These are usually either analog or MIDI controllers, and sometimes include their own built-in sound modules (synthesizers). In addition to following the key arrangements and fingering of those instruments, the controllers have breath-operated pressure transducers, and may have gate extractors, velocity sensors, and bite sensors. Saxophone-style controllers have included the Lyricon, and products by Yamaha, Akai, and Casio. The mouthpieces range from alto clarinet to alto saxophone sizes. The Eigenharp, a controller similar in style to a bassoon, was released by Eigenlabs in 2009. Melodica- and recorder-style controllers have included the Martinetta (1975)[78] and Variophon (1980),[79] and Joseph Zawinul's custom Korg Pepe.[80] A harmonica-style interface was the Millioniser 2000 (c. 1983).[81]

Trumpet style controllers have included products by Steiner/Crumar/Akai, Yamaha, and Morrison. Breath controllers can also be used to control conventional synthesizers, e.g. the Crumar Steiner Masters Touch,[82] Yamaha Breath Controller and compatible products.[83] Several controllers also provide breath-like articulation capabilities. [clarification needed]

Accordion controllers use pressure transducers on bellows for articulation.

Others

Other controllers include: Theremin, lightbeam controllers, touch buttons (touche d’intensité) on the Ondes Martenot, and various types of foot pedals. Envelope following systems, the most sophisticated being the vocoder, are controlled by the power or amplitude of input audio signal. The Talk box allows sound to be manipulated using the vocal tract, although it is rarely categorized as a synthesizer.

MIDI control

Synthesizers became easier to integrate and synchronize with other electronic instruments and controllers with the introduction of Musical Instrument Digital Interface (MIDI) in 1983.[84] First proposed in 1981 by engineer Dave Smith of Sequential Circuits, the MIDI standard was developed by a consortium now known as the MIDI Manufacturers Association.[85] MIDI is an opto-isolated serial interface and communication protocol.[85] It transmits real-time performance data from one device or instrument to another. This data includes note events, commands for selecting instrument presets (i.e. sounds, programs, or patches previously stored in the instrument's memory), control of performance-related parameters such as volume and effects levels, and synchronization, transport control and other types of data. MIDI interfaces are now almost ubiquitous on music equipment and are commonly available on personal computers (PCs).[85]
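To make the kinds of data listed above concrete, the following sketch builds a few MIDI 1.0 channel messages as raw bytes: a status byte carrying the message type and channel, followed by one or two 7-bit data bytes. The helper names are invented for this example, and channels are numbered 0 to 15 here (shown to users as 1 to 16).

```python
def _check(channel, *data):
    """Validate ranges: 4-bit channel, 7-bit data bytes."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15")
    for value in data:
        if not 0 <= value <= 127:
            raise ValueError("data bytes must be 0..127")

def note_on(channel, note, velocity):
    """Note event: start a note (status 0x9n)."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note, velocity=0):
    """Note event: stop a note (status 0x8n)."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])

def program_change(channel, program):
    """Select a stored preset (status 0xCn, one data byte)."""
    _check(channel, program)
    return bytes([0xC0 | channel, program])

def control_change(channel, controller, value):
    """Set a performance parameter (status 0xBn); controller 7 is channel volume."""
    _check(channel, controller, value)
    return bytes([0xB0 | channel, controller, value])
```

For instance, `note_on(0, 60, 100)` yields the three bytes `90 3C 64` in hexadecimal: middle C on the first channel at velocity 100.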

The General MIDI (GM) software standard was devised in 1991 to serve as a consistent way of describing a set of over 200 sounds (including percussion) available to a PC for playback of musical scores.[86] For the first time, a given MIDI preset consistently produced a specific instrumental sound on any GM-compatible device. The Standard MIDI File (SMF) format (extension .mid) combined MIDI events with delta times – a form of time-stamping – and became a popular standard for exchanging music scores between computers. In the case of SMF playback using integrated synthesizers (as in computers and cell phones), the hardware component of the MIDI interface design is often unneeded.
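In a Standard MIDI File, each delta time is stored as a variable-length quantity: seven bits of the value per byte, most significant group first, with the top bit set on every byte except the last. A minimal sketch of that encoding follows; the function names are chosen for this example.

```python
def encode_delta_time(ticks):
    """Encode a delta time (0..0x0FFFFFFF ticks) as a variable-length quantity."""
    if not 0 <= ticks <= 0x0FFFFFFF:
        raise ValueError("delta time out of range")
    groups = [ticks & 0x7F]                 # last byte: continuation bit clear
    ticks >>= 7
    while ticks:
        groups.append((ticks & 0x7F) | 0x80)  # earlier bytes: continuation bit set
        ticks >>= 7
    return bytes(reversed(groups))

def decode_delta_time(data, pos=0):
    """Decode a variable-length quantity; return (ticks, next_position)."""
    ticks = 0
    while True:
        byte = data[pos]
        pos += 1
        ticks = (ticks << 7) | (byte & 0x7F)
        if not byte & 0x80:
            return ticks, pos
```

A delta of 480 ticks, for example, encodes to the two bytes `83 60`, while any delta below 128 fits in a single byte.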

Open Sound Control (OSC) is another music data specification designed for online networking. In contrast with MIDI, OSC allows thousands of synthesizers or computers to share music performance data over the Internet in realtime.

Typical roles

Synth lead

In popular music, a synth lead is generally used for playing the main melody of a song, but it is also often used for creating rhythmic or bass effects. Although most commonly heard in electronic dance music, synth leads have been used extensively in hip-hop since the 1980s and in rock songs since the 1970s. Much modern popular music relies on the synth lead to provide a musical hook that sustains the listener's interest throughout a song.

Synth pad

A synth pad is a sustained chord or tone generated by a synthesizer, often employed for background harmony and atmosphere in much the same fashion that a string section is often used in acoustic music. Typically, a synth pad plays many whole or half notes, sometimes holding the same note while a lead voice sings or plays an entire musical phrase. Often, the sounds used for synth pads have a vaguely organ, string, or vocal timbre. Much popular music in the 1980s employed synth pads, this being the time of polyphonic synthesizers, as did the then-new styles of smooth jazz and New Age music. One of many well-known songs from the era to incorporate a synth pad is "West End Girls" by the Pet Shop Boys, who were noted users of the technique.

The main feature of a synth pad is a very long attack and decay time with an extended sustain. In some instances, pulse-width modulation (PWM) using a square wave oscillator can be added to create a "vibrating" sound.
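Pulse-width modulation can be sketched as a pulse oscillator whose duty cycle (the fraction of each cycle spent high) is slowly swept by an LFO. The code below is a naive, non-band-limited illustration; the parameter names, default values and clamping limits are assumptions made for the example.

```python
import math

def pwm_wave(freq_hz, lfo_hz, sample_rate, num_samples,
             duty_center=0.5, duty_depth=0.4):
    """Generate a pulse wave whose width is modulated by a sine LFO.

    Returns a list of samples, each +1.0 or -1.0.
    """
    samples = []
    phase = 0.0                                  # position in the cycle, 0..1
    for i in range(num_samples):
        t = i / sample_rate
        duty = duty_center + duty_depth * math.sin(2.0 * math.pi * lfo_hz * t)
        duty = min(max(duty, 0.05), 0.95)        # keep the pulse from vanishing
        samples.append(1.0 if phase < duty else -1.0)
        phase = (phase + freq_hz / sample_rate) % 1.0
    return samples
```

With `duty_depth` set to zero and `duty_center` at 0.5 this is an ordinary square wave; as the depth increases, the changing pulse width shifts the harmonic content over time.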

Synth bass

The bass synthesizer (or "bass synth") is used to create sounds in the bass range, from simulations of the electric bass or double bass to distorted, buzz-saw-like artificial bass sounds, by generating and combining signals of different frequencies. Bass synth patches may incorporate a range of sounds and tones, including wavetable-style, analog, and FM-style bass sounds, delay effects, distortion effects, and envelope filters. A modern digital synthesizer uses a frequency synthesizer microprocessor component to generate signals of different frequencies. While most bass synths are controlled by electronic keyboards or pedalboards, some performers use an electric bass with MIDI pickups to trigger a bass synthesizer.

In the 1970s miniaturized solid-state components allowed self-contained, portable instruments such as the Moog Taurus, a 13-note pedal keyboard played by the feet. The Moog Taurus was used in live performances by a range of pop, rock, and blues-rock bands. An early use of bass synthesizer was in 1972, on a solo album by John Entwistle (the bassist for The Who), entitled Whistle Rymes. Genesis bass player Mike Rutherford used a Dewtron "Mister Bassman" for the recording of their album Nursery Cryme in August 1971. Stevie Wonder introduced synth bass to a pop audience in the early 1970s, notably on "Superstition" (1972) and "Boogie On Reggae Woman" (1974). In 1977 Parliament's funk single "Flash Light" used the bass synthesizer. Lou Reed, widely considered a pioneer of electric guitar textures, played bass synthesizer on the song "Families", from his 1979 album The Bells.

When the programmable music sequencer became widely available in the 1980s (e.g., the Synclavier), bass synths were used to create highly syncopated rhythms and complex, rapid basslines. A particularly influential bass synthesizer was the Roland TB-303, which followed the Firstman SQ-01. Released in late 1981, it featured a built-in sequencer and later became strongly associated with acid house music. The instrument gained wide popularity after Phuture used it for the single "Acid Tracks" in 1987.[88]

In the 2000s, several equipment manufacturers such as Boss and Akai produced bass synthesizer effect pedals for electric bass guitar players, which simulate the sound of an analog or digital bass synth. With these devices, a bass guitar is used to generate synth bass sounds. The BOSS SYB-3 was one of the early bass synthesizer pedals. The SYB-3 uses digital signal processing to reproduce the sounds of analog synthesizers, with saw, square, and pulse synth waves and a user-adjustable filter cutoff. The Akai bass synth pedal contains a four-oscillator synthesizer with user-selectable parameters (attack, decay, envelope depth, dynamics, cutoff, resonance). Bass synthesizer software allows performers to use MIDI to integrate the bass sounds with other synthesizers or drum machines. Bass synthesizers often provide samples from vintage 1970s and 1980s bass synths. Some bass synths are built into an organ-style pedalboard or button board.

Arpeggiator

An arpeggiator is a feature available on several synthesizers that automatically steps through a sequence of notes based on an input chord, thus creating an arpeggio. The notes can often be transmitted to a MIDI sequencer for recording and further editing. An arpeggiator may have controls for speed, range, and the order in which the notes play: upwards, downwards, or random. More advanced arpeggiators allow the user to step through a pre-programmed complex sequence of notes, or play several arpeggios at once. Some allow a pattern to be sustained after the keys are released; in this way, a sequence of arpeggio patterns may be built up over time by pressing several keys one after the other. Arpeggiators are also commonly found in software sequencers. Some arpeggiators/sequencers expand these features into a full phrase sequencer, which allows the user to trigger complex, multi-track blocks of sequenced data from a keyboard or input device, typically synchronized with the tempo of the master clock.
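The basic stepping behavior can be sketched as follows, with the held chord given as MIDI note numbers. The function name, the `octaves` argument standing in for the range control, and the way random mode picks notes are assumptions made for this illustration; speed (the time between steps) is left to the caller.

```python
import random

def arpeggiate(chord, mode="up", octaves=1, steps=8, rng=None):
    """Return `steps` notes stepping through the held chord.

    chord   -- iterable of MIDI note numbers currently held
    mode    -- "up", "down", or "random"
    octaves -- range control: repeat the chord over this many octaves
    rng     -- optional random.Random instance for reproducible random mode
    """
    pool = sorted({note + 12 * octave
                   for octave in range(octaves)
                   for note in chord})
    if not pool:
        return []
    if mode == "up":
        return [pool[i % len(pool)] for i in range(steps)]
    if mode == "down":
        descending = pool[::-1]
        return [descending[i % len(descending)] for i in range(steps)]
    if mode == "random":
        rng = rng or random.Random()
        return [rng.choice(pool) for _ in range(steps)]
    raise ValueError("mode must be 'up', 'down', or 'random'")
```

Holding a C minor triad (notes 60, 63, 67) in "up" mode over one octave produces 60, 63, 67, 60, 63, 67 and so on until the keys are released.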

Arpeggiators seem to have grown from the accompaniment systems of electronic organs of the mid-1960s to mid-1970s,[89] and possibly from hardware sequencers[citation needed] of the mid-1960s, such as the 8/16-step analog sequencers on modular synthesizers (Buchla Series 100 (1964/1966)). They were commonly fitted to keyboard instruments through the late 1970s and early 1980s. Notable examples are the RMI Harmonic Synthesizer (1974),[90] Roland Jupiter-8, Oberheim OB-8, Roland SH-101, Sequential Circuits Six-Trak and Korg Polysix. A famous example can be heard on Duran Duran's song "Rio", in which the arpeggiator on a Roland Jupiter-4 is heard playing a C minor chord in random mode. Arpeggiators fell out of favor in the late 1980s and early 1990s and were absent from the most popular synthesizers of the period, but renewed interest in analog synthesizers during the 1990s, together with the use of rapid-fire arpeggios in several popular dance hits, brought them back.

See also

- Lists

- Various synthesizers

- Related instruments & technologies

- Components & technologies

- Music genres

- Notable works

Notes

- ^ a b c Commercially successful early digital synthesizers and digital samplers introduced from the late 1970s through the early 1980s (each selling several hundred units or more per series) included:

- NED Synclavier (1977–1992) by New England Digital, based on research on the Dartmouth Digital Synthesizer since 1973.

- Fairlight CMI (1979–1988, over 300 units) in Sydney, based on the early development of the Qasar M8 by Tony Furse in Canberra since 1972.

- Yamaha GS-1, GS-2 (1980, around 100 units) and CE20, CE25 (1982) in Hamamatsu, based on research into frequency modulation synthesis by John Chowning between 1967 and 1973, and early development of the TRX-100 and Programmable Algorithm Music Synthesizer (PAMS) by Yamaha between 1973 and 1979 (Yamaha 2014).

- E-mu Emulator (1981–2000s) in California, roughly based on the notion of the wavetable oscillator seen in the MUSIC language in the 1960s.

- PPG Wave (1981–1987, around 1,000 units) in Hamburg, based on wavetable synthesis previously implemented on the PPG Wavecomputer 360, 340 and 380 circa 1978.

The long history of additive synthesis also represents important background research relating to digital synthesis, although it is not listed above because it lacked commercially successful products; most products in the list above, and even Yamaha Vocaloid (EpR based on SMS) in 2003, were influenced by it.

- ^ For details of the new trend in music influenced by early digital instruments, see Fairlight§Artists who used the Fairlight CMI, Synclavier§Notable users and E-mu Emulator§Notable users.

- ^ Sample-based synthesis was previously introduced by the E-mu Emulator II in 1984, Ensoniq Mirage in 1985, Ensoniq ESQ-1 and Korg DSS-1 in 1986, etc.

References

- ^ a b "The Palatin Project-The life and work of Elisha Gray". Palatin Project.

- ^ a b Brown, Jeremy K. (2010). Stevie Wonder: Musician. Infobase Publishing. p. 50. ISBN 978-1-4381-3422-2.

- ^ "Elisha Gray and "The Musical Telegraph"(1876)", 120 Years of Electronic Music, 2005, archived from the original on 2009-02-22, retrieved 2011-08-01

- ^ Chadabe, Joel (February 2000), The Electronic Century Part I: Beginnings, Electronic Musician, pp. 74–90

- ^ US patent 580,035, Thaddeus Cahill, "Art of and apparatus for generating and distributing music electrically", issued 1897-04-06

- ^ Millard, Max (October 1993). "Lee de Forest, Class of 1893:Father of the Electronics Age". Northfield Mount Hermon Alumni Magazine.

- ^ "The Audion Piano (1915)". 120 Years of Electronic Music.

- ^ Glinsky, Albert (2000), Theremin: Ether Music and Espionage, Urbana, Illinois: University of Illinois Press, p. 26, ISBN 0-252-02582-2

- ^ Edmunds, Neil (2004), Soviet Music and Society Under Lenin and Stalin, London: Routledge Curzon

- ^ Holzer, Derek (February 2010), Tonewheels – a brief history of optical synthesis, Umatic.nl

- ^ Kreichi, Stanislav (10 November 1997), The ANS Synthesizer: Composing on a Photoelectronic Instrument, Theremin Center,

Despite the apparent simplicity of his idea of reconstructing a sound from its visible image, the technical realization of the ANS as a musical instrument did not occur until 20 years later. / Murzin was an engineer who worked in areas unrelated to music, and the development of the ANS synthesizer was a hobby and he had many problems realizing on a practical level.

- ^ Rhea, Thomas L., "Harald Bode's Four-Voice Assignment Keyboard (1937)", eContact!, 13 (4) (reprint ed.), Canadian Electroacoustic Community (July 2011), originally published as Rhea, Tom (December 1979), "Electronic Perspectives", Contemporary Keyboard, 5 (12): 89

- ^ Warbo Formant Organ (photograph), 1937

- ^ "The 'Warbo Formant Orgel' (1937), The 'Melodium' (1938), The 'Melochord' (1947-9), and 'Bode Sound Co' (1963-)", 120 years of Electronic Music, archived from the original on 2012-04-02 (Note: the original URL is still active, however the original title and content have been changed)

- ^ a b Cirocco, Phil (2006). "The Novachord Restoration Project". Cirocco Modular Synthesizers.

- ^ Steve Howell; Dan Wilson. "Novachord". Hollow Sun. (see also 'History' page)

- ^ Gayle Young (1999). "Electronic Sackbut (1945–1973)". HughLeCaine.com.

- ^ 一時代を画する新楽器完成 浜松の青年技師山下氏 [Epoch-making new musical instrument completed: Mr. Yamashita, a young engineer of Hamamatsu]. Hochi Shimbun (in Japanese). 1935-06-08.

- ^ 新電氣樂器 マグナオルガンの御紹介 [New electric musical instrument: an introduction to the Magna Organ] (in Japanese). Hamamatsu: 日本樂器製造株式會社 (Yamaha). October 1935. 特許第一〇八六六四号, 同 第一一〇〇六八号, 同 第一一一二一六号 [Patent No. 108664, No. 110068, No. 111216].

- ^ Fujii, Koichi (2004). "Chronology of early electroacoustic music in Japan: What types of source materials are available?". Organised Sound. 9 (1). Cambridge University Press: 63–77 [64–6]. doi:10.1017/S1355771804000093.

- ^ Holmes 2008, p. 106, Early Electronic Music in Japan

- ^ Davies, Hugh (2001). "Synthesizer [Synthesiser]". In Stanley Sadie and John Tyrrell (eds.). The New Grove Dictionary of Music and Musicians (second ed.). London: Macmillan Publishers. ISBN 978-0-19-517067-2.

- ^ Holmes 2008, pp. 145–146, Early Synthesizers and Experimenters

- ^ "The RCA Synthesizer & Its Synthesists". Contemporary Keyboard. 6 (10). GPI Publications: 64. October 1980. Retrieved 2011-06-05.[full citation needed]

- ^ Harald Bode (The Wurlitzer Company). "Sound Synthesizer Creates New Musical Effects" (PDF). Electronics (December 1, 1961).

- ^ Harald Bode (Bode Sound Co.) (September 1984). "History of Electronic Sound Modification" (PDF). Journal of the Audio Engineering Society (JAES). 32 (10): 730–739. (Note: Draft typescript is available at the tail of PDF version, along with Archived 2011-06-09 at the Wayback Machine without draft.)

- ^ Bode, Harald (1961), "European Electronic Music Instrument Design", Journal of the Audio Engineering Society (JAES), ix (1961): 267

- ^ "In Memoriam" (PDF). Journal of the Audio Engineering Society (JAES). 35 (9): 741. September 1987. Retrieved 2007-07-18.

- ^ Vail 2000, p. 71, The Euro-Synth Industry - Italy. "First things first: In 1964, Paul Ketoff constructed the Synket in Rome. This was around the time that Bob Moog and Don Buchla independently began shipping their modular synthesizer wares."

- ^ Pizzaleo, Luigi (2012), "Durata e articolazione del progetto-Synket" (excerpt from PhD dissertation), LuigiPizzaleo.it (in Italian)

- ^ Pizzaleo, Luigi (2014-07-30), Il liutaio elettronico. Paolo Ketoff e l'invenzione del Synket, Immota harmonia (in Italian), vol. 20, Aracne, ISBN 9788854873636

- ^ a b Holmes 2008, p. 208. "Moog became the first synthesizer designer to popularize the technique of voltage control in analog electronic musical instruments. Donald Buchla in the United States and Paul Ketoff in Italy had been developing commercial synthesizers using the same principle at about the same time, but their equipment never reached the level of public acceptance of Moog's products and only a handful were sold."

- ^ "This Week in Synths: The Stearns Collection Moog, Mike Oldfield's OB-Xa, MOOG IIIp". Create Digital Music. 2007-03-23.

Moog synthesizer, Stearns 2035 is known as 1st commercial Moog synthesizer commissioned by the Alwin Nikolais Dance Theater of New York in October 1964. Now it resides as part of the Stearns Collection at the University of Michigan- "Stearns Collection". School of Music, Theatre & Dance, University of Michigan.

- ^ Catchlove, Lucinda (August 2002), "Robert Moog", Remix, Oklahoma City [verification needed]

- ^ Gluck, Robert J. (February 2012), "Nurturing Young Composers: Morton Subotnick's Late-1960s Studio in New York City", Computer Music Journal, 36 (1): 65–80, doi:10.1162/COMJ_a_00106,

Buchla's Electronic Music Box was designed in response to Subotnick's and (San Francisco) Tape Music Center colleague Ramon Sender's (b. 1934) desire for a compositional instrument that generated electronic sounds, and sequences of sounds, without the use of magnetic tape.

- ^ Gluck, Bob (October 16, 2013), "Morton Subotnick's Sidewinder", New Music USA,

When Subotnick (with Ramon Sender) commissioned Donald Buchla to design what became the Buchla Box, his goal was an artist-friendly compositional tool that didn't depend upon recorded sound. ... The process of its development by Don Buchla, initially a spinning light wheel to create waveforms and then a modular system with integrated circuits, is described in the Spring 2012 issue of Computer Music Journal. ... The Buchla prototype was ready for the 1964-1965 season, but was little used prior to Subotnick's departure for New York in 1966. His theater piece Play 4 (1966) was the only work for the Buchla that Subotnick completed in San Francisco.

- ^ Eisengrein, Doug (September 1, 2005), Renewed Vision, Remix Magazine, retrieved 2008-04-16

- ^ Lefcowitz, Eric (1989), The Monkees Tale, Last Gasp, p. 48, ISBN 0-86719-378-6

- ^ Catchlove, Lucinda (April 1, 2002), Wendy Carlos (electronic musician), Remix Magazine

- ^ Tomita at AllMusic. Retrieved 2011-06-04.

- ^ Mark Jenkins (2007), Analog synthesizers: from the legacy of Moog to software synthesis, Elsevier, pp. 133–4, ISBN 0-240-52072-6, retrieved 2011-05-27

- ^ Stevie Wonder, American profile, retrieved 2014-01-09

- ^ "Herbie Hancock profile", Sound on Sound (July 2002), retrieved 2014-01-09

- ^ Chicory Tip (official website)

- ^ "The Prophet 5 and 10", gordonreid.co.uk, retrieved 2014-01-09

- ^ "The Synthesizers that shaped modern music", thevinylfactory.com, retrieved 2014-01-09

- ^ Russ, Martin (May 2004), Sound Synthesis and Sampling, Taylor and Francis, retrieved 2014-01-09

- ^ a b Leete, Norm. "Fairlight Computer". Sound On Sound (April 1999).

The huge cost of the Fairlight CMI did not put the rich and famous off. Peter Vogel brought an early CMI to the UK in person, and one of the first people to get one was Peter Gabriel. Once UK distributor Syco Systems had been set up, the client list started to grow. ... as the total number of CMIs and Series II / IIxs comes to about 300 (of which only about 50 made it to the UK).

(Note: the CMI Series III does not appear to be included in this count.)

- ^ Vogel, Peter (c. 2011). "History (and future)". Peter Vogel Instruments.

over 300 "Fairlights" were sold world-wide.

- ^ Reid, Gordon. "Yamaha GS1 & DX1 - Part 1: The Birth, Rise and Further Rise of FM Synthesis (Retro)". Sound On Sound (August 2001).

the GS1 retailed for £12,000 ... Yamaha sold only around 100 or so GS1s and, in all likelihood, few more GS2s.

- ^ Reid, Gordon. "SOUNDS OF THE '80S - Part 2: The Yamaha DX1 & Its Successors (Retro)". Sound On Sound (September 2001).

With around 200,000 units sold, the DXs and TXs dominated the mid-'80s music scene in a way that no other keyboards or modules had done before, ...

(Note: this sales record was possibly later surpassed by the Korg M1 (250,000 units), according to Sound On Sound, February 2002.)

- ^ "[Chapter 2] FM Tone Generators and the Dawn of Home Music Production". Yamaha Synth 40th Anniversary - History. Yamaha Corporation. 2014.

- ^ "Synthlearn – the DX7", synthlearn, retrieved 2014-01-09[verification needed]

- ^ Wiffen, Paul, "History Of Onboard Synth Effects, Part 1", Sound On Sound (March 1999), retrieved 2014-01-09

- ^ "Korg M1", Sound On Sound (February 2002), retrieved 2014-01-09

- ^ Holmes, Paul (22 May 2012), Electronic and Experimental Music, Routledge, retrieved 2014-01-09

- ^ The revival of analog electronics in a digital world, newelectronics, August 2013, retrieved 2014-01-09

- ^ a b c d e Borthwick 2004, p. 120

- ^ George-Warren, Holly (2001), The Rolling Stone Encyclopedia of Rock & Roll, Fireside, pp. 707–734, ISBN 0-7432-0120-5

- ^ Robbins, Ira A (1991), The Trouser Press Record Guide, Maxwell Macmillan International, p. 473, ISBN 0-02-036361-3

- ^ Black, Johnny (2003), "The Greatest Songs Ever! Hungry Like the Wolf", Blender Magazine (January/February 2003), retrieved 2008-04-16[dead link]

- ^ Borthwick 2004, p. 130

- ^ Vail 2000, p. 68

- ^ Reid, Gordon (2000). "Synth Secrets, Part 9: An Introduction to VCAs". Sound on Sound (January 2000). Retrieved 2010-05-25.

- ^ Charles Dodge, Thomas A. Jerse (1997). Computer Music. New York: Schirmer Books. p. 82.

- ^ Pinch, Trevor; Frank Trocco (2004). Analog Days: The Invention and Impact of the Moog Synthesizer. Harvard University Press. ISBN 978-0-674-01617-0.

- ^ "Oberheim Polyphonic Synthesizer Programmer (ad)". Contemporary Keyboard Magazine (ad) (September/October 1976): 19.

- ^ Thom Holmes, Thomas B. Holmes (2002), Electronic and experimental music: pioneers in technology and composition, Routledge, p. 59, ISBN 978-0-415-93644-6

- ^ "Radio Squeals Turned to Music for Entire Orchestra", Popular Science (June 1932): 51

(Note: the article reported on Léon Theremin's new electronic instruments used at his electric orchestra's first public recital at Carnegie Hall, New York City, including the Fingerboard Theremin, the Keyboard Theremin with fingerboard controller, and the Terpsitone, a platform-style performance instrument on which a dancer could play music through body movement.)

- ^ Glinsky, Albert (2000), Theremin: ether music and espionage, University of Illinois Press, p. 145, ISBN 978-0-252-02582-2,

In addition to its 61 keys (five octaves), it had a "fingerboard channel" offering an alternate interface for string players.

- ^ Brend, Mark (2005). Strange sounds: offbeat instruments and sonic experiments in pop. Hal Leonard Corporation. p. 22. ISBN 0-87930-855-9.

- ^ "Moogtonium (1966–1968)". Moog Foundation. — Max Brand's version of Mixture Trautonium, built by Robert Moog during 1966–1968.

- ^ Synthesizer technique. Hal Leonard Publishing Corporation. 1984. p. 47. ISBN 0-88188-290-9.

- ^ Pinch, Trevor; Frank Trocco (2004). Analog Days: The Invention and Impact of the Moog Synthesizer. Harvard University Press. p. 62. ISBN 0-674-01617-3.

- ^ "The "Hellertion"(1929) & the "Heliophon"(1936)", 120 Years of Electronic Music

- ^ Peter Lertes (1933), Elektrische Musik: eine gemeinverständliche Darstellung ihrer Grundlagen, des heutigen Standes der Technik und ihre Zukunftsmöglichkeiten, Dresden & Leipzig

- ^ J. Marx (1947). "Heliophon, ein neues Musikinstrument". Ömz. ii (1947): 314.

- ^ Christoph Reuter, Martinetta and Variophon, Variophon.de

- ^ Christoph Reuter, Variophon and Martinetta Enthusiasts Page, Variophon.de

- ^ Joseph Pepe Zawinul, Melodicas.com (also another photograph is shown on gallery page)

- ^ Millioniser 2000 Promo Video Rock Erickson London, England 1983, MatrixSynth.com, July 21, 2009

- ^ Crumar Steiner Masters Touch CV Breath Controller, MatrixSynth.com, January 21, 2008

- ^ Yamaha DX100 with BC-1 Breath Controller, MatrixSynth.com, December 16, 2007

- ^ The Complete MIDI 1.0 Detailed Specification, MIDI Manufacturers Association Inc., retrieved 2008-04-10

- ^ a b c Rothstein, Joseph (1995), MIDI: A Comprehensive Introduction, A-R Editions, pp. 1–11, ISBN 0-89579-309-1, retrieved 2008-05-30

- ^ Webster, Peter Richard; Williams, David Brian (2005), Experiencing Music Technology: Software, Data, and Hardware, Thomson Schirmer, p. 221, ISBN 0-534-17672-0

- ^ Royalty Free Music : Funk – incompetech (mp3). Kevin MacLeod (incompetech.com).

- ^ Aitken, Stuart (10 May 2011). "Charanjit Singh on how he invented acid house … by mistake". The Guardian.

- ^ US patent 3,358,070, Alan C. Young (Hammond Co.), "Electronic Organ Arpeggio Effect Device", issued 1967-12-12

- ^ "RMI Harmonic Synthesizer". Jarrography – The ultimate Jean Michel Jarre discography.

Bibliography

- Borthwick, Stuart (2004), Popular Music Genres: An Introduction, Edinburgh University Press, p. 120, ISBN 0-7486-1745-0

- Holmes, Thom (2008), Electronic and experimental music: technology, music, and culture (3rd ed.), Taylor & Francis, ISBN 0-415-95781-8, retrieved 2011-06-04

- Vail, Mark (2000), Vintage Synthesizers: Groundbreaking Instruments and Pioneering Designers of Electronic Music Synthesizers, Backbeat Books, pp. 68–342, ISBN 0-87930-603-3

Further reading

- Gorges, Peter (2005). Programming Synthesizers. Germany, Bremen: Wizoobooks. ISBN 978-3-934903-48-7.

- Schmitz, Reinhard (2005). Analog Synthesis. Germany, Bremen: Wizoobooks. ISBN 978-3-934903-01-2.

- Shapiro, Peter (2000). Modulations: A History of Electronic Music: Throbbing Words on Sound. ISBN 1-891024-06-X.