Talk:Numerical differentiation

Revision as of 20:08, 20 October 2015

WikiProject Mathematics: C-class, High-priority

Generalizing the 5 point method

Does anyone know how to generalize the 5 point method using , , , and ? I have a series of points in Latitude, Longitude, and Altitude and I'm trying to get an accurate estimation of heading at each point. Yoda of Borg 22:37, 6 September 2007 (UTC)
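One common way to generalize the five-point method to arbitrary, possibly unevenly spaced, sample positions is to differentiate the Lagrange interpolating polynomial through the points (the same idea mentioned further down this page for the three-point case). Below is a minimal Python sketch. It assumes scalar sample positions, such as time stamps, and its function names `first_derivative_weights` and `derivative_at` are hypothetical and not taken from the article:

```python
from fractions import Fraction

def first_derivative_weights(x0, nodes):
    """Hypothetical helper: weights w_j with f'(x0) ~ sum(w_j * f(nodes[j])).

    Each weight is the derivative at x0 of a Lagrange basis polynomial
    through `nodes`. The rule is therefore exact for every polynomial of
    degree < len(nodes). The nodes must be distinct.
    """
    weights = []
    for j, xj in enumerate(nodes):
        total = 0
        # Product rule: L_j'(x0) = sum_{k != j} 1/(xj - xk) * prod_{m != j,k} (x0 - xm)/(xj - xm)
        for k, xk in enumerate(nodes):
            if k == j:
                continue
            term = 1 / (xj - xk)
            for m, xm in enumerate(nodes):
                if m != j and m != k:
                    term *= (x0 - xm) / (xj - xm)
            total += term
        weights.append(total)
    return weights

def derivative_at(x0, nodes, values):
    """Approximate f'(x0) from samples values[j] = f(nodes[j])."""
    return sum(w * v for w, v in zip(first_derivative_weights(x0, nodes), values))

# Equally spaced nodes with h = 1 (exact rational arithmetic via Fraction)
print(first_derivative_weights(Fraction(0), [Fraction(n) for n in (-2, -1, 0, 1, 2)]))
```

With equally spaced nodes this reproduces the weights 1/12, -2/3, 0, 2/3, -1/12, which is the usual (f(x-2h) - 8f(x-h) + 8f(x+h) - f(x+2h))/(12h). For track data, one would presumably apply `derivative_at` to each coordinate as a function of time or arc length, using two neighbours on each side and one-sided node sets near the ends.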

The file from Numerical Recipes is encrypted

Does anyone know the password?--plarq 14:12, 9 March 2009 (GMT+8)

Higher order methods

I think you're supposed to divide by 20*h, not 12*h. — Preceding unsigned comment added by 194.127.8.18 (talk) 15:21, 30 August 2011 (UTC)
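Suppose the formula under discussion is the standard five-point central stencil (this comment does not quote its coefficients). In that case a Taylor expansion points to 12h as the right divisor: 8[f(x+h) - f(x-h)] - [f(x+2h) - f(x-2h)] = 12h f'(x) + O(h^5). The Python check below tests this. The function name is illustrative:

```python
import math

def five_point_derivative(f, x, h):
    """Five-point central difference for f'(x); truncation error about -h^4 f^(5)(x)/30."""
    return (f(x - 2 * h) - 8 * f(x - h) + 8 * f(x + h) - f(x + 2 * h)) / (12 * h)

# The h^3 terms cancel in 8*[f(x+h) - f(x-h)] - [f(x+2h) - f(x-2h)].
# Dividing by 12*h therefore makes the rule exact for polynomials up to degree 4.
x, h = 1.0, 1e-2
print(five_point_derivative(math.sin, x, h), math.cos(x))
```

Dividing by 20h instead would scale every estimate by 12/20 = 0.6, so even a polynomial would come out wrong.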

Three point?

For the finite difference formula there is mention of "three-point" when actually, only two points are involved, namely at f(x) and f(x + h) or alternatively at f(x - h) and f(x + h); in particular this last does not involve the point f(x). NickyMcLean (talk) 02:25, 7 August 2012 (UTC)

- This one given for "difference methods" seems OK. I think your query assumes that the derivative is based on a quadratic fit (Lagrange polynomial), for which I think there should be the alternate form given below. See http://www.shodor.org/cserd/Resources/Algorithms/NumericalDifferentiation/ http://www.nd.edu/~zxu2/acms40390F11/sec4-1-derivative-approximation-1.pdf - I don't know math markup; perhaps someone can add it to the article. Shyamal (talk) 03:44, 7 August 2012 (UTC)

y'(x) = \frac{-3*y(x) + 4*y(x+h) - y(x+2h)}{2*h}
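Both this formula and the plain two-point forward difference need samples on only one side of x. The short Python sketch below compares them on exp at x = 0. The test function, the step sizes and the function names are arbitrary illustrative choices:

```python
import math

def forward_two_point(f, x, h):
    """(f(x+h) - f(x)) / h: first-order accurate."""
    return (f(x + h) - f(x)) / h

def forward_three_point(f, x, h):
    """(-3 f(x) + 4 f(x+h) - f(x+2h)) / (2h): second-order accurate."""
    return (-3 * f(x) + 4 * f(x + h) - f(x + 2 * h)) / (2 * h)

# Halving h should roughly halve the two-point error and quarter the three-point error.
for h in (0.1, 0.05, 0.025):
    e2 = abs(forward_two_point(math.exp, 0.0, h) - 1.0)
    e3 = abs(forward_three_point(math.exp, 0.0, h) - 1.0)
    print(f"h={h:<6} two-point error={e2:.2e} three-point error={e3:.2e}")
```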

seems to work despite not being {...}over{...} or similar so I'm not sure about the parsing of more complex formulae. I followed the example earlier in the article by activating "edit" and seeing what gibberish was employed. Anyway, although your reference does indeed apply the appellation "three point" to and also to the formula you quote, one manifestly has two points and the other three. Both do indeed span three x-positions (x - h, x and x + h) but one definitely uses three points, and the other two. So, despite your reference, I am in favour of counting correctly! Also, I strongly favour slope estimations that straddle the position of interest, so the three points should be x - h, x, and x + h for the slope estimate at x. NickyMcLean (talk) 21:38, 7 August 2012 (UTC)

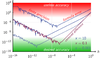

Comparison of two point and Five-point stencil error as a function of h

Thanks for the great work on the article so far. It would be good if this fantastic graph of error versus h also showed the error of a four-point method. I am trying to work out whether I should implement a four-point method in my minimization problem, and this would be a great help. Doug (talk) 18:32, 23 November 2012 (UTC)
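Numbers for such a plot can be obtained by tabulating the error of each rule against h. The Python sketch below makes two assumptions. First, it takes "two-point" to mean the forward difference. Second, its four-point rule (nodes x - h, x, x + h, x + 2h, third-order accurate) is only one possible choice and is not necessarily what the figure or the article uses:

```python
import math

def two_point(f, x, h):
    # Forward difference (assumed meaning of "two-point"); error O(h).
    return (f(x + h) - f(x)) / h

def four_point(f, x, h):
    # One possible four-point rule on x-h, x, x+h, x+2h; error O(h^3).
    return (-2 * f(x - h) - 3 * f(x) + 6 * f(x + h) - f(x + 2 * h)) / (6 * h)

def five_point(f, x, h):
    # Central five-point stencil; error O(h^4).
    return (f(x - 2 * h) - 8 * f(x - h) + 8 * f(x + h) - f(x + 2 * h)) / (12 * h)

x = 1.0
exact = math.cos(x)  # derivative of sin at x
for k in range(1, 13):
    h = 10.0 ** -k
    errors = [abs(rule(math.sin, x, h) - exact) for rule in (two_point, four_point, five_point)]
    print(f"h=1e-{k:02d}  " + "  ".join(f"{e:.1e}" for e in errors))
```

For very small h, rounding error (roughly proportional to eps/h) dominates, so each error curve turns upward below some optimal h. The higher-order rules reach that minimum at larger h.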

Mixed partial derivatives

AFAIK they are computed by successively differentiating in each variable to the desired order. But does it always give the best approximation? A few words added to the article by experts would be helpful. 89.138.86.3 (talk) 15:56, 5 July 2013 (UTC)

Apparently, successive differentiation does not do the trick for central differences, as I found this formula in a paper/PhD thesis (LaTeX code; "i" and "j" are indices):

% if f_{x}(i,j)*f_{y}(i,j) < 0

f_{xy}(i,j) = \frac{2*f(i,j) - f(i+1,j) + f(i+1,j+1) - f(i-1,j) + f(i-1,j-1) - f(i,j+1) - f(i,j-1)}{2*h^{2}}

% else

f_{xy}(i,j) = \frac{-2*f(i,j) + f(i+1,j) - f(i+1,j-1) + f(i-1,j) - f(i-1,j+1) + f(i,j+1) + f(i,j-1)}{2*h^{2}}

However, the author does not discuss the consistency of these formulas.

The source is chapter 5 of "From Pixels to Regions: Partial Differential Equations in Image Analysis" by Thomas Brox.
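One way to probe the consistency question is to apply both stencils to a quadratic whose mixed derivative is known. If a stencil reproduces f_xy exactly for every quadratic, a Taylor expansion shows it is a consistent approximation. The Python sketch below reads the garbled term `j(i,j+1)` in the second formula as `+ f(i,j+1)`, because that reading makes the stencil exact for quadratics. The helper names `fxy_brox` and `sample` are hypothetical:

```python
def fxy_brox(f, i, j, h, fx, fy):
    """Mixed-derivative stencils as quoted above; the branch depends on the sign of fx*fy."""
    if fx * fy < 0:
        num = (2 * f(i, j) - f(i + 1, j) + f(i + 1, j + 1) - f(i - 1, j)
               + f(i - 1, j - 1) - f(i, j + 1) - f(i, j - 1))
    else:
        num = (-2 * f(i, j) + f(i + 1, j) - f(i + 1, j - 1) + f(i - 1, j)
               - f(i - 1, j + 1) + f(i, j + 1) + f(i, j - 1))
    return num / (2 * h * h)

def sample(g, x0, y0, h):
    """Grid accessor: f(i, j) = g(x0 + i*h, y0 + j*h)."""
    return lambda i, j: g(x0 + i * h, y0 + j * h)

g = lambda x, y: 3 * x**2 + 2 * x * y - y**2 + x  # f_xy = 2 everywhere
f = sample(g, 0.3, -0.7, 0.1)
print(fxy_brox(f, 0, 0, 0.1, fx=1.0, fy=-1.0), fxy_brox(f, 0, 0, 0.1, fx=1.0, fy=1.0))
```

Both branches return 2 up to rounding. This suggests that each stencil on its own is consistent, and the sign test only chooses which diagonal neighbours to use.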

Another source (I cannot recall the name) suggested successive differentiation for calculating a forward difference (or, more precisely, a forward-forward difference, since differentiation was done in the forward direction for each dimension). It seems to work, but I have my doubts and would therefore be grateful to see a section on forward and backward differences in multiple dimensions. — Preceding unsigned comment added by 188.97.251.186 (talk) 17:35, 12 July 2014 (UTC)
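Successive forward differencing in x and y gives the forward-forward stencil (f(x+h,y+h) - f(x+h,y) - f(x,y+h) + f(x,y))/h^2. This stencil is consistent but only first-order accurate, which is one concrete basis for such doubts. The Python sketch below shows the error roughly halving when h is halved. The test function sin(x)*exp(y) and the function name are arbitrary choices:

```python
import math

def forward_forward_fxy(g, x, y, h):
    """Forward difference in x of the forward difference in y; error O(h)."""
    dy = lambda xx: (g(xx, y + h) - g(xx, y)) / h
    return (dy(x + h) - dy(x)) / h

g = lambda x, y: math.sin(x) * math.exp(y)
exact = math.cos(0.5) * math.exp(0.2)  # f_xy of g at (0.5, 0.2)
for h in (1e-2, 5e-3, 2.5e-3):
    print(h, abs(forward_forward_fxy(g, 0.5, 0.2, h) - exact))
```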

I do have to correct what I wrote earlier, as successive differentiation seems to work. "Numerical Recipes" states that e.g. for two dimensions, the mixed partial derivative is (LaTeX code; "i" and "j" are indices, h is grid size):

f_{xy}(i,j) = \frac{(f(x+h,y+h) - f(x+h,y-h)) - (f(x-h,y+h) - f(x-h,y-h))}{4*h^2}

Evidently, successive derivation with a numerical scheme gives the same result. — Preceding unsigned comment added by 188.97.251.186 (talk) 19:42, 12 July 2014 (UTC)
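The equivalence follows by expansion. Applying the central difference in x to the central difference in y gives exactly the four-point formula quoted above. The Python sketch below compares the nested operators with that formula. The function names and the test function sin(x)*exp(y) are illustrative choices:

```python
import math

def central_x(g, h):
    return lambda x, y: (g(x + h, y) - g(x - h, y)) / (2 * h)

def central_y(g, h):
    return lambda x, y: (g(x, y + h) - g(x, y - h)) / (2 * h)

def fxy_nr(g, x, y, h):
    """Mixed partial as quoted above from Numerical Recipes."""
    return ((g(x + h, y + h) - g(x + h, y - h)) - (g(x - h, y + h) - g(x - h, y - h))) / (4 * h * h)

g = lambda x, y: math.sin(x) * math.exp(y)
h = 1e-3
nested = central_x(central_y(g, h), h)(0.5, 0.2)
print(nested, fxy_nr(g, 0.5, 0.2, h), math.cos(0.5) * math.exp(0.2))
```

The two computed values differ only by rounding. Both approach the exact value with O(h^2) error.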

code in section "Practical considerations using floating point arithmetic" is incorrect?

My criticism on the code in section "Practical considerations using floating point arithmetic":

1. There is no reason to assume Sqrt(epsilon)*x is a good estimate of the ideal step size. Instead, it should be 2 * Sqrt(epsilon) * Sqrt(|f(x)|) / Sqrt(|f''(x)|). See source [A], lemma 11.13, page 263.

2. Any sensible method for numerical differentiation should be scale independent. Yours is not, because you explicitly use max(x, 1) in your calculations. Why do I think scale independence is important? Say 'x' is in millivolts and your algorithm yields good results. Then you decide to change 'x' to megavolts in your data, so all values of 'x' get rescaled by 10^-9. But then your algorithm gives strikingly different precision?! This clearly should not be true for floating point numbers.

3. I don't see why computing (x+h-h) should improve the accuracy of your results. IMHO this adds further round-off errors to your total result.
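The step size in point 1 comes from balancing two error terms: the truncation error, about h|f''(x)|/2, and the rounding error in f(x+h) - f(x), about 2·eps·|f(x)|/h. This choice also bears on point 2. If x is rescaled by a factor c, f'' picks up a factor 1/c^2, so h rescales along with x. On point 3, the usual reason for forming (x+h) - x is to divide by the step actually taken in floating point rather than by the nominal h. The Python sketch below assumes f''(x) is known or roughly estimated. The names `optimal_forward_step` and `forward_difference` are hypothetical:

```python
import math
import sys

EPS = sys.float_info.epsilon

def optimal_forward_step(fx, fxx, eps=EPS):
    """h* = 2*sqrt(eps*|f(x)|/|f''(x)|), the minimiser of h*|f''|/2 + 2*eps*|f(x)|/h."""
    return 2.0 * math.sqrt(eps * abs(fx) / abs(fxx))

def forward_difference(f, x, h):
    """Forward difference that divides by the step actually representable at x."""
    temp = x + h   # rounded to the nearest double
    dx = temp - x  # exact when h is small relative to |x| (Sterbenz lemma)
    return (f(temp) - f(x)) / dx

x = 1.0
h = optimal_forward_step(math.exp(x), math.exp(x))  # here f'' = f = exp is known exactly
print(h, forward_difference(math.exp, x, h) - math.exp(x))
print((1.0 + 0.1) - 1.0)  # the step actually taken differs slightly from 0.1
```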

If you think what I say is wrong, please add external sources that support your algorithm and derivations. IMHO, you have never read a book on numerical differentiation and invented your algorithm from scratch. The correct procedure for computing the ideal step size can be found in source [A], Procedure 11.15 on page 265. Having read source [A], I personally find your explanations more misleading than helpful.

Source [A]: http://www.uio.no/studier/emner/matnat/math/MAT-INF1100/h10/kompendiet/kap11.pdf