AVL tree: Difference between revisions

Added delete and insert explanations. Still needs links, citations and verification. |

Added new content on bulk operations |

Line 67:

{{refimprove section|date=November 2016}}

When deleting an element that has two children from an AVL tree, first swap it with the minimum element of its right subtree or the maximum element of its left subtree; in its new position the element has at most one child. Then remove it there: if it is a leaf node, remove it completely; otherwise replace it with its only child. Finally, retrace the path from the removed node toward the root and perform rotations wherever they are needed to maintain the AVL property.

===Set operations and bulk operations===

In addition to the single-element insert, delete and lookup operations, several set operations have been defined on AVL trees: [[Union (set theory)|union]], [[Intersection (set theory)|intersection]] and [[set difference]]. Fast ''bulk'' insertions and deletions can then be implemented on top of these set functions. The set operations rely on two helper operations, ''Split'' and ''Join''. With the new operations, the implementation of AVL trees can be more efficient and highly parallelizable.<ref name="join-based">{{citation | last1 = Blelloch | first1 = Guy E. | last2 = Ferizovic | first2 = Daniel | last3 = Sun | first3 = Yihan | contribution = Just Join for Parallel Ordered Sets | doi = 10.1145/2935764.2935768 | isbn = 978-1-4503-4210-0 | pages = 253–264 | publisher = ACM | title = [[Symposium on Parallel Algorithms and Architectures|Proc. 28th ACM Symp. Parallel Algorithms and Architectures (SPAA 2016)]] | year = 2016}}.</ref>

*''Join'': The function ''Join'' takes two AVL trees {{math|''t''<sub>1</sub>}} and {{math|''t''<sub>2</sub>}} and a key {{mvar|k}}, and returns a tree containing all elements of {{math|''t''<sub>1</sub>}} and {{math|''t''<sub>2</sub>}} as well as {{mvar|k}}. It requires {{mvar|k}} to be greater than all keys in {{math|''t''<sub>1</sub>}} and smaller than all keys in {{math|''t''<sub>2</sub>}}. If the heights of the two trees differ by at most one, ''Join'' simply creates a new node with left subtree {{math|''t''<sub>1</sub>}}, root {{mvar|k}} and right subtree {{math|''t''<sub>2</sub>}}. Otherwise, suppose that {{math|''t''<sub>1</sub>}} is higher than {{math|''t''<sub>2</sub>}} by more than one (the other case is symmetric). ''Join'' follows the right spine of {{math|''t''<sub>1</sub>}} until it reaches a node {{mvar|c}} that is balanced with {{math|''t''<sub>2</sub>}}. At this point a new node with left child {{mvar|c}}, root {{mvar|k}} and right child {{math|''t''<sub>2</sub>}} is created to replace {{mvar|c}}. The new node satisfies the AVL invariant, and its height is one greater than that of {{mvar|c}}. The increase in height can increase the height of its ancestors, possibly invalidating the AVL invariant of those nodes. This can be fixed either with a double rotation if the invariant is violated at the parent, or with a single left rotation if it is violated higher in the tree; in both cases the height is restored for any further ancestor nodes. ''Join'' therefore requires at most two rotations. The cost of ''Join'' is proportional to the difference in height between the two input trees.

*''Split'': To split an AVL tree into two smaller trees, one holding the keys smaller than key ''x'' and one holding the keys larger than ''x'', first follow the path from the root that an insertion of ''x'' would take. All values less than ''x'' are then found to the left of this path, and all values greater than ''x'' to its right. By applying ''Join'', the subtrees on the left side are merged bottom-up, using the keys on the path as intermediate nodes, to form the left tree; the right tree is formed symmetrically. The cost of ''Split'' is <math>O(\log n)</math>, the order of the height of the tree.

The union of two AVLs {{math|''t''<sub>1</sub>}} and {{math|''t''<sub>2</sub>}} representing sets {{mvar|A}} and {{mvar|B}} is an AVL {{mvar|t}} that represents {{math|''A'' ∪ ''B''}}. The following recursive function computes this union:

 '''function''' union(t<sub>1</sub>, t<sub>2</sub>):
     '''if''' t<sub>1</sub> = nil:
         '''return''' t<sub>2</sub>
     '''if''' t<sub>2</sub> = nil:
         '''return''' t<sub>1</sub>
     t<sub><</sub>, t<sub>></sub> ← split t<sub>2</sub> on t<sub>1</sub>.root
     '''return''' join(t<sub>1</sub>.root, union(left(t<sub>1</sub>), t<sub><</sub>), union(right(t<sub>1</sub>), t<sub>></sub>))

Here, ''Split'' is presumed to return two trees: one holding the keys less than its input key, one holding the greater keys. (The algorithm is [[persistent data structure|non-destructive]], but an in-place destructive version exists as well.)

The algorithm for intersection or difference is similar, but requires the ''Join2'' helper routine, which is the same as ''Join'' but without the middle key. Based on the new functions for union, intersection or difference, either one key or multiple keys can be inserted into or deleted from the AVL tree.

The complexity of each of union, intersection and difference is <math>O\left(m \log \left({n\over m}+1\right)\right)</math> for AVLs of sizes <math>m</math> and <math>n \; (\ge m)</math>. More importantly, since the recursive calls to union, intersection or difference are independent of each other, they can be executed [[parallel programming|in parallel]] with a [[Analysis_of_parallel_algorithms|parallel depth]] of <math>O(\log m\log n)</math>.<ref name="join-based"/>

==Comparison to other structures==

Revision as of 21:05, 12 December 2016

This article needs additional citations for verification. (July 2016) |

| AVL tree | |
|---|---|
| Type | tree |
| Invented | 1962 |
| Invented by | Georgy Adelson-Velsky and Evgenii Landis |

In computer science, an AVL tree is a self-balancing binary search tree. It was the first such data structure to be invented.[2] In an AVL tree, the heights of the two child subtrees of any node differ by at most one; if at any time they differ by more than one, rebalancing is done to restore this property. Lookup, insertion, and deletion all take O(log n) time in both the average and worst cases, where n is the number of nodes in the tree prior to the operation. Insertions and deletions may require the tree to be rebalanced by one or more tree rotations.

The AVL tree is named after its two Soviet inventors, Georgy Adelson-Velsky and Evgenii Landis, who published it in their 1962 paper "An algorithm for the organization of information".[3]

AVL trees are often compared with red–black trees because both support the same set of operations and take O(log n) time for the basic operations. For lookup-intensive applications, AVL trees are faster than red–black trees because they are more strictly balanced.[4] Similar to red–black trees, AVL trees are height-balanced. Both are in general not weight-balanced nor μ-balanced for any μ≤1⁄2;[5] that is, sibling nodes can have hugely differing numbers of descendants.

Definition

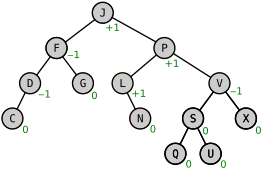

Balance factor

In a binary tree the balance factor of a node N is defined to be the height difference

- BalanceFactor(N) := –Height(LeftSubtree(N)) + Height(RightSubtree(N)) [6]

of its two child subtrees. A binary tree is defined to be an AVL tree if the invariant

- BalanceFactor(N) ∈ {–1,0,+1}

holds for every node N in the tree.

A node N with BalanceFactor(N) < 0 is called "left-heavy", one with BalanceFactor(N) > 0 is called "right-heavy", and one with BalanceFactor(N) = 0 is sometimes simply called "balanced".
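The definition can be illustrated with a minimal Python sketch. The `Node` class and the helper names are hypothetical and chosen only for this illustration; the sketch assumes that an empty subtree has height 0 and a leaf has height 1, and it recomputes heights on every call instead of storing balance information in the nodes. It checks only the balance invariant, not the search-tree ordering of the keys.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def height(node: Optional[Node]) -> int:
    """Height counted in nodes: empty subtree = 0, leaf = 1 (an assumption)."""
    if node is None:
        return 0
    return 1 + max(height(node.left), height(node.right))


def balance_factor(node: Node) -> int:
    """Height(RightSubtree(N)) - Height(LeftSubtree(N))."""
    return height(node.right) - height(node.left)


def is_avl(node: Optional[Node]) -> bool:
    """True if every node of the subtree has a balance factor in {-1, 0, +1}."""
    if node is None:
        return True
    return (abs(balance_factor(node)) <= 1
            and is_avl(node.left) and is_avl(node.right))


balanced = Node(2, Node(1), Node(3))            # balance factor 0 at the root
left_heavy = Node(2, Node(1))                   # balance factor -1 at the root
chain = Node(1, None, Node(2, None, Node(3)))   # balance factor +2 at the root
```

Here `balanced` and `left_heavy` satisfy the invariant, while `chain` violates it at its root.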

- Remark

In the sequel, because there is a one-to-one correspondence between nodes and the subtrees rooted by them, we sometimes leave it to the context whether the name of an object stands for the node or the subtree.

Properties

Balance factors can be kept up-to-date by knowing the previous balance factors and the change in height – it is not necessary to know the absolute height. For holding the AVL balance information, two bits per node are sufficient.[7]

The height h of an AVL tree with n nodes lies in the interval:[8]

- log₂(n + 1) ≤ h < c log₂(n + 2) + b

with the golden ratio φ := (1 + √5)⁄2 ≈ 1.618, c := 1⁄log₂ φ ≈ 1.44, and b := (c⁄2) log₂ 5 − 2 ≈ −0.328. This is because an AVL tree of height h contains at least Fₕ₊₂ − 1 nodes, where {Fₕ} is the Fibonacci sequence with the seed values F₁ = 1, F₂ = 1.
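The relation between the height bound and the Fibonacci numbers can be checked numerically. The following Python sketch is only an illustration: the function names are hypothetical, and it assumes the recurrence N(h) = 1 + N(h−1) + N(h−2) for the fewest nodes in an AVL tree of height h (a root plus two sparsest subtrees whose heights differ by one), with N(0) = 0 and N(1) = 1.

```python
import math

PHI = (1 + math.sqrt(5)) / 2          # golden ratio
C = 1 / math.log2(PHI)                # about 1.44
B = C / 2 * math.log2(5) - 2          # about -0.328


def fib(k: int) -> int:
    """Fibonacci numbers with the seed values F1 = F2 = 1."""
    a, b = 1, 1
    for _ in range(k - 1):
        a, b = b, a + b
    return a


def min_nodes(h: int) -> int:
    """Fewest nodes in an AVL tree of height h (assumed recurrence, see above)."""
    a, b = 0, 1                       # N(0), N(1)
    for _ in range(h):
        a, b = b, 1 + a + b
    return a


def height_upper_bound(n: int) -> float:
    """Right-hand side c * log2(n + 2) + b of the interval above."""
    return C * math.log2(n + 2) + B


# The sparsest trees are the worst case for the upper bound: for each height h,
# min_nodes(h) equals fib(h + 2) - 1, e.g. min_nodes(5) is 12.
```

For every height h in a tested range, `h < height_upper_bound(min_nodes(h))` holds, which is the upper end of the interval applied to the sparsest tree of that height.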

Operations

Read-only operations of an AVL tree involve carrying out the same actions as would be carried out on an unbalanced binary search tree, but modifications have to observe and restore the height balance of the subtrees.

Searching


Searching for a specific key in an AVL tree can be done the same way as that of a normal unbalanced binary search tree. In order for search to work effectively it has to employ a comparison function which establishes a total order (or at least a total preorder) on the set of keys. The number of comparisons required for successful search is limited by the height h and for unsuccessful search is very close to h, so both are in O(log n).
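As a minimal sketch, the search can be written as the usual iterative descent of a binary search tree. The `Node` class and the function name are hypothetical, and the sketch assumes that the keys are comparable with `<` and `==`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def search(root: Optional[Node], key: int) -> Optional[Node]:
    """Return the node holding key, or None if the key is absent."""
    node = root
    while node is not None:
        if key == node.key:
            return node
        # Descend left for smaller keys, right for larger ones.
        node = node.left if key < node.key else node.right
    return None


root = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
found = search(root, 5)      # follows the path 4 -> 6 -> 5
missing = search(root, 8)    # falls off the tree below 7
```

The loop visits at most one node per level, so the number of iterations is bounded by the height of the tree.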

Traversal


Once a node has been found in an AVL tree, the next or previous node can be accessed in amortized constant time. Some instances of exploring these "nearby" nodes require traversing up to h ∝ log(n) links (particularly when navigating from the rightmost leaf of the root’s left subtree to the root or from the root to the leftmost leaf of the root’s right subtree; in the AVL tree of figure 1, moving from node P to the next but one node Q takes 3 steps). However, exploring all n nodes of the tree in this manner would visit each link exactly twice: one downward visit to enter the subtree rooted by that node, another visit upward to leave that node’s subtree after having explored it. And since there are n−1 links in any tree, the amortized cost is found to be 2×(n−1)/n, or approximately 2.
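The amortized argument can be made concrete with a small Python sketch. It assumes that every node stores a pointer to its parent, which the text above does not prescribe; the `Node` class and the `successor` function are hypothetical names. The function returns the next node in key order together with the number of links it followed.

```python
class Node:
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right, self.parent = key, left, right, None
        for child in (left, right):
            if child is not None:
                child.parent = self


def successor(node):
    """Return (next node in key order or None, number of links followed)."""
    steps = 0
    if node.right is not None:
        # Leftmost node of the right subtree.
        node, steps = node.right, 1
        while node.left is not None:
            node, steps = node.left, steps + 1
        return node, steps
    # Climb until we leave a left subtree; that parent is the successor.
    while node.parent is not None and node is node.parent.right:
        node, steps = node.parent, steps + 1
    if node.parent is None:
        return None, steps
    return node.parent, steps + 1


root = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
node = root
while node.left is not None:          # start at the smallest key
    node = node.left
keys, total_steps = [], 0
while node is not None:
    keys.append(node.key)
    node, steps = successor(node)
    total_steps += steps
```

Individual calls follow between one and two links in this small tree, and the whole walk over the 7 nodes follows no more than 2×(7−1) = 12 links in total.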

Insert


When inserting an element into an AVL tree, the same process as for insertion into a binary search tree is followed first. The ancestors of the new node are then checked, going upwards from the inserted node toward the root; wherever the AVL property is violated, tree rotations are performed to restore it.
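One possible way to implement this is sketched below in Python. It is a recursive variant that stores the height in every node rather than a two-bit balance factor, and rebalances on the way back up from the recursion; all names are hypothetical, and duplicates are simply ignored. It is a sketch under these assumptions, not the only way to organise the retracing.

```python
class Node:
    def __init__(self, key):
        self.key, self.left, self.right, self.height = key, None, None, 1


def height(n):
    return n.height if n is not None else 0


def update(n):
    n.height = 1 + max(height(n.left), height(n.right))
    return n


def balance(n):
    return height(n.right) - height(n.left)


def rotate_left(x):
    y = x.right
    x.right, y.left = y.left, x       # y becomes the root of this subtree
    update(x)
    return update(y)


def rotate_right(y):
    x = y.left
    y.left, x.right = x.right, y      # x becomes the root of this subtree
    update(y)
    return update(x)


def rebalance(n):
    update(n)
    if balance(n) > 1:                # right-heavy by two
        if balance(n.right) < 0:      # right-left case: double rotation
            n.right = rotate_right(n.right)
        return rotate_left(n)
    if balance(n) < -1:               # left-heavy by two
        if balance(n.left) > 0:       # left-right case: double rotation
            n.left = rotate_left(n.left)
        return rotate_right(n)
    return n


def insert(n, key):
    """Insert key into the subtree rooted at n and return the new subtree root."""
    if n is None:
        return Node(key)
    if key < n.key:
        n.left = insert(n.left, key)
    elif key > n.key:
        n.right = insert(n.right, key)
    else:
        return n                      # key already present
    return rebalance(n)


def inorder(n):
    return [] if n is None else inorder(n.left) + [n.key] + inorder(n.right)


root = None
for k in range(1, 8):                 # ascending keys would degenerate a plain BST
    root = insert(root, k)
```

After inserting the keys 1 to 7 in ascending order, the rotations leave key 4 at the root of a tree of height 3, where an unbalanced binary search tree would have degenerated into a chain of height 7.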

Delete


When deleting an element that has two children from an AVL tree, first swap it with the minimum element of its right subtree or the maximum element of its left subtree; in its new position the element has at most one child. Then remove it there: if it is a leaf node, remove it completely; otherwise replace it with its only child. Finally, retrace the path from the removed node toward the root and perform rotations wherever they are needed to maintain the AVL property.
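A possible recursive formulation of the deletion is sketched below in Python. It assumes that heights are stored in the nodes and that the deleted element is replaced by the minimum of its right subtree (copying the key instead of swapping nodes); all names are hypothetical and the sketch is not meant as a definitive implementation.

```python
class Node:
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right = key, left, right
        update(self)


def height(n):
    return n.height if n is not None else 0


def update(n):
    n.height = 1 + max(height(n.left), height(n.right))
    return n


def balance(n):
    return height(n.right) - height(n.left)


def rotate_left(x):
    y = x.right
    x.right, y.left = y.left, x
    update(x)
    return update(y)


def rotate_right(y):
    x = y.left
    y.left, x.right = x.right, y
    update(y)
    return update(x)


def rebalance(n):
    update(n)
    if balance(n) > 1:
        if balance(n.right) < 0:
            n.right = rotate_right(n.right)
        return rotate_left(n)
    if balance(n) < -1:
        if balance(n.left) > 0:
            n.left = rotate_left(n.left)
        return rotate_right(n)
    return n


def delete(n, key):
    """Delete key from the subtree rooted at n and return the new subtree root."""
    if n is None:
        return None                   # key not found
    if key < n.key:
        n.left = delete(n.left, key)
    elif key > n.key:
        n.right = delete(n.right, key)
    elif n.left is None:
        return n.right                # leaf or single right child
    elif n.right is None:
        return n.left                 # single left child
    else:
        succ = n.right                # minimum of the right subtree
        while succ.left is not None:
            succ = succ.left
        n.key = succ.key
        n.right = delete(n.right, succ.key)
    return rebalance(n)               # retrace: fix heights and rotate if needed


def inorder(n):
    return [] if n is None else inorder(n.left) + [n.key] + inorder(n.right)


root = Node(2, Node(1), Node(4, Node(3), Node(5)))
root = delete(root, 1)                # node 2 becomes right-heavy by two
```

Deleting key 1 from this five-node tree leaves node 2 with an empty left subtree and a right subtree of height 2, so a left rotation makes key 4 the new root.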

Set operations and bulk operations

In addition to the single-element insert, delete and lookup operations, several set operations have been defined on AVL trees: union, intersection and set difference. Fast bulk insertions and deletions can then be implemented on top of these set functions. The set operations rely on two helper operations, Split and Join. With the new operations, the implementation of AVL trees can be more efficient and highly parallelizable.[9]

- Join: The function Join takes two AVL trees t1 and t2 and a key k, and returns a tree containing all elements of t1 and t2 as well as k. It requires k to be greater than all keys in t1 and smaller than all keys in t2. If the heights of the two trees differ by at most one, Join simply creates a new node with left subtree t1, root k and right subtree t2. Otherwise, suppose that t1 is higher than t2 by more than one (the other case is symmetric). Join follows the right spine of t1 until it reaches a node c that is balanced with t2. At this point a new node with left child c, root k and right child t2 is created to replace c. The new node satisfies the AVL invariant, and its height is one greater than that of c. The increase in height can increase the height of its ancestors, possibly invalidating the AVL invariant of those nodes. This can be fixed either with a double rotation if the invariant is violated at the parent, or with a single left rotation if it is violated higher in the tree; in both cases the height is restored for any further ancestor nodes. Join therefore requires at most two rotations. The cost of Join is proportional to the difference in height between the two input trees.

- Split: To split an AVL tree into two smaller trees, one holding the keys smaller than key x and one holding the keys larger than x, first follow the path from the root that an insertion of x would take. All values less than x are then found to the left of this path, and all values greater than x to its right. By applying Join, the subtrees on the left side are merged bottom-up, using the keys on the path as intermediate nodes, to form the left tree; the right tree is formed symmetrically. The cost of Split is O(log n), the order of the height of the tree.

The union of two AVLs t1 and t2 representing sets A and B, is an AVL t that represents A ∪ B. The following recursive function computes this union:

    function union(t1, t2):
        if t1 = nil:
            return t2
        if t2 = nil:
            return t1
        t<, t> ← split t2 on t1.root
        return join(t1.root, union(left(t1), t<), union(right(t1), t>))

Here, Split is presumed to return two trees: one holding the keys less than its input key, one holding the greater keys. (The algorithm is non-destructive, but an in-place destructive version exists as well.)
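Join, Split and the union function can be sketched together in Python as follows. This is a non-destructive sketch under several assumptions: nodes store their height, every operation builds new nodes instead of modifying existing ones, `join` takes its arguments in the order (left tree, key, right tree), and the names `join_right`, `join_left`, `build`, `to_list` and `is_avl` are hypothetical helpers introduced only for this illustration.

```python
class Node:
    """AVL node that is never modified after creation; an empty tree is None."""
    def __init__(self, left, key, right):
        self.left, self.key, self.right = left, key, right
        self.height = 1 + max(height(left), height(right))

def height(t):
    return t.height if t is not None else 0

def rotate_left(t):
    r = t.right
    return Node(Node(t.left, t.key, r.left), r.key, r.right)

def rotate_right(t):
    l = t.left
    return Node(l.left, l.key, Node(l.right, t.key, t.right))

def join_right(t1, k, t2):
    """Case height(t1) > height(t2) + 1: walk down the right spine of t1."""
    if height(t1.right) <= height(t2) + 1:
        new = Node(t1.right, k, t2)            # c = t1.right is balanced with t2
        if new.height <= height(t1.left) + 1:
            return Node(t1.left, t1.key, new)
        return rotate_left(Node(t1.left, t1.key, rotate_right(new)))  # double rotation
    new = join_right(t1.right, k, t2)
    t = Node(t1.left, t1.key, new)
    return t if new.height <= height(t1.left) + 1 else rotate_left(t)

def join_left(t1, k, t2):
    """Mirror image of join_right for height(t2) > height(t1) + 1."""
    if height(t2.left) <= height(t1) + 1:
        new = Node(t1, k, t2.left)
        if new.height <= height(t2.right) + 1:
            return Node(new, t2.key, t2.right)
        return rotate_right(Node(rotate_left(new), t2.key, t2.right))
    new = join_left(t1, k, t2.left)
    t = Node(new, t2.key, t2.right)
    return t if new.height <= height(t2.right) + 1 else rotate_right(t)

def join(t1, k, t2):
    """All keys of t1 < k < all keys of t2 is required."""
    if height(t1) > height(t2) + 1:
        return join_right(t1, k, t2)
    if height(t2) > height(t1) + 1:
        return join_left(t1, k, t2)
    return Node(t1, k, t2)

def split(t, k):
    """Return (tree of keys < k, tree of keys > k); k itself is dropped."""
    if t is None:
        return None, None
    if k == t.key:
        return t.left, t.right
    if k < t.key:
        less, greater = split(t.left, k)
        return less, join(greater, t.key, t.right)
    less, greater = split(t.right, k)
    return join(t.left, t.key, less), greater

def union(t1, t2):
    if t1 is None:
        return t2
    if t2 is None:
        return t1
    less, greater = split(t2, t1.key)
    return join(union(t1.left, less), t1.key, union(t1.right, greater))

def build(keys):
    """Insert keys one by one, each insertion expressed as split followed by join."""
    t = None
    for k in keys:
        less, greater = split(t, k)
        t = join(less, k, greater)
    return t

def to_list(t):
    return [] if t is None else to_list(t.left) + [t.key] + to_list(t.right)

def is_avl(t):
    return t is None or (abs(height(t.left) - height(t.right)) <= 1
                         and is_avl(t.left) and is_avl(t.right))

a = build(range(0, 40, 2))     # even numbers below 40
b = build(range(0, 40, 3))     # multiples of 3 below 40
u = union(a, b)                # a and b themselves are left unchanged
```

Because no node is ever modified, `a` and `b` remain valid after the call, which matches the non-destructive behaviour mentioned above at the price of allocating new nodes along every path that changes.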

The algorithm for intersection or difference is similar, but requires the Join2 helper routine, which is the same as Join but without the middle key. Based on the new functions for union, intersection or difference, either one key or multiple keys can be inserted into or deleted from the AVL tree.

The complexity of each of union, intersection and difference is O(m log(n/m + 1)) for AVLs of sizes m and n (≥ m). More importantly, since the recursive calls to union, intersection or difference are independent of each other, they can be executed in parallel with a parallel depth of O(log m log n).[9]
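To get a feeling for the bound m·log(n/m + 1), the short Python sketch below evaluates its growth term for a few sizes and compares it with m·log n, the growth term for inserting m keys one at a time into a tree of n keys. Constant factors are ignored, base-2 logarithms are an arbitrary choice, and the function names are hypothetical.

```python
import math


def union_bound(m: int, n: int) -> float:
    """Growth term m * log2(n/m + 1) of the set-operation bound (constants ignored)."""
    return m * math.log2(n / m + 1)


def repeated_insert_bound(m: int, n: int) -> float:
    """Growth term m * log2(n) for m separate insertions into a tree of n keys."""
    return m * math.log2(n)


single = union_bound(1, 1023)             # one key: log2(1024), like a single insert
equal = union_bound(1024, 1024)           # equal sizes: linear in m
few_into_many = union_bound(16, 1 << 20)  # a small batch into a large tree
```

For m = 1 the term reduces to log(n + 1), the cost of a single insertion, and for m = n it becomes linear in the input size; in between it stays below the m·log n of repeated single insertions.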

Comparison to other structures

Both AVL trees and red–black trees are self-balancing binary search trees and they are related mathematically. Indeed, every AVL tree can be colored red–black.[citation needed] The operations to balance the trees are different: an insertion requires O(1) rotations in the worst case in both structures, but a deletion may require O(log n) rotations in an AVL tree, against O(1) in a red–black tree. Both also require O(log n) other updates (to colors or heights) in the worst case (though only O(1) amortized). AVL trees require storing 2 bits (or one trit) of information in each node, while red–black trees require just one bit per node. The bigger difference between the two data structures is their height limit.

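For a tree of size n ≥ 1

- an AVL tree’s height is at most c log₂(n + 2) + b, where φ := (1 + √5)⁄2 ≈ 1.618 is the golden ratio, c := 1⁄log₂ φ ≈ 1.44, and b := (c⁄2) log₂ 5 − 2 ≈ −0.328.

- a red–black tree’s height is at most 2 log₂(n + 1).[10]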

AVL trees are more rigidly balanced than red–black trees, leading to faster retrieval but slower insertion and deletion.

See also

References

- ^ a b c d e f Eric Alexander. "AVL Trees".

- ^ Robert Sedgewick, Algorithms, Addison-Wesley, 1983, ISBN 0-201-06672-6, page 199, chapter 15: Balanced Trees.

- ^ Georgy Adelson-Velsky, G.; Evgenii Landis (1962). "An algorithm for the organization of information". Proceedings of the USSR Academy of Sciences (in Russian). 146: 263–266. English translation by Myron J. Ricci in Soviet Math. Doklady, 3:1259–1263, 1962.

- ^ Pfaff, Ben (June 2004). "Performance Analysis of BSTs in System Software" (PDF). Stanford University.

- ^ AVL trees are not weight-balanced? (meaning: AVL trees are not μ-balanced?) Thereby: a binary tree is called μ-balanced, with μ ≤ 1⁄2, if for every node N an inequality bounding the relative sizes of its subtrees in terms of μ holds.

- ^ Knuth, Donald E. (2000). Sorting and searching (2. ed., 6. printing, newly updated and rev. ed.). Boston [u.a.]: Addison-Wesley. p. 459. ISBN 0-201-89685-0.

- ^ More precisely: if the AVL balance information is kept in the child nodes – with meaning "when going upward there is an additional increment in height", this can be done with one bit. Nevertheless, the modifying operations can be programmed more efficiently if the balance information can be checked with one test.

- ^ Knuth, Donald E. (2000). Sorting and searching (2. ed., 6. printing, newly updated and rev. ed.). Boston [u.a.]: Addison-Wesley. p. 460. ISBN 0-201-89685-0.

- ^ a b Blelloch, Guy E.; Ferizovic, Daniel; Sun, Yihan (2016), "Just Join for Parallel Ordered Sets", Proc. 28th ACM Symp. Parallel Algorithms and Architectures (SPAA 2016), ACM, pp. 253–264, doi:10.1145/2935764.2935768, ISBN 978-1-4503-4210-0.

- ^ Red–black tree#Proof of asymptotic bounds

Further reading

- Donald Knuth. The Art of Computer Programming, Volume 3: Sorting and Searching, Third Edition. Addison-Wesley, 1997. ISBN 0-201-89685-0. Pages 458–475 of section 6.2.3: Balanced Trees.

External links

This article incorporates public domain material from Paul E. Black. "AVL Tree". Dictionary of Algorithms and Data Structures. NIST.

- AVL tree demonstration (HTML5/Canvas)

- AVL tree demonstration (requires Flash)

- AVL tree demonstration (requires Java)