Tf–idf: Difference between revisions

m did not find any information on a 'luhn assumption', would either love a citation on that or some more info as the original paper does not state anything like it. |

m clean up, typo(s) fixed: differents → different using AWB |

||

| Line 1: | Line 1: | ||

{{Lowercase|title=tf–idf}} |

{{Lowercase|title=tf–idf}} |

||

In [[information retrieval]], '''tf–idf''' or '''TFIDF''', short for '''term frequency–inverse document frequency''', is a numerical statistic that is intended to reflect how important a word is to a [[document]] in a collection or [[Text corpus|corpus]].<ref>{{Cite book |last= Rajaraman |first1=A. |last2= Ullman |first2= J.D. |doi= 10.1017/CBO9781139058452.002 |chapter= Data Mining |title= Mining of Massive Datasets |pages= 1–17 |year= 2011 |isbn= 978-1-139-05845-2 |url= http://i.stanford.edu/~ullman/mmds/ch1.pdf}}</ref> It is often used as a [[weighting factor]] in searches of information retrieval, [[text mining]], and [[user modeling]]. |

In [[information retrieval]], '''tf–idf''' or '''TFIDF''', short for '''term frequency–inverse document frequency''', is a numerical statistic that is intended to reflect how important a word is to a [[document]] in a collection or [[Text corpus|corpus]].<ref>{{Cite book |last= Rajaraman |first1=A. |last2= Ullman |first2= J.D. |doi= 10.1017/CBO9781139058452.002 |chapter= Data Mining |title= Mining of Massive Datasets |pages= 1–17 |year= 2011 |isbn= 978-1-139-05845-2 |url= http://i.stanford.edu/~ullman/mmds/ch1.pdf}}</ref> It is often used as a [[weighting factor]] in searches of information retrieval, [[text mining]], and [[user modeling]]. |

||

The tf-idf value increases [[Proportionality (mathematics)|proportionally]] to the number of times a word appears in the document, but is often offset by the frequency of the word in the corpus, which helps to adjust for the fact that some words appear more frequently in general. Nowadays, tf-idf is one of the most popular term-weighting schemes; 83% of text-based recommender systems in the domain of digital libraries use tf-idf.<ref>{{Cite journal |last= Breitinger |first=Corinna |last2=Gipp |first2= Bela |last3=Langer |first3=Stefan |date= 2015-07-26 |title= Research-paper recommender systems: a literature survey |url= https://link.springer.com/article/10.1007/s00799-015-0156-0 |journal= International Journal on Digital Libraries |language=en |volume=17 |issue=4 |pages= 305–338 |doi= 10.1007/s00799-015-0156-0 |issn= 1432-5012 |via=}}</ref> |

The tf-idf value increases [[Proportionality (mathematics)|proportionally]] to the number of times a word appears in the document, but is often offset by the frequency of the word in the corpus, which helps to adjust for the fact that some words appear more frequently in general. Nowadays, tf-idf is one of the most popular term-weighting schemes; 83% of text-based recommender systems in the domain of digital libraries use tf-idf.<ref>{{Cite journal |last= Breitinger |first=Corinna |last2=Gipp |first2= Bela |last3=Langer |first3=Stefan |date= 2015-07-26 |title= Research-paper recommender systems: a literature survey |url= https://link.springer.com/article/10.1007/s00799-015-0156-0 |journal= International Journal on Digital Libraries |language=en |volume=17 |issue=4 |pages= 305–338 |doi= 10.1007/s00799-015-0156-0 |issn= 1432-5012 |via=}}</ref> |

||

Variations of the tf–idf weighting scheme are often used by [[search engine]]s as a central tool in scoring and ranking a document's [[Relevance (information retrieval)|relevance]] given a user [[Information retrieval|query]]. tf–idf can be successfully used for [[stop-words]] filtering in various subject fields, including [[automatic summarization |

Variations of the tf–idf weighting scheme are often used by [[search engine]]s as a central tool in scoring and ranking a document's [[Relevance (information retrieval)|relevance]] given a user [[Information retrieval|query]]. tf–idf can be successfully used for [[stop-words]] filtering in various subject fields, including [[automatic summarization|text summarization]] and classification. |

||

One of the simplest [[ranking function]]s is computed by summing the tf–idf for each query term; many more sophisticated ranking functions are variants of this simple model. |

One of the simplest [[ranking function]]s is computed by summing the tf–idf for each query term; many more sophisticated ranking functions are variants of this simple model. |

||

| Line 74: | Line 74: | ||

* <math> |\{d \in D: t \in d\}| </math> : number of documents where the term <math> t </math> appears (i.e., <math> \mathrm{tf}(t,d) \neq 0</math>). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the denominator to <math>1 + |\{d \in D: t \in d\}|</math>. |

* <math> |\{d \in D: t \in d\}| </math> : number of documents where the term <math> t </math> appears (i.e., <math> \mathrm{tf}(t,d) \neq 0</math>). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the denominator to <math>1 + |\{d \in D: t \in d\}|</math>. |

||

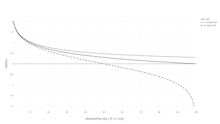

[[File:Plot IDF functions.png|thumb|Plot of |

[[File:Plot IDF functions.png|thumb|Plot of different inverse document frequency functions: standard, smooth, probabilistic.]] |

||

=== Term frequency–Inverse document frequency === |

=== Term frequency–Inverse document frequency === |

||

Revision as of 08:31, 1 December 2017

In information retrieval, tf–idf or TFIDF, short for term frequency–inverse document frequency, is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus.[1] It is often used as a weighting factor in searches of information retrieval, text mining, and user modeling.

The tf–idf value increases proportionally to the number of times a word appears in the document, but is often offset by the frequency of the word in the corpus, which helps to adjust for the fact that some words appear more frequently in general. tf–idf is one of the most popular term-weighting schemes; according to a 2015 literature survey, 83% of text-based recommender systems in the domain of digital libraries use tf–idf.[2]

Variations of the tf–idf weighting scheme are often used by search engines as a central tool in scoring and ranking a document's relevance given a user query. tf–idf can be successfully used for stop-words filtering in various subject fields, including text summarization and classification.

One of the simplest ranking functions is computed by summing the tf–idf for each query term; many more sophisticated ranking functions are variants of this simple model.

Motivations

Term frequency

Suppose we have a set of English text documents and wish to rank them by relevance to the query "the brown cow". A simple way to start is to eliminate documents that do not contain all three words "the", "brown", and "cow", but this still leaves many documents. To further distinguish them, we might count the number of times each term occurs in each document; the number of times a term occurs in a document is called its term frequency. However, where the length of documents varies greatly, adjustments are often made (see definition below). The first form of term weighting is due to Hans Peter Luhn (1957), and may be summarized as:

- The weight of a term that occurs in a document is simply proportional to the term frequency.[3]

Inverse document frequency

Because the term "the" is so common, term frequency will tend to incorrectly emphasize documents which happen to use the word "the" more frequently, without giving enough weight to the more meaningful terms "brown" and "cow". The term "the" is not a good keyword to distinguish relevant and non-relevant documents and terms, unlike the less-common words "brown" and "cow". Hence an inverse document frequency factor is incorporated which diminishes the weight of terms that occur very frequently in the document set and increases the weight of terms that occur rarely.

Karen Spärck Jones (1972) conceived a statistical interpretation of term specificity called Inverse Document Frequency (IDF), which became a cornerstone of term weighting:

- The specificity of a term can be quantified as an inverse function of the number of documents in which it occurs.[4]

Definition

The tf–idf is the product of two statistics, term frequency and inverse document frequency. Various ways for determining the exact values of both statistics exist.

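| weighting scheme | TF weight |
|---|---|
| binary | $0, 1$ |
| raw count | $f_{t,d}$ |
| term frequency | $f_{t,d} \Big/ \sum_{t' \in d} f_{t',d}$ |
| log normalization | $\log(1 + f_{t,d})$ |
| double normalization 0.5 | $0.5 + 0.5 \cdot \dfrac{f_{t,d}}{\max_{t' \in d} f_{t',d}}$ |
| double normalization K | $K + (1 - K) \cdot \dfrac{f_{t,d}}{\max_{t' \in d} f_{t',d}}$ |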

Term frequency

In the case of the term frequency $\mathrm{tf}(t,d)$, the simplest choice is to use the raw count of a term in a document, i.e. the number of times that term $t$ occurs in document $d$. If we denote the raw count by $f_{t,d}$, then the simplest tf scheme is $\mathrm{tf}(t,d) = f_{t,d}$. Other possibilities include:[5] (p. 128)

- Boolean "frequencies": $\mathrm{tf}(t,d) = 1$ if $t$ occurs in $d$ and $0$ otherwise;

- term frequency adjusted for document length: $\mathrm{tf}(t,d) = f_{t,d} \div (\text{number of words in } d)$;

- logarithmically scaled frequency: $\mathrm{tf}(t,d) = \log(1 + f_{t,d})$, which is zero when $f_{t,d}$ is zero;[6]

- augmented frequency, to prevent a bias towards longer documents, e.g. raw frequency divided by the raw frequency of the most frequently occurring term in the document:

$$\mathrm{tf}(t,d) = 0.5 + 0.5 \cdot \frac{f_{t,d}}{\max\{f_{t',d} : t' \in d\}}$$
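The variants listed above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `term_frequencies`, the whitespace tokenization, and the parameter `k` (the constant of the double-normalization form, 0.5 by default) are assumptions made for the example, not part of any particular library.

```python
import math
from collections import Counter


def term_frequencies(tokens, k=0.5):
    """Return several tf variants for every term of one tokenized document."""
    counts = Counter(tokens)              # raw counts f_{t,d}
    if not counts:                        # an empty document has no terms
        return {}
    total = sum(counts.values())          # number of words in d
    max_count = max(counts.values())      # count of the most frequent term in d
    return {
        term: {
            "binary": 1,                                   # the term occurs in d
            "raw": f,                                      # f_{t,d}
            "length_normalized": f / total,                # f_{t,d} / |d|
            "log": math.log(1 + f),                        # log(1 + f_{t,d})
            "augmented": k + (1 - k) * f / max_count,      # double normalization K
        }
        for term, f in counts.items()
    }


doc = "the brown cow saw the other brown cow".split()
weights = term_frequencies(doc)
print(weights["saw"])
```

Terms that do not occur in the document are simply absent from the result, which corresponds to a weight of zero in every variant.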

Inverse document frequency

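| weighting scheme | IDF weight ($n_t = \lvert\{d \in D : t \in d\}\rvert$) |
|---|---|
| unary | $1$ |
| inverse document frequency | $\log \dfrac{N}{n_t} = -\log \dfrac{n_t}{N}$ |
| inverse document frequency smooth | $\log\left(1 + \dfrac{N}{n_t}\right)$ |
| inverse document frequency max | $\log\left(\dfrac{\max_{t' \in d} n_{t'}}{1 + n_t}\right)$ |
| probabilistic inverse document frequency | $\log \dfrac{N - n_t}{n_t}$ |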

The inverse document frequency is a measure of how much information the word provides, that is, whether the term is common or rare across all documents. It is the logarithmically scaled inverse fraction of the documents that contain the word, obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient.

$$\mathrm{idf}(t, D) = \log \frac{N}{|\{d \in D : t \in d\}|}$$

with

- $N = |D|$: total number of documents in the corpus

- $|\{d \in D : t \in d\}|$: number of documents where the term $t$ appears (i.e., $\mathrm{tf}(t,d) \neq 0$). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the denominator to $1 + |\{d \in D : t \in d\}|$.
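A minimal Python sketch of this definition follows. The function name `inverse_document_frequency`, the flag `adjust_denominator`, and the representation of each document as a set of tokens are assumptions chosen for illustration.

```python
import math


def inverse_document_frequency(term, documents, adjust_denominator=False):
    """idf(t, D) = log(N / n_t), where n_t is the number of documents containing t.

    `documents` is a list of token sets. With adjust_denominator=True the
    denominator becomes 1 + n_t, which avoids division by zero for terms
    that do not occur anywhere in the corpus.
    """
    n_docs = len(documents)
    doc_freq = sum(1 for doc in documents if term in doc)
    if adjust_denominator:
        return math.log(n_docs / (1 + doc_freq))
    if doc_freq == 0:
        raise ValueError(f"term {term!r} does not occur in the corpus")
    return math.log(n_docs / doc_freq)


corpus = [{"the", "brown", "cow"}, {"the", "cat"}, {"the", "dog"}]
print(inverse_document_frequency("the", corpus))   # term in every document
print(inverse_document_frequency("cow", corpus))   # term in one document
print(inverse_document_frequency("zebra", corpus, adjust_denominator=True))
```

One consequence of the adjusted denominator worth keeping in mind: a term that occurs in every document then receives a slightly negative idf, since N / (1 + N) is less than 1.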

Term frequency–Inverse document frequency

Then tf–idf is calculated as

$$\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \cdot \mathrm{idf}(t, D)$$

A high weight in tf–idf is reached by a high term frequency (in the given document) and a low document frequency of the term in the whole collection of documents; the weights hence tend to filter out common terms. Since the ratio inside the idf's log function is always greater than or equal to 1, the value of idf (and tf-idf) is greater than or equal to 0. As a term appears in more documents, the ratio inside the logarithm approaches 1, bringing the idf and tf-idf closer to 0.
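As a rough illustration, the product can be combined with the simple ranking function mentioned in the introduction, which sums the tf–idf of each query term. The sketch below assumes raw counts for tf and the unadjusted logarithmic idf; the helper names `tf_idf` and `score` and the toy corpus are hypothetical.

```python
import math
from collections import Counter


def tf_idf(term, doc_tokens, corpus):
    """Raw-count tf multiplied by log(N / n_t); 0.0 for terms absent from the corpus."""
    tf = Counter(doc_tokens)[term]
    doc_freq = sum(1 for doc in corpus if term in doc)
    if doc_freq == 0:
        return 0.0
    return tf * math.log(len(corpus) / doc_freq)


def score(query_tokens, doc_tokens, corpus):
    """Simplest ranking function: the sum of tf-idf over the query terms."""
    return sum(tf_idf(term, doc_tokens, corpus) for term in query_tokens)


corpus = [
    "the brown cow".split(),
    "the brown dog and the brown fox".split(),
    "the quick cat".split(),
]
query = "the brown cow".split()

# Document indices ordered from most to least relevant to the query.
ranking = sorted(
    range(len(corpus)),
    key=lambda i: score(query, corpus[i], corpus),
    reverse=True,
)
print(ranking)
```

In this toy corpus "the" occurs in every document, so its idf is zero and it contributes nothing to any score; the ordering is decided by "brown" and "cow" alone.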

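| weighting scheme | document term weight | query term weight |
|---|---|---|
| 1 | $f_{t,d} \cdot \log \dfrac{N}{n_t}$ | $\left(0.5 + 0.5 \cdot \dfrac{f_{t,q}}{\max_{t} f_{t,q}}\right) \cdot \log \dfrac{N}{n_t}$ |
| 2 | $1 + \log f_{t,d}$ | $\log\left(1 + \dfrac{N}{n_t}\right)$ |
| 3 | $(1 + \log f_{t,d}) \cdot \log \dfrac{N}{n_t}$ | $(1 + \log f_{t,q}) \cdot \log \dfrac{N}{n_t}$ |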

Justification of idf

Idf was introduced, as "term specificity", by Karen Spärck Jones in a 1972 paper. Although it has worked well as a heuristic, its theoretical foundations have been troublesome for at least three decades afterward, with many researchers trying to find information theoretic justifications for it.[7]

Spärck Jones's own explanation did not propose much theory, aside from a connection to Zipf's law.[7] Attempts have been made to put idf on a probabilistic footing,[8] by estimating the probability that a given document $d$ contains a term $t$ as the relative document frequency,

$$P(t \mid D) = \frac{|\{d \in D : t \in d\}|}{N},$$

so that we can define idf as

$$\mathrm{idf}(t, D) = -\log P(t \mid D) = \log \frac{1}{P(t \mid D)} = \log \frac{N}{|\{d \in D : t \in d\}|}$$

Namely, the inverse document frequency is the logarithm of "inverse" relative document frequency.

This probabilistic interpretation in turn takes the same form as that of self-information. However, applying such information-theoretic notions to problems in information retrieval leads to problems when trying to define the appropriate event spaces for the required probability distributions: not only documents need to be taken into account, but also queries and terms.[7]

Example of tf–idf

Suppose that we have term count tables of a corpus consisting of only two documents, as listed below.

Document 1

| Term | Term Count |
|---|---|
| this | 1 |
| is | 1 |
| a | 2 |
| sample | 1 |

Document 2

| Term | Term Count |
|---|---|
| this | 1 |
| is | 1 |
| another | 2 |
| example | 3 |

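The calculation of tf–idf for the term "this" is performed as follows:

In its raw frequency form, tf is just the frequency of "this" in each document. In each document, the word "this" appears once; but as document 2 has more words, its relative frequency is smaller.

$$\mathrm{tf}(\text{"this"}, d_1) = \frac{1}{5} = 0.2$$

$$\mathrm{tf}(\text{"this"}, d_2) = \frac{1}{7} \approx 0.14$$

An idf is constant per corpus, and accounts for the ratio of documents that include the word "this". In this case, we have a corpus of two documents and both of them include the word "this".

$$\mathrm{idf}(\text{"this"}, D) = \log\left(\frac{2}{2}\right) = 0$$

So tf–idf is zero for the word "this", which implies that the word is not very informative as it appears in all documents.

$$\mathrm{tfidf}(\text{"this"}, d_1, D) = 0.2 \times 0 = 0$$

$$\mathrm{tfidf}(\text{"this"}, d_2, D) = 0.14 \times 0 = 0$$

A slightly more interesting example arises from the word "example", which occurs three times but only in the second document:

$$\mathrm{tf}(\text{"example"}, d_1) = \frac{0}{5} = 0$$

$$\mathrm{tf}(\text{"example"}, d_2) = \frac{3}{7} \approx 0.429$$

$$\mathrm{idf}(\text{"example"}, D) = \log\left(\frac{2}{1}\right) \approx 0.301$$

Finally,

$$\mathrm{tfidf}(\text{"example"}, d_1, D) = \mathrm{tf}(\text{"example"}, d_1) \times \mathrm{idf}(\text{"example"}, D) = 0 \times 0.301 = 0$$

$$\mathrm{tfidf}(\text{"example"}, d_2, D) = \mathrm{tf}(\text{"example"}, d_2) \times \mathrm{idf}(\text{"example"}, D) = 0.429 \times 0.301 \approx 0.129$$

(using the base 10 logarithm).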
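The arithmetic of this example can be checked with a short Python sketch. It assumes the length-normalized tf and the base 10 idf used in the example; the dictionaries `doc1` and `doc2` restate the two term count tables, and the function names are chosen only for illustration.

```python
import math

# Term counts of the two example documents.
doc1 = {"this": 1, "is": 1, "a": 2, "sample": 1}          # 5 words in total
doc2 = {"this": 1, "is": 1, "another": 2, "example": 3}   # 7 words in total
corpus = [doc1, doc2]


def tf(term, doc):
    """Count of the term divided by the number of words in the document."""
    return doc.get(term, 0) / sum(doc.values())


def idf(term, corpus):
    """Base 10 log of N / n_t; assumes the term occurs in at least one document."""
    doc_freq = sum(1 for doc in corpus if term in doc)
    return math.log10(len(corpus) / doc_freq)


def tf_idf(term, doc, corpus):
    return tf(term, doc) * idf(term, corpus)


for term in ("this", "example"):
    for name, doc in (("d1", doc1), ("d2", doc2)):
        print(f"tfidf({term!r}, {name}) = {tf_idf(term, doc, corpus):.3f}")
```

Only the pair ("example", d2) yields a non-zero value, about 0.129, which agrees with the hand calculation.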

tf-idf Beyond Terms

The idea behind TF–IDF has also been applied to entities other than terms. In 1998, the concept of IDF was applied to citations.[9] The authors argued that "if a very uncommon citation is shared by two documents, this should be weighted more highly than a citation made by a large number of documents". In addition, tf–idf has been applied to "visual words" for the purpose of object matching in videos,[10] and to entire sentences.[11] However, TF–IDF has not proved more effective than a plain TF scheme (without IDF) in every case. When TF–IDF was applied to citations, researchers could find no improvement over a simple citation-count weight that had no IDF component.[12]

tf-idf Derivatives

A number of term-weighting schemes are derived from TF–IDF. One of them is TF–PDF (Term Frequency * Proportional Document Frequency).[13] TF-PDF was introduced in 2001 in the context of identifying emerging topics in the media. The PDF component measures the difference in how often a term occurs in different domains. Another derivative is TF-IDuF.[14] In TF-IDuF, IDF is not calculated from the document corpus that is to be searched or recommended. Instead, IDF is calculated from users' personal document collections. The authors report that TF-IDuF was as effective as tf-idf but could also be applied in situations where, for example, a user modeling system has no access to a global document corpus.


References

1. Rajaraman, A.; Ullman, J.D. (2011). "Data Mining". Mining of Massive Datasets (PDF). pp. 1–17. doi:10.1017/CBO9781139058452.002. ISBN 978-1-139-05845-2.

2. Breitinger, Corinna; Gipp, Bela; Langer, Stefan (2015-07-26). "Research-paper recommender systems: a literature survey". International Journal on Digital Libraries. 17 (4): 305–338. doi:10.1007/s00799-015-0156-0. ISSN 1432-5012.

3. Luhn, Hans Peter (1957). "A Statistical Approach to Mechanized Encoding and Searching of Literary Information" (PDF). IBM Journal of research and development. 1 (4). IBM: 315. doi:10.1147/rd.14.0309. Retrieved 2 March 2015. Quote: "There is also the probability that the more frequently a notion and combination of notions occur, the more importance the author attaches to them as reflecting the essence of his overall idea."

4. Spärck Jones, K. (1972). "A Statistical Interpretation of Term Specificity and Its Application in Retrieval". Journal of Documentation. 28: 11–21. doi:10.1108/eb026526.

5. Manning, C.D.; Raghavan, P.; Schutze, H. (2008). "Scoring, term weighting, and the vector space model". Introduction to Information Retrieval (PDF). p. 100. doi:10.1017/CBO9780511809071.007. ISBN 978-0-511-80907-1.

6. "TFIDF statistics | SAX-VSM".

7. Robertson, S. (2004). "Understanding inverse document frequency: On theoretical arguments for IDF". Journal of Documentation. 60 (5): 503–520. doi:10.1108/00220410410560582.

8. See also Probability estimates in practice in Introduction to Information Retrieval.

9. Bollacker, Kurt D.; Lawrence, Steve; Giles, C. Lee (1998-01-01). "CiteSeer: An Autonomous Web Agent for Automatic Retrieval and Identification of Interesting Publications". Proceedings of the Second International Conference on Autonomous Agents. AGENTS '98. New York, NY, USA: ACM: 116–123. doi:10.1145/280765.280786. ISBN 0-89791-983-1.

10. Sivic, Josef; Zisserman, Andrew (2003-01-01). "Video Google: A Text Retrieval Approach to Object Matching in Videos". Proceedings of the Ninth IEEE International Conference on Computer Vision – Volume 2. ICCV '03. Washington, DC, USA: IEEE Computer Society: 1470–. ISBN 0-7695-1950-4.

11. Seki, Yohei. "Sentence Extraction by tf/idf and Position Weighting from Newspaper Articles" (PDF). National Institute of Informatics.

12. Beel, Joeran; Breitinger, Corinna (2017). "Evaluating the CC-IDF citation-weighting scheme – How effectively can 'Inverse Document Frequency' (IDF) be applied to references?" (PDF). Proceedings of the 12th iConference.

13. Bun, Khoo Khyou; Ishizuka, M. (2001). "Emerging Topic Tracking System". Proceedings Third International Workshop on Advanced Issues of E-Commerce and Web-Based Information Systems. WECWIS 2001: 2. doi:10.1109/wecwis.2001.933900. ISBN 0-7695-1224-0.

14. Langer, Stefan; Gipp, Bela (2017). "TF-IDuF: A Novel Term-Weighting Scheme for User Modeling based on Users' Personal Document Collections" (PDF). iConference.

- Salton, G; McGill, M. J. (1986). Introduction to modern information retrieval. McGraw-Hill. ISBN 978-0-07-054484-0.

- Salton, G.; Fox, E. A.; Wu, H. (1983). "Extended Boolean information retrieval". Communications of the ACM. 26 (11): 1022–1036. doi:10.1145/182.358466.

- Salton, G.; Buckley, C. (1988). "Term-weighting approaches in automatic text retrieval". Information Processing & Management. 24 (5): 513–523. doi:10.1016/0306-4573(88)90021-0.

- Wu, H. C.; Luk, R.W.P.; Wong, K.F.; Kwok, K.L. (2008). "Interpreting TF-IDF term weights as making relevance decisions". ACM Transactions on Information Systems. 26 (3): 1. doi:10.1145/1361684.1361686.

External links and suggested reading

- TFxIDF Repository: A definitive guide to the variants and their evolution.

- Gensim is a Python library for vector space modeling and includes tf–idf weighting.

- Robust Hyperlinking: An application of tf–idf for stable document addressability.

- A demo of using tf–idf with PHP and Euclidean distance for Classification

- Anatomy of a search engine

- tf–idf and related definitions as used in Lucene

- TfidfTransformer in scikit-learn

- Text to Matrix Generator (TMG) MATLAB toolbox that can be used for various tasks in text mining (TM) specifically i) indexing, ii) retrieval, iii) dimensionality reduction, iv) clustering, v) classification. The indexing step offers the user the ability to apply local and global weighting methods, including tf–idf.

- Pyevolve: A tutorial series explaining the tf-idf calculation.

- TF/IDF with Google n-Grams and POS Tags