User:WillWare/ML Book Intro

Revision as of 19:11, 2 December 2020

Motivation

I hope to make this a book that I will find interesting, and from which I can learn. There may then be a good chance that others would also find it interesting.

There are a few threads here that hold my interest. Each is cohesive by itself, but jamming them together all in one book seems to me like a bad idea. The tentative plan at the moment is to produce multiple volumes, so that my profound ignorance can be made abundantly available for the amusement of all mankind.

The lay of the land

Take this with a grain of salt, in light of my aforementioned ignorance.

There is a cluster of related topics including artificial intelligence, machine learning, and neural nets. My first attempt to create a book on these topics was in early 2010, and looking back at it a decade later, it didn't suck as badly as I would have expected. So I am emboldened to take another swing at it. My thoughts on all this stuff have changed surprisingly little in the intervening years.

Some related fields involve more "analog" things: classification, clustering, various sorts of signal processing, Kalman filters, and the contents of the wonderful MIT course 6.432 (Detection, Estimation, and Stochastic Processes). That course was last taught in 2005, and has since been replaced by two others, 6.437 (Inference and Information) and 6.972 (Algorithms for Estimation and Inference). I guess I need to take those next.

One idea that grabbed my interest about that time was the notion of automating science. Not just automating lab work, but automating the scientific method itself. This is discussed at length below.

As I look through this collection of subjects, I find certain cohesive threads.

The Symbolic AI thread

This book aims to address this thread. Here's what I think a reasonable syllabus might look like. I think of this as "discrete" or "digital" machine learning/reasoning, because it deals with essentially logical statements and discrete entities. It tracks context and does not fall victim to the fits of gibberish produced by something like GPT-3.

The data mining thread

There are two powerful ideas here: classification of data objects into categories, and regression, which tries to create a mathematical model of an empirically found relationship among pieces of data. This is incidentally where a lot of money is being made these days.

This topic is a little more "analog" in the sense that we are dealing explicitly with probabilities or degrees of certainty. The primary insight here is Bayes' theorem.

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$

This helps us use observations to improve the accuracy of our estimates of hidden variables we cannot directly observe. If a hidden variable happens to be of considerable commercial value, so much the better.
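Here is a minimal worked example in Python. It applies Bayes' theorem in the form P(H | E) = P(E | H) P(H) / P(E), where H is a hidden binary variable and E is an observation. The numbers are illustrative only.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(H | E) for a binary hidden variable H given evidence E.

    prior               -- P(H), belief before the observation
    likelihood          -- P(E | H), chance of the observation if H is true
    false_positive_rate -- P(E | not H), chance of the observation if H is false
    """
    # Total probability of the evidence: P(E) = P(E|H)P(H) + P(E|~H)P(~H)
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    # Bayes' theorem: P(H|E) = P(E|H)P(H) / P(E)
    return likelihood * prior / evidence


# Illustrative numbers only: a rare condition (1%) and a test that
# detects it 90% of the time with a 5% false-positive rate.
p = posterior(prior=0.01, likelihood=0.9, false_positive_rate=0.05)
print(f"P(condition | positive test) = {p:.3f}")
```

Even with a fairly accurate test, the posterior comes out around 15% because the prior is so low. Bayes' theorem makes this kind of correction explicit.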

Actually, a huge piece of this volume could simply be a giant R[1] tutorial. Likewise Weka[2].

- Practice

  - Recommender system

  - Collaborative filtering

  - Data mining

  - The R language

- Theory

  - Information theory

  - Statistical classification

  - Regression analysis

  - Logistic regression

  - Bayes' theorem

  - Bayesian inference

  - Cluster analysis

  - Hidden Markov model

- Algorithms

  - Naive Bayes classifier

  - Bayesian linear regression

  - Association rule learning

  - Decision tree learning

  - Support vector machine

  - Principal component analysis

The signal processing thread

One could loosely refer to this as a sort of machine learning, in that the machine is sometimes inferring the value of an unobservable variable, given observations of some other more accessible variable. While this topic is close to my heart, I need to think harder about how to put it all together.

- Statistical inference

- Bayes' theorem

- Bayesian inference

- Fuzzy logic

- Artificial neural network

- Expectation-maximization algorithm

- Hidden Markov model

- Kalman filter
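The Kalman filter is the classic instance of inferring an unobservable variable from observations of a more accessible one. Below is a minimal scalar sketch in Python. It assumes the hidden quantity is a constant and that each reading adds Gaussian noise of known variance. The true value, noise level, and random seed are made up for the demonstration.

```python
import random


def kalman_constant(measurements, meas_var, init_mean=0.0, init_var=1e6, process_var=0.0):
    """Scalar Kalman filter estimating a (nearly) constant hidden value.

    Each step: predict (variance grows by process_var), then update with
    the new measurement, weighting it by the Kalman gain.
    """
    mean, var = init_mean, init_var
    for z in measurements:
        # Predict: the hidden value is modeled as constant plus optional drift noise.
        var += process_var
        # Update: the gain balances prior uncertainty against measurement noise.
        gain = var / (var + meas_var)
        mean += gain * (z - mean)
        var *= (1.0 - gain)
    return mean, var


rng = random.Random(42)              # fixed seed so the run is repeatable
true_value = 5.0                     # the hidden variable we cannot observe directly
readings = [true_value + rng.gauss(0.0, 1.0) for _ in range(200)]
estimate, variance = kalman_constant(readings, meas_var=1.0)
print(f"estimate = {estimate:.2f}, variance = {variance:.4f}")
```

With `process_var=0` and a very wide initial variance, the estimate behaves like a running average. A nonzero `process_var` lets the filter track a hidden value that drifts over time.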

A good page on hidden Markov models.

I should try some data mining on the data from the Framingham Heart Study if that data is available.

The natural language processing thread

This is very vague in my mind right now, and I'm not entirely sure I want to pursue it. I can see enormous social value in machine translation, particularly as the Internet brings all the world closer together.

In the spring of 1989 there were protests in Tiananmen Square in Beijing. Chinese intellectuals and students rallied in favor of more democracy and less central party rule. The whole thing lasted about seven weeks, ending on June 4 when the Chinese army cleared the area; estimates of the number killed range from hundreds to thousands.

It seems to me that at least some of the world's problems could be eased if people could (and did) communicate more easily and openly. I would like to see an on-line mechanism for global text chat with instant machine translation from any human language to any other.

The automation of science

The Adam robot, reported in April 2009 by Ross King and colleagues at Aberystwyth University, used lab automation to perform experiments and data mining to find patterns in the resulting data. It developed novel genomics hypotheses about S. cerevisiae yeast and tested them. Adam's conclusions were manually confirmed by human experimenters, and found to be correct. This was hailed as the first time a machine had discovered new scientific knowledge independently of its human creators.

Modern science deals with ever-increasing amounts of data, while the cost of computers continues to fall. The need to automate certain aspects of scientific work will grow more urgent in the coming years. We may some day see machine theoreticians and experimentalists collaborating with their human counterparts, participating in a scientific literature that is both human- and machine-readable.

This is an idea that has been in my thoughts quite a bit over several months now, and I've blogged[3] about it.

Can computers do scientific investigation?

I came across a 2001 paper[4] in Science recently that lines up with some thinking I'd been doing myself. The web is full of futuristic literature that envisions man's intellectual legacy being carried forward by computers at a greatly increased pace; this is one of the ideas covered under the umbrella term technological singularity.

In machine learning there are lots of approaches and algorithms that are relevant to the scientific method. The ML folks have long been working on the problem of taking a bunch of data and searching it for organizing structure. This is an important part of how you would formulate a hypothesis when looking at a bunch of data. You would then design experiments to test the hypothesis. If you wanted to automate everything completely, you'd run the experiments in a robotic lab. Conceivably, science could be done by computers and robots without any human participation, and that's what the futurists envision.

The Science paper goes into pretty deep detail about the range and applicability of machine learning methods, as things stood in 2001. I find ML an interesting topic, but I can't claim any real knowledge about it. I'll assume that somebody somewhere can write code to do the things claimed by the paper's authors. It would be fascinating to try that myself some day.

To bring this idea closer to reality, what we need is a widely accepted machine-readable representation for hypotheses, experiments, and experimental results. Since inevitably humans would also participate in this process, we need representations for researchers (human, and possibly machine) and ratings (researcher X thinks hypothesis Y is important, or unimportant, or likely to be true, or likely to be false). So I have been puttering a little bit with some ideas for an XML specification for this sort of ontology.

Specifying experiments isn't that tricky: explain what equipment and conditions and procedure are required, and explain where to look for what outcome, and say which hypotheses are supported or invalidated depending on the outcome. Experimental results are likewise pretty simple. Results should refer to the experiments under test, identifying them in semantic web style with a unique permanently-assigned URI.

The tricky part is an ontology for scientific hypotheses: you need a machine-readable language flexible enough to express complex scientific ideas, and that's potentially challenging. Besides, some ideas are naturally expressed in forms that humans grasp easily but machines find difficult, for instance almost anything involving images.

An XML specification for describing hypotheses, experiments and results in a machine-readable way would be very interesting.
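As a rough illustration, here is one shape such a record could take, built with Python's standard `xml.etree.ElementTree`. The element names, attribute names, and example.org URIs are all hypothetical and are not part of any existing standard. The point is only that hypotheses, experiments, results, and ratings each get a permanent URI and refer to one another by it.

```python
import xml.etree.ElementTree as ET

# All element names, attributes and URIs below are hypothetical; this is not
# an existing standard, just one way such a record might be laid out.
BASE = "http://example.org/autosci/"

root = ET.Element("scienceRecord")

hyp = ET.SubElement(root, "hypothesis", id=BASE + "hypothesis/1")
ET.SubElement(hyp, "statement").text = "Gene G encodes an enzyme for reaction R."

exp = ET.SubElement(root, "experiment", id=BASE + "experiment/1")
ET.SubElement(exp, "procedure").text = "Grow the G-deletion strain with and without metabolite M."
ET.SubElement(exp, "observable").text = "growth rate"
# Each possible outcome says which hypothesis it supports or refutes.
ET.SubElement(exp, "outcome", value="growth restored by M", supports=BASE + "hypothesis/1")
ET.SubElement(exp, "outcome", value="no growth in either case", refutes=BASE + "hypothesis/1")

# A result points back at the experiment it came from, by URI.
res = ET.SubElement(root, "result", id=BASE + "result/1", experiment=BASE + "experiment/1")
ET.SubElement(res, "observedOutcome").text = "growth restored by M"

# A researcher (human or machine) rating a hypothesis.
ET.SubElement(root, "rating", researcher=BASE + "researcher/alice",
              hypothesis=BASE + "hypothesis/1", likelihood="likely")

print(ET.tostring(root, encoding="unicode"))
```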

Machines doing actual science, not just lab work

Here's the press release: Robot scientist becomes first machine to discover new scientific knowledge[5]

In an earlier posting, I discussed the idea of computers participating in the reasoning process of the scientific method. There are, as far as I can see, two fields that are applicable to this. One is machine learning, where a computer studies a body of data to find patterns in it. When done with statistical methods, this is called data mining. The other is automated reasoning such as is done with semantic web technology.

So I was quite interested to see the news story linked above. Researchers in the UK have connected a computer to some lab robotics and developed a system that was able to generate new scientific hypotheses about yeast metabolism, and then design and perform experiments to confirm the hypotheses.

This is important because there will always be limits to what human science can accomplish. Humans are limited in their ability to do research, requiring breaks, sleep, and vacations. Humans are limited in their ability to collaborate, because of personality conflicts, politics, and conflicting financial interests. Human talent and intelligence are limited; the Earth is not crawling with Einsteins and Feynmans.

That's obviously not to say that computers would have an unlimited capacity to do science. But their limits would be different, and their areas of strength would be different, and science as a combined effort between humans and computers would be richer and more fruitful than either alone.

I still think it's important to establish specifications for distributing this effort geographically. I would imagine it makes sense to build this stuff on top of semantic web protocols.

I like the idea that with computer assistance, scientific and medical progress might greatly accelerate, curing diseases (hopefully including aging) and offering solutions to perennial social problems like boom-and-bust economic cycles. Then we could all live in a sci-fi paradise.

Foresight Institute conference, Jan 16 and 17, 2010

The Foresight conference is just winding down. The talks were live-blogged over at NextBigFuture by Brian Wang, who did a good job of concisely capturing the essentials. My own favorite talk was by Hod Lipson, who talked about a number of things, including something I find fascinating: the automation of science, about which I plan to blog more frequently.

The Adam project was reported in April 2009 in Science by Ross King et al. at Aberystwyth University. It used lab automation to perform experiments, and data mining to find patterns in the resulting data. Adam developed novel genomics hypotheses about S. cerevisiae yeast and tested them. Adam's conclusions were manually confirmed by human experimenters, and found to be correct. This was hailed as the first time a machine had discovered new scientific knowledge independently of its human creators.

Here is what I want to see computers doing in the coming years.

- Look for patterns in data -- data mining

- Propose falsifiable hypotheses

- Design experiments to test those hypotheses

- Perform the experiments and collect data

- Confirm or refute hypotheses

- Mine new data for new patterns, repeat the process
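The cycle listed above can be caricatured in a few lines of Python. This is a toy sketch built on heavy assumptions. The hypothetical `lab` function stands in for a robotic lab with a hidden linear law. "Data mining" is an ordinary least-squares line fit. "Experiment design" just means testing the hypothesis outside the range of data already seen. Nothing here reflects how Adam actually worked.

```python
def lab(x):
    """Hypothetical stand-in for a robotic lab; the 'true law' is hidden from the reasoner."""
    return 3.0 * x + 2.0


def fit_line(points):
    """Least-squares fit y = a*x + b (the 'look for patterns' step)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    a = sxy / sxx
    return a, my - a * mx


def science_loop(initial_xs, rounds=3, tolerance=1e-6):
    data = [(x, lab(x)) for x in initial_xs]             # collect initial data
    history = []
    for _ in range(rounds):
        a, b = fit_line(data)                              # propose a falsifiable hypothesis
        x_new = max(x for x, _ in data) * 2 + 1            # design: test outside the known range
        predicted = a * x_new + b
        observed = lab(x_new)                              # perform the experiment
        confirmed = abs(predicted - observed) <= tolerance # confirm or refute
        history.append(((a, b), x_new, confirmed))
        data.append((x_new, observed))                     # new data feeds the next round
    return history


for (a, b), x, ok in science_loop([0.0, 1.0, 2.0]):
    print(f"y = {a:.3f}*x + {b:.3f}; tested at x={x}: {'confirmed' if ok else 'refuted'}")
```

If `lab` is changed to a nonlinear law such as `x ** 2`, the first prediction misses and the hypothesis is refuted. That is the falsifiability the list asks for.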

In the longer term, I want to see machine theoreticians and experimentalists collaborate with their human counterparts, both working in a scientific literature that is readable and comprehensible for both. This will require the development of a machine-parseable ontology (ideally a widely recognized standard) for sharing elements of the scientific reasoning process: data sets, hypotheses, predictions, deduction, induction, statistical inference, and the design of experiments.

So why do I want all this stuff? For one thing, it's interesting. For another, I am approaching the end of my life and I want to see scientific progress (and particularly medical progress) accelerate considerably in my remaining years. Finally, this looks to me like something where I can make some modestly valuable contribution to humanity with the time and energy I have left.

How hard is generating scientific hypotheses?

In the late 1500s, the Danish astronomer Tycho Brahe made remarkably precise naked-eye observations (the telescope had not yet been invented) and collected an enormous amount of numerical data describing the motion of the planets. Brahe's assistant Johannes Kepler studied that data and arrived at some interesting conclusions which we now know as Kepler's laws of planetary motion:

- The orbit of every planet is an ellipse with the Sun at a focus.

- A line joining a planet and the Sun sweeps out equal areas during equal intervals of time.

- The square of the orbital period of a planet is directly proportional to the cube of the semi-major axis of its orbit.

Kepler's laws were the starting point from which Isaac Newton formulated his law of gravitation, the inverse-square law that we all know and love.

We have here a three-step process: collect data, find mathematical patterns in the data, and create a theory that explains those patterns. Collecting data is simple in principle, and looking for mathematical patterns is also simple. Kepler's arithmetic was done by hand, but now we have computer programs (like Eureqa) which use genetic programming to find parsimonious mathematical formulas that fit sets of data. You can find Java applets on the web that demonstrate this idea.
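As a small illustration of the second step, the sketch below recovers the exponent in Kepler's third law from approximate planetary data. It is not genetic programming and not how Eureqa works. It simply assumes a power law T = k * a**p and fits p by least squares on the logarithms. The orbital values are rounded textbook figures.

```python
import math

# Approximate textbook values: semi-major axis a in astronomical units,
# orbital period T in Earth years.
planets = {
    "Mercury": (0.387, 0.241),
    "Venus":   (0.723, 0.615),
    "Earth":   (1.000, 1.000),
    "Mars":    (1.524, 1.881),
    "Jupiter": (5.203, 11.86),
    "Saturn":  (9.537, 29.46),
}

# If T = k * a**p, then log T = log k + p * log a: a straight line in log-log space.
xs = [math.log(a) for a, _ in planets.values()]
ys = [math.log(t) for _, t in planets.values()]
n = len(xs)
mx, my = sum(xs) / n, sum(ys) / n
slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
print(f"fitted exponent p = {slope:.3f}")  # close to 1.5, i.e. T**2 proportional to a**3
```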

So the first two steps aren't too hard. We can arrive rather easily at mathematical formulas that describe various experimentally measurable aspects of reality. That's a good thing. The hard job is the next step: finding theories or "likely stories" that explain why those formulas take whatever form they do. Sometimes the form of the math suggests a mechanism, because you've learned to associate elliptical orbits (with the attracting body at a focus) with a central force obeying an inverse-square law. (Hundreds of years after Newton, that is now a no-brainer.) But generally the problem is non-trivial and, as far as I'm aware, still requires human insight.
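Going in the other direction shows how a mechanism explains a formula. Take the simplified case of a planet of mass m in a circular orbit of radius r around a mass M. Setting Newton's inverse-square attraction equal to the centripetal force needed for an orbit of period T gives:

$$\frac{G M m}{r^{2}} = m\left(\frac{2\pi}{T}\right)^{2} r \quad\Longrightarrow\quad T^{2} = \frac{4\pi^{2}}{G M}\, r^{3}$$

The planet's mass cancels. The constant 4π²/(GM) is therefore the same for every planet orbiting the Sun, so T² is proportional to r³, which is Kepler's third law. For elliptical orbits the same result holds with r replaced by the semi-major axis a.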

Inference engines and automated reasoning

An inference engine is a computer program that reasons, using some form of knowledge representation.

This can be done with propositional logic or first-order logic, assuming each proposition is completely unambiguous and is either 100% true or 100% false. These simplistic engines are fun little exercises in programming but in real-world situations, reasoning usually needs to consider ambiguities and uncertainties. Instead of simply being true or false, propositions may be likely or unlikely, or their likelihood may be something to be tested or determined. Some elements of some propositions may be poorly defined.

In the unambiguous binary case, it's typical to express rules for generating new propositions as if-then rules with variables in them. We call these production rules because they are used to produce new propositions.

If X is a man, then X is mortal.

Given the statement "Socrates is a man", we

- match the statement to the rule's IF clause

- take note of all variable assignments: X=Socrates

- plug assignments into the THEN clause: "Socrates is mortal"

Obviously this isn't rocket science, but even without handling uncertainty, it will still be useful if scaled to very large numbers of propositions, as in the semantic web.
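The match, bind, and substitute steps listed above can be sketched as a naive forward-chaining loop in Python. The tuple format for facts and the `?x` convention for variables are assumptions made for this sketch. Rules here have only a single IF condition. Rescanning every fact on every pass is exactly the inefficiency that algorithms like Rete are designed to avoid.

```python
def match(pattern, fact, bindings):
    """Match a pattern like ("?x", "is", "man") against a fact tuple.

    Terms starting with '?' are variables. Returns extended bindings,
    or None if the pattern does not match.
    """
    if len(pattern) != len(fact):
        return None
    bindings = dict(bindings)
    for p, f in zip(pattern, fact):
        if p.startswith("?"):
            if p in bindings and bindings[p] != f:
                return None          # variable already bound to something else
            bindings[p] = f          # note the assignment, e.g. ?x = Socrates
        elif p != f:
            return None              # constant terms must be identical
    return bindings


def substitute(pattern, bindings):
    """Plug variable bindings into the THEN clause."""
    return tuple(bindings.get(t, t) for t in pattern)


def forward_chain(facts, rules):
    """Apply single-condition rules until no new facts appear."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for if_clause, then_clause in rules:
            for fact in list(facts):
                b = match(if_clause, fact, {})
                if b is not None:
                    new_fact = substitute(then_clause, b)
                    if new_fact not in facts:
                        facts.add(new_fact)
                        changed = True
    return facts


rules = [(("?x", "is", "man"), ("?x", "is", "mortal"))]
derived = forward_chain({("Socrates", "is", "man")}, rules)
print(sorted(derived))
# [('Socrates', 'is', 'man'), ('Socrates', 'is', 'mortal')]
```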

How to handle uncertainty? This can be done by representing knowledge as a Bayesian network, a directed graph where the edges represent the influences and dependencies between random variables. There is a good tutorial about these online. Here's an example where the probability of rain is an independent variable, and the sprinkler system is usually off if it's raining, and the grass can get wet from either rain or the sprinkler.
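For a network this small, inference can be done by brute-force enumeration of every combination of variable values. The sketch below encodes the rain/sprinkler/wet-grass structure described above. The conditional probabilities are assumed for illustration and are not taken from any source.

```python
from itertools import product

# Illustrative conditional probability tables (assumed numbers, not from any dataset).
P_RAIN = 0.2                                    # P(rain)
P_SPRINKLER = {True: 0.01, False: 0.4}          # P(sprinkler on | rain)
P_WET = {                                       # P(grass wet | sprinkler, rain)
    (True, True): 0.99, (True, False): 0.9,
    (False, True): 0.8, (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Probability of one full assignment, factored along the graph's edges."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER[rain] if sprinkler else 1 - P_SPRINKLER[rain]
    p_wet = P_WET[(sprinkler, rain)]
    return p * (p_wet if wet else 1 - p_wet)


def query(target, evidence):
    """P(target=True | evidence) by brute-force enumeration of the joint."""
    num = den = 0.0
    for rain, sprinkler, wet in product([True, False], repeat=3):
        world = {"rain": rain, "sprinkler": sprinkler, "wet": wet}
        if any(world[k] != v for k, v in evidence.items()):
            continue                             # inconsistent with what we observed
        p = joint(rain, sprinkler, wet)
        den += p
        if world[target]:
            num += p
    return num / den


print(f"P(rain | grass wet) = {query('rain', {'wet': True}):.4f}")
```

Evidence works the same way as in the OpenBayes example: `query('wet', {'sprinkler': True})` conditions on the sprinkler being on. Enumeration grows exponentially with the number of variables, which is why real libraries use smarter algorithms.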

There are at least two open-source inference engines that work with Bayesian networks. One is SMILE, another is the OpenBayes library for the Python language. OpenBayes allows you to update the state of your knowledge with a new observation.

- Suppose now that you know that the sprinkler is on and that it is not cloudy, and you wonder what's the probability of the grass being wet : Pr(w|s=1,c=0). This is called evidence...

ie.SetObs({'s':1,'c':0})

- and then perform inference in the same way... The grass is much more likely to be wet because the sprinkler is on!

Here is a list of many more Bayesian network libraries, and another list. There is also a nice tutorial on Learning Bayesian Networks from Data, the process of taking a bunch of data and automatically discovering the Bayesian network that might have produced it. Another Bayesian reasoning system is BLOG.

- Bayesian logic (BLOG) is a first-order probabilistic modeling language under development at MIT and UC Berkeley. It is designed for making inferences about real-world objects that underlie some observed data: for instance, tracking multiple people in a video sequence, or identifying repeated mentions of people and organizations in a set of text documents. BLOG makes it (relatively) easy to represent uncertainty about the number of underlying objects and the mapping between objects and observations.

Are production rule systems and Bayesian network systems mutually compatible? I don't yet know. Do Bayesian networks adequately represent all important forms of uncertainty or vagueness that one might encounter in working with real-world data? I don't know that either. Are there other paradigms I should be checking out? Probably.

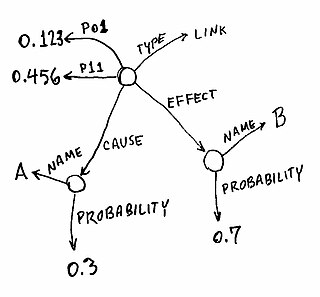

Bayesian nets in RDF, and how to update them

I've banged my head on this for a couple of days and feel close to a solution. The graph looks like this.

Each random boolean variable gets a node, the causal relationship between them gets a node, and each variable gets a probability. The math for updating probabilities is a little tricky, but in a fun and interesting way, so I enjoyed banging on that. At some point I'll tackle more involved cases with more than two random boolean variables, but the two-variable case is simple while still exposing most of the concepts involved. Kinda like the Drosophila of Bayesian inference.
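Here is one guess at how the two-variable case might be laid out as triples and updated. It uses plain Python tuples in place of a real RDF store. The `ex:` names, the predicates `ex:pEffectGivenCause` and `ex:pEffectGivenNotCause`, and the numbers are all hypothetical. This is a sketch, not a reconstruction of the graph in the original figure.

```python
# Hypothetical vocabulary: "ex:" prefixes stand in for URIs, and the predicate
# names are made up for this sketch rather than taken from an existing ontology.
triples = [
    ("ex:Rain", "rdf:type", "ex:BooleanVariable"),
    ("ex:WetGrass", "rdf:type", "ex:BooleanVariable"),
    ("ex:Rain", "ex:probability", 0.2),
    ("ex:link1", "rdf:type", "ex:CausalLink"),       # the relationship gets its own node
    ("ex:link1", "ex:cause", "ex:Rain"),
    ("ex:link1", "ex:effect", "ex:WetGrass"),
    ("ex:link1", "ex:pEffectGivenCause", 0.9),
    ("ex:link1", "ex:pEffectGivenNotCause", 0.1),
]


def value(subject, predicate):
    """Return the object of the first triple with this subject and predicate."""
    return next(o for s, p, o in triples if s == subject and p == predicate)


def observe_effect(link, effect_is_true):
    """Update the cause's probability after observing the effect (Bayes' rule)."""
    cause = value(link, "ex:cause")
    prior = value(cause, "ex:probability")
    p_e_c = value(link, "ex:pEffectGivenCause")
    p_e_nc = value(link, "ex:pEffectGivenNotCause")
    if not effect_is_true:                      # use the complements for a false observation
        p_e_c, p_e_nc = 1 - p_e_c, 1 - p_e_nc
    posterior = p_e_c * prior / (p_e_c * prior + p_e_nc * (1 - prior))
    # Replace the cause's stored probability triple with the updated value.
    triples[:] = [t for t in triples if not (t[0] == cause and t[1] == "ex:probability")]
    triples.append((cause, "ex:probability", posterior))
    return posterior


print(f"P(rain) after seeing wet grass: {observe_effect('ex:link1', True):.3f}")
```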

What businesses want

Banking / telecom / retail

- Identify: prospective customers, dissatisfied customers, good customers, bad payers

- Obtain: more effective advertising, less credit risk, less fraud, decreased churn

Biomedical / biometrics

- Medicine: screening, diagnosis and prognosis, drug discovery

- Security: face recognition, signature / fingerprint / iris verification, DNA fingerprinting

Business stuff about the profitability of data mining, especially see the ones at the bottom.

The blog is also interesting.

More:

- http://www.thearling.com/

- http://www.thearling.com/text/dmwhite/dmwhite.htm

- http://www.anderson.ucla.edu/faculty/jason.frand/teacher/technologies/palace/datamining.htm

- http://www.liaad.up.pt/~ltorgo/DataMiningWithR/

R looks boring, but it also looks really important for this stuff.

The R User Conference 2010, July 20-23, National Institute of Standards and Technology (NIST), Gaithersburg, Maryland, USA

Business things, buzzwords, other stuff relating to data mining:

- making better use of data that a business already collects

- exploration and analysis of large quantities of data in order to discover meaningful patterns and rules

- helping customers listen to their data

- classification, prediction, association rules, predictive analytics, data reduction, data exploration, data visualization

- dimension reduction, correlation analysis, principal components analysis, normalization

- linear regression, explanatory modeling, predictive modeling

- naive Bayes classifier, k-nearest neighbor

- classification trees, classification rules from trees, regression trees

- logistic regression

- neural networks

- random forest

- discriminant analysis, Fisher's linear classification functions

- association rules, the Apriori algorithm, support and confidence

- cluster analysis, Euclidean distance, other metrics

- hierarchical (agglomerative) clustering

- non-hierarchical clustering, the k-means algorithm

- extracting hidden knowledge from large volumes of raw data

- extracting hidden predictive information from large databases

- anticipate future trends from analyzing past patterns in organizational data

- automated discovery of previously unknown patterns

- chi-square automatic interaction detection (CHAID)
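Several items in that list are compact enough to sketch. Here is plain k-means (Lloyd's algorithm) on 2-D points in Python, using squared Euclidean distance. Seeding the centroids with the first k points is a simplifying assumption that keeps the run deterministic. Practical implementations use random restarts or k-means++ seeding.

```python
def kmeans(points, k, iterations=100):
    """Plain Lloyd's algorithm on 2-D points; the first k points seed the centroids."""
    centroids = [tuple(p) for p in points[:k]]   # simple deterministic initialization
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        clusters = [[] for _ in range(k)]
        for x, y in points:
            nearest = min(range(k),
                          key=lambda i: (x - centroids[i][0]) ** 2 + (y - centroids[i][1]) ** 2)
            clusters[nearest].append((x, y))
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append((sum(p[0] for p in members) / len(members),
                                      sum(p[1] for p in members) / len(members)))
            else:
                new_centroids.append(centroids[i])   # keep an empty cluster's centroid
        if new_centroids == centroids:
            break                                    # converged: assignments stopped changing
        centroids = new_centroids
    return centroids, clusters


data = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.5), (0.5, 1.2), (9.5, 8.0)]
centers, groups = kmeans(data, k=2)
print(centers)
```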

Should I put up a semantic search website?

I can't make money with a semantic search website until it has traction, and until I learn how to sell advertising services. I am tempted to open-source it, except for the data in the AppEngine database. If I open-source it and people contribute code, they'll ask why I'm keeping all the revenue for myself.

But I do want to put up a wiki for suggestions. MirWiki is a MediaWiki clone for AppEngine. The wiki should allow researchers to let me know where I can make improvements in the service.

I can use the development server to prototype the thing.

- http://code.google.com/appengine/docs/java/tools/devserver.html

- http://code.google.com/appengine/docs/python/tools/devserver.html

What I really need to do is to talk to REAL doctors and ask them what online journals they refer to, and what frustrations they have about the search facilities that are available.

You make money by helping doctors find the research papers they need. That may or may not advance the cause of machine-readable scientific literature.

Can the semantic literature search engine be pitched to a VC? Remember that hip young ambitious marketing guys are relatively cheap, as seen at the SCVNGR open house.

A VC will ask what prevents somebody from cloning the business and killing our revenue. If everything is open source (the marked-up literature, the search engine, and Jena), that's a strong objection.

It would have to be that even though the general principles were widely visible, our search engine presented the nicest user experience. So somebody who was willing to suffer with a crappy user experience could do everything and more using Jena and other stuff, with the marked-up literature directly available, but most users would want to go through the web app that had the advertising.

Either that, or you have people register as developers if they want access to the database. Maybe each registered developer gets data that has been watermarked in some way so that we can follow leaks.

The Big Plan

I want to lay out a possible future for this thing. This has direct bearing on the autosci project and would probably be very good for my career.

I'm a stone's throw from getting Rete to work, and then this thing will scale to enormous-sized systems of rules and facts. I can see a possibility of running Rete on a GPU, which could be a huge win.

Get it all working, and verify that it's scalable. This can be done by inventing some dumb heuristics for generating arbitrarily large fact sets using a repeatable pseudorandom generator. Something like a fake patient info database, and the generator takes N as an argument and cranks out N fake patients with plausible medical histories and conditions. Then the production system is supposed to triage them.
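Here is a minimal sketch of such a generator, assuming facts are stored as (subject, predicate, object) triples. The attribute names, value ranges, and triage thresholds are hypothetical. They were chosen only to produce plausible-looking test data and are not medical guidance. Seeding `random.Random` makes the data set repeatable, so the same N and seed always yield the same facts.

```python
import random

CONDITIONS = ["hypertension", "diabetes", "asthma", "none"]   # hypothetical categories


def fake_patients(n, seed=0):
    """Generate n fake patients as (subject, predicate, object) facts, repeatably."""
    rng = random.Random(seed)          # same seed -> same data set every time
    facts = []
    for i in range(n):
        pid = f"patient{i}"
        facts.append((pid, "age", rng.randint(18, 95)))
        facts.append((pid, "systolicBP", rng.randint(95, 200)))
        facts.append((pid, "condition", rng.choice(CONDITIONS)))
    return facts


def triage(facts):
    """Toy stand-in for the production system: flag patients by simple rules."""
    by_patient = {}
    for pid, attr, val in facts:
        by_patient.setdefault(pid, {})[attr] = val
    urgent = []
    for pid, rec in by_patient.items():
        # Hypothetical rule: very high blood pressure, or elderly with hypertension.
        if rec["systolicBP"] >= 180 or (rec["age"] >= 75 and rec["condition"] == "hypertension"):
            urgent.append(pid)
    return urgent


facts = fake_patients(1000, seed=42)
print(len(facts), "facts;", len(triage(facts)), "patients flagged urgent")
```

Scaling N up is then a one-argument change, which makes it easy to measure how the rule engine's running time grows.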

Nail down the design. Translate the whole thing to Java and update the Wikipedia stuff accordingly. Generate data sets that illustrate how it's useful not just for medical apps but also for synthetic data about product referrals and community recommendations. Package all this stuff up, and write some Groovy code on top to make it even more alluring. Announce the project in some public venue, and be ready for naysayers. Simultaneously do a print run of the book and get 100 copies for maybe $2K. The first 50 people to download the software get a free signed book. They can always download the PDF for free. After the first 50, sell books at a modest markup.

Google's App Engine team does IRC sessions where anybody can ask questions.

- IRC office hours will be held at 7pm Pacific Time. Come join us: http://webchat.freenode.net/?channels=appengine

Try something like that. This would also be a good way to get user feedback that could be used to improve the product. Can I charge for on-line classes done this way?

The product should be dual-licensed. Academic and non-profit users get the terms of some reasonable open-source license, probably not the GPL. Commercial users get to pay license fees.

Hopefully I would quickly find myself in pretty heavy demand, and I could get a large number of one-month and three-month contracts setting people up and training their staff. That could cover my living expenses for several months or maybe years. During all that time, I would be getting to work on this topic which is of interest, and I'd get to work on the next two volumes.

RETE implemented elsewhere

RETE is already used in some open-source projects. In SymPy it appears in facts.py, though it claims to be only a partial implementation. Still educational.

It also appears in Jena with probably a much more complete implementation.

When I tried Jena with RETE switched on and off, it made no difference. Maybe it's just an interface for connecting to an external RETE engine?

Fuxi does RETE, I think.

So RETE appears to be used in SymPy, Jena, and Fuxi, and probably in JESS.

So let's redo the book like this.

- Document Fuxi, Jena, and JESS with plenty of examples. Write the Missing Manual.

- Get Fuxi or Jena to work on an NVIDIA board.

- Document that NVIDIA work as my own project. Post that work on Google Code.

Then try to put the book back together, and print it. Plow ahead with the rest of the plan. The only thing I'm giving up is writing my own engine.

So I've started looking at RETE in Jena and it's not that scary, at least as far as using it. I haven't yet looked at implementation.

My RETE code

The RETE code I wrote in Python is running a hell of a lot faster than Jena on an equivalent set of facts and rules. This probably means that I can write a reasoning engine for Jena that is faster than the ones they have.

There's still the GPU thing.

Random other stuff

videolectures.net

These videos are great.

- http://videolectures.net/Top/Computer_Science/Machine_Learning/

- http://videolectures.net/Top/Computer_Science/Data_Mining/

THIS VIDEO IS REALLY GOOD. The accent is present, but not a problem. A vast survey of the entire field of machine learning applied to big data sets.

Linear and kernel methods are generally quite good for classification. Naive Bayes often does worse.

Here's a video: Introduction to Bayesian Inference, by a lecturer at Cambridge University.

He says an interesting thing, that expert systems suffer from combinatorial explosion, you need way too many rules to make it useful.

Trivia: you can run Mac OS on a particular netbook

Other software dealing with data mining, machine learning, etc

Weka is a collection of machine learning algorithms for data mining tasks. The algorithms can either be applied directly to a dataset or called from your own Java code. Weka contains tools for data pre-processing, classification, regression, clustering, association rules, and visualization. It is also well-suited for developing new machine learning schemes. Weka is open source software issued under the GNU General Public License.

Others

- http://torch5.sourceforge.net/

- http://code.google.com/p/deeptorch/

- http://code.google.com/p/golemml/

- Orange (software)

What big topics haven't I addressed yet?

How much of this stuff is actually relevant? How much would it require me to redesign everything? What are the criteria for relevance? My goals are (1) interesting work for the remaining 15 years of my working life, and (2) stuff that contributes to the automation of science project.

- AI in games

- Game-learning AI (chess, checkers, go): Deep Blue, Blondie24

- Fuzzy logic

- Neural nets

- Category:Heuristics

- Non-exhaustive search: gradient descent, conjugate gradient, genetic algorithms, simulated annealing

Updates, July 2010

I've started fooling with Neo4j[6], a graph database. It can be used with Java or Jython. If it can do something equivalent to a fast SPARQL query, then it can perform logical deduction on semantic nets. So that will be something to tinker with, and possibly important.