User:Ethan McCue/sandbox

Article evaluation

For my article evaluation, I am looking at the public article for the Elm programming language. The first thing that jumps out to me is the claim that Elm "is developed with emphasis on usability, performance, and robustness" without any citation given. Statements like that about the quality of the subject of an article feel like they should have a source attached to them. The section on "Limitations" also seems somewhat sparse, and there is probably a lot more that can be added in that area. All of the citations come from the official Elm documentation, which is maintained by the people who create the programming language. This means that they might be biased toward Elm and may embellish its positive qualities. No potential bias is cited in the article though. The talk page is also quite barren, with only a few comments.

Article selection

1. The page for the EDN data format is kinda sparse, especially compared to the other data formats listed there. https://en.wikipedia.org/wiki/Extensible_Data_Notation

2. The page for the Clojure language is really biased. It has phrases like "Unlike other languages" without any citation. That article could use some cleanup. https://en.wikipedia.org/wiki/Clojure

3. The page for Functional Programming is really heavy with examples, but there isn't much in the way of description and the words it uses are jumbled at best. It is of far worse quality than the Object-oriented programming article. https://en.wikipedia.org/wiki/Functional_programming

-----------

In computing, a persistent data structure is a data structure that always preserves the previous version of itself when it is modified. Such data structures are effectively immutable, as their operations do not (visibly) update the structure in-place, but instead always yield a new updated structure. The term was introduced in Driscoll, Sarnak, Sleator, and Tarjan's 1986 article.[1]

A data structure is partially persistent if all versions can be accessed but only the newest version can be modified. The data structure is fully persistent if every version can be both accessed and modified. If there is also a meld or merge operation that can create a new version from two previous versions, the data structure is called confluently persistent. Structures that are not persistent are called ephemeral.[2]

These types of data structures are particularly common in logic and functional programming. In a purely functional program all data is immutable, so all data structures are automatically fully persistent.[2] Persistent data structures can also be created using in-place updating of data, and these may, in general, use less time or storage space than their purely functional counterparts. Purely functional data structures are persistent data structures that completely avoid the use of mutable state, but can often still achieve attractive amortized time complexity bounds.

While persistence can be achieved by simple copying, this is inefficient in CPU and RAM usage, because most operations make only small changes to a data structure. A better method is to exploit the similarity between the new and old versions to share structure between them, such as using the same subtree in a number of tree structures. Because it rapidly becomes infeasible to determine how many previous versions share which parts of the structure, and because it is often desirable to discard old versions, this usually calls for an environment with garbage collection. It is not so infeasible, however, that a sophisticated project such as the ZFS copy-on-write file system cannot achieve this by tracking storage allocation directly.

Partially persistent

In the partial persistence model, we may query any previous version of the data structure, but we may only update the latest version. This implies a linear ordering among the versions.

Three methods can be applied to a balanced binary search tree:

Fat node

The fat node method records all changes made to node fields in the nodes themselves, without erasing old values of the fields. This requires that we allow nodes to become arbitrarily “fat”. In other words, each fat node contains the same information and pointer fields as an ephemeral node, along with space for an arbitrary number of extra field values. Each extra field value has an associated field name and a version stamp which indicates the version in which the named field was changed to have the specified value. In addition, each fat node has its own version stamp, indicating the version in which the node was created. The only purpose of nodes having version stamps is to make sure that each node only contains one value per field name per version. In order to navigate through the structure, each original field value in a node has a version stamp of zero.

Complexity of fat node

The fat node method requires O(1) space for every modification: just store the new data. Each modification takes O(1) additional time to store the modification at the end of the modification history. This is an amortized time bound, assuming we store the modification history in a growable array. For access time, we must find the right version at each node as we traverse the structure. If we made m modifications, then each access operation has O(log m) slowdown resulting from the cost of finding the nearest modification in the array.
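The following is a minimal Python sketch of a fat node, not a reference implementation. The class name `FatNode` and the methods `write` and `read` are hypothetical names chosen for illustration, and each field's history is assumed to be stored as a pair of parallel growable arrays so that a read can use binary search.

```python
from bisect import bisect_right


class FatNode:
    """A node that keeps every value ever written to each of its fields."""

    def __init__(self, version, **fields):
        self.created = version  # version stamp of the node itself
        # field name -> (sorted version stamps, values written at those stamps)
        self.history = {name: ([version], [value]) for name, value in fields.items()}

    def write(self, field, version, value):
        """Record a new value; partial persistence only allows newest-version writes."""
        stamps, values = self.history.setdefault(field, ([], []))
        if stamps and version < stamps[-1]:
            raise ValueError("cannot modify an old version")
        if stamps and stamps[-1] == version:
            values[-1] = value      # keep one value per field per version
        else:
            stamps.append(version)  # amortized O(1) append to the history
            values.append(value)

    def read(self, field, version):
        """Return the field's value as of `version` using an O(log m) binary search."""
        stamps, values = self.history[field]
        i = bisect_right(stamps, version)
        if i == 0:
            raise KeyError(f"{field!r} has no value at version {version}")
        return values[i - 1]


node = FatNode(0, key="a", left=None)  # original field values carry stamp zero
node.write("key", 3, "b")
node.write("key", 7, "c")
```

Reading `node.read("key", 5)` finds the nearest modification at or before version 5, which is the write made at version 3.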

Path copying

Path copying makes a copy of all nodes on the path that contains the node we are about to insert or delete. Then we must cascade the change back through the data structure: all nodes that pointed to the old node must be modified to point to the new node instead. These modifications cause more cascading changes, and so on, until we reach the root. We maintain an array of roots indexed by timestamp. The data structure pointed to by time t’s root is exactly time t’s data structure.

Complexity of path copying

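With m modifications, this costs O(log m) additive lookup time, spent finding the right root in the array of roots. In the worst case, modification time and space are bounded by the size of the structure, since a single modification may cause the entire structure to be copied. That is O(m) for one update, and thus O(m²) time in total over m updates. In a balanced binary search tree without parent pointers, only the O(log n) nodes on the search path are copied per update.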
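As a rough illustration, path copying with an array of roots might look like the Python sketch below. The names `Node`, `VersionedTree`, and `root_at` are hypothetical, and rebalancing is left out for brevity, so this is an unbalanced search tree rather than the balanced tree discussed above.

```python
from bisect import bisect_right
from typing import NamedTuple, Optional


class Node(NamedTuple):
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(node, key):
    """Return a new root; only the nodes on the search path are copied."""
    if node is None:
        return Node(key)
    if key < node.key:
        return node._replace(left=insert(node.left, key))
    if key > node.key:
        return node._replace(right=insert(node.right, key))
    return node  # key already present: nothing to copy


def contains(node, key):
    while node is not None:
        if key == node.key:
            return True
        node = node.left if key < node.key else node.right
    return False


class VersionedTree:
    def __init__(self):
        self.times = [0]      # sorted timestamps
        self.roots = [None]   # roots[i] is the tree as of times[i]

    def insert(self, key):
        t = self.times[-1] + 1
        self.roots.append(insert(self.roots[-1], key))
        self.times.append(t)
        return t

    def root_at(self, t):
        # Binary search for the newest root created at or before time t.
        return self.roots[bisect_right(self.times, t) - 1]


tree = VersionedTree()
for k in (5, 3, 8):
    tree.insert(k)
t4 = tree.insert(4)   # copies the nodes holding 5 and 3, shares the node holding 8
```

After the last insert, `tree.root_at(3)` still describes the tree without 4, while the subtree holding 8 is the same object in both versions.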

A combination

Sleator, Tarjan et al. came up with a way to combine the advantages of fat nodes and path copying, getting O(1) access slowdown and O(1) modification space and time.

In each node, we store one modification box. This box can hold one modification to the node—either a modification to one of the pointers, or to the node’s key, or to some other piece of node-specific data—and a timestamp for when that modification was applied. Initially, every node’s modification box is empty.

Whenever we access a node, we check the modification box, and compare its timestamp against the access time. (The access time specifies the version of the data structure that we care about.) If the modification box is empty, or the access time is before the modification time, then we ignore the modification box and just deal with the normal part of the node. On the other hand, if the access time is after the modification time, then we use the value in the modification box, overriding that value in the node. (Say the modification box has a new left pointer. Then we’ll use it instead of the normal left pointer, but we’ll still use the normal right pointer.)

Modifying a node works like this. (We assume that each modification touches one pointer or similar field.) If the node’s modification box is empty, then we fill it with the modification. Otherwise, the modification box is full. We make a copy of the node, but using only the latest values. (That is, we overwrite one of the node’s fields with the value that was stored in the modification box.) Then we perform the modification directly on the new node, without using the modification box. (We overwrite one of the new node’s fields, and its modification box stays empty.) Finally, we cascade this change to the node’s parent, just like path copying. (This may involve filling the parent’s modification box, or making a copy of the parent recursively. If the node has no parent, because it is the root, we add the new root to a sorted array of roots.)

With this algorithm, given any time t, at most one modification box exists in the data structure with time t. Thus, a modification at time t splits the tree into three parts: one part contains the data from before time t, one part contains the data from after time t, and one part was unaffected by the modification.
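The access and modification rules above can be sketched in Python as follows. This is only an illustration under several assumptions: the names `Node`, `Tree`, `get`, and `_set` are hypothetical, the tree is an unbalanced search tree supporting insertion only, the parent is found from the search path rather than from parent pointers, and a modification box is taken to apply when the access time is at or after its timestamp.

```python
from bisect import bisect_right


class Node:
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right = key, left, right
        self.mod = None  # modification box: (version, field, value) or None

    def get(self, field, version):
        """Read a field as of `version`, honoring the modification box."""
        if self.mod and self.mod[0] <= version and self.mod[1] == field:
            return self.mod[2]
        return getattr(self, field)


class Tree:
    def __init__(self):
        self.now = 0
        self.versions, self.roots = [0], [None]  # sorted array of roots

    def _set(self, path, i, field, value, version):
        node = path[i]
        if node.mod is None:              # empty box: just fill it
            node.mod = (version, field, value)
            return
        # Full box: copy the node using only its latest values ...
        copy = Node(node.key, node.get("left", version), node.get("right", version))
        setattr(copy, field, value)       # ... and apply the change directly
        if i == 0:                        # no parent: record a new root
            self.versions.append(version)
            self.roots.append(copy)
        else:                             # cascade the change to the parent
            parent = path[i - 1]
            side = "left" if parent.get("left", version) is node else "right"
            self._set(path, i - 1, side, copy, version)

    def insert(self, key):
        self.now += 1
        node, path = self.roots[-1], []
        while node is not None:
            if key == node.key:
                return self.now           # already present
            path.append(node)
            node = node.get("left" if key < node.key else "right", self.now)
        if not path:                      # empty tree: the new node is the root
            self.versions.append(self.now)
            self.roots.append(Node(key))
        else:
            side = "left" if key < path[-1].key else "right"
            self._set(path, len(path) - 1, side, Node(key), self.now)
        return self.now

    def contains(self, key, version):
        node = self.roots[bisect_right(self.versions, version) - 1]
        while node is not None:
            if key == node.key:
                return True
            node = node.get("left" if key < node.key else "right", version)
        return False


tree = Tree()
for k in (5, 3, 8, 4, 2):   # these inserts create versions 1 through 5
    tree.insert(k)
```

Here the insert of 8 finds the root's box already full and records a new root, while the insert of 2 copies the node holding 3 and fills the box of its parent.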

Complexity of the combination

Time and space for modifications require amortized analysis. A modification takes O(1) amortized space, and O(1) amortized time. To see why, use a potential function ϕ, where ϕ(T) is the number of full live nodes in T. The live nodes of T are just the nodes that are reachable from the current root at the current time (that is, after the last modification). The full live nodes are the live nodes whose modification boxes are full.

Each modification involves some number of copies, say k, followed by 1 change to a modification box. (Well, not quite—you could add a new root—but that doesn’t change the argument.) Consider each of the k copies. Each costs O(1) space and time, but decreases the potential function by one. (First, the node we copy must be full and live, so it contributes to the potential function. The potential function will only drop, however, if the old node isn’t reachable in the new tree. But we know it isn’t reachable in the new tree—the next step in the algorithm will be to modify the node’s parent to point at the copy. Finally, we know the copy’s modification box is empty. Thus, we’ve replaced a full live node with an empty live node, and ϕ goes down by one.) The final step fills a modification box, which costs O(1) time and increases ϕ by one.

Putting it all together, the change in ϕ is Δϕ = 1 − k. Thus, we’ve paid O(k + Δϕ) = O(1) space and O(k + Δϕ + 1) = O(1) time.

Fully persistent

In the fully persistent model, both updates and queries are allowed on any version of the data structure.

Confluently persistent

In the confluently persistent model, we use combinators to combine input from more than one previous version into a single new version. Rather than a branching tree, combinations of versions induce a DAG (directed acyclic graph) structure on the version graph.

Examples of persistent data structures

Perhaps the simplest persistent data structure is the singly linked list or cons-based list, a simple list of objects formed by each carrying a reference to the next in the list. This is persistent because we can take a tail of the list, meaning the last k items for some k, and add new nodes on to the front of it. The tail will not be duplicated, instead becoming shared between both the old list and the new list. So long as the contents of the tail are immutable, this sharing will be invisible to the program.
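A small Python sketch of this tail sharing is given below. The `Cons` type and the `to_list` helper are hypothetical names used only for illustration; a `NamedTuple` is assumed here so that cells cannot be reassigned after creation.

```python
from typing import Any, NamedTuple, Optional


class Cons(NamedTuple):
    head: Any
    tail: Optional["Cons"] = None


def to_list(cell):
    """Collect the elements of a cons list into a Python list for display."""
    out = []
    while cell is not None:
        out.append(cell.head)
        cell = cell.tail
    return out


old = Cons(1, Cons(2, Cons(3)))
new = Cons(0, old.tail)  # new front node placed on the last two cells of old
```

Both lists remain usable, and `new.tail is old.tail` holds because the tail is shared rather than duplicated.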

Many common reference-based data structures, such as red–black trees,[3] stacks,[4] and treaps,[5] can easily be adapted to create a persistent version. Some others need slightly more effort, for example: queues, deques, and extensions including min-deques (which have an additional O(1) operation min returning the minimal element) and random access deques (which have an additional operation of random access with sub-linear, most often logarithmic, complexity).

There also exist persistent data structures which use destructive[clarification needed] operations, making them impossible to implement efficiently in purely functional languages (like Haskell outside specialized monads like state or IO), but possible in languages like C or Java. These types of data structures can often be avoided with a different design. One primary advantage to using purely persistent data structures is that they often behave better in multi-threaded environments.

Linked lists

This example is taken from Okasaki. See the bibliography.

Singly linked lists are the bread-and-butter data structure in functional languages. In ML-derived languages and Haskell, they are purely functional because once a node in the list has been allocated, it cannot be modified, only copied or destroyed. Note that ML itself is not purely functional.

Consider the two lists:

xs = [0, 1, 2]

ys = [3, 4, 5]

These would be represented in memory by:

where a circle indicates a node in the list (the arrow out representing the second element of the node which is a pointer to another node).

Now concatenating the two lists:

zs = xs ++ ys

results in the following memory structure:

Notice that the nodes in list xs have been copied, but the nodes in ys are shared. As a result, the original lists (xs and ys) persist and have not been modified.

The reason for the copy is that the last node in xs (the node containing the original value 2) cannot be modified to point to the start of ys, because that would change the value of xs.

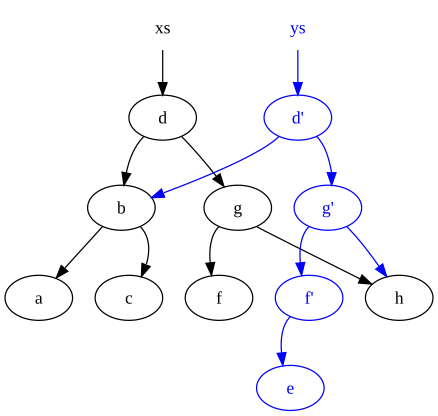
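The concatenation described above can be imitated in Python with the sketch below. The `Cell` class and the helpers `from_values`, `to_values`, and `concat` are hypothetical names; a frozen dataclass is assumed as a stand-in for an immutable list node.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Cell:
    value: Any
    next: Optional["Cell"] = None


def from_values(values):
    """Build a linked list from a Python list, last element first."""
    cell = None
    for v in reversed(values):
        cell = Cell(v, cell)
    return cell


def to_values(cell):
    out = []
    while cell is not None:
        out.append(cell.value)
        cell = cell.next
    return out


def concat(xs, ys):
    """Copy every cell of xs; the last copy points at ys, which is shared."""
    if xs is None:
        return ys
    return Cell(xs.value, concat(xs.next, ys))


xs = from_values([0, 1, 2])
ys = from_values([3, 4, 5])
zs = concat(xs, ys)
```

The first three cells of `zs` are fresh copies, and the cell after them is the very same object as `ys`, so neither input list is changed.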

Trees

This example is taken from Okasaki. See the bibliography.

Consider a binary tree used for fast searching, where every node has the recursive invariant that subnodes on the left are less than the node, and subnodes on the right are greater than the node.

For instance, the set of data

xs = [a, b, c, d, f, g, h]

might be represented by the following binary search tree:

A function which inserts data into the binary tree and maintains the invariant is:

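(* E is the empty tree; T (left, element, right) is a node. String elements are an assumption made so that the example type-checks. *)

datatype tree = E | T of tree * string * tree

fun insert (x, E) = T (E, x, E)

| insert (x, s as T (a, y, b)) =

if x < y then T (insert (x, a), y, b)

else if x > y then T (a, y, insert (x, b))

else s (* x is already present: return the original tree unchanged *)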

After executing

ys = insert ("e", xs)

we end up with the following:

Notice two points. First, the original tree (xs) persists. Second, many common nodes are shared between the old tree and the new tree. Such persistence and sharing is difficult to manage without some form of garbage collection (GC) to automatically free up nodes which have no live references, and this is why GC is a feature commonly found in functional programming languages.
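To make the amount of sharing concrete, the Python sketch below mirrors the insert function and then compares node identities between the old and new trees. The insertion order used to build xs is an assumption that yields one possible shape for the tree (d at the root, b and g as its children), and the names `T`, `nodes`, `shared`, and `copied` are hypothetical.

```python
from typing import NamedTuple, Optional


class T(NamedTuple):
    left: Optional["T"]
    key: str
    right: Optional["T"]


def insert(x, s):
    """Insert x into tree s (None is the empty tree), reusing untouched subtrees."""
    if s is None:
        return T(None, x, None)
    if x < s.key:
        return T(insert(x, s.left), s.key, s.right)
    if x > s.key:
        return T(s.left, s.key, insert(x, s.right))
    return s


def nodes(t):
    """Yield every node of the tree."""
    if t is not None:
        yield t
        yield from nodes(t.left)
        yield from nodes(t.right)


xs = None
for key in "dbgacfh":   # assumed build order for the example data
    xs = insert(key, xs)
ys = insert("e", xs)

old_ids = {id(n) for n in nodes(xs)}
shared = sorted(n.key for n in nodes(ys) if id(n) in old_ids)
copied = sorted(n.key for n in nodes(ys) if id(n) not in old_ids)
```

With this shape, the nodes holding a, b, c, and h are shared, while d, g, and f are copied along the search path and e is newly created.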

Persistent Hash Array Mapped Trie

... Bagwell and Conception ...

... Clojure persistent modification ...

... technical explanation ...

... images from blog post (need to ask permission for use in larger wikipedia) ...

... performance characteristics ...

Usage in programming languages

Haskell

... Pure languages have persistence by default ...

... Availability of data structures over time ...

Clojure

... persistent linked list ...

... differences from classical cons cells ...

... persistent vector, map, set ...

... multithreading, compare and swap ...

... value of values ...

Elm

... List, Dict, Set, Array ...

... Model Update View ...

... uses persistent data structures to make functional code efficient ...

... tree diffing ...

... virtual dom ...

JavaScript

... Immutable.js ...

... React and Redux ...

... performance benefits ...

... other libraries ...

Scala

... object functional style ...

... Scala 2.8 32-ary trees from Clojure ...

Java

... Vavr ...

... backports of scala and clojure code ...

... value objects ...

Reference cycles

Since every value in a purely functional computation is built up out of existing values, it would seem that it is impossible to create a cycle of references. In that case, the reference graph (the graph of the references from object to object) could only be a directed acyclic graph. However, in most functional languages, functions can be defined recursively; this capability allows recursive structures using functional suspensions. In lazy languages, such as Haskell, all data structures are represented as implicitly suspended thunks; in these languages any data structure can be recursive because a value can be defined in terms of itself. Some other languages, such as OCaml, allow the explicit definition of recursive values.
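A functional suspension that refers to itself can be imitated in Python, which is neither lazy nor purely functional, with the sketch below. The stream encoding (a zero-argument function returning a head and a suspended tail) and the names `ones` and `take` are assumptions made for illustration.

```python
def ones():
    """A suspended stream cell: calling it yields (head, suspended tail)."""
    return 1, ones   # the tail is the very suspension being defined


def take(n, stream):
    """Force the first n cells of a suspended stream."""
    out = []
    for _ in range(n):
        head, stream = stream()
        out.append(head)
    return out


first_five = take(5, ones)
```

The stream is cyclic in the sense that forcing its tail gives back the same suspension, even though no existing value was mutated to create the cycle.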

See also

References

1. J. R. Driscoll, N. Sarnak, D. D. Sleator, R. E. Tarjan (1986). "Making data structures persistent". Proceedings of the eighteenth annual ACM symposium on Theory of computing (STOC '86). pp. 109–121.

2. Kaplan, Haim (2001). "Persistent data structures". Handbook on Data Structures and Applications. CRC Press.

3. Neil Sarnak, Robert E. Tarjan (1986). "Planar Point Location Using Persistent Search Trees" (PDF). Communications of the ACM. 29 (7): 669–679. doi:10.1145/6138.6151.

4. Chris Okasaki. "Purely Functional Data Structures (thesis)" (PDF).

5. Liljenzin, Olle. "Confluently Persistent Sets and Maps". arXiv:1301.3388. Bibcode:2013arXiv1301.3388L.

Further reading

- Persistent Data Structures and Managed References - video presentation by Rich Hickey on Clojure's use of persistent data structures and how they support concurrency

- Making Data Structures Persistent by James R. Driscoll, Neil Sarnak, Daniel D. Sleator, Robert E. Tarjan

- Fully persistent arrays for efficient incremental updates and voluminous reads

- Real-Time Deques, Multihead Turing Machines, and Purely Functional Programming

- Purely functional data structures by Chris Okasaki, Cambridge University Press, 1998, ISBN 0-521-66350-4.

- Purely Functional Data Structures thesis by Chris Okasaki (PDF format)

- Fully Persistent Lists with Catenation by James R. Driscoll, Daniel D. Sleator, Robert E. Tarjan (PDF)

- Persistent Data Structures from MIT open course Advanced Algorithms