Talk:Cryptographic hash function

This article is rated C-class on Wikipedia's content assessment scale. It is of interest to multiple WikiProjects.

Material from Cryptographic hash function was split to Comparison of cryptographic hash functions on 23 October 2014. The former page's history now serves to provide attribution for that content in the latter page, and it must not be deleted so long as the latter page exists. Please leave this template in place to link the article histories and preserve this attribution.

On 8 February 2007, Cryptographic hash function was linked from Wired News, a high-traffic website. (Traffic) All prior and subsequent edits to the article are noted in its revision history.

The field has chosen the term Message digest

This page should be redirected to Message digest which is the established term of art in the field since the 80s. In the early days, no distinction was made between message digests and hash functions. And then the same name was used for both. Then someone brought a stupid lawsuit claiming that the use of a message digest as a hash violated their patent. I suspect the page was redirected from Message Digest during that dispute. — Preceding unsigned comment added by 96.237.138.82 (talk) 21:58, 7 January 2020 (UTC)

Confused????

The way this article describes second pre-image resistance and collision resistance, they seem like the exact same thing. Am I missing something big here or is this just poorly explained? 98.213.119.182 (talk) 09:04, 17 May 2015 (UTC)

- See Preimage attack for an explanation of the difference.--agr (talk) 12:55, 17 May 2015 (UTC)

Revision of article

I agree that the account given here currently is accurate. It is, however, quite abstract and so less than useful to a large class of readers. Since the topic is a fundamental one in modern cryptographic practice, I suggest that this article be revised to include some text giving more context. In particular, problems in using such functions should be noted. The article message digest errs in almost the inverse way. Perhaps a combination of the two with redirect pointers?

I will consider taking it on, as time allows, if no one else does.

- I've merged these (though it needs reorganising, I think). — Matt 22:18, 19 Jul 2004 (UTC)

— Matt 01:14, 21 Jul 2004 (UTC)

- Crikey! This article needs some serious editing! I will also be coming back from time to time to touch it up. Ruptor 04:03, 21 February 2007 (UTC)

- This article seriously needs a section written in layman's terms. Blimey. ▫ Urbane Legend chinwag 01:21, 29 November 2008 (UTC)

- To me, the head section seems to be as clear as it could be. If it is not, can you point out the difficult parts?

- The rest of the article is about technical details; I cannot see how to be correct without being technical.

- --Jorge Stolfi (talk) 21:58, 30 November 2008 (UTC)

Feel free to put these observations on the todo list as you wish. The article needs to discuss, at least qualitatively, what is meant by "hard" in the properties section. Examples or just the order of magnitude of the computation involved would be fine. A link to and/or a quick summary of what computational infeasibility means is needed too. Also, of course, a summary of the different CHFs and their features/similarities. - Taxman 20:23, Jul 29, 2004 (UTC)

Online references for editing


I feel the intro is too long (i.e., a section heading should be inserted after the first paragraph) but I can't think of anything suitable. Arvindn 07:33, 30 Jul 2004 (UTC)

I'd say the puzzle solution example is about commitment more than timestamping. Lunkwill 00:27, 5 Aug 2004 (UTC)

On "dramatically different" - this is a requirement of hash functions, it's the Strong Avalanche Criterion.

On "no information about" - an attacker can trivially determine one piece of information about M from H(M), namely the value of H(M). I understand the spirit of what you're trying to get across but no-one's ever found a way to express it formally in a way that's not impossible to satisfy.

ciphergoth 11:24, 2005 Jan 10 (UTC)
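For anyone who wants to see the "dramatically different" behaviour rather than take it on trust, here is a minimal sketch. It assumes SHA-256 from Python's `hashlib` as the example function and an arbitrary test message; neither comes from the article. It flips one input bit and counts how many output bits change. For a function with good avalanche behaviour the count should land near half of the 256 output bits.

```python
import hashlib

def bit_difference(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

# Arbitrary example message (an assumption, any input would do).
message = bytearray(b"The quick brown fox jumps over the lazy dog")
flipped = bytearray(message)
flipped[0] ^= 0x01  # flip exactly one input bit

d1 = hashlib.sha256(bytes(message)).digest()
d2 = hashlib.sha256(bytes(flipped)).digest()

changed = bit_difference(d1, d2)
print(f"{changed} of {len(d1) * 8} output bits changed")
```

A single run proves nothing about the criterion itself, which is a statistical statement over all inputs and all bit positions, but it does show what the property looks like in practice.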

"Some of the following algorithms are known to be insecure"

- please note the ones that are and how significant each is. - Omegatron 16:44, Apr 14, 2005 (UTC)

- There is some discussion of the security elsewhere in the article, but here I would suggest we just remove the warning altogether. Just because we list a hash function design doesn't carry any implication that it's somehow endorsed (any more than a "list of prisons" article would carry the disclaimer that "some of the following prisons are known to have had inmates escape"). — Matt Crypto 19:10, 14 Apr 2005 (UTC)

- Why not? That's the information I was looking for in this case. Several of the articles have something like "this version is no longer considered secure" in the first description paragraph. - Omegatron 19:18, Apr 14, 2005 (UTC)

- A list of which algorithms are how insecure is useful in choosing which one to apply for a particular purpose. A list of prisons, however, would not be quite so useful in this way in that the location of a prison can be more important than the security: a prisoner shipped off to the moon is quite unlikely to escape, however putting the prisoner there in the first place is unfeasible. --Ihope127 19:05, 10 August 2005 (UTC)

nor should an attacker learn anything useful about a message given only its digest besides the digest itself

Two editors (one anon) have argued that the last four words should be removed. I'm really against this; to my eyes, it's a vitally important caveat. Without that caveat, we would be able to define precisely what it meant for the attacker to learn nothing about the message. As it is, they definitely learn at least one thing: the digest of the message is x. This seemingly trivial fact completely frustrates any effort to come to a more precise definition of what it means for the attacker not to learn anything about the message. It's an absolutely vital difference.

I won't revert it a third time if it's removed again, but I'd really like to discuss it here first if possible. Thanks. — ciphergoth 08:30, 24 October 2005 (UTC)

- I agree with your revert and edits, ciphergoth. It is a vital difference, since in some cases knowing the digest itself can make a difference. (I have worked professionally with crypto protocol design and taught it at universities.) --David Göthberg 11:13, 24 October 2005 (UTC)

Preimage resistance

According to the MD5 article it is not collision resistant, so the table should be modified. Are there any objections to this? Lambedan (talk) 23:16, 2 April 2009 (UTC)

- The entries in the table are OK. MD5 is not collision resistant, but so far no attack against preimage resistance has been published. However, the titles of the columns are ambiguous. The column "Collision resistance" really means "Are there any known attacks against collision resistance?". The column "Preimage resistance" likewise means "Are there any known attacks against preimage resistance?". Maybe the titles should be made clearer. 81.62.21.174 (talk) 07:43, 3 April 2009 (UTC)

- The entry for MD2 has a blank in the preimage column though the MD2 article describes a preimage attack? If I knew more about cryptography I'd be tempted to amend this myself; can an expert check this, please? —Preceding unsigned comment added by 83.104.81.210 (talk) 09:56, 28 May 2009 (UTC)

Preimage resistance

User:C4chandu removed the discussion of preimage resistance from the statement of what the hash function is supposed to be able to do. Why? If anything, we should be extending that section to add second preimage resistance as well. — ciphergoth 08:30, 24 October 2005 (UTC)

CRCs

CRCs are not just used because of speed and convenience. They have the property that any error of less than a certain number of bits, or spanning less than a certain portion of the message, is guaranteed to change the CRC. In such circumstances a pseudorandom function may by chance give the same value.

I'm not sure how much that advantage matters (more if the checksum is short), but that's always how they've been sold as I understand it. — ciphergoth 15:04, 7 February 2006 (UTC)

- Well, first of all, if we should mention why CRCs are used in network protocols instead of cryptographic hashes then we should first mention the primary reasons: CRCs are easier to implement and much faster than cryptographic hash sums. This I am sure of and would not revert if added to the article. Perhaps we should add that?

- And yes, I think CRCs to some extent are supposed to guarantee that short bursts of damaged bits (shorter than the length of the CRC output) should not cause the same CRC. But hashes, as you point out, are more random in nature and thus might cause the same hash in that case. But I am not sure if that is true so I would not add that to the article. And I also think it might be too much detail on CRCs.

- Thirdly: Yes, there might be some properties in CRCs that make them more suitable for trying to figure out which bits got damaged and trying to repair them, since CRCs are sort of more predictable. Or it is just that they are faster and thus it is faster to try different fixes and see which one matches the CRC. I don't know which is true and you don't seem to be certain either. And I think this also is the most unusual reason to use CRCs. So I don't think we should have it in the article.

- CRCs can't directly be used for error correction, because there are typically many equally likely corrections that would fix the CRC. However, error correcting codes are usually based on the same kind of mathematics (ie Galois fields): see for example convolutional codes.

- I am sure about this assertion. I am also sure that with a CRC, you can assert whole useful classes of error that are guaranteed to cause a change in the CRC, while with an n-bit cryptographically strong hash (or MAC), any error has a 2^-n chance of going undetected.

- CRCs are not that fast or easy to implement. Something like Adler32 is easier to implement and much faster than any CRC. CRCs are used because there's a mathematical reason to think they'll make particularly good error detecting codes in a channel that introduces very few one-bit errors. I am pretty sure about this too.

- FTR, I'm not advocating the use of CRCs - I'd like to see real MACs used on most channels. Note that CRC32 takes 4.8 cycles/byte; Poly1305 runs at 3.8 cycles/byte; Adler32 1.3 cycles/byte. — ciphergoth 09:53, 8 February 2006 (UTC)

- I can't comment on Adler32, but CRC is far simpler and faster than a cryptographic hash function, and much easier to implement in hardware. It's also far more likely to let an error slip through than a cryptographic hash, for reasonable sizes of each (32 bits for CRC, 128-256 for a hash). There are standard ways of creating errors which will pass CRC; find /any/ error which passes HMAC and you can become instantly famous. You certainly won't find it by random guessing; 2^-128 is far too small a probability. Here are benchmarks for CRC-32 vs. SHA-1 on a 100MB file on my machine:

- [jason@erg] ~/tmp$ cfv -t crc -C foo

- tmp.crc: 1 files, 1 OK. 0.427 seconds, 228625.5K/s

- [jason@erg] ~/tmp$ cfv -t sha1 -C foo

- tmp.sha1: 1 files, 1 OK. 2.241 seconds, 43585.7K/s

- As to using truncated (to say, 32 bits) cryptographic hashes as a CRC replacement, I'm not even going to go there because it confuses the purpose of both CRC and hash functions. Use hash functions if you want serious integrity against both glitches and malicious adversaries. Use CRCs if you want a cheap way to just avoid common glitches. Lunkwill 20:15, 8 February 2006 (UTC)

- I agree with everything you say here; I'm just trying to set out why CRCs are popular for certain kinds of protocol. Against an active attacker a CRC is no good as you rightly point out, but against a channel that introduces occasional one-bit errors a CRC might be the right check to choose for reasons other than speed or convenience of implementation. TBH, I think the time when they might be useful is past; you should always be using a MAC to protect against deliberate attack (and you will then also be protected against natural errors), and if you have a problem with frequent bit errors then you need an error correcting code, not a CRC. But it's a misrepresentation to say that CRCs are used solely for reasons of speed and convenience; they have certain advantages when detecting natural sources of error and that's why they've historically been used. That's all my point is.

- Oh, and given the MAC key, it's now not too hard to construct an error which passes HMAC-MD5, and HMAC-SHA1 can't be far off. Of course things are rather different if you don't have the key :-) — ciphergoth 22:27, 8 February 2006 (UTC)

- CRCs can't directly be used for error correction? The Header Error Correction article implies that some ATM hardware does directly use CRCs for correcting single-bit errors. Is that something that needs to be fixed?

- But I agree with your general conclusion:

- CRCs were historically used to detect (natural) errors, because they have a stronger guarantee on detecting many kinds of commonly-occurring errors than other faster, easier-to-implement check codes such as Adler-32 and longitudinal redundancy check.

- However, now we know other error detection and correction techniques that are better than CRCs for correcting natural errors at each single hop between communication nodes.

- MACs are better than CRCs for detecting deliberate attack (and also any natural errors that may have been incorrectly "corrected") following the end-to-end principle.

- So there doesn't seem to be a place for CRCs in new protocols. --68.0.124.33 (talk) 18:08, 23 September 2008 (UTC)
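The guarantee discussed in this thread (short error bursts always change the CRC, whereas an n-bit hash misses any given error with probability about 2^-n) can be checked exhaustively on a small case. The sketch below is only an illustration under my own assumptions: it uses `zlib.crc32`, an arbitrary 16-byte message, and error patterns confined to at most 8 adjacent bits. It tries every such pattern at every position and counts how many leave the CRC unchanged.

```python
import zlib

def flip_burst(data: bytes, start_bit: int, pattern: int, width: int) -> bytes:
    """XOR a `width`-bit error pattern into `data`, beginning at `start_bit`."""
    value = int.from_bytes(data, "big")
    shift = len(data) * 8 - start_bit - width
    return (value ^ (pattern << shift)).to_bytes(len(data), "big")

message = b"sixteen byte msg"      # arbitrary 16-byte example message
total_bits = len(message) * 8
original_crc = zlib.crc32(message)

checked = 0
undetected = 0
for width in range(1, 9):                          # burst widths 1..8 bits
    for start in range(total_bits - width + 1):
        # A burst of exactly `width` bits has both its first and last bit set.
        for pattern in range(1 << (width - 1), 1 << width):
            if width > 1 and not pattern & 1:
                continue
            checked += 1
            if zlib.crc32(flip_burst(message, start, pattern, width)) == original_crc:
                undetected += 1

print(f"{undetected} of {checked} short bursts went undetected")
```

The count of undetected bursts should be zero. A 32-bit truncated cryptographic hash would be expected to miss roughly one error in 2^32 regardless of the error's shape, which is the distinction ciphergoth is drawing. None of this says anything about deliberate tampering, where a CRC offers no protection.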

Hash functions based on block ciphers

I have a slight discomfort with this section that I don't know how to resolve. The fact is that most modern hash functions (MDx, SHA-x, Tiger, Whirlpool at least) are based on block ciphers in either Davies-Meyer or Miyaguchi-Preneel mode; it's just that they use custom block ciphers designed for the application rather than, say, AES. I don't know how to change the article to express this. — ciphergoth 09:06, 6 March 2006 (UTC)

- We do mention that fact in the Merkle-Damgård article: "The compression function can either be specially designed for hashing or be built from a block cipher. In many cases, including the SHA-1 and SHA-0 ciphers, the compression function is based on a block cipher that is specially designed for use in a hash function."

- But saying "a block cipher specially designed for the hash function together with Miyaguchi-Preneel" at least to me means about the same thing as "the compression function can be specially designed for hashing". (Of course with a little less detail.) And actually, the compression function can either be specially designed, or an existing block cipher, or even be made from a stream cipher.

- Personally I think we should keep it simple here in the hash article (as we do now), since otherwise it becomes far too long. Instead we should leave the in-depth details to the Merkle-Damgård article.
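To make the "compression function built from a block cipher" idea in this thread concrete, here is a toy sketch of the Davies-Meyer construction, H_i = E_{m_i}(H_{i-1}) XOR H_{i-1}, iterated in Merkle-Damgård fashion. Everything specific is an assumption made for illustration: the cipher is an XTEA-style round function written out by hand (the byte order is my choice, so do not expect published test vectors to match), and the all-zero initial value, the padding rule and the name `toy_hash` are hypothetical. With a 64-bit output this is far too short to be secure; it only shows the wiring.

```python
import struct

MASK32 = 0xFFFFFFFF

def xtea_encrypt_block(block: bytes, key: bytes, rounds: int = 32) -> bytes:
    """Encrypt one 8-byte block under a 16-byte key (XTEA-style rounds)."""
    v0, v1 = struct.unpack(">2I", block)
    k = struct.unpack(">4I", key)
    delta, total = 0x9E3779B9, 0
    for _ in range(rounds):
        v0 = (v0 + ((((v1 << 4) ^ (v1 >> 5)) + v1) ^ (total + k[total & 3]))) & MASK32
        total = (total + delta) & MASK32
        v1 = (v1 + ((((v0 << 4) ^ (v0 >> 5)) + v0) ^ (total + k[(total >> 11) & 3]))) & MASK32
    return struct.pack(">2I", v0, v1)

def davies_meyer_compress(state: bytes, message_block: bytes) -> bytes:
    """H_i = E_{m_i}(H_{i-1}) XOR H_{i-1}; the message block is used as the key."""
    encrypted = xtea_encrypt_block(state, message_block)
    return bytes(a ^ b for a, b in zip(encrypted, state))

def toy_hash(message: bytes) -> bytes:
    """Iterate the compression function over 16-byte blocks with length padding."""
    padded = message + b"\x80"
    padded += b"\x00" * (-(len(padded) + 8) % 16)
    padded += struct.pack(">Q", len(message) * 8)   # message length in bits
    state = bytes(8)                                # assumed all-zero initial value
    for i in range(0, len(padded), 16):
        state = davies_meyer_compress(state, padded[i:i + 16])
    return state

print(toy_hash(b"abc").hex())
```

The feed-forward XOR is what makes the step hard to run backwards even though the cipher itself is invertible by anyone holding the key, and here the key is the public message block.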

Jacksum

On March 27 I removed about a dozen of links from Wikipedia like this one from WHIRLPOOL:

- Jacksum — by Dipl.-Inf. (FH) Johann N. Löfflmann in Java. Various message verification functions, including all three revisions of WHIRLPOOL. Released under the GPL.

Jonelo (talk · contribs), the author of Jacksum, has in most cases restored these links; see his contributions. I would be interested to know what other Wikipedia editors feel about this dispute. There is some discussion of this on his Talk page. My own feelings follow.

- Omit the links - I do not think it is appropriate to pepper Wikipedia with links to your software, even when it is open source. — ciphergoth 19:47, 30 March 2006 (UTC)

- The only place where I would be comfortable with a link to this software is in List of cryptographic software. Arvindn 00:13, 31 March 2006 (UTC)

- Jonelo should not be reverting links to his site. We can be a little heavy-handed with that, if necessary, because our policy on external links is very clear, saying that editors should avoid adding: "3. Links that are added to promote a site" and "9. A website that you own or maintain (unless it is the official site of the subject of the article). If it is relevant and informative, mention it as a possible link on the talk page and wait for someone else to include it, or include the information directly in the article." It goes on to say that, "NOTE relating to items #3 and #9: Because of neutrality & point-of-view concerns, a primary policy of wikipedia is that no one from a particular site/organization should post links to that organization/site etc. Because neutrality is such an important -- and difficult -- objective at wikipedia, this takes precedence over other policies defining what should be linked. The accepted procedure is to post the proposed links in the Talk section of the article, and let other - neutral - wikipedia editors decide whether or not it should be included." — Matt Crypto 06:52, 31 March 2006 (UTC)

- As the author of Jacksum I would like to add a few comments. It is true that I added the Jacksum links in October 2004. See also the discussion at my Talk page. I have also changed the description from time to time to keep it correct. However, the need to restore a link has occurred only twice since 2004. See also my contributions. And according to the feedback that I have received, it seems that users have found those links quite useful. Well, all Wikipedia links to Jacksum are removed for now, and I'm not going to add the links again, because I really don't want to violate the policy of Wikipedia ("editors should avoid adding a website that you own or maintain"). However, I think that open source, free, and platform-independent checksum software is something that could help Wikipedia readers. Therefore I hope that there are at least a few Wikipedia users who agree with me, so that the Jacksum links can be restored some time again. The link to Jacksum is http://www.jonelo.de/java/jacksum . Maybe it is wise to shorten the description a little bit. Thanks for your support. Jonelo 19:13, 31 March 2006 (UTC)

- Personally, I prefer such links, so long as they are useful. I didn't see a link to "list of cryptographic software" anywhere on the page, and hence didn't find any software for Whirlpool. However, with people posting Whirlpool software, I get my information and applications from a single page. It's one of the things I love about Wikipedia: I get the information, then I get the websites or apps that put it into practice. I do see good reason to regulate these things, but I think that at least the best software should be on the page. If anyone disagrees, then put a link to that list of software on the Wikipedia article of every cryptographic algorithm or topic, that way the casual Wikipedia user won't miss out. I am glad Crypto++ was on the AES article, or I would have never known it existed. —Preceding unsigned comment added by 76.107.167.109 (talk) 05:56, 13 March 2008 (UTC)

Effective size?

We need a column for effective size to give an indication of each algorithm's quality. For instance, a perfect 128-bit hash would take 2^64 operations to find a collision. Because e.g. SHA-1 takes 2^63 operations to find a collision, its effective size would be 126 bits. Otherwise, the reader has to visit each article one-by-one in order to get an idea of how cryptographically broken each algorithm is. --Damian Yerrick (☎) 02:40, 3 June 2006 (UTC)

- I think such a summary would be more misleading than useful. My experience with eSTREAM has been that it's best to discuss security status on the page for the relevant primitive, where you have the space to do it properly. — ciphergoth 08:56, 3 June 2006 (UTC)
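For reference, the arithmetic behind the proposed "effective size" column is just the birthday bound turned around: an ideal n-bit hash needs about 2^(n/2) operations to find a collision, so an attack costing W operations corresponds to an ideal hash of 2·log2(W) bits. A minimal sketch follows; the function name is hypothetical and the attack costs are the ones quoted in this thread, not independently verified figures.

```python
import math

def effective_bits(collision_work: int) -> float:
    """Digest size of an ideal hash whose birthday bound equals `collision_work`."""
    return 2 * math.log2(collision_work)

print(effective_bits(2 ** 64))   # ideal 128-bit hash
print(effective_bits(2 ** 63))   # the SHA-1 collision cost quoted in this thread
```

This only captures collision resistance, which is part of why a single number could mislead: a function can have a weakened collision bound while its preimage resistance is untouched.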

Revealing properties?

Wouldn't a good property of a hash function be the ability to show, given a string X, that only a string of its length (or a string that's an anagram of that one, or which starts with the same character as that one, or something) could result in the hash it results in, without revealing the string itself (like revealing that the MD5 hash 6cd3556deb0da54bca060b4c39479839 must belong to a string 13 characters long)? --Ihope127 00:57, 4 July 2006 (UTC)

Are hash methods theoretically viable?

There is no underlying theoretical discussion included in the article. Many claim it is not possible at all to design a perfectly collision-free and one-way hash method, so all hash algorithms are futile for security use. I think this is related to the P <-->NP problem. 195.70.48.242 20:36, 5 December 2006 (UTC)

- Actually, I'd say the article does a reasonable job of expressing the theoretical basics. If you want more, you can read up on random oracles or go to the Handbook of Applied Cryptography. "Perfectly collision-free" is meaningless with respect to hash functions (consider a hash with 128-bit output. Feed it all 256-bit strings, and each will have an average of 2^128 collisions, even if it's a true random oracle.) P<->NP is irrelevant. And "futile for security use" doesn't really make sense either -- almost all cryptography is based on well-tested assumptions about what's hard and what's not. That said, there is lots of work to be done in strengthening our definition and implementation of cryptographic hashes, and that work is being aggressively undertaken now that sha1 and md5 are creaking ominously. Lunkwill 19:46, 7 December 2006 (UTC)
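The counting argument in the reply above (collisions must exist whenever there are more inputs than outputs) can be seen at a scale small enough to enumerate. The sketch below is an illustration under my own assumptions: it uses the first byte of SHA-256 as an 8-bit "hash" and feeds it every 16-bit input, which is the same situation as 256-bit inputs into a 128-bit hash, shrunk by a factor that makes it runnable.

```python
import hashlib
from collections import Counter

def tiny_hash(data: bytes) -> int:
    """First byte of SHA-256: an 8-bit 'hash' used purely for illustration."""
    return hashlib.sha256(data).digest()[0]

# Hash every 16-bit input and count how many inputs land on each 8-bit output.
counts = Counter(tiny_hash(i.to_bytes(2, "big")) for i in range(2 ** 16))

average = sum(counts.values()) / 2 ** 8
print(f"average inputs per output value: {average}")
print(f"fullest bucket: {max(counts.values())}, emptiest: {min(counts.values())}")
```

The average is forced to be 2^16 / 2^8 = 256 no matter how good the function is, so "collision-free" cannot be the goal; what matters is that nobody can feasibly find the collisions.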

NIST has been having some workshops on cryptographic hashes

NIST has been having some workshops on cryptographic hashes. There have been a few ideas for new hash functions submitted; three have web pages and reference code: LASH, RadioGatún, and EDON-C/EDON-R (scroll down and look for "Two infinite classes of cryptographic hash functions"). Samboy 05:29, 21 December 2006 (UTC)

One-way encryption

Many people in the industry refer to cryptographic hash functions as "one-way encryption". I added this and also added references to a couple reputable books that talk about this. My addition was removed. This removal was unwarranted. The fact that they are called this is not incorrect (obviously because I referenced it). The notion that it is an incorrect definition is arguable and valid. The person who removed my edit should add a reputable source that defines it otherwise and state that it is disputed and/or controversial. Javidjamae 04:33, 18 June 2007 (UTC)

- I see what you mean. However, there is some considerable confusion on how the term is used. In some cases one-way encryption refers to an encryption scheme that simply is one-way as opposed to stronger security notions such as security against chosen ciphertext attacks. As far as I can see, the most frequent use of "one-way encryption" is for applying a one-way function to passwords. Cryptographic hash functions are frequently (but not always) used for this process. An example where a hash function is not used is the DES-based password storage in UNIX (i.e., encrypt an all-zero block with the password as a key). This function is reasonably one-way, but not collision resistant and hence not a cryptographic hash function. Hence what many people mean when they talk about "one-way encryption" is something slightly different from a cryptographic hash function. There are people that do not distinguish between the two concepts, but that does not mean we have to copy that into Wikipedia. 85.2.88.39 12:21, 18 June 2007 (UTC)

- Oh, this was interesting. I often think of and use hashes as a kind of "one-way encryption" functions. And I use them in several ways as one-way encryption, not only for password hashing. And I think many crypto users/programmers think of hashes as one-way encryption.

- Currently we don't have a one-way encryption article here at Wikipedia. I think a solution for the moment could be to make a separate section about "one-way encryption" where the different views on this can be expressed. And if the "one-way encryption" section grows large we can make it a separate article. For now I made a redirect from one-way encryption to this article. I also added a mentioning of "one-way encryption" in the section "Applications of hash functions". Both to show a usage of the expression and make any one searching for the expression find this hash article.

- --David Göthberg 14:44, 18 June 2007 (UTC)

- Encryption, one-way functions and cryptographic hash functions are distinct concepts that should not be mixed with each other. In order to design a protocol it is important to recognize what the (assumed) properties of the primitives used in the protocol are, what properties are not present and what properties are needed for the protocol to be secure. For example, I think of cryptographic hash functions simply as functions having the property that it is difficult to find two inputs that hash to the same result. Some hash functions such as the SHA family may have further properties that make them suitable for applications such as HMAC or password storage. Other hashes don't. An example is VSH [5]. VSH comes with a strong argument for its collision resistance. But it has an undesirable property allowing some short messages to be inverted. (This is pointed out in the paper together with a warning not to blindly use the function for applications it was not defined for.) Hence not every hash function is a good choice for password storage. I still have problems understanding what people actually mean when they talk about one-way encryption, but I get the impression that most people using the term don't know either. Rather than a redirect it might be better to have some sort of disambiguation page. 85.2.67.208 18:22, 19 June 2007 (UTC)

Definition of collision resistance

I reverted the 2007 March 18 modification to collision resistance. The attacker is free to choose both m1 and m2. He need not be confined to an m1 fixed by others.

NormHardy 00:21, 20 July 2007 (UTC)

Image choice

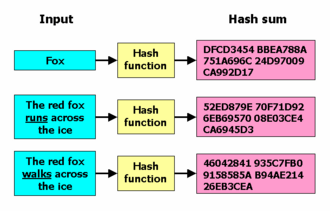

Some days ago User:Jafet.vixle changed the image used in the article. I disagree with the change.

I think Jafet's image is much harder to understand for beginners. Among other things it does not show generic terms such as "hash function" and "hash sum", instead it uses the name of an arbitrarily chosen hash function "Whirlpool". And it doesn't show the process, that is that input is fed to the hash function that then produces the hash sum.

--David Göthberg 16:26, 21 August 2007 (UTC)

- I agree. The older picture is preferable. 169.231.5.121 07:22, 22 August 2007 (UTC)

- Since no one else said anything I switched back to my image in the article. --David Göthberg 15:15, 26 August 2007 (UTC)

- Since the article refers to the 'input' as the 'message', change the label to 'input message'. — Preceding unsigned comment added by 82.31.143.204 (talk) 20:37, 30 October 2012 (UTC)

Clarification required

Can someone knowledgeable please clarify the units used in the "List of cryptographic hash functions" section? For instance, does SHA-1 output 160 bits or 160 bytes? —Preceding unsigned comment added by 196.7.19.250 (talk) 15:44, 26 October 2007 (UTC)

It's supposed to be bits. (anonymous)

I don't understand the part about using multiple hash functions to increase security. If it doesn't increase security, what does it mean that SSL uses it in case one of MD5 and SHA-1 is broken?

CISSP?

Why does Category:CISSP apply here? Just because hash functions are related to Internet security and knowledge of them is needed for taking that test? There's a lot of software protocols that rely on hash functions, courses that teach hash functions, books that mention them -- do we link those as well? I'm not convinced that people reading the hash function page will find value in that category. Mentioning hash functions as being part of the CISSP test, on the CISSP page, might be more appropriate. NoDepositNoReturn (talk) 18:25, 29 June 2008 (UTC)

Incorrect re Debian and concatenated hashes

The article is wrong about why Debian Packages files contain multiple hashes. Apt only checks the strongest hash that it knows how to deal with. For current apt, this is the SHA256 hash. For older apts, it is the SHA1 or MD5SUM. Weaker hashes are ignored. The weaker hashes are left in the files so that older versions of apt can still verify the files during upgrades.

Proof: Line 1324 of acquire-item.cc in apt's source. ExpectedHash is set to only the strongest hash found. —Preceding unsigned comment added by 67.223.5.142 (talk) 19:39, 31 December 2008 (UTC)
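The behaviour described here (check only the strongest hash the client understands, ignore the rest) can be sketched as follows. This is not apt's code: the preference list, the dictionary layout and both function names are hypothetical, chosen only to mirror the description above.

```python
import hashlib

# Strongest first. An older client would simply have a shorter list.
PREFERENCE = ["sha256", "sha1", "md5"]

def pick_expected_hash(listed, supported=PREFERENCE):
    """Return (name, hex digest) for the strongest listed hash the client supports."""
    for name in supported:
        if name in listed:
            return name, listed[name]
    raise ValueError("no supported hash listed")

def verify(data, listed, supported=PREFERENCE):
    """Check `data` against the single strongest hash; weaker entries are ignored."""
    name, expected = pick_expected_hash(listed, supported)
    return hashlib.new(name, data).hexdigest() == expected

package = b"example package contents"
listed = {
    "md5": "0" * 32,   # deliberately wrong: never consulted by a current client
    "sha1": hashlib.sha1(package).hexdigest(),
    "sha256": hashlib.sha256(package).hexdigest(),
}
print(verify(package, listed))                   # current client: uses sha256
print(verify(package, listed, ["sha1", "md5"]))  # older client: uses sha1
```

Under this scheme the weaker hashes add nothing to the security of a current client, which is the point being made against the article's wording: the extra entries exist for compatibility, not as a concatenated check.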

info hash - infohash

There are no current WP articles about the so-called "info hash", which is used with torrents. The passing mention in BitTorrent protocol encryption seems to be the only current content. If you understand this subject, please add the term to this article, in proper context.

"The info hash is strictly for the bittorrent client. The BT Client you use uses the info hash to make sure that what you are downloading is legit from the server's point of view. You don't do anything with it." -96.237.7.209 (talk) 13:59, 18 January 2009 (UTC)

Randomized hashing, 2008 MD5 SSL cert break

- Randomized hashing is just a fancy name for signers putting a random number in the content to be hashed for a digital signature, more or less. But it would have made the 2008 SSL cert hack impossible, because the attackers wouldn't be able to choose exactly what content would be signed, and it's seemingly caught the attention of NIST because it could make signatures more resilient against algorithm breaks. (To replicate the 2008 MD5 crack with randomized hashing, attackers would need either a preimage attack or a collision attack that works even when part of the hash input is unknown.) The researchers who did the 2008 crack suggested something similar -- ensure the cert serial number is random.

- The 2008 MD5 SSL cert break drives home that hash functions matter; seems like it deserves some mention here. You don't know what you got 'till it's gone; you don't think about plumbing until it breaks; and we all don't appreciate hash functions until some researchers break the Internet because we're not using good ones. :)

It would be fun, if hopelessly pedantic, to flag exactly which uses require hash functions to be merely pretty-random-looking (e.g. integrity checks), preimage resistant, collision resistant, pseudorandom, or based on strong block ciphers.

Notes to self, but if anyone wants to pick this up I'm tickled.

67.119.195.43 (talk) 04:53, 2 February 2009 (UTC)
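A rough sketch of the idea as described in this thread, with the signer choosing a fresh random value that goes into the hash input. This is a simplified salt-prefix illustration, not any standardized randomized-hashing construction; the function names, the 16-byte salt length and the use of SHA-256 are all assumptions.

```python
import hashlib
import secrets

def randomized_digest(message, salt=None):
    """Return (salt, SHA-256(salt || message)); the signer, not the requester, picks the salt."""
    if salt is None:
        salt = secrets.token_bytes(16)
    return salt, hashlib.sha256(salt + message).digest()

def check(message, salt, digest):
    """Recompute the digest from the published salt, as a verifier would."""
    return hashlib.sha256(salt + message).digest() == digest

salt, digest = randomized_digest(b"certificate contents")
print(check(b"certificate contents", salt, digest))
print(check(b"forged contents", salt, digest))
```

The salt has to travel with the signature so verifiers can recompute the digest. Because the attacker cannot predict it when preparing a colliding pair of messages, an offline collision search against the exact signed bytes no longer works.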

Concatenation of hash functions

I disagree with the following claim in the text:

- Concatening outputs from multiple hash functions provides security at least as good as the strongest of the algorithms included in the concatenated result.

This may be true for collision resistance, but is incorrect with regard to preimage resistance. E.g. if one hash function is SHA-1 and the other function is just the identity function then it is trivial to find x from SHA-1(x) || x. The potential one-wayness of SHA-1 doesn't help to protect x here at all. After all, preimage resistance is a requirement for a secure hash function. 85.0.14.230 (talk) 08:46, 2 February 2009 (UTC)

- I believe you're right, the text needs to clearly source this claim and specify which types of attacks it's true for; it's clear per your example that a preimage attack on a concatenation of hashes need only examine the weakest of them, and may in fact be able to do even better. Dcoetzee 10:34, 2 February 2009 (UTC)

- I agree that the statement only holds for collision resistance. Proof of security of concatenated hashes http://eprint.iacr.org/2008/075. Also, it won't provide security "at least as good" but "at most." I'm going to attempt some cleanup on this one but suspect it'll take multiple iterations to fully correct it. Quelrod (talk) 19:01, 31 July 2010 (UTC)

- this statement holds for collision resistance and second pre-image resistance. The citation suggested above (http://eprint.iacr.org/2008/075), however, deals with a different issue. The correct citation imho is my paper, which is also much earlier but here in the journal version: Herzberg, Amir. "Folklore, practice and theory of robust combiners." Journal of Computer Security 17.2 (2009): 159-189. — Preceding unsigned comment added by Amirhe (talk • contribs) 21:32, 29 August 2018 (UTC)
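The SHA-1(x) || x counterexample raised at the top of this thread can be sketched in a few lines of Python. This is only an illustration: the function names `concat_hash` and `recover_preimage` are made up here, and the "identity hash" is the deliberately degenerate second component from that comment, not a real hash function.

```python
import hashlib

SHA1_LEN = hashlib.sha1().digest_size  # 20 bytes

def concat_hash(message: bytes) -> bytes:
    """Hypothetical combiner: SHA-1 digest followed by the identity 'hash'."""
    return hashlib.sha1(message).digest() + message

def recover_preimage(combined: bytes) -> bytes:
    """Drop the SHA-1 part; the identity part is the original message."""
    return combined[SHA1_LEN:]

secret = b"attack at dawn"
output = concat_hash(secret)

# The one-wayness of SHA-1 does not protect the message at all.
assert recover_preimage(output) == secret
```

A collision in the combined output, by contrast, would still require a simultaneous collision in both components, which is why the claim is defensible for collision resistance but not for preimage resistance.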

two same things

- it is infeasible to modify a message without changing its hash,

- it is infeasible to find two different messages with the same hash.

aren't they the same thing? Twipley (talk) 22:59, 22 July 2009 (UTC)

No. To violate the first property, you need a method to find a message with a specific hash value (that of the first message). To violate the second property, you need a method to find any two messages that share a hash value - any two messages, and any hash value. For a cryptographic hash function to be secure, there shouldn't be any ("feasible") method of doing either. — Gavia immer (talk) 23:07, 22 July 2009 (UTC)

They are the same thing. If the first (or the second) property is violated, the second (or the first) is violated also. At least from a purely logical point of view, they are strongly correlated statements and neither of them can stand alone. —Preceding unsigned comment added by 99.240.92.102 (talk) 15:59, 10 November 2009 (UTC)

- WP:NOTAFORUM, but finding an attack that violates the first item is called a "pre-image" attack; finding an attack that violates the second item is a "collision" attack. Collision attacks are easier to find; MD5 is vulnerable to collision attacks (finding two different messages with the same MD5 value) but there is no known pre-image attack against MD5--no one has figured out how to make a file with the MD5 sum 0102030405060708090a0b0c0d0e0f for example. Samboy (talk) 16:41, 10 November 2009 (UTC)
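The difference between the two properties discussed in this thread can be made concrete with a toy experiment. The sketch below assumes a deliberately weak 16-bit hash (the first two bytes of SHA-256), chosen only so that both searches finish quickly; `tiny_hash`, `find_collision` and `find_second_preimage` are illustrative names, not anything from the article.

```python
import hashlib
from itertools import count

def tiny_hash(message: bytes) -> bytes:
    """Toy 16-bit hash: the first 2 bytes of SHA-256 (illustration only)."""
    return hashlib.sha256(message).digest()[:2]

def find_collision():
    """Find ANY two distinct messages with the same tiny_hash.

    Returns (message_a, message_b, number_of_messages_tried).
    """
    seen = {}
    for i in count():
        m = str(i).encode()
        h = tiny_hash(m)
        if h in seen:
            return seen[h], m, i + 1
        seen[h] = m

def find_second_preimage(target: bytes):
    """Find a different message whose tiny_hash equals that of `target`.

    Returns (message, number_of_messages_tried).
    """
    goal = tiny_hash(target)
    for i in count():
        m = str(i).encode()
        if m != target and tiny_hash(m) == goal:
            return m, i + 1

if __name__ == "__main__":
    a, b, tries = find_collision()
    print("collision after", tries, "tries")
    m, tries = find_second_preimage(b"a fixed message")
    print("second preimage after", tries, "tries")
```

For an n-bit hash the collision search is expected to need on the order of 2^(n/2) tries (the birthday bound), while hitting one specific hash value is expected to need on the order of 2^n tries, so the first search typically finishes far sooner than the second.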

"with flaws"

What is meant by the notation "with flaws" in the table at the end of this article? I think it means that someone once thought they had a successful attack on the scheme, but they were wrong: their attack does not work. A non-working attack is not an attack at all. If I'm understanding correctly, it is highly misleading to include any "with flaws" figures in that table: they should be deleted. —Preceding unsigned comment added by 128.32.153.194 (talk) 00:34, 6 March 2010 (UTC)

I agree that attacks with flaws should not be included. I did update some of these with newer research. You are correct that "with flaws" means that someone published an attack, but in most cases when another researcher tried to duplicate the result they found serious problems with it. In fewer cases the actual authors of the published research discovered the flaws themselves and made that information public. In the latter case, where the author found the flaws, the paper is generally withdrawn from places like http://eprint.iacr.org/complete/ Quelrod (talk) 18:39, 31 July 2010 (UTC)

Keeping the latest attacks in sync

I'm curious if there is some way to either split a few pages or some method to keep pages with the same content up to date. For instance there is at least: Cryptographic_hash_function Comparison_of_cryptographic_hash_functions Hash_function_security_summary

They aren't always all in sync with each other and that's not to mention the pages for each hash function. Thoughts? Quelrod (talk) 19:34, 31 July 2010 (UTC)

Binary hashing method

I'm going to remove the 'binary hashing method' proposed by Daymon Schroeder from this page. This is an unpublished hash function. It is poorly designed and collisions are almost trivial to find. E.g. the two strings "10608939The quick brown fox jumps over the lazy dog" and "10308969The quick brown fox jumps over the lazy dog" both have the same hash of length 32 "+!Yguf*aNV!P#Hiw*{*[#~*;1NfL+!%%". I've verified the hash on the web page of the author. —Preceding unsigned comment added by 85.1.57.131 (talk) 10:25, 28 December 2010 (UTC)

- Please do not remove comments. If you don't agree with the comment and think BHM should be listed here, then write a reply. Note that the code provided for BHM is of poor quality and not all implementations give the same results. This may be due to rounding errors, since the hash function uses floating point arithmetic. If you think the example above is not a collision, then specify what you would consider a reference implementation. In any case there are alternative strings that seem to give collisions: for example, take any string longer than the length of the hash and permute the characters at the end. E.g. 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx12' and 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx21' give the same hash of size 32 with the Python implementation. 83.78.157.215 (talk) 10:28, 29 December 2010 (UTC)

Illustration flaw

The present Alice-Bob illustration appears flawed. For example, consider the following scenario: Alice creates and stores a meaningless string, call it A, and sends hash(A) to Bob (under the pretense of the example). When Bob comes to claim he has achieved the solution, Alice simply reveals her nonce as whatever she wants (W) - A. Bob will then (incorrectly) verify that Alice had W all along. In this context the hash is useless: Alice is not committed to anything by revealing her hash. In the context of the example the implications are unclear, as it depends on whether Bob reveals the solution first or not. However, we must assume he does not reveal the number before Alice, since if he does, Alice simply takes W as the solution. Hence the whole nonce-and-reveal step is misleading: there is no point at which to reveal it, or at least it must be revealed when Bob wants it, and therefore it may as well be excluded.

This could be fixed by requiring Alice to divulge the hash of the nonce as well as the hash of the sum of the nonce and solution. — Preceding unsigned comment added by 155.198.75.173 (talk) 14:36, 2 June 2011 (UTC)
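A rough Python sketch of the objection and of the proposed fix follows. It makes several assumptions that are not in the article's illustration: values are integers, "sum" means ordinary integer addition (so the forged nonce is A - W under this sign convention), SHA-256 stands in for the unspecified hash, and the helper names `h` and `verify_fixed` are invented here.

```python
import hashlib

def h(value: int) -> str:
    """SHA-256 of the decimal representation (an assumed encoding)."""
    return hashlib.sha256(str(value).encode()).hexdigest()

# Flawed scheme: Alice publishes only hash(nonce + solution).
A = 123456789            # meaningless number Alice makes up
commitment = h(A)        # sent to Bob; Alice knows no solution at all

W = 42                   # the real solution, learned by Alice later
fake_nonce = A - W       # chosen after the fact so the sum matches
assert h(fake_nonce + W) == commitment   # Bob's check wrongly passes

# Proposed fix: Alice must also publish hash(nonce) up front.
def verify_fixed(nonce_commit: str, sum_commit: str,
                 nonce: int, solution: int) -> bool:
    """Accept only if both published hashes match the revealed values."""
    return h(nonce) == nonce_commit and h(nonce + solution) == sum_commit

N = 987654321                         # nonce Alice had to fix in advance
nonce_commit, sum_commit = h(N), h(A)
# The same trick now fails: fake_nonce does not hash to nonce_commit.
assert not verify_fixed(nonce_commit, sum_commit, fake_nonce, W)
```

With both hashes published, cheating would require a second nonce with the same hash as N, that is, a second preimage.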

Meaning of collision attacks

The hash function table is missing a link to the collision attack against Haval. Perhaps more importantly, it misinterprets the reference to an alleged collision attack against Whirlpool: what is achieved in the paper by Lamberger et al. is a *distinguisher* against Whirlpool, based on a *near-collision* against the underlying *compression function*. It only means one can tell a Whirlpool digest from a random 512-bit string with an effort of 2^176 steps (checking the documentation of the system using the hash is likely to yield the same result much faster). This is entirely different from stating that there is a collision attack against the *hash function* itself; the authors of [1] neither do it nor claim they can do it, contrary to what is claimed on the table in this article as it is currently written. — Preceding unsigned comment added by 189.38.230.167 (talk) 01:20, 9 December 2011 (UTC)

RIPEMD table entry wrong

In the table, it says that no variant of RIPEMD has collisions. Yet in the RIPEMD article, there is a reference citing a paper that lists an explicit collision (not sure if it's an old RIPEMD 128 or the new one). And in Comparison of cryptographic hash functions#Cryptanalysis there are collisions cited for RIPEMD 160. Therefore, it's misleading to say that they have no collisions, and the table should be fixed. Since the collisions affect the 128 and the 160 bit versions, how should this be handled? Split into 4 entries? Or merge the ones without collisions together (i.e. 256/320) in one entry? --pgimeno (talk) 23:57, 22 April 2012 (UTC)

- RIPEMD clearly states that "collision was reported for the original RIPEMD". this is the same collision that appears in the tables in Comparison of cryptographic hash functions#Cryptanalysis and in this article. the entry for RIPEMD-160 is for a reduced-round version with only 48 rounds. since the table in this article only contains attacks against the full-round algorithms, the table is correct and does not need to be modified. --MarioS (talk) 14:07, 23 April 2012 (UTC)

- My bad, you're right. Apologies. --pgimeno (talk) 19:43, 24 April 2012 (UTC)

wait, what?

I'm kind of confused... This is my understanding of hashing:

- the computer randomly comes up with a key

- when someone types in a message to be encrypted, it is changed with the key.

- It could just store the stuff in the database, but that would allow a site owner to see a password or something. So, it has to hash it.

- on the other side, the info is un-hashed with that random key. there even the site owner does not know, and it stays private.

Am I right? — Preceding unsigned comment added by 96.5.166.66 (talk) 20:57, 23 April 2012 (UTC)

First sentence incomplete?

This first sentence of this article seems to be an incomplete sentence. The sentence just doesn't make much sense.

- A cryptographic hash function is a hash function, that is, an algorithm that takes an arbitrary block of data and returns a fixed-size bit string, the (cryptographic) hash value, such that an (accidental or intentional) change to the data will (with very high probability) change the hash value.

Khatchad (talk) 21:59, 14 September 2012 (UTC)

Other hash functions

- Can SipHash be added to the list of cryptographic hash functions? Its 64-bit output might not compare well against other, heavier hash functions, but SipHash was defined with cryptographic requirements. — Preceding unsigned comment added by Ydroneaud (talk • contribs) 09:55, 14 December 2012 (UTC)

Link

The statement that " WEP encryption standard, but an attack was readily discovered which exploited the linearity of the checksum" should have a link on linearity to what meaning of linearity is intended. RJFJR (talk) 18:45, 22 July 2013 (UTC)

Definition of "Pre-image resistance"

Here is what this article gives as the definition of "Pre-image resistance":

Given a hash h it should be difficult to find any message m such that h = hash(m). This concept is related to that of one-way function. Functions that lack this property are vulnerable to preimage attacks.

As stated, no hash function could be pre-image resistant. Here is a hash h that is very easy to find a pre-image for: h(0^n). What is implied, of course, is something different, namely that for a random hash h it should be difficult to find any message m such that h = hash(m).

The Wikipedia article on "Preimage attack" gives a better definition: "for essentially all pre-specified outputs, it is computationally infeasible to find any input which hashes to that output, i.e., to find any preimage x given a "y" such that h(x) = y."

I suggest we use this formulation rather than the "Given a hash h" one.

Snapdragon630 (talk) 02:34, 16 October 2014 (UTC)

As proof-of-work

I think we can add an extra application of hash functions as used in proof-of-work system such as hashcash. Manoguru (talk) 13:25, 25 January 2015 (UTC)
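For readers unfamiliar with the idea, here is a minimal proof-of-work sketch in Python. It is a simplification rather than the actual Hashcash stamp format: SHA-256, the decimal nonce encoding, and the names `leading_zero_bits`, `mine` and `check` are all assumptions made for illustration.

```python
import hashlib
from itertools import count

def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of the digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()

def check(data: bytes, nonce: int, difficulty: int) -> bool:
    """Verification costs a single hash evaluation."""
    digest = hashlib.sha256(data + str(nonce).encode()).digest()
    return leading_zero_bits(digest) >= difficulty

def mine(data: bytes, difficulty: int) -> int:
    """Search for a nonce; expected work is about 2**difficulty hashes."""
    for nonce in count():
        if check(data, nonce, difficulty):
            return nonce

if __name__ == "__main__":
    nonce = mine(b"example message", 16)
    print("found nonce", nonce)
```

The asymmetry is the point: finding the nonce takes many hash evaluations, while checking it takes one. This relies on the hash output being unpredictable, so that no strategy beats trial and error.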

trapdoor hash function

I don't know much about this stuff, but it seems to me that a cryptographic hash function is a hash function that is also a trapdoor function. There may be subtleties that make them not exactly the same, but I suggest that a mention of, and link to, this connection with trapdoor functions be made in the introduction to this article. 2601:681:4902:31B8:95CC:E49C:E8F:3502 (talk) 03:31, 4 September 2015 (UTC)

Ambiguous Definition

The current definition reads: "A cryptographic hash function is a hash function which is considered practically impossible to invert, that is, to recreate the input data from its hash value alone." However, for example, on the MD5 Wiki page, we say that "the MD5 message-digest algorithm is a widely used cryptographic hash function," even though "a collision attack exists that can find collisions within seconds". This raises the question: is this definition suitable in a world where hash functions are DESIGNED to have the "one-way hashing" quality even though later on it loses that quality?

I propose the following new definition:

"A cryptographic hash function is a hash function which is designed to be practically impossible to invert, that is, to recreate the input data from its hash value alone."

I also suggest that we add a sentence or a paragraph explaining the fact that not all "cryptographic hash functions" are in fact secure, due to the fact that circumstances sometimes change in a way that causes the "one-way" aspect of the algorithm to no longer be true. — Preceding unsigned comment added by 54.240.196.170 (talk) 16:20, 22 October 2015 (UTC)

Incorrect intro

The introduction says that an ideal cryptographic hash should be quick to compute. This is actually wrong in one primary application of crypto hashes, which is password/authentication systems. In such systems, the number of hashes to compute is relatively low (once per login, roughly), so it's OK for the hash function to take a long time. However, a brute-force password attacker needs to try every possible hash combination to crack passwords, and this attacker greatly benefits from having a fast hash function versus a slow hash function. 165.134.12.132 (talk) 17:10, 21 September 2016 (UTC)

- i don't think it is wrong. a general purpose hash function, which the article is about, must be fast, because there are use cases in which performance matters. if we have fast hash functions, it is possible to make high cost special purpose kdfs based on them. so we actually don't need high cost primitives. cost can always be added later. if you look at any high cost scheme, there is a high performance primitive inside. Krisztián Pintér (talk) 22:06, 21 September 2016 (UTC)

- this is still wrong, here is a reference for clarification: https://www.youtube.com/watch?v=GT_qgImaUS4#t=10m15s - watch until 10m40 - a good hash function should be designed to be sufficiently complex that it cannot be computed quickly. It's not a matter of making it slower later, as someone could just re-implement it efficiently, e.g. in generating a rainbow table. — Preceding unsigned comment added by 129.234.103.198 (talk) 19:19, 10 October 2017 (UTC)
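The point about building a slow, special-purpose scheme on top of a fast primitive can be illustrated with PBKDF2 from Python's standard library, which iterates HMAC-SHA-256 many times. The iteration count below is only an illustrative placeholder, and `hash_password` and `verify_password` are hypothetical helper names.

```python
import hashlib
import hmac
import os

def hash_password(password: str, salt: bytes,
                  iterations: int = 200_000) -> bytes:
    """Derive a slow password hash from the fast SHA-256 primitive."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)

def verify_password(password: str, salt: bytes, iterations: int,
                    expected: bytes) -> bool:
    """Recompute the hash and compare in constant time."""
    candidate = hash_password(password, salt, iterations)
    return hmac.compare_digest(candidate, expected)

salt = os.urandom(16)    # a per-user random salt defeats precomputed tables
stored = hash_password("correct horse", salt, 200_000)
assert verify_password("correct horse", salt, 200_000, stored)
assert not verify_password("wrong guess", salt, 200_000, stored)
```

A legitimate login pays the iteration cost once, while a brute-force attacker pays it for every guess. Re-implementing the inner hash efficiently does not remove the iterations, and the salt means a precomputed table cannot be reused across users.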

SHA-1 Collision Attack

It looks like a successful collision attack against SHA-1 has been run, so this article probably needs an update to the line "Though theoretical weaknesses of SHA-1 exist,[14][15] no collision (or near-collision) has yet been found." 68.116.67.198 (talk) 17:51, 24 February 2017 (UTC)

A small addition: the sentence at the beginning says "a function which is infeasible to invert"; SHA-1 has been broken, so this article needs an update.

External links modified

Hello fellow Wikipedians,

I have just modified 2 external links on Cryptographic hash function. Please take a moment to review my edit. If you have any questions, or need the bot to ignore the links, or the page altogether, please visit this simple FaQ for additional information. I made the following changes:

- Added archive https://archive.is/20121208212741/http://wiki.crypto.rub.de/Buch/movies.php to http://wiki.crypto.rub.de/Buch/movies.php

- Added archive https://archive.is/20121206020054/http://www.guardtime.com/educational-series-on-hashes/ to http://www.guardtime.com/educational-series-on-hashes/

Cheers.—InternetArchiveBot (Report bug) 02:39, 15 August 2017 (UTC)

One-way Function

This article uses the term "one-way function" to mean "a function which is infeasible to invert." This is problematic as the criteria for infeasible, aka intractable, problems are not well-defined while one-way functions have a specific mathematical definition. Furthermore, going by the formal definition of one-way function, it is unknown whether any such functions even exist. It would seem prudent to mention somewhere in the article that when we are referring to one-way functions, we are doing so in an informal manner inconsistent with the precise meaning of the term. Jwuthe2 (talk) 05:40, 12 January 2018 (UTC)

False statement in Properties

"Collision resistance implies second pre-image resistance, but does not imply pre-image resistance."

According to the provided source, collision resistance implies pre-image resistance up to an advantage for the adversary of 2^(n-m), where n is the hash length and m is the domain size (both in bits). Since m is often much larger than n (m >> n), the advantage of the adversary may be, in many cases, negligible and the above statement false. Example: domain size is 2n and hash length is n. Advantage is 1/2^n. Dave4422 (talk) 14:17, 31 January 2018 (UTC)

h(x) := MD5(x) + MD5(x+x) with '+' in the sense of concatenation

Just perfect.

--84.118.82.226 (talk) 12:12, 16 April 2018 (UTC)

Practical infeasibility has NOTHING to do with intractability of complexity theory

There seems to be a general misconception that the notion of "infeasible" in cryptography is the same as (or related to) the notion of "intractable" from complexity theory. (The two were linked in the article.) That is not the case. Complexity theory looks only at the asymptotic behavior of the cost of computing a function as the size of the input goes to infinity. When inverting a cryptographic hash function like SHA or MD5, the input has fixed size. When this is the case, complexity theory says that the problem is trivial, and can be solved in O(1) time.

In fact, complexity theory has nothing at all to contribute to practical cryptography. It cannot prove that functions are practically infeasible to invert. It cannot even prove that SHA is harder to invert than the function "take the first 256 bits of the message".

In fact (as far as I know) there is no mathematical proof that any function is a one-way function. Cryptographic hash functions are "proved" to be robust only "empirically", by resisting attacks by the most clever cryptographers for decades.

--Jorge Stolfi (talk) 06:42, 1 June 2019 (UTC)

Let's keep it balanced

@Intgr: Why choose one property in a list of "desirable properties" to put alongside the three required properties (to quote your source: "The basic (classical) properties a hash function is expected to preserve are: collision resistance, pre-image resistance and 2nd pre-image resistance"). Why stop there and not include, alongside it in the lead, the five other "desirable properties that hash functions should preferably preserve" listed by your source? And yes, "statistical correlation" is ill-defined in the context. Correlation of individual input bits to output bits? Correlation of input texts with output values? The source does not define this, does not expand on the statement, nor does it provide a reference. —Quondum 23:58, 3 July 2019 (UTC)

Use in hash tables

We have a couple of paragraphs on the use of cryptographic hashes in hash tables (from this edit [6]):

One of the main applications of a hash function is to allow the fast look-up of a data in a hash table. Being hash functions of a particular kind, cryptographic hash functions lend themselves well to this application too.

However, compared with standard hash functions, cryptographic hash functions tend to be much more expensive computationally. For this reason, they tend to be used in contexts where it is necessary for users to protect themselves against the possibility of forgery (the creation of data with the same digest as the expected data) by potentially malicious participants.

These are unsourced. The second paragraph is unclear: what is the 'expected data' in the context of a hash table? Also, the claim that 'hash functions lend themselves well to this application' is questionable, for the exact reason given in the second paragraph: performance. Given the claims are questionable, unclear and unsourced, I plan to remove both paragraphs. - Crosbie 02:36, 15 August 2019 (UTC)

Define as a regular hash function with extra properties

It used to be the case but got lost apparently here WofWca (talk) 19:24, 18 November 2022 (UTC)

- The current text is indeed not the best:

- it talks about "inversion". In almost any case, any hash cannot be "inverted" (original text reconstructed), which is also not needed, as that plaintext typically is already known to the attacker, the attacks are going along the lines of constructing some new text with the same hash (preimage attack) or finding two new texts with the same hash (collision attack).

- On top of it, the reference goes to some obscure Web document that apparently does not even mention words "reverse" or "invert".

- It is better to base the definition of such a simple term on a textbook, IMO. I propose to use the standard handbook by Menezes et al., [7], section 1.9 (page 33 in a typical edition). The definition there is along the lines suggested by you:

- the crypto hash is a hash

- it has to be resistant to the collision and preimage attacks.

- --Dimawik (talk) 02:07, 22 November 2022 (UTC)

- Seeing no objections in three weeks, implemented the proposal. Dimawik (talk) 05:11, 15 December 2022 (UTC)
