Wikipedia talk:The Wikipedia Adventure/Impact

Work log: Monday, January 13th

I sat down to do some work with experimental data around TWA. In this work session, I applied some of the same metrics we use for analyzing the effects of features at the WMF (e.g. VE, AFT, Onboarding) to analyze the effectiveness of TWA. All of these metrics were generated over user activity in the week immediately following registration. Experiment = TWA invite. Control = no TWA invite.

Quick take-aways:

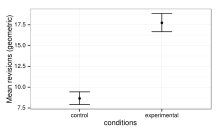

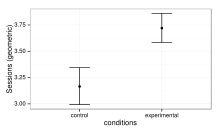

- TWA invitees make substantially more edits, come back for more sessions, and spend more time editing.

- TWA invitees did not make more edits to articles in their first week.

- TWA invitees were only marginally more likely to productively contribute to an article in their first week.

Next time, I'd like to look at how much noise (in the statistical sense) I can remove from the data by looking at how many edits the user made in his/her first edit session. I'd also like to look at the survival rate of these editors to see if TWA newcomers are more likely to stick around. --Halfak (WMF) (talk) 20:41, 13 January 2014 (UTC)

Work log: Friday, January 17th

I'm back at it. I've extended my observation period to two weeks. I invented a new metric that I call "short-term survival". A newcomer is a short-term survivor if they make at least one edit in their second week after registration.
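The "short-term survival" definition above can be sketched in a few lines. This is a minimal Python illustration, not the code used for the analysis (which appears to have been run in R). The function name, the input shape (a registration time plus a list of edit timestamps), and the half-open window of days 7 to 14 after registration are all assumptions.

```python
from datetime import datetime, timedelta


def is_short_term_survivor(registration, edit_timestamps):
    """Return True if at least one edit falls in the second week after registration.

    Assumption: "second week" means the half-open window
    [registration + 7 days, registration + 14 days).
    """
    week2_start = registration + timedelta(days=7)
    week2_end = registration + timedelta(days=14)
    return any(week2_start <= ts < week2_end for ts in edit_timestamps)


# Hypothetical newcomer: one edit in week one, one edit in week two.
registered = datetime(2014, 1, 1, 12, 0)
edits = [datetime(2014, 1, 2, 9, 30), datetime(2014, 1, 10, 18, 0)]
print(is_short_term_survivor(registered, edits))  # True
```

A newcomer who only edits during the first week, or who has no edits at all, is not counted as a survivor under this definition.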

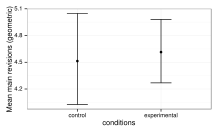

First, let's look at the graphs when they include newcomers' first two weeks of activity.

I'm not too surprised to see that the measurements didn't change much. Most newcomers will stop editing in their first week, so we aren't getting much new data by looking at their second week. What I should really do is trim off the first 24h of data and try the same set of measures again.

In this run, I also generated a couple of models that made use of the actions that newcomers performed in their first 24h in order to control for some noise. I assume that newcomers who make a bunch of article edits in their first 24h are probably much more likely to have positive productivity and survival metrics -- so I checked both.

    glm(formula = short_survival ~ main_revisions_24h + bucket, data = merged_stats)

    Coefficients:
                        Estimate Std. Error t value Pr(>|t|)
    (Intercept)         0.257476   0.038467   6.693 5.96e-11 ***
    main_revisions_24h  0.003637   0.002518   1.444    0.149
    TWA_INVITED         0.025807   0.043520   0.593    0.553
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    AIC: 632.98

It doesn't look like either the number of revisions saved in the first 24h or TWA is a significant predictor of survival.

    glm(formula = productive ~ main_revisions_24h + bucket, data = merged_stats)

    Coefficients:
                        Estimate Std. Error t value Pr(>|t|)
    (Intercept)         0.633567   0.033790  18.750  < 2e-16 ***
    main_revisions_24h  0.017023   0.002212   7.695 7.79e-14 ***
    TWA_INVITE          0.055810   0.038229   1.460    0.145
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    AIC: 504.65

While it looks like the number of article revisions saved in the first 24 hours predicts productivity (which makes sense), we still don't find a significant effect for TWA. However, I am hopeful that we are on the right track because our confidence is increasing: the p-value for TWA is 0.145 here, versus 0.553 in the survival model.
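For readers unfamiliar with the model form: R's `glm` defaults to the Gaussian family, so the calls above amount to an ordinary least-squares fit of the outcome on an intercept, `main_revisions_24h`, and a bucket indicator. The sketch below shows that fit in plain Python using the normal equations. It is only an illustration of the technique: the function name and the toy rows are hypothetical, and it produces coefficient estimates only (no standard errors or p-values).

```python
def fit_linear_model(rows):
    """Least-squares fit of y ~ 1 + x + b.

    rows: iterable of (y, x, b), where x is a numeric covariate (e.g. article
    revisions in the first 24h) and b is 0 for control, 1 for invited.
    Returns [intercept, coef_x, coef_b].
    Raises ZeroDivisionError if the predictors are perfectly collinear.
    """
    # Accumulate the normal equations (X'X) beta = X'y.
    xtx = [[0.0] * 3 for _ in range(3)]
    xty = [0.0] * 3
    for y, x, b in rows:
        feats = (1.0, float(x), float(b))
        for i in range(3):
            xty[i] += feats[i] * y
            for j in range(3):
                xtx[i][j] += feats[i] * feats[j]

    # Solve by Gauss-Jordan elimination with partial pivoting.
    aug = [xtx[i] + [xty[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(3):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    return [aug[i][3] / aug[i][i] for i in range(3)]


# Hypothetical toy data: (survived, main_revisions_24h, invited).
toy_rows = [(0, 0, 0), (0, 1, 0), (1, 5, 0), (0, 0, 1), (1, 2, 1), (1, 6, 1)]
intercept, per_revision, invited_effect = fit_linear_model(toy_rows)
print(intercept, per_revision, invited_effect)
```

The third coefficient plays the role of the TWA row in the output above: the estimated difference between invited and control newcomers once first-24h activity is held fixed.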

I suspect that, once I trim out the first 24h activities from my dependent variables, we might have a chance of seeing something. --Halfak (WMF) (talk) 20:31, 17 January 2014 (UTC)

Work log: Thursday, January 30th

I'm working with the full dataset today. I hadn't realized that I had been working with a small sample of the users who were and were not invited to TWA. Now I have 10 times as much data to work with. Also, for this run, I generated stats while ignoring edits to TWA pages (userspace).

    summary(merged_stats$bucket)
         control experimental
            3257        10607

What gives? It looks like there's a substantial productivity drop associated with being invited to play TWA. This plays out in all of the metrics that I have. Newcomers make fewer edits, spend less time editing, engage in fewer edit sessions and make fewer "productive edits".

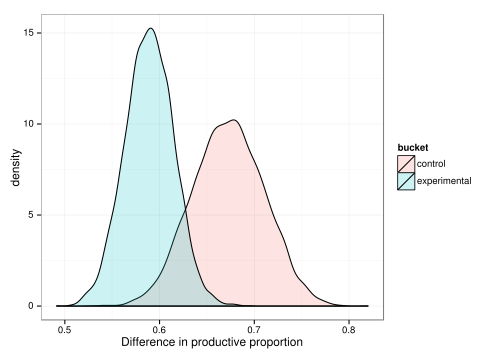

Many of these metrics (e.g. main revisions, productive revisions, etc.) should not have been affected by filtering TWA edits, so what's the deal? Why didn't we see this difference in the smaller sample I was working from before? I ran a simulation to find out how likely it was that I would end up with the difference we saw before if I sampled randomly from the full set of users. The draws from the simulated samples are plotted in the figure below.

It looks like there's some substantial overlap there, but that look is deceiving. In only 0.2% of the simulated samples did I see a difference that was >= 0.05, the observed difference from the last set. That's a 1 in 500 chance. Usually, we declare statistical significance at around 5%, or a 1 in 20 chance.
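A resampling check of this kind can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the simulation that was actually run: the function names, the sampling without replacement, the one-sided comparison (experimental minus control), and the toy population are all hypothetical.

```python
import random


def diff_in_means(sample):
    """Mean of the experimental values minus mean of the control values.

    sample: list of (bucket, value) pairs. Returns None if either bucket
    is missing from the sample.
    """
    control = [v for bucket, v in sample if bucket == "control"]
    experimental = [v for bucket, v in sample if bucket == "experimental"]
    if not control or not experimental:
        return None
    return sum(experimental) / len(experimental) - sum(control) / len(control)


def share_of_extreme_subsamples(population, sample_size, observed_diff,
                                draws=1000, seed=0):
    """Fraction of random subsamples whose difference is >= observed_diff."""
    rng = random.Random(seed)
    hits = usable = 0
    for _ in range(draws):
        diff = diff_in_means(rng.sample(population, sample_size))
        if diff is None:
            continue  # skip draws that lack one of the two buckets
        usable += 1
        if diff >= observed_diff:
            hits += 1
    if usable == 0:
        raise ValueError("no subsample contained both buckets")
    return hits / usable


# Hypothetical population: 1 = productive, 0 = not productive.
toy_rng = random.Random(42)
population = (
    [("control", int(toy_rng.random() < 0.70)) for _ in range(300)]
    + [("experimental", int(toy_rng.random() < 0.60)) for _ in range(1000)]
)
share = share_of_extreme_subsamples(population, sample_size=130, observed_diff=0.05)
print(f"{share:.1%} of simulated subsamples reach a difference of at least 0.05")
```

If the earlier sample really was a random draw from the full set, the returned share estimates how often a difference as large as the one seen before would turn up by chance.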

To state it simply, either I got the query for extracting user groups wrong, we were extremely unlucky to have gotten a strange subsample before, or the sample I was working from earlier wasn't a random subsample. I'm looking into the issue now to make sure it wasn't my mistake. --Halfak (WMF) (talk) 18:52, 30 January 2014 (UTC)